Original Author: Scarlett Wu

In the early hours of June 6th, during WWDC (Apple's Worldwide Developers Conference) and on my fifth day after testing positive for COVID-19 again, I was sipping herbal tea and chatting with friends online. As an hour went by, I wondered whether this "One More Thing" would be postponed yet again.

So when Tim Cook appeared at 2 am and grandly announced "One More Thing," my friends on the other side of the screen and I cheered:

Macintosh introduced personal computing, iPhone introduced mobile internet, and Apple Vision Pro is going to introduce Spatial Computing

As a technology enthusiast, I was delighted about the new toy I could get next year. But to me, as a Web 3 investor focused on gaming, the metaverse, and AI, this is the sign of a new era, and it gives me goosebumps.

You may be skeptical: "What does an MR hardware upgrade have to do with Web 3?" Well, let's start with Mint Ventures' thesis on the metaverse sector.

Our Thesis on the Metaverse or Web 3 World

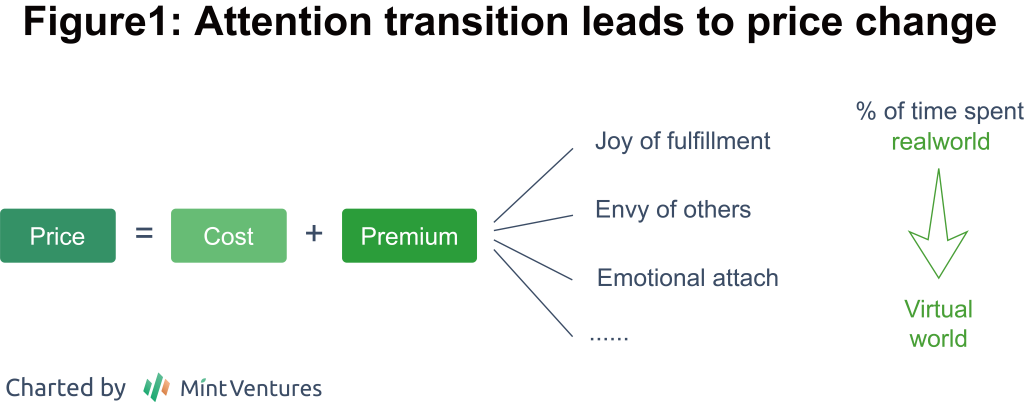

The valuation premium in the blockchain world comes from:

- The underlying trustworthy transaction layer, which reduces transaction costs. Ownership and protection of tangible goods ultimately rest on enforcement by the coercive machinery of the state. Ownership of assets in the virtual world rests on "trust in data that should not (or cannot) be tampered with under consensus," together with recognition of the asset itself once ownership is established. Anyone can still copy and paste a BAYC image, yet a BAYC NFT still costs as much as a house in a third-tier city. The difference does not lie in how the copied picture differs from the image in the NFT metadata. It lies in the fact that an asset can be securitized only when the market reaches consensus on its "non-replicability."
- The high securitization of assets, which creates a liquidity premium.
- The "permissionless premium" created by the decentralized consensus mechanism.

Virtual goods are easier to securitize than physical goods:

The history of paying for digital assets shows that people did not develop the habit of paying for virtual content overnight. It is undeniable, however, that paying for virtual assets has become part of everyday life. When the iTunes Store launched in April 2003, people discovered an alternative to downloading songs from the pirate sources that were rampant online: they could buy legitimate digital music and support their favorite artists. The App Store launched in 2008 and made one-time-purchase apps popular worldwide. The in-app purchase feature that followed has kept adding to Apple's digital revenue ever since.

Hidden within this history is a subtle shift in the gaming industry's payment model. The industry began with arcade games, where the model was "pay for the experience" (similar to movies). In the console era, it became "pay for cartridges/discs" (similar to movie discs and music albums). In the late console era, purely digital games went on sale. Steam's digital marketplace and in-app purchases then allowed some games to achieve revenue miracles. The evolution of game payment models is also a history of falling distribution costs. Games moved from arcades to consoles, and then to the personal computers and mobile phones that everyone owns and can use to log in to digital distribution platforms. As players became immersed in the games themselves, game assets shifted from being "part of the experience" to "purchasable goods." Over the past decade, though, distribution costs for digital assets have been rising again. This is mainly because of slowing growth and fierce competition on the Internet, and because a few traffic gateways monopolize attention.

So what comes next? Tradable virtual-world assets are a theme we have always believed in.

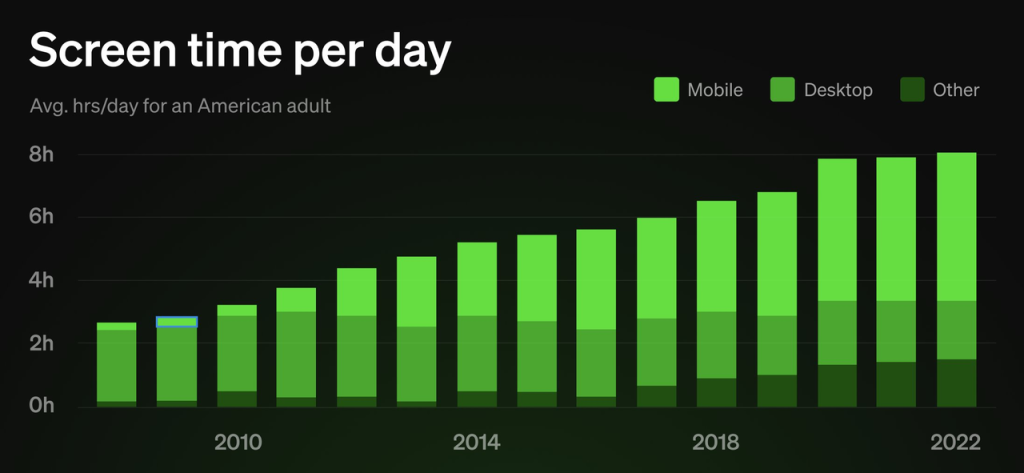

As the virtual-world experience improves, people will spend longer immersed in virtual worlds, and their attention will shift accordingly. This shift in attention will also move the valuation premium from physical assets to virtual assets. The release of Apple Vision Pro will completely change how humans interact with the virtual world. It will increase the time people spend immersed there and significantly deepen the immersive experience.

Source: @FEhrsam

Note: This is our own variant of the premium pricing strategy. Under premium pricing, brands set prices far above cost and fill the gap between price and cost with brand stories and experiences. Cost-based pricing, competition-based pricing, supply and demand, and other factors also shape how products are priced; here we focus only on premium pricing.

History and Present of the MR Industry

The modern exploration of XR (Extended Reality, including VR and AR) began more than a decade ago:

Magic Leap was founded in 2010. In 2015, its demo of a whale leaping out of a gymnasium floor caused a sensation across the tech industry. When the product officially launched in 2018, however, it received extremely poor reviews. In 2021, the company raised $500 million at a post-money valuation of $2.5 billion, which was below the roughly $3.5 billion it had raised in total. In January 2022, reports said that Saudi Arabia's sovereign wealth fund had gained majority control through a $450 million equity and debt deal. That deal cut the company's effective valuation to under $1 billion.

In 2010, Microsoft began developing HoloLens. It released its first AR headset in 2016 and the second in 2019. The device was priced at $3,000, but the actual user experience was unsatisfactory.

In 2011, the Google Glass prototype was unveiled, and the first product launched in 2013. It was once hugely popular and carried high expectations. It ultimately failed because of camera privacy concerns and a poor user experience, with total sales of only tens of thousands of units. An enterprise edition followed in 2019, and a new test version was field-tested in 2022 to a lukewarm response. In 2014, Google introduced its Cardboard VR platform and SDK. In 2016, it launched Daydream VR, which became the most widely used VR platform for compatible Android devices.

In 2011, Sony PlayStation began developing its VR platform, and PSVR debuted in 2016. Users were initially eager to buy it because they trusted the PlayStation brand, but the later response was lukewarm.

Oculus was founded in 2012 and acquired by Facebook in 2014. The Oculus Rift launched in 2016, and four models were released in total. With its focus on portability and lower prices, Oculus became one of the market-share leaders.

In 2014, Snap acquired Vergence Labs, a company founded in 2011 that focused on AR glasses and whose work became the basis for Snap Spectacles. Spectacles first launched in 2016 and have since gone through three updated versions. Like most of the products above, Spectacles initially attracted a lot of attention, with people lining up outside stores. The user base stayed small, however, and in 2022 Snap closed its hardware division and shifted its focus to smartphone-based AR.

Around 2017, Amazon began developing AR glasses based on Alexa. The first Echo Frames were released in 2019, followed by a second version in 2021.

Looking back at the history of XR, growing and cultivating this industry has proven far harder than anyone in the market expected. That holds both for well-funded tech giants with many scientists and for smart, capable XR startups with millions in funding. The consumer-grade Oculus Rift launched in 2016. Since then, cumulative shipments of all VR brands have totaled under 45 million units, including Samsung's Gear VR, ByteDance's Pico, Valve's Index, Sony's PlayStation VR, and HTC's Vive. VR devices are still used mostly for gaming. Before Vision Pro, no AR device had emerged that people were willing to use even occasionally. SteamVR data suggests that VR devices may have only a few million monthly active users.

Why haven't XR devices caught on? The failures of countless startups and the post-mortems of investment firms offer some answers:

1. Hardware Not Ready

Visually, even top-tier VR devices show pixels that are hard to ignore, because the display covers a wider field of view and sits much closer to the eyes. Full immersion requires 4K resolution per eye, or 8K for both eyes. Refresh rate is another key element of the visual experience. It is generally believed that XR devices need 120Hz, or even 240Hz, to feel close to the real world and prevent motion sickness. At a given level of computing power, refresh rate must be traded off against rendering quality: Fortnite supports 4K at 60Hz, but only 1440p at 120Hz.
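As a rough back-of-the-envelope illustration of that trade-off, the sketch below counts how many pixels per second each mode asks the GPU to produce. It assumes standard 3840×2160 "4K" and 2560×1440 "1440p" frame sizes. It also treats every pixel as equally expensive, which real engines do not, so the figures are only a lower-bound comparison.

```python
# Pixel-throughput budget: how many pixels per second the GPU must produce.
# Treats every pixel as equally expensive and ignores overdraw, anti-aliasing
# and lens-distortion oversampling, so the numbers are rough lower bounds.


def pixels_per_second(width: int, height: int, refresh_hz: int, views: int = 1) -> int:
    """Pixels rendered per second for `views` displays (1 for a TV, 2 for a headset)."""
    return width * height * refresh_hz * views


fortnite_4k_60 = pixels_per_second(3840, 2160, 60)
fortnite_1440p_120 = pixels_per_second(2560, 1440, 120)
# Immersion target from the text: 4K per eye (8K for both eyes) at 120 Hz.
headset_4k_per_eye_120 = pixels_per_second(3840, 2160, 120, views=2)

if __name__ == "__main__":
    for label, value in [
        ("Fortnite 4K @ 60Hz", fortnite_4k_60),
        ("Fortnite 1440p @ 120Hz", fortnite_1440p_120),
        ("Headset 4K/eye @ 120Hz", headset_4k_per_eye_120),
    ]:
        print(f"{label:24s} {value / 1e6:8.1f} Mpx/s")
    print(f"Headset target vs. 4K @ 60Hz: {headset_4k_per_eye_120 / fortnite_4k_60:.0f}x")
```

The two Fortnite modes come out at roughly 498 and 442 million pixels per second. Doubling the refresh rate therefore forces a drop in resolution to stay within about the same budget. A headset rendering 4K per eye at 120Hz needs four times the 4K/60Hz figure.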

In the short term, auditory perception seems less important than visual perception, so most VR devices have not focused on this detail. But imagine a room in which a person's voice always seems to come from directly above you, whether they stand to your left or your right: the sense of immersion would drop sharply. Likewise, suppose a digital avatar in an AR space is fixed in the living room. If a player walking from the bedroom into the living room hears it at the same volume the whole way, the sense of spatial reality is subtly undermined.
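The two cues described above, direction and distance, can be illustrated with a deliberately simple model. This is a minimal sketch assuming an inverse-distance loudness law and constant-power stereo panning. The reference distance and coordinates are hypothetical, and this is not how Apple or Meta implement spatial audio.

```python
import math

# Toy spatial-audio model, assumed for illustration only (not Apple's or Meta's
# implementation): loudness falls off with distance using the inverse-distance
# law, and left/right balance follows the source direction via constant-power
# panning. Real engines add HRTFs, occlusion and room reverberation; this
# simple pan also cannot tell sounds in front from sounds behind.

REF_DISTANCE_M = 1.0  # hypothetical distance at which gain is 1.0


def distance_gain(listener, source, ref=REF_DISTANCE_M):
    """Inverse-distance attenuation, clamped so the gain never exceeds 1."""
    d = math.dist(listener, source)
    return ref / max(d, ref)


def stereo_gains(listener, facing_rad, source):
    """(left, right) gains for a source in the horizontal x-y plane.

    `facing_rad` is the listener's heading; +90 degrees from it is the left side.
    """
    dx, dy = source[0] - listener[0], source[1] - listener[1]
    azimuth = math.atan2(dy, dx) - facing_rad
    pan = math.sin(azimuth)          # +1 fully left, -1 fully right
    theta = (pan + 1) * math.pi / 4  # map pan to 0..pi/2
    g = distance_gain(listener, source)
    return g * math.sin(theta), g * math.cos(theta)


if __name__ == "__main__":
    avatar = (6.0, 2.0)  # hypothetical avatar fixed in the living room
    # Player walks from the bedroom (x = 0) toward the living room, facing +x.
    for x in (0.0, 3.0, 5.5):
        left, right = stereo_gains((x, 0.0), 0.0, avatar)
        print(f"player at x={x:.1f} m: left={left:.2f} right={right:.2f}")
```

As the player approaches, the overall gain rises. Once the player draws level with the avatar, the sound also shifts toward the left ear. A fixed-volume, fixed-direction voice gets neither cue right.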

In terms of interaction, traditional VR devices come with controllers, and devices such as the HTC Vive require base stations installed around the room to track the player's movements. The Quest Pro does have eye tracking, but its latency is high and its sensitivity only average. It is therefore mainly used to enhance foveated rendering, while actual interactions still rely on controllers. Oculus headsets also carry 4 to 12 cameras to track the user's surroundings, which enables a degree of gesture interaction. For example, in VR you can pick up a virtual phone with your left hand and tap in the air with your right index finger to start a game.

In terms of weight, a device that is comfortable to wear should weigh between 400 and 700 g (though compared with ordinary glasses at about 20 g, this is still massive). Meeting the clarity, refresh rate, interaction, and rendering requirements above dictates the chips' performance, size, and number. Adding several hours of basic battery life on top of that makes weight a difficult trade-off for XR devices.

In summary, for XR to become the next smartphone and a new generation of mass-market hardware, devices need a resolution of 8K or higher and a refresh rate above 120Hz so that users don't feel dizzy. They should have more than ten cameras and a battery life of 4 hours or more, so they only need to come off during lunch and dinner breaks. They should also produce little or no heat, weigh less than 500 g, and cost as little as $500 to $1,000. Technology has advanced since the previous XR frenzy of 2015 to 2019, but meeting these standards remains challenging.

Even so, users who try existing MR (VR + AR) devices will find that the current experience, while imperfect, offers an immersion that a 2D screen cannot match. There is still considerable room for improvement, though. Take the Oculus Quest 2 as an example. Most watchable VR videos are only 1440p, below the Quest 2's maximum resolution of 4K, and their frame rates fall far short of 90Hz. As for existing VR games, most have relatively crude models, and there are few titles worth trying.

Source: VRChat

2. Killer App Has Not Yet Emerged

The absence of a Killer App has historical causes rooted in hardware limitations. Meta has tried squeezing its profit margins, yet a few-hundred-dollar MR headset with a relatively thin ecosystem still cannot compete with mature, widely adopted game consoles. The installed base of VR devices is 25 to 30 million, versus 350 million for AAA gaming platforms (PS5, Xbox, Switch, PC). As a result, most studios have given up on VR. The few games that do support it treat VR as a "side port" rather than a platform built "exclusively for VR devices." The pixelation, motion sickness, poor battery life, and excessive weight discussed above also mean the VR experience is no better than that of traditional AAA consoles. The "immersion" that VR advocates emphasize is held back by the small number of devices. Developers who only "side-port to VR devices" also rarely design dedicated experiences and interaction modes for VR, which makes the desired experience hard to achieve.

Consequently, when players choose a VR game over a non-VR game, they are not only "choosing a new game" but also "giving up the social experience with most of their friends." VR game design therefore tends to prioritize gameplay and immersion over social interaction. You might point to VRChat, but a closer look shows that 90% of its users are not VR users at all. They are players on ordinary screens who want to socialize with new friends through a variety of avatars. It is no surprise, then, that the most popular VR titles are rhythm games such as Beat Saber.

Therefore, we believe that the emergence of a Killer App requires the following elements:

A significant improvement in hardware performance and in every detail. As discussed in "Hardware Not Ready," this is not just a matter of "a better screen, a better chip, better speakers, and so on." It is the result of coordinating chips, accessories, interaction design, and the operating system as a whole. That is precisely Apple's strength: since the iPod and iPhone era, Apple has accumulated extensive experience in making multiple devices and operating systems work together.

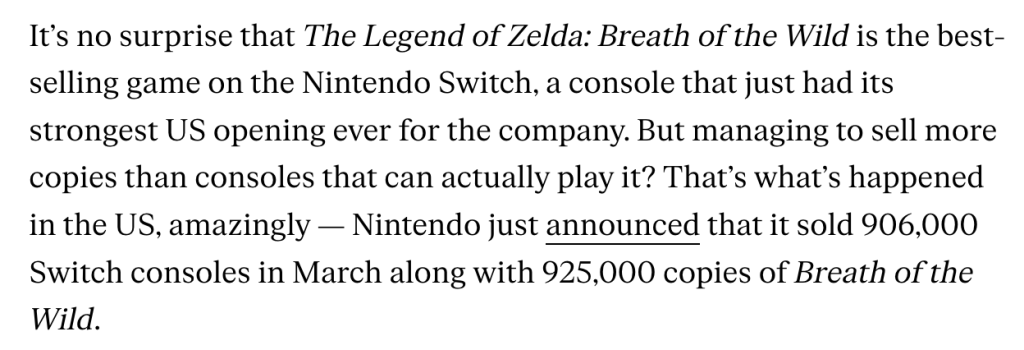

The eve of an explosion in the number of user devices. As the analysis of developer and user attitudes above shows, a Killer App can hardly emerge while XR devices have only a few million MAU (monthly active users). At the peak of The Legend of Zelda: Breath of the Wild, US sales of the game's cartridge even exceeded the number of Switch consoles sold. This is an excellent example of how new hardware reaches mass adoption. People who buy a device just to try XR gradually grow disappointed with the limited content and joke that their headsets are collecting dust. By contrast, most players drawn in by Zelda stay because they discover other games in the Switch ecosystem.

Source: The Verge

Unified interaction habits and stable compatibility across device updates. The former is easy to understand. Using controllers or going controller-free creates two different human-machine interaction habits and experiences, and this is what distinguishes Apple Vision Pro from other VR devices on the market. The latter can be seen in Oculus's hardware iterations, where big jumps in hardware performance within the same generation actually hold back the user experience. The Meta Quest Pro, released in 2022, improved substantially on the Oculus Quest 2 (also known as Meta Quest 2). Resolution rose from the Quest 2's 4K to 5.25K, color contrast improved by 75%, and the refresh rate went from 90Hz to 120Hz. The Quest 2 uses 4 cameras to sense the external environment, and the Quest Pro adds 8 more external cameras. These turn black-and-white passthrough images into color, significantly improve hand tracking, and add face and eye tracking. The Quest Pro also uses "foveated rendering," which concentrates computing power on the area where the eyes are fixated and lowers fidelity elsewhere, saving computing power and energy. Yet for all these extra capabilities, the Quest Pro's user base may be less than 5% of the Quest 2's. Developers therefore build games for both devices at once, which severely limits how much of the Quest Pro's advantage they can use and, in turn, makes the Quest Pro less attractive to users. History rhymes: the same story has played out repeatedly in the game console industry, which is why console makers refresh their software and hardware only every 6 to 8 years.
Users who bought the original Switch need not worry that later hardware such as the Switch OLED will be incompatible with new games, but Wii owners cannot play games in the Switch ecosystem. Console games do not target a platform with a huge user base like mobile phones (350 million versus billions). Nor do they target one users depend on heavily (consoles are played at home, while phones are carried all day). Developers therefore need a stable hardware experience across several development cycles to avoid splitting their users. Otherwise, they can only do what VR developers do now: maintain backward compatibility to keep a large enough user base.
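The foveated rendering mentioned for the Quest Pro, which concentrates computing power where the eyes are fixated, can be sketched as a per-tile shading-rate map. This is a minimal illustration under stated assumptions. The eccentricity thresholds, the three shading rates, the tile size, and the panel dimensions are all hypothetical, and this is not Meta's or Apple's actual pipeline.

```python
import math

# Toy foveated-rendering budget. The eccentricity thresholds, the three
# shading rates and the tile size are hypothetical; real headsets use
# eye-tracked gaze, lens-corrected angle maps and GPU variable-rate shading.


def shading_fraction(eccentricity_deg: float) -> float:
    """Fraction of a tile's pixels that get shaded at this angle from the gaze point."""
    if eccentricity_deg < 10:   # assumed foveal region: full rate
        return 1.0
    if eccentricity_deg < 25:   # assumed middle ring: one shade per 2x2 block
        return 1 / 4
    return 1 / 16               # periphery: one shade per 4x4 block


def foveated_cost(width, height, fov_deg, gaze_px, tile=32):
    """Return (shaded pixels per frame, fraction saved vs. full-rate) for one eye."""
    deg_per_px = fov_deg / width  # crude linear pixel-to-angle mapping
    shaded = 0.0
    for ty in range(0, height, tile):
        for tx in range(0, width, tile):
            w, h = min(tile, width - tx), min(tile, height - ty)
            cx, cy = tx + w / 2, ty + h / 2  # tile centre
            ecc = math.hypot(cx - gaze_px[0], cy - gaze_px[1]) * deg_per_px
            shaded += w * h * shading_fraction(ecc)
    return shaded, 1 - shaded / (width * height)


if __name__ == "__main__":
    # One eye of a hypothetical 3840x2160 panel spanning 100 degrees, gaze centred.
    shaded, saved = foveated_cost(3840, 2160, 100, (1920, 1080))
    print(f"{shaded / 1e6:.2f} Mpx shaded per frame, about {saved:.0%} saved")
```

The savings depend entirely on the assumed thresholds. The point is only that most of a wide field of view lies far from the gaze point, so coarse shading there frees up a large share of the budget. This is also why precise, low-latency eye tracking matters.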

So, can Vision Pro solve the above problems? What kind of changes will it bring to the industry?

The Turning Point Brought by Vision Pro

At the June 6th conference, Apple unveiled Vision Pro. Using the framework of "hardware and software challenges facing MR" analyzed above, we can compare it as follows:

Hardware:

Visually, Vision Pro uses two micro-OLED displays with about 23 million pixels in total, more than a 4K TV for each eye, which puts it among the top MR devices. The refresh rate reaches up to 96Hz, and HDR video playback is supported. According to tech bloggers who have tried it, the image is extremely sharp and there is almost no dizziness.

In terms of audio, Apple has offered spatial audio on AirPods since 2020, letting users hear sound from different directions for a three-dimensional experience. Vision Pro is expected to go a step further with "audio ray tracing." It uses LiDAR scanning to analyze the room's acoustic properties (physical materials and so on) and produces spatial audio that matches the room, complete with direction and depth.

In terms of interaction, the device delivers smooth, controller-free interaction through gestures and eye tracking. Tech media who tested it reported almost no perceptible delay. This is due not only to sensor accuracy and computing speed but also to eye-path prediction, which is discussed further below.

In terms of battery life, Vision Pro offers 2 hours of use, on par with the Meta Quest Pro. This is not particularly impressive and is currently one of the main criticisms of Vision Pro. However, Vision Pro uses an external power supply and also contains a 5000 mAh battery in the headset, so there may be room to swap power sources and extend battery life.

In terms of weight, tech media report about 1 pound (454 g). That is comparable to the Pico and Oculus Quest 2 and probably lighter than the Meta Quest Pro, which counts as a good experience among MR devices (though the figure excludes the battery pack worn at the waist). Compared with pure AR glasses weighing around 80 g (such as Nreal and Rokid), however, it is still heavy and stuffy. Of course, most pure AR glasses must be connected to another device and can only serve as an extended screen. An MR headset with built-in chips and genuine immersion may be a completely different experience.

Vision Pro uses Apple's most advanced M2 chip to run the system and apps. It also adds an R1 chip developed specifically for MR-specific display and interaction: driving the screens, monitoring the surrounding environment, and tracking eyes and hands.

On the software side, Apple can bring over, to some extent, its millions of existing developers. It has also been building an ecosystem step by step since releasing ARKit:

In 2017, Apple released ARKit, an augmented reality development framework for iOS devices that lets developers build AR apps on top of the hardware and software capabilities of iOS devices. ARKit uses the device's camera to map the surrounding area. It combines this with CoreMotion data to detect surfaces such as desks and floors and to track the device's own position in physical space. This lets digital assets interact with the real world seen through the camera. In Pokémon GO, for example, Pokémon appear half-buried in the ground or perched on trees instead of simply sliding across the screen as the camera moves. Users do not need to calibrate anything: it is a seamless AR experience.

https://pokemongohub.net/

Also in 2017, ARKit gained the ability to automatically detect the position, topology, and facial expressions of a user's face for modeling and expression capture.

In 2018, ARKit 2 was released. It brought an improved CoreMotion experience, multiplayer AR games, 2D image tracking, and detection of known 3D objects such as sculptures, toys, and furniture.

In 2019, ARKit 3 was released with further augmented reality capabilities. With People Occlusion, AR content can appear in front of or behind people, and up to three faces can be tracked. Collaborative sessions enable new shared AR gaming experiences. Motion capture tracks joints and skeletons to understand body position and movement, which enables AR experiences built around people, not just objects.

In 2020, ARKit 4 was released. It used the LiDAR Scanner built into the 2020 iPad Pro and iPhone 12 Pro for better tracking and object detection. ARKit 4 also added Location Anchors, which let AR experiences be placed at specific geographic coordinates using Apple Maps data.

In 2021, ARKit 5 was released alongside RealityKit 2, which let developers build custom shaders, generate meshes programmatically, capture objects, and control characters. Developers can also use built-in APIs to capture objects with the cameras and LiDAR of iOS 15 devices. They can scan an object and quickly convert it into a USDZ file, which can be imported into Xcode and used as a 3D model in ARKit scenes or apps. This greatly speeds up 3D model creation.

In 2022, ARKit 6 was released, featuring improved motion capture. It tracks people in video frames and gives developers a "skeleton" with estimated head and limb positions. Apps can then overlay AR content on people or hide it behind them for more seamless integration with the scene.

Looking back at how Apple has built out ARKit over the past six years, its AR capabilities clearly did not come overnight. They were built by gradually integrating AR experiences into widely used devices. By the time Vision Pro was released, Apple already had a base of content and developers. Because ARKit development is compatible across devices, what developers build targets not only Vision Pro users but also, to some extent, iPhone and iPad users. Developers need not be capped at a ceiling of three million monthly active users; they can potentially test their products with hundreds of millions of iPhone and iPad users.

In addition, Vision Pro's 3D video capture partly solves the production side of MR's limited-content problem. Existing VR videos are mostly 1440p and look pixelated on the wraparound screens of MR headsets. Vision Pro, by contrast, captures high-resolution spatial video with decent spatial audio, which may greatly improve MR content consumption.

Impressive as this configuration already is, Apple's ambitions for MR go further. On the day Apple's MR headset was unveiled, @sterlingcrispin, a developer who says he worked on neuroscience research at Apple, wrote:

Generally as a whole, a lot of the work I did involved detecting the mental state of users based on data from their body and brain when they were in immersive experiences.

So, a user is in a mixed reality or virtual reality experience, and AI models are trying to predict if you are feeling curious, mind wandering, scared, paying attention, remembering a past experience, or some other cognitive state. And these may be inferred through measurements like eye tracking, electrical activity in the brain, heart beats and rhythms, muscle activity, blood density in the brain, blood pressure, skin conductance, etc.

There were a lot of tricks involved to make specific predictions possible, which the handful of patents I’m named on go into detail about. One of the coolest results involved predicting a user was going to click on something before they actually did. That was a ton of work and something I’m proud of. Your pupil reacts before you click in part because you expect something will happen after you click. So you can create biofeedback with a user’s brain by monitoring their eye behavior, and redesigning the UI in real time to create more of this anticipatory pupil response. It’s a crude brain computer interface via the eyes, but very cool. And I’d take that over invasive brain surgery any day.

Other tricks to infer cognitive state involved quickly flashing visuals or sounds to a user in ways they may not perceive, and then measuring their reaction to it.

Another patent goes into details about using machine learning and signals from the body and brain to predict how focused, or relaxed you are, or how well you are learning. And then updating virtual environments to enhance those states. So, imagine an adaptive immersive environment that helps you learn, or work, or relax by changing what you’re seeing and hearing in the background.

These neuroscience technologies may mark a new way of keeping machines in sync with human intent.
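The click-prediction idea in the quoted thread rests on the observation that the pupil reacts before a click. To make that concrete, here is a deliberately crude sketch that flags a sudden pupil dilation against a rolling baseline. The window length, threshold, sample rate, and synthetic data are all hypothetical. The thread describes trained machine-learning models over many body and brain signals, not a single z-score rule like this one.

```python
from statistics import mean, stdev
from typing import Sequence

# Toy "anticipatory pupil response" detector, loosely inspired by the quoted
# thread: the pupil dilates slightly just before an intended click. The window
# length and threshold are hypothetical; the thread describes trained ML models
# over many body and brain signals, not a single z-score rule like this one.

BASELINE_SAMPLES = 45  # about 0.5 s at an assumed 90 Hz eye-tracker rate
Z_THRESHOLD = 2.5      # standard deviations above baseline that count as dilation


def predicts_click(pupil_mm: Sequence[float]) -> bool:
    """True if the newest pupil-diameter sample jumps well above the recent baseline."""
    if len(pupil_mm) <= BASELINE_SAMPLES:
        return False  # not enough history yet
    baseline = pupil_mm[-BASELINE_SAMPLES - 1:-1]
    mu, sigma = mean(baseline), stdev(baseline)
    if sigma == 0:
        return pupil_mm[-1] > mu
    return (pupil_mm[-1] - mu) / sigma > Z_THRESHOLD


if __name__ == "__main__":
    resting = [3.00 + 0.01 * (i % 3) for i in range(60)]  # synthetic, steady pupil
    print(predicts_click(resting))           # steady signal
    print(predicts_click(resting + [3.20]))  # sudden dilation on the last sample
```

In an adaptive interface of the kind the thread describes, a positive prediction could be used to pre-load or highlight the likely target. Pupil size also reacts to lighting and emotion, which is one reason a real system would need far richer models.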

Of course, Vision Pro has its flaws, such as its steep $3,499 price. That is more than double the price of the Meta Quest Pro and more than seven times that of the Oculus Quest 2. Siqi Chen, CEO of Runway, commented:

it might be useful to remember that in inflation adjusted dollars, the apple vision pro is priced at less than half the original 1984 macintosh at launch (over $7K in today’s dollars)

By that comparison, Vision Pro's pricing doesn't seem so outrageous. However, the first-generation Macintosh sold only 372,000 units, and it is hard to imagine Apple, which has invested heavily in MR, accepting a similarly awkward outcome. Over the next few years, reality may not change much: AR may not necessarily require glasses, and Vision Pro may not be widely adopted in the short term. It is likely to serve mainly as a tool for developers to experience and test apps, a tool for creators, and an expensive toy for digital enthusiasts.
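The inflation-adjusted comparison in the quoted tweet can be checked with a short calculation. This sketch relies on inputs that do not appear in the article and are stated as assumptions. The original Macintosh launched at $2,495 in January 1984, and the US CPI-U annual averages were about 103.9 for 1984 and about 304.7 for 2023. Using a different index or month would shift the result slightly.

```python
# Rough check of the inflation-adjusted comparison in the quoted tweet.
# Assumptions (not from the article): the original Macintosh launched at
# $2,495 in January 1984, and the US CPI-U annual averages were about 103.9
# for 1984 and about 304.7 for 2023.

MAC_1984_PRICE = 2_495
CPI_1984 = 103.9
CPI_2023 = 304.7
VISION_PRO_PRICE = 3_499


def adjust_for_inflation(price, cpi_then, cpi_now):
    """Scale a historical price by the ratio of consumer price indices."""
    return price * cpi_now / cpi_then


mac_in_2023_dollars = adjust_for_inflation(MAC_1984_PRICE, CPI_1984, CPI_2023)
ratio = VISION_PRO_PRICE / mac_in_2023_dollars

if __name__ == "__main__":
    print(f"1984 Macintosh in 2023 dollars: ${mac_in_2023_dollars:,.0f}")
    print(f"Vision Pro / Macintosh price ratio: {ratio:.2f}")
```

Under these assumptions the Macintosh comes to roughly $7,300 in 2023 dollars, and Vision Pro's price is a little under half of that, consistent with the tweet.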

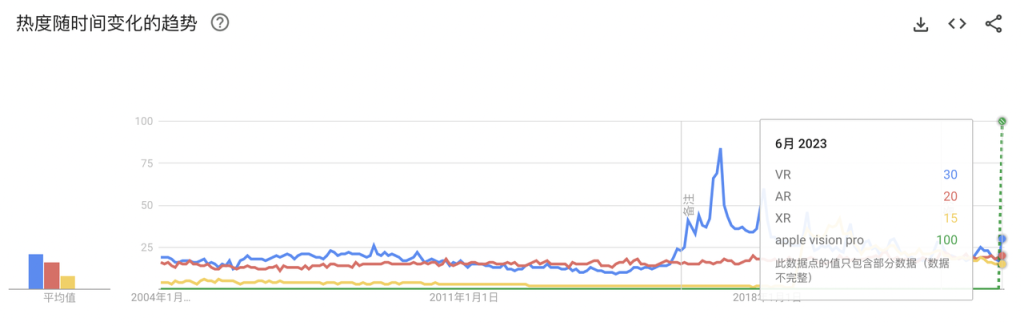

Source: Google Trends

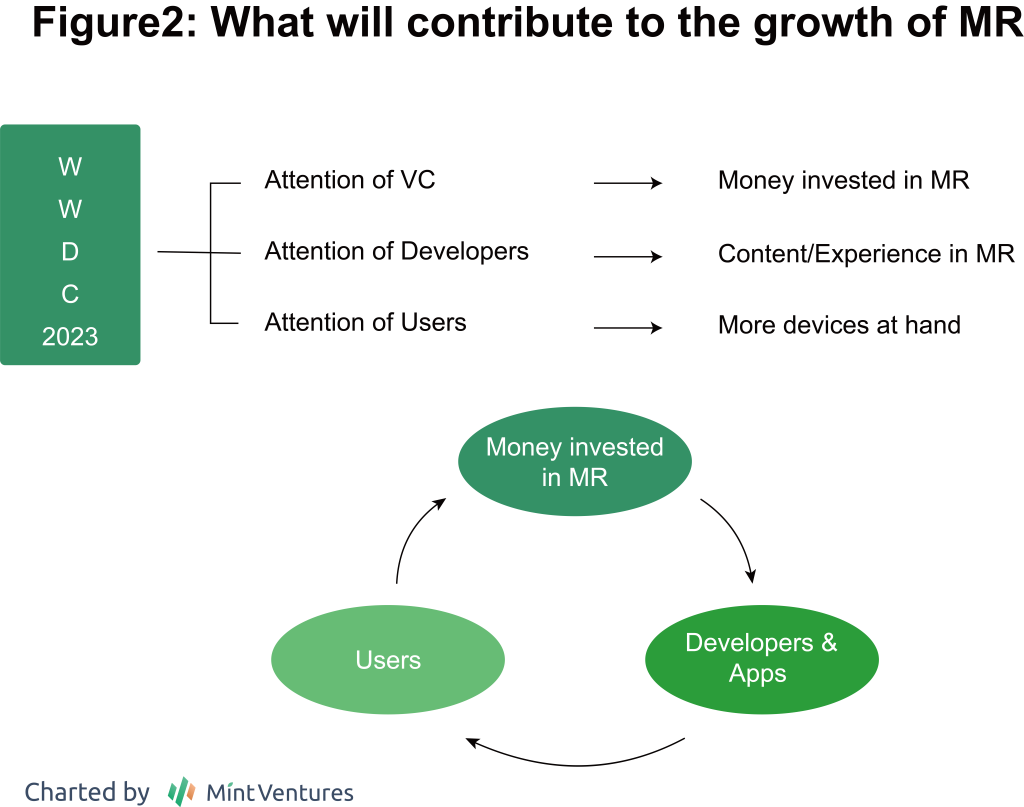

Even so, Apple's MR device is already making waves in the market. It is redirecting ordinary users' interest in digital products toward MR and showing the public that MR is now a more mature technology, no longer just keynote presentations and demo videos. It shows users that there is an immersive display option beyond tablets, TVs, and smartphones. It shows developers that MR may truly become the next generation of hardware. It also shows venture capitalists that this could be an investment field with enormous potential.

Web 3 Ecosystem

1. 3D Rendering + AI Narrative Token: RNDR

RNDR Introduction

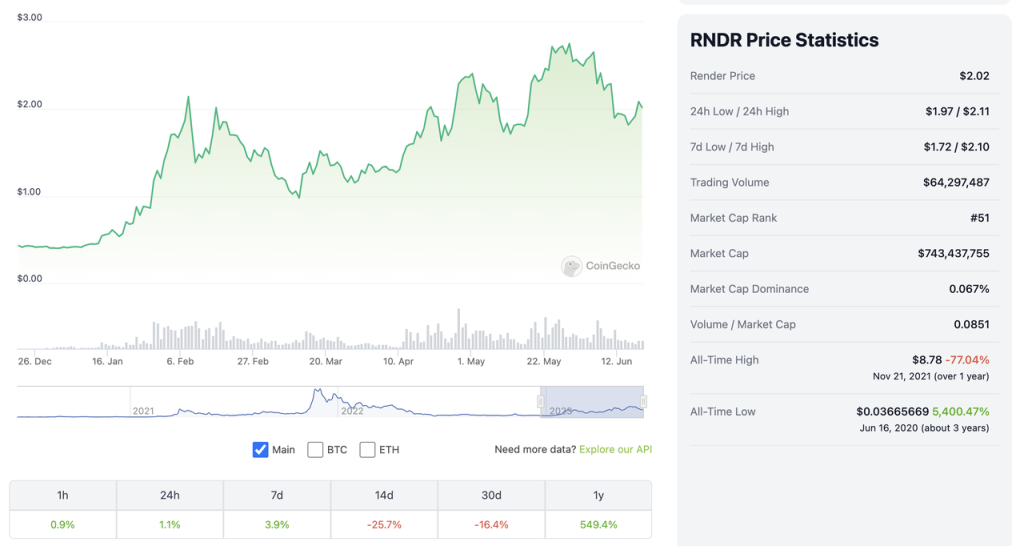

Over the past six months, RNDR has repeatedly led the market as a token that combines the metaverse, AI, and MR narratives.

The project behind RNDR is Render Network, a protocol that performs distributed rendering over a decentralized network. The company behind Render Network, OTOY Inc., was founded in 2009 and optimized its rendering software, OctaneRender, for GPU rendering. For ordinary creators, local rendering consumes substantial machine resources, which creates demand for cloud rendering. Renting servers from AWS, Azure, and other vendors for rendering can be expensive, however. Render Network fills this gap by connecting creators with ordinary users who have idle GPUs, which removes hardware limits on rendering. Creators can render cheaply, quickly, and efficiently, while node operators earn extra income from their idle GPUs.

For Render Network, there are two types of participants:

Creators: They publish tasks and use fiat currency to purchase credits or pay with RNDR. (Octane X, which is used for task publishing, is available on Mac and iPad, and 0.5-5% of the fees will be used to cover network costs.)

Node providers (idle GPU owners): Idle GPU owners can apply to become node providers and receive priority matching based on the reputation built from previously completed jobs. After a node finishes rendering, the creator inspects the rendered files and starts the download. Once the download completes, the fee locked in the smart contract is paid to the node provider's wallet.
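To make this flow concrete, here is a minimal Python sketch of the job lifecycle described above: the fee is locked when a job is posted, the job goes to the highest-reputation candidate, and escrow is released only after the creator downloads the result. The class names, the numeric `reputation` score, and the "pick the highest reputation" rule are illustrative assumptions, not Render Network's actual contracts or matching algorithm.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import List, Optional


class JobState(Enum):
    OPEN = auto()      # posted by a creator; fee locked in escrow
    ASSIGNED = auto()  # matched to a node provider
    RENDERED = auto()  # node delivered output for the creator to inspect
    PAID = auto()      # creator downloaded the output; escrow released


@dataclass
class Node:
    node_id: str
    reputation: float  # hypothetical score built from previously completed jobs
    balance: float = 0.0


@dataclass
class RenderJob:
    creator: str
    fee: float                     # amount locked in escrow when the job is posted
    state: JobState = JobState.OPEN
    node: Optional[Node] = None

    def assign(self, candidates: List[Node]) -> Node:
        """Match the job, giving priority to the node with the best track record."""
        if self.state is not JobState.OPEN or not candidates:
            raise ValueError("job cannot be assigned")
        self.node = max(candidates, key=lambda n: n.reputation)
        self.state = JobState.ASSIGNED
        return self.node

    def deliver(self) -> None:
        """The node reports that rendering is finished."""
        if self.state is not JobState.ASSIGNED:
            raise ValueError("job is not assigned")
        self.state = JobState.RENDERED

    def download(self) -> None:
        """The creator inspects and downloads; only now is the escrowed fee released."""
        if self.state is not JobState.RENDERED or self.node is None:
            raise ValueError("nothing to download yet")
        self.node.balance += self.fee
        self.state = JobState.PAID


# Usage: two candidate nodes, one job.
nodes = [Node("node-a", reputation=0.7), Node("node-b", reputation=0.9)]
job = RenderJob(creator="alice", fee=10.0)
job.assign(nodes)   # picks node-b, the higher-reputation node
job.deliver()
job.download()      # node-b's balance is credited with the 10.0 fee
```

Modeling the job as an explicit state machine makes the key property easy to see: a node cannot be paid before the creator has taken delivery.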

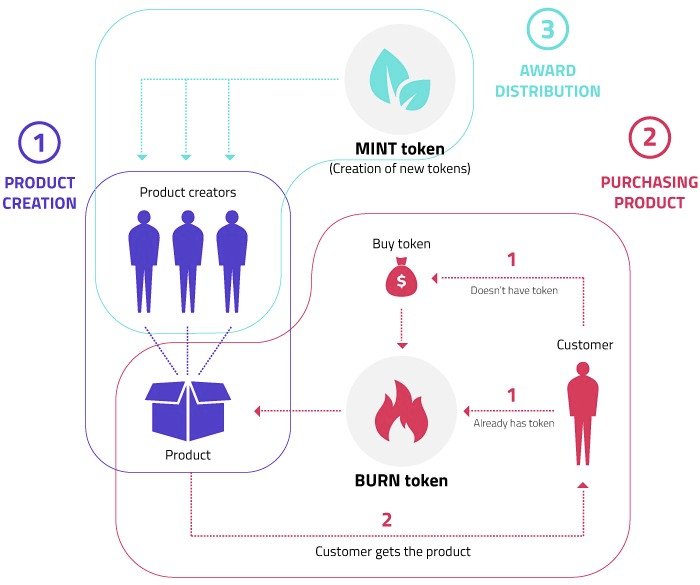

RNDR's tokenomics were also revised in February this year, which is one reason for its significant price increase (however, as of this article's publication, Render Network has not yet implemented the new tokenomics on the network and has not announced a launch date):

Previously, $RNDR and credits had the same purchasing power on the network, with 1 credit equal to 1 euro. When $RNDR traded below 1 euro, paying with $RNDR was cheaper than buying credits with fiat. Once $RNDR rose above 1 euro, however, everyone preferred to pay in fiat, and $RNDR lost its use case. (Although protocol revenue might fund $RNDR buybacks, other market participants had no incentive to buy $RNDR.)
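The arbitrage in the old model reduces to comparing $RNDR's market price with 1 euro. The sketch below assumes, as the paragraph states, that 1 $RNDR bought as much rendering as 1 credit; the function names and example prices are illustrative, not part of any Render Network API.

```python
CREDIT_PRICE_EUR = 1.0  # old model: 1 credit = 1 euro, and 1 RNDR buys as much rendering as 1 credit


def cheaper_route(rndr_price_eur: float) -> str:
    """Which way of paying for rendering was cheaper under the old model."""
    if rndr_price_eur < CREDIT_PRICE_EUR:
        return "RNDR"
    if rndr_price_eur > CREDIT_PRICE_EUR:
        return "fiat"
    return "either"


def cost_eur(credits_needed: float, rndr_price_eur: float, route: str) -> float:
    """Euro cost of a job worth `credits_needed` credits, paid via `route`."""
    if route == "fiat":
        return credits_needed * CREDIT_PRICE_EUR
    if route == "RNDR":
        return credits_needed * rndr_price_eur
    raise ValueError(f"unknown route: {route}")


# At 0.40 EUR per RNDR, paying in RNDR is cheaper; at 2.50 EUR, fiat is.
print(cheaper_route(0.40), cheaper_route(2.50))  # RNDR fiat
print(cost_eur(100, 2.50, "RNDR"), cost_eur(100, 2.50, "fiat"))  # 250.0 100.0
```

Once the price is above 1 euro, a rational creator never chooses the RNDR route, which is exactly why the token lost its utility.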

The revised economic model adopts the Burn-and-Mint Equilibrium (BME) model used by Helium. When creators purchase rendering services, whether they pay in fiat or in $RNDR, $RNDR worth 95% of the fiat value is burned, and the remaining 5% goes to the foundation as engine-usage income. Nodes no longer receive income directly from creators' purchases of rendering services. Instead, they receive newly minted token rewards based not only on task completion but also on other factors such as customer satisfaction.
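A minimal sketch of how a single purchase is split under the revised model, using the 95% / 5% figures above. How the "current" $RNDR price is determined (for example, which price oracle is used) is not specified here, so the function simply takes it as an input; the names are hypothetical.

```python
BURN_SHARE = 0.95        # share of the job's fiat value burned as RNDR
FOUNDATION_SHARE = 0.05  # share kept by the foundation as engine-usage income


def settle_job(job_value_eur: float, rndr_price_eur: float) -> dict:
    """Split one rendering purchase under the revised (BME) model.

    The payment route (fiat or RNDR) does not matter: either way, RNDR worth
    95% of the job's fiat value is burned. Nodes are not paid from this purchase;
    they are rewarded separately from per-epoch emissions.
    """
    if rndr_price_eur <= 0:
        raise ValueError("RNDR price must be positive")
    return {
        "rndr_burned": job_value_eur * BURN_SHARE / rndr_price_eur,
        "foundation_eur": job_value_eur * FOUNDATION_SHARE,
    }


# A 100 EUR job with RNDR at 2 EUR burns about 47.5 RNDR.
print(settle_job(100.0, 2.0))
```

Note that the number of tokens burned falls as the $RNDR price rises: demand is denominated in fiat, not in tokens.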

Notably, in each new epoch (a fixed period whose length has not been specified), new $RNDR is minted; the amount is strictly capped, decreases over time, and is independent of how many tokens are burned (see the emission schedule in the official whitepaper). This changes how benefits are distributed among the following stakeholders:

Creators / network service users: Each epoch, creators receive a partial refund of the $RNDR they consumed, with the refund ratio gradually decreasing over time.

Node operators: Node operators are rewarded based on the amount of work completed and their real-time availability, among other factors.

Liquidity providers: Liquidity providers on DEXs also receive rewards, to ensure there is sufficient $RNDR available for burning.

Source: https://medium.com/render-token/behind-the-network-btn-july-29th-2022-7477064c5cd7
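The key structural point is that emissions follow a fixed, shrinking schedule that never looks at burns. The Python sketch below illustrates this under loudly stated assumptions: the geometric decay curve, the 1,000-token starting emission, and the stakeholder weights are all hypothetical placeholders, not Render Network's actual parameters. The sketch also keeps the weights fixed, whereas the text says the creators' refund share shrinks over time.

```python
from typing import Dict


def epoch_emission(epoch: int, initial: float, decay: float) -> float:
    """RNDR minted in a given epoch: capped, shrinking, and independent of burns.

    A geometric decay is assumed purely for illustration; the text only says
    emissions are strictly limited and decrease over time.
    """
    if not 0.0 < decay < 1.0:
        raise ValueError("decay must be strictly between 0 and 1")
    return initial * decay ** epoch


def split_emission(amount: float, weights: Dict[str, float]) -> Dict[str, float]:
    """Divide one epoch's emission among stakeholder groups by relative weight."""
    total = sum(weights.values())
    return {group: amount * w / total for group, w in weights.items()}


# Hypothetical placeholder weights; the real allocation is set by the protocol.
WEIGHTS = {"creators": 0.2, "node_operators": 0.7, "liquidity_providers": 0.1}

for epoch in range(3):
    minted = epoch_emission(epoch, initial=1_000.0, decay=0.9)
    print(epoch, round(minted, 2), split_emission(minted, WEIGHTS))
```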

Compared with the previous model of (periodic) revenue-funded buybacks, under the new model node operators ("miners") earn more when demand for rendering is weak, but earn less than under the original model when the total value of rendering jobs exceeds the total value of newly emitted $RNDR rewards (burned tokens > newly minted tokens). In that case, $RNDR also becomes deflationary.
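The comparison above can be checked with a few lines of arithmetic. This is a simplified sketch, not the protocol's accounting: it assumes that under the old model nodes keep the full value of the jobs they render, and that under the new model the entire epoch emission goes to nodes. The example prices and volumes are made up for illustration.

```python
BURN_SHARE = 0.95  # share of job value burned as RNDR (the rest goes to the foundation)


def compare_epoch(job_value_eur: float, emission_rndr: float, rndr_price_eur: float) -> dict:
    """Contrast node income under the old and new models for one epoch.

    Simplifying assumptions for illustration: under the old model, nodes keep
    the full value of the jobs they render; under the new model, the entire
    epoch emission goes to nodes.
    """
    burned_rndr = job_value_eur * BURN_SHARE / rndr_price_eur
    return {
        "node_income_old_eur": job_value_eur,
        "node_income_new_eur": emission_rndr * rndr_price_eur,
        "net_supply_rndr": emission_rndr - burned_rndr,  # negative = deflationary
    }


# Same emission (1,000 RNDR at 2 EUR), weak vs. strong rendering demand.
weak = compare_epoch(job_value_eur=1_000.0, emission_rndr=1_000.0, rndr_price_eur=2.0)
strong = compare_epoch(job_value_eur=5_000.0, emission_rndr=1_000.0, rndr_price_eur=2.0)
print(weak)    # nodes earn more than under the old model; supply grows
print(strong)  # nodes earn less than under the old model; supply shrinks
```

Because only 95% of each purchase is burned, in this sketch the point at which supply starts to shrink (job value above the emission value divided by 0.95, about 2,105 EUR here) sits slightly above the point at which nodes start earning less than before (2,000 EUR).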

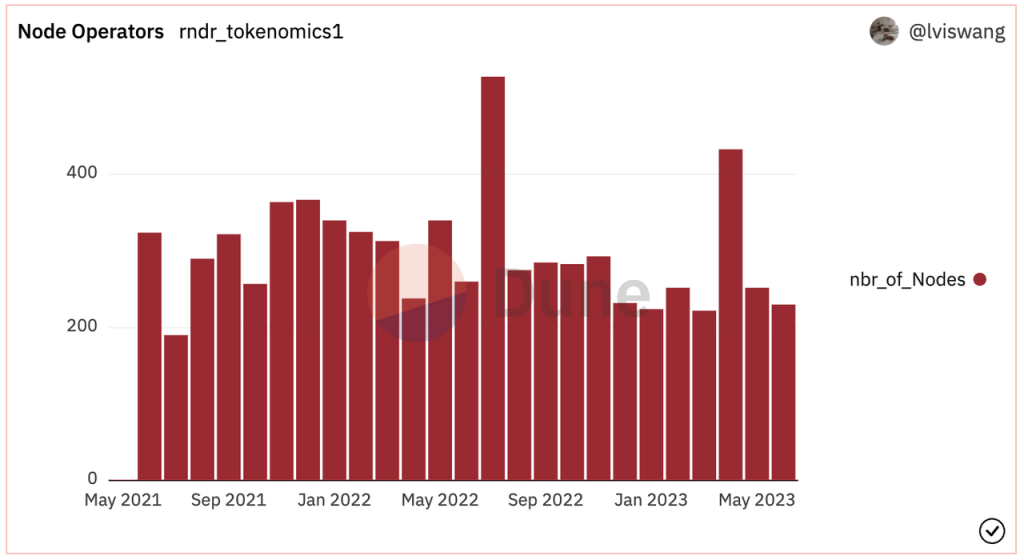

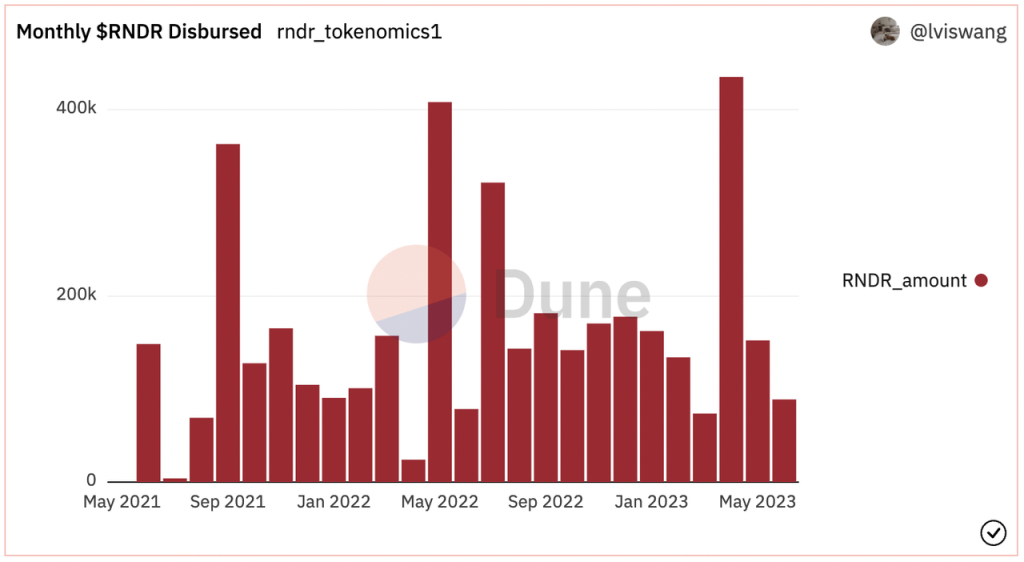

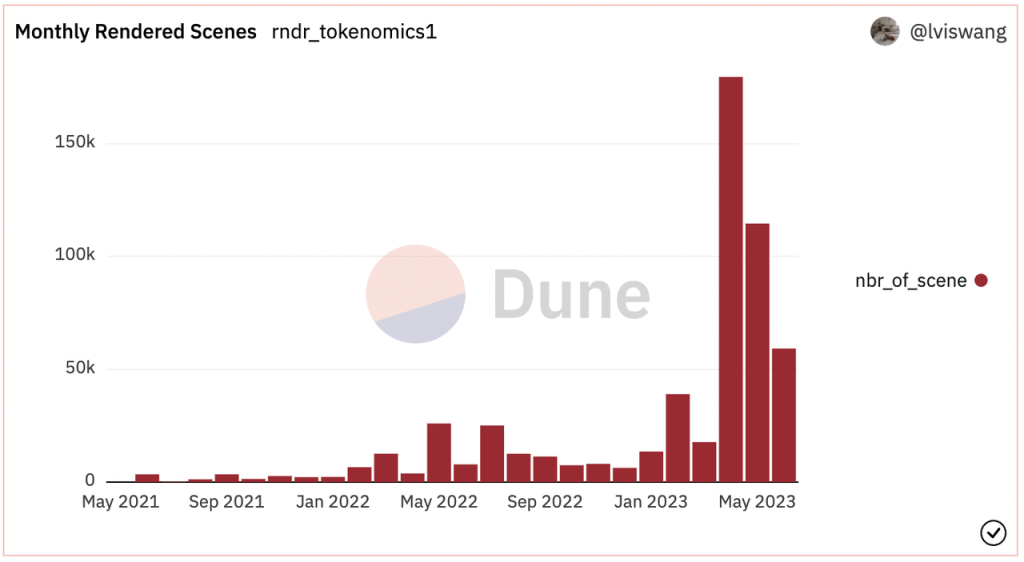

Although the price of $RNDR has been rising over the past six months, Render Network's business has not grown significantly. The number of nodes has stayed relatively stable over the past two years, and the amount of $RNDR allocated to nodes has not increased significantly. The number of rendering jobs has increased, however, which suggests that creators are sending the network more but smaller jobs rather than larger ones.

https://dune.com/lviswang/render-network-dollarrndr-mterics

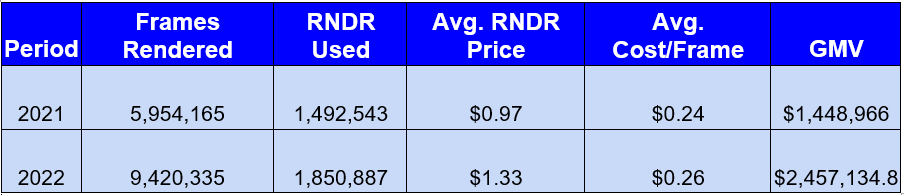

Although it has not kept pace with the token's fivefold price increase over the year, Render Network's GMV (Gross Merchandise Value) has indeed grown significantly: in 2022, GMV rose 70% year over year. Based on the total $RNDR allocated to nodes on the Dune dashboard, GMV in the first half of 2023 was about $1.19 million, essentially flat compared with the same period in 2022. GMV at this level clearly cannot justify a market cap of $700 million.
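A quick back-of-the-envelope check of that claim, using only the two figures quoted above. Annualizing by doubling the first-half figure is a naive assumption made here for illustration. Also note that GMV is gross spend on the network, not protocol revenue (under the new model only 5% goes to the foundation), so a multiple on revenue would be far higher still.

```python
H1_2023_GMV_USD = 1_190_000       # Dune-based GMV estimate for H1 2023 cited above
MARKET_CAP_USD = 700_000_000      # market cap cited above

# Naive assumption: H2 2023 GMV equals H1, so annual GMV is twice the H1 figure.
annualized_gmv_usd = H1_2023_GMV_USD * 2
multiple = MARKET_CAP_USD / annualized_gmv_usd

print(f"annualized GMV ~ ${annualized_gmv_usd / 1e6:.2f}M")
print(f"market cap / GMV ~ {multiple:.0f}x")
```

Even on gross volume, the market is pricing RNDR at roughly 294 times a year of network activity.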

Source: https://globalcoinresearch.com/2023/04/26/render-network-scaling-rendering-for-the-future/

Impact of Vision Pro on RNDR

In a Medium article published on June 10th, Render Network claimed that Octane's rendering support for M1 and M2 chips is unique: because Vision Pro also uses the M2 chip, rendering on Vision Pro would be no different from ordinary desktop rendering.

But the question is: why would anyone publish rendering jobs from a device that has a 2-hour battery life and is used mainly for gaming rather than as a productivity tool? Only if Vision Pro's price drops, its battery life improves significantly, and its weight decreases will Octane on Vision Pro have a real chance of effective use and mass adoption.

What is clear is that the migration of digital assets from flat screens to MR devices does bring growing infrastructure demand. When Unity announced a partnership with Apple to make its game engine work better with Vision Pro, its stock rose 17% that day, a sign of market optimism. With Disney collaborating with Apple, traditional film and television content may see similar growth in demand for 3D conversion. Render Network, which specializes in film rendering, launched NeRF, an AI-integrated 3D rendering technology, in February this year. By combining AI computation with 3D rendering, NeRF creates real-time immersive 3D assets that can be viewed on MR devices. With support from Apple's ARKit, anyone can generate 3D assets by photoscanning objects with a higher-end iPhone, and NeRF then uses AI-enhanced rendering to turn the crude photoscan into an immersive 3D asset whose lighting and reflections change with the viewing angle. This kind of spatial rendering will be an essential tool for MR content production, creating potential demand for Render Network.
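For readers unfamiliar with NeRF (Neural Radiance Fields): a neural network predicts a volume density σ and a view-dependent color c at any 3D point, and an image is produced by compositing those predictions along each camera ray, C ≈ Σ T_i (1 − exp(−σ_i δ_i)) c_i, where T_i is the light that survives the earlier samples. The sketch below shows only that compositing step, with the network's outputs passed in as plain lists; it is a generic illustration of the technique, not Render Network's or OTOY's implementation.

```python
import math
from typing import Sequence, Tuple

RGB = Tuple[float, float, float]


def render_ray(densities: Sequence[float], colors: Sequence[RGB],
               deltas: Sequence[float]) -> RGB:
    """Composite samples along one camera ray, front to back.

    densities: volume density sigma_i predicted at each sample point
    colors:    view-dependent RGB c_i predicted at each sample point
    deltas:    distance delta_i between consecutive samples
    """
    if not (len(densities) == len(colors) == len(deltas)):
        raise ValueError("inputs must have the same length")
    transmittance = 1.0  # T_i: fraction of light not yet absorbed
    out = [0.0, 0.0, 0.0]
    for sigma, color, delta in zip(densities, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this segment
        weight = transmittance * alpha
        for k in range(3):
            out[k] += weight * color[k]
        transmittance *= 1.0 - alpha  # multiply by exp(-sigma * delta)
    return (out[0], out[1], out[2])


# A dense red sample in front of a dense blue one: the ray sees (almost) pure red.
print(render_ray([100.0, 100.0], [(1.0, 0.0, 0.0), (0.0, 0.0, 1.0)], [1.0, 1.0]))
```

Because the color input depends on the viewing direction in a real NeRF, the same point can look different from different angles, which is what produces the angle-dependent lighting described above.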

But will RNDR capture this demand? Its 2022 GMV of about $2 million is a drop in the bucket compared with what the film and television industry spends. In short, RNDR may keep riding the "metaverse, XR, AI" narrative to new price highs, but it will still struggle to generate revenue that matches its valuation.

2. Metaverse – Otherside, Sandbox, Decentraland, HighStreet, etc.

Although I believe little has fundamentally changed for them, MR-related discussion seems to revolve around these large metaverse projects: Otherside from Yuga Labs (the Bored Ape team), Animoca Brands' The Sandbox, Decentraland (the oldest blockchain-based metaverse), and HighStreet, which aims to be the Shopify of the VR world. (For a detailed analysis of the metaverse track, see the "4. Business Analysis – Industry Analysis and Potential" section of https://research.mintventures.fund/2022/10/14/zh-apecoin-values-revisited-with-regulations-overhang-and-staking-rollout/)

However, as analyzed in the "Killer App has not yet appeared" section above, most existing developers that support VR are not focused exclusively on VR (and even those that are VR-only and lead a niche segment with millions of MAU do not have an overwhelming competitive edge). Existing products have not been carefully adapted to MR usage habits and interactions. Projects that have not yet launched are in fact not far ahead of the big companies and startups that now see Vision Pro's potential: once Unity is better integrated with Vision Pro, the learning curve for MR game development should fall, and experience accumulated in the previously narrow VR market will be hard to reuse in a product that is about to reach mass adoption.

Of course, if we talk about the first-mover advantage, projects that have already entered the VR space may have a slight advantage in terms of development progress, technology, and talent accumulation.

One More Thing

If you haven't seen it yet, the video below gives the most intuitive impression of the MR world: convenient and immersive, but also chaotic and disordered. The virtual and the real are fused so seamlessly that, for people who have come to depend on this blended reality, "losing their identity in the device" feels like the end of the world. The details in the video may still seem sci-fi and hard to grasp today, but this is likely the future we will face in the next few years.

https://www.youtube.com/watch?v=YJg02ivYzSs

This reminds me of another video. In 2010, about 13 years ago, Microsoft released Windows Phone 7 (as a Gen Z with little memory of that era, I find it hard to picture Microsoft once putting so much effort into the phone market) along with a satirical smartphone ad called "Really?". The people in the ad are constantly clutching their phones: staring at them while riding bicycles, while sunbathing on the beach, even while showering; falling down the stairs at a party because they are looking at their phones; and dropping their phones into a urinal out of distraction... Microsoft's message was that "Microsoft phones can save us from phone addiction." Of course, the attempt failed, and the ad "Really?" might as well be renamed "Reality." The "presence" and intuitiveness of smartphones proved more addictive than Microsoft's unfriendly "Windows computer shrunk onto a phone," just as the blend of reality and virtuality is more addictive than reality alone.

https://www.youtube.com/watch?v=4mhrKWVQ0sk

How can we seize such a future? Here are several directions we are exploring:

Immersive experience and narrative creation: First is video. After the release of Vision Pro, filming "3D depth" movies has never been easier, which will change the way people consume digital content: from "viewing at a distance" to "immersive experience." Beyond video, "3D spaces with content experiences" may be another track worth watching. This does not mean cookie-cutter scenes built from a template library, or a few seemingly explorable spaces lifted out of games. It means spaces with interactive, native content designed for 3D. Such spaces could include a cool piano coach who sits next to you on the piano bench, highlights the keys to press, and offers gentle encouragement when you're feeling down; a virtual sprite hidden in a corner of your room that holds the key to the game's next level; or a virtual girlfriend who understands you and keeps you company on a virtual walk... The creator economy that emerges here can be well supported by blockchain technology, which enables trust, automatic settlement, digitized assets, and low communication costs in transactions. With it, creators can interact with fans without intermediaries, without the hassle of registering a company and setting up Stripe for payments, and without worrying about platforms taking a 10% (Substack) to 70% (Roblox) cut, or shutting down and taking their hard work with them... A wallet, a composable content platform, and decentralized storage can solve these problems. Similar upgrades will occur in gaming and social spaces, to the point that the boundaries between games, movies, and social spaces will become increasingly blurred.
When the experience is no longer a large screen suspended several meters away, but rather right in front of you, with depth, distance, and spatial audio interaction, players are no longer just "viewers" but participants in the scene, and their actions can even affect the virtual world environment (e.g., lifting your hand in the jungle causes a butterfly to fly onto your fingertips).

Infrastructure and community for 3D digital assets: The 3D capture feature of Vision Pro will greatly lower the barrier to creating 3D video, giving rise to a new market for content production and consumption. The corresponding upstream and downstream infrastructure, such as asset trading and editing, may continue to be dominated by existing giants, or may, as with AIGC, be pioneered by startups.

Hardware/software upgrades to enhance immersive experiences: Whether it is Apple's research on "more detailed observation of the human body to create adaptive environments" or the enhancement of tactile and taste experiences, these are promising fields.

Of course, entrepreneurs in this field likely have a deeper understanding, sharper thinking, and more creative ideas than we do: feel free to DM @0xscarlettw to discuss and explore the possibilities of the spatial computing era.

Acknowledgements and References:

Thank you to the partners at Mint Ventures, @fanyayun and research partner @xuxiaopengmint, for their advice, review, and proofreading during the writing process of this article. The XR analysis framework is derived from the series of articles by @ballmatthew, Apple WWDC and developer courses, as well as the author's experience with various XR devices on the market.