IOSG Ventures: GPU supply crisis, a way out for AI startups

Original author: Mohit Pandit, IOSG Ventures

Summary

GPU shortages are real and supply is tight relative to demand, but there are enough underutilized GPUs to meet today's needs.

An incentive layer is needed to encourage participation in cloud computing and, ultimately, to coordinate computing tasks for inference or training. The DePIN (Decentralized Physical Infrastructure Network) model is well suited to this purpose.

Because supply-side incentives lower the cost of computation, the demand side finds this model attractive.

Not everything is rosy: choosing a Web3 cloud involves trade-offs, such as latency. Compared with a traditional GPU cloud, other trade-offs include insurance and service level agreements (SLAs).

The DePIN model has the potential to solve the GPU availability problem, but a fragmented model will not improve the situation. When demand is growing exponentially, fragmented supply is as good as no supply.

Market convergence is inevitable given the number of new market players.

Introduction

We are on the verge of a new era of machine learning and artificial intelligence. While AI has existed in various forms for some time (in the broad sense of computer-controlled devices instructed to do things humans can do, such as washing machines), we are now witnessing the emergence of sophisticated cognitive models capable of performing tasks that require intelligent human behavior. Notable examples include OpenAI’s GPT-4 and DALL-E 2, and Google’s Gemini.

In the rapidly growing field of AI, we must recognize the two sides of development: model training and inference. Inference is the use of a trained model to produce outputs, while training is the complex process (involving machine learning algorithms, datasets, and computing power) of building an intelligent model.

In the case of GPT-4, all the end user cares about is inference: getting output from a model based on textual input. However, the quality of this inference depends on model training. To train effective AI models, developers need access to comprehensive underlying data sets and massive computing power. These resources are mainly concentrated in the hands of industry giants including OpenAI, Google, Microsoft and AWS.

The formula is simple: better model training → stronger inference capabilities → more users → more revenue → more resources for further training.

These major players have access to large underlying datasets and, crucially, control large amounts of computing power, creating barriers to entry for emerging developers. As a result, new entrants often struggle to acquire sufficient data or to obtain the necessary computing power at an economically feasible scale and cost. With this in mind, we see great value in decentralized networks that democratize access to resources, primarily by providing computing resources at scale and at lower cost.

GPU supply issues

NVIDIA CEO Jensen Huang said at CES 2019 that Moore's Law is over. Meanwhile, today's GPUs are heavily underutilized, even during deep learning training cycles.

Here are typical GPU utilization numbers for different workloads:

Idle (just booted into the Windows operating system): 0-2%

General productivity tasks (writing, light browsing): 0-15%

Video playback: 15-35%

PC gaming: 25-95%

Graphic design/photo editing, active workload (Photoshop, Illustrator): 15-55%

Video editing (active): 15-55%

Video editing (rendering): 33-100%

3D rendering (CUDA/OptiX): 33-100% (frequently misreported by Windows Task Manager; figures from GPU-Z)

Most consumer devices with GPUs fall into the first three categories.
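Using only the ranges listed above, a rough lower bound on idle capacity for each of the first three categories is 100% minus the top of its range. The short Python sketch below does that arithmetic; it deliberately ignores memory, power, and device availability, so it illustrates headroom rather than usable supply.

```python
# Utilization ranges (percent) for the first three workload categories listed above.
CONSUMER_WORKLOADS = {
    "idle": (0, 2),
    "general_productivity": (0, 15),
    "video_playback": (15, 35),
}


def idle_headroom(ranges: dict) -> dict:
    """Minimum idle capacity (percent) per workload: 100 minus the top of its range."""
    return {name: 100 - high for name, (_low, high) in ranges.items()}


headroom = idle_headroom(CONSUMER_WORKLOADS)
print(headroom)                # {'idle': 98, 'general_productivity': 85, 'video_playback': 65}
print(min(headroom.values()))  # 65
```

Even the busiest of the three (video playback) leaves at least 65% of the GPU unused.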

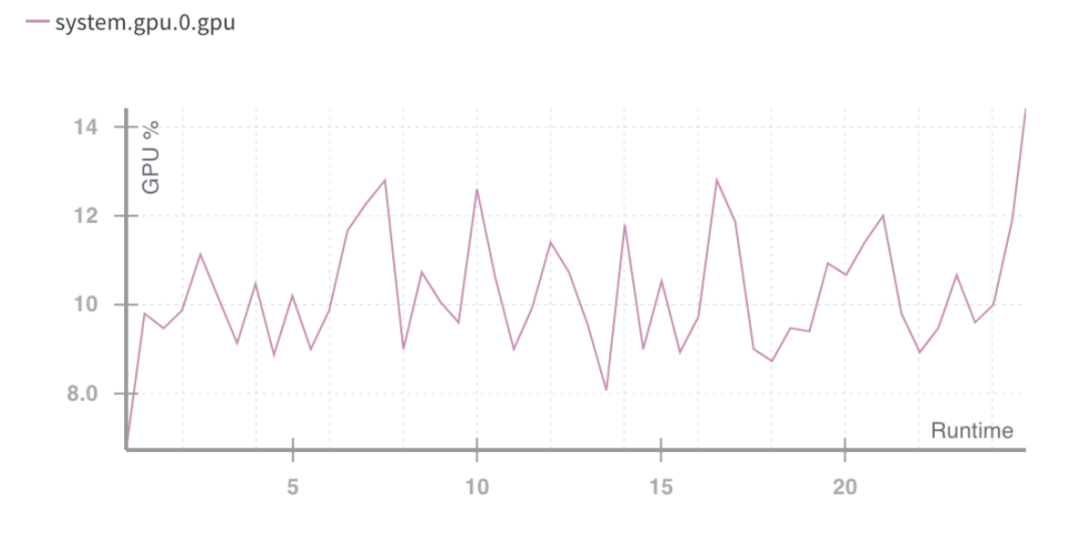

GPU runtime utilization (%). Source: Weights & Biases

The above situation points to a problem: poor utilization of computing resources.

Consumer GPU capacity needs to be used better: utilization remains sub-optimal even when it spikes. This points to two things that need to happen:

Resource (GPU) aggregation

Parallelization of training tasks

There are currently four types of hardware on offer:

· Data center GPU (e.g., Nvidia A100)

· Consumer GPU (e.g., Nvidia RTX 3060)

· Custom ASIC (e.g., Graphcore IPU)

· Consumer SoC (e.g., Apple M2)

Apart from ASICs (which are built for a specific purpose), these types of hardware can be pooled and used more efficiently. With so many of these chips in the hands of consumers and data centers, a DePIN model that aggregates supply may be the way forward.

GPU production is a volume pyramid: consumer GPUs are produced in the largest volumes, while premium GPUs like the NVIDIA A100 and H100 are produced in the smallest volumes (but offer higher performance). These advanced chips cost 15 times more to produce than consumer GPUs, but sometimes don't deliver 15 times the performance.

The entire cloud computing market is worth approximately $483 billion today and is expected to grow at a compound annual growth rate (CAGR) of approximately 27% over the next few years. There will be approximately 13 billion hours of ML compute demand in 2023, which equates to approximately $56 billion of spending on ML compute in 2023 at current standard rates. This market is also growing rapidly, doubling every 3 months.
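The growth figures above can be sanity-checked with a few lines of arithmetic. This minimal Python sketch converts a doubling period into an annual multiplier, derives the implied average hourly rate from the quoted spend and hours, and compounds the market size. The three-year horizon is our own illustrative choice, not a figure from the source.

```python
def annual_multiplier(doubling_months: float) -> float:
    """Growth factor over 12 months for a quantity that doubles every `doubling_months`."""
    return 2 ** (12 / doubling_months)


def project(value: float, cagr: float, years: int) -> float:
    """Compound `value` at annual rate `cagr` (0.27 means 27%) for `years` years."""
    return value * (1 + cagr) ** years


# Doubling every 3 months means 2**4 = 16x growth per year.
print(annual_multiplier(3))  # 16.0

# Implied average price: $56B of spend over 13B hours of ML compute.
print(round(56e9 / 13e9, 2))  # 4.31 (dollars per hour)

# A $483B cloud market compounding at ~27% a year, three years out (illustrative horizon).
print(round(project(483e9, 0.27, 3) / 1e9))  # 989 (billion dollars)
```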

GPU requirements

Computing needs come primarily from AI developers (researchers and engineers). Their main needs are price (low-cost compute), scale (large amounts of GPU compute), and user experience (ease of access and use). Over the past two years, demand for GPUs has surged, driven by AI-based applications and the development of ML models. Developing and running ML models requires:

Heavy computation (through access to multiple GPUs or data centers)

Ability to perform model training, fine tuning, and inference, with each task deployed on a large number of GPUs for parallel execution

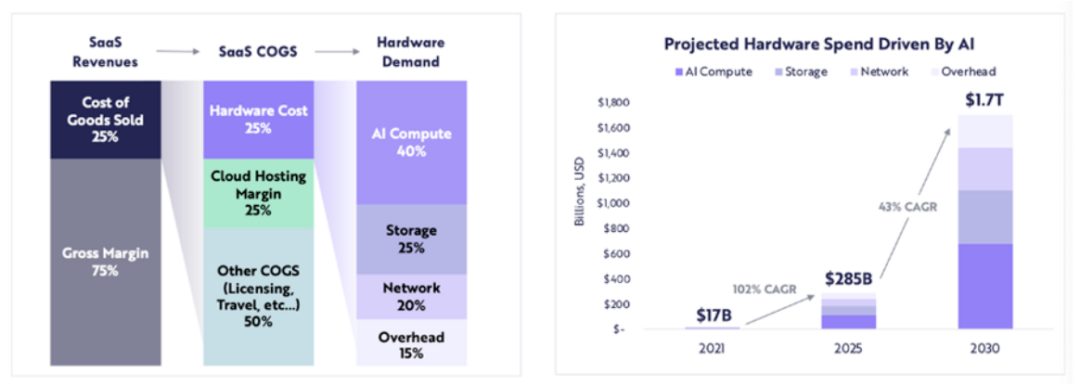

Computing-related hardware spending is expected to grow from $17 billion in 2021 to $285 billion in 2025 (a CAGR of approximately 102%), and ARK expects it to reach $1.7 trillion by 2030 (a 43% CAGR).

Source: ARK Research
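The ARK figures are internally consistent, as a quick check shows. The sketch back-solves the CAGR from the 2021 and 2025 endpoints, then compounds the 2025 figure at 43% for five years. Chaining the two projections this way is our assumption about how they relate, not something ARK states.

```python
def implied_cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate that takes `start` to `end` over `years` years."""
    return (end / start) ** (1 / years) - 1


# $17B (2021) -> $285B (2025): four years of compounding.
print(f"{implied_cagr(17e9, 285e9, 4):.1%}")  # 102.3%

# $285B (2025) compounding at 43% a year for five years, to 2030.
print(round(285e9 * 1.43 ** 5 / 1e12, 2))  # 1.7 (trillion dollars)
```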

With many LLMs still in the innovation stage, and competition driving demand for more parameters and for retraining, we can expect continued demand for high-quality compute in the coming years.

As supply of new GPUs tightens, where does blockchain come into play?

When resources are scarce, the DePIN model can help:

Bootstrap the supply side and build up a large supply

Coordinate and complete tasks

Make sure tasks are completed correctly

Properly reward providers for getting the job done
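The last two steps (verifying work and rewarding providers) can be sketched as a pro-rata payout. This is a minimal, hypothetical design: a fixed token reward per epoch is split in proportion to verified GPU-hours, and unverified work earns nothing. The names `WorkReport` and `distribute_rewards` are illustrative and do not come from any specific protocol's token model.

```python
from dataclasses import dataclass


@dataclass
class WorkReport:
    provider: str
    gpu_hours: float
    verified: bool  # set by whatever verification step the network uses


def distribute_rewards(reports: list, epoch_reward: float) -> dict:
    """Split `epoch_reward` tokens pro rata over verified GPU-hours; unverified work earns nothing."""
    verified_hours: dict = {}
    for r in reports:
        if r.verified:
            verified_hours[r.provider] = verified_hours.get(r.provider, 0.0) + r.gpu_hours
    total = sum(verified_hours.values())
    if total == 0:
        return {}
    return {p: epoch_reward * h / total for p, h in verified_hours.items()}


reports = [
    WorkReport("alice", 30.0, True),
    WorkReport("bob", 10.0, True),
    WorkReport("carol", 50.0, False),  # failed verification, so no reward
]
print(distribute_rewards(reports, 1000.0))  # {'alice': 750.0, 'bob': 250.0}
```

Real networks may also weight rewards by hardware tier, uptime, or stake; those choices are left out here.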

Aggregating every type of GPU (consumer, enterprise, high-performance, etc.) can create utilization problems: when computing tasks are divided, an A100 should not be spent on simple calculations. GPU networks therefore need to decide which types of GPUs to include, based on their go-to-market strategy.
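One simple way to keep high-end chips free for demanding work is to send each task to the least capable GPU tier that can hold it. The sketch below is hypothetical: it uses memory as the only criterion and a two-tier catalogue, whereas a real scheduler would also weigh throughput, interconnect, price, and availability.

```python
# Hypothetical tiers, ordered from least to most capable, with memory per GPU in GB.
GPU_TIERS = [
    ("consumer", 12),    # e.g. an RTX 3060 (12 GB variant)
    ("datacenter", 80),  # e.g. an A100 80 GB
]


def assign_tier(task_memory_gb: float) -> str:
    """Pick the least capable tier whose memory fits the task, keeping big GPUs free for big jobs."""
    for name, memory_gb in GPU_TIERS:
        if task_memory_gb <= memory_gb:
            return name
    raise ValueError(f"no single GPU fits a {task_memory_gb} GB task; it must be split across GPUs")


tasks = {"small_inference": 4, "fine_tune": 40}
print({task: assign_tier(mem) for task, mem in tasks.items()})
# {'small_inference': 'consumer', 'fine_tune': 'datacenter'}
```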

When the computing resources themselves are distributed (sometimes globally), the user or the protocol must choose which computing framework to use. Providers like io.net let users choose among three options: Ray, Mega-Ray, or deploying a Kubernetes cluster to run computing tasks in containers. Other distributed computing frameworks exist, such as Apache Spark, but Ray is the most commonly used. Once the selected GPUs complete their computational tasks, the outputs are reconstructed to produce the trained model.
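The decompose-then-reconstruct pattern can be illustrated with data parallelism, one of several ways to split training. This plain-Python sketch does not use Ray or Kubernetes; the "workers" run sequentially, each computes the gradient of a toy linear model on its own data shard, and the shard gradients are recombined with a size-weighted average, which equals the full-batch gradient.

```python
def shard_gradient(w: float, xs: list, ys: list) -> float:
    """Mean-squared-error gradient dL/dw for the model y = w * x over one shard."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def split(data: list, n_workers: int) -> list:
    """Partition data into n_workers contiguous shards whose sizes differ by at most one."""
    if not 0 < n_workers <= len(data):
        raise ValueError("need between 1 and len(data) workers")
    k, r = divmod(len(data), n_workers)
    shards, start = [], 0
    for i in range(n_workers):
        end = start + k + (1 if i < r else 0)
        shards.append(data[start:end])
        start = end
    return shards


def data_parallel_gradient(w: float, xs: list, ys: list, n_workers: int) -> float:
    """Decompose: each (simulated) worker computes its shard's gradient.
    Reconstruct: combine the shard gradients, weighted by shard size."""
    x_shards, y_shards = split(xs, n_workers), split(ys, n_workers)
    grads = [shard_gradient(w, sx, sy) for sx, sy in zip(x_shards, y_shards)]
    sizes = [len(s) for s in x_shards]
    return sum(g * n for g, n in zip(grads, sizes)) / sum(sizes)


# Toy data generated from y = 3x; gradient descent should recover w close to 3.
xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0]
ys = [3.0 * x for x in xs]
w = 0.0
for _ in range(50):
    w -= 0.01 * data_parallel_gradient(w, xs, ys, n_workers=3)
print(round(w, 3))  # 3.0
```

In a real cluster, the reconstruction step is a network collective (such as an all-reduce), which is where the latency discussed below comes from.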

A well-designed token model would subsidize computing costs for GPU providers, and many developers (the demand side) would find such a scheme attractive. However, distributed computing systems inherently add latency, because the computation must be decomposed and the outputs reconstructed. Developers therefore need to trade off the cost-effectiveness of training a model against the time it takes.
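The cost-versus-time trade-off can be made concrete with a toy model in which coordination overhead stretches wall-clock time and the GPUs are billed for that extra time too. Every input below is hypothetical except the $0.55/hour figure quoted later for Gensyn; the overhead fractions in particular are illustrative guesses, not measurements.

```python
def job_estimate(gpu_hours: float, price_per_gpu_hour: float, n_gpus: int,
                 overhead_fraction: float) -> tuple:
    """Return (wall-clock hours, total cost) for a job needing `gpu_hours` of pure compute.

    `overhead_fraction` stands in for time lost to decomposing the job, moving data
    between nodes, and reconstructing the output; the GPUs are billed for it too.
    """
    wall_hours = gpu_hours / n_gpus * (1 + overhead_fraction)
    cost = wall_hours * n_gpus * price_per_gpu_hour
    return wall_hours, cost


options = {
    "centralized cloud": dict(price_per_gpu_hour=2.00, overhead_fraction=0.05),
    "distributed network": dict(price_per_gpu_hour=0.55, overhead_fraction=0.60),
}
for name, params in options.items():
    hours, cost = job_estimate(gpu_hours=1000, n_gpus=8, **params)
    print(f"{name}: {hours:.2f} h wall-clock, ${cost:,.2f}")
# centralized cloud: 131.25 h wall-clock, $2,100.00
# distributed network: 200.00 h wall-clock, $880.00
```

Under these assumptions the distributed option is cheaper but slower; which one wins depends on how much a developer values time to results.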

Does a distributed computing system need its own chain?

Networks can charge in two ways:

Charge by task (or computing cycle)

Charge by time unit

In the first approach, one could build a proof-of-work chain similar to what Gensyn is attempting, where different GPUs share the “work” and are rewarded for it. For a more trustless model, Gensyn uses verifiers and whistleblowers, who are rewarded, based on the proofs generated by the solvers, for maintaining the integrity of the system.
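The idea behind verifiers can be illustrated with generic probabilistic spot-checking: re-execute a random sample of a solver's tasks and flag any mismatch. This is a simplification for illustration and not Gensyn's actual verification protocol; the names are hypothetical, and a non-empty result is where a dispute (the whistleblower role) would begin.

```python
import math
import random


def spot_check(results: list, recompute, sample_rate: float, rng: random.Random) -> list:
    """Re-execute a random sample of tasks and return the indices whose claimed result is wrong.

    results:     values a solver claims to have computed, one per task index
    recompute:   the verifier's own function from task index to correct value
    sample_rate: fraction of tasks the verifier re-executes (between 0 and 1)
    """
    n_samples = max(1, round(len(results) * sample_rate))
    sampled = rng.sample(range(len(results)), n_samples)
    return sorted(i for i in sampled if results[i] != recompute(i))


def detection_probability(n_tasks: int, n_bad: int, n_samples: int) -> float:
    """Chance that sampling n_samples tasks without replacement hits at least one bad result."""
    return 1 - math.comb(n_tasks - n_bad, n_samples) / math.comb(n_tasks, n_samples)


def square(i: int) -> int:
    """The toy 'task': square the task index."""
    return i * i


cheater = [0 if i % 4 == 0 else i * i for i in range(20)]  # wrong at i = 4, 8, 12, 16
print(spot_check(cheater, square, sample_rate=1.0, rng=random.Random(0)))  # [4, 8, 12, 16]
print(round(detection_probability(100, 10, 10), 2))  # 0.67
```

Checking 10 of 100 tasks catches a solver that faked 10 of them only about two-thirds of the time, which is why such schemes pair sampling with penalties large enough to make cheating unprofitable.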

Exabits is another proof-of-work system; instead of partitioning tasks, it treats its entire GPU network as a single supercomputer. This model seems better suited to large LLMs.

Akash Network has added GPU support and begun aggregating GPUs. It has an underlying L1 that reaches consensus on state (recording the work done by GPU providers), a marketplace layer, and a container orchestration system such as Kubernetes or Docker Swarm to manage the deployment and scaling of user applications.

For a trustless system, the proof-of-work chain model is the most effective, since it ensures the coordination and integrity of the protocol.

Systems like io.net, on the other hand, are not structured as a chain. They focus on the core problem of GPU availability and charge customers per unit of time (per hour). They don't need a verifiability layer because customers essentially lease the GPUs and use them as they please for a specific lease period. Tasks are not divided by the protocol itself; developers do that using open-source frameworks like Ray, Mega-Ray, or Kubernetes.
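The two charging models shift different risks onto the customer. Under time-based leasing, idle GPU time is still paid for and no verification is needed; under task-based billing, only completed (and, in a trustless network, verified) work is paid for. The sketch below uses hypothetical rates and function names, not any provider's actual pricing.

```python
def lease_cost(hours: float, n_gpus: int, hourly_rate: float) -> float:
    """Time-based billing: every leased GPU-hour is paid for, whether busy or idle."""
    return hours * n_gpus * hourly_rate


def cost_per_useful_gpu_hour(hours: float, n_gpus: int, hourly_rate: float,
                             utilization: float) -> float:
    """Effective price of each GPU-hour of real work once idle leased time is included."""
    return lease_cost(hours, n_gpus, hourly_rate) / (hours * n_gpus * utilization)


def task_cost(completed_tasks: int, price_per_task: float) -> float:
    """Task-based billing: only completed (and, in a trustless network, verified) tasks are paid for."""
    return completed_tasks * price_per_task


# Hypothetical: 4 GPUs leased for 10 hours at $1.00/hour but kept only 50% busy.
print(lease_cost(10, 4, 1.00))                     # 40.0
print(cost_per_useful_gpu_hour(10, 4, 1.00, 0.5))  # 2.0
print(task_cost(120, 0.25))                        # 30.0
```

At 50% utilization, the lease effectively doubles the price of each useful GPU-hour, which is the cost a customer accepts in exchange for not needing a verification layer.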

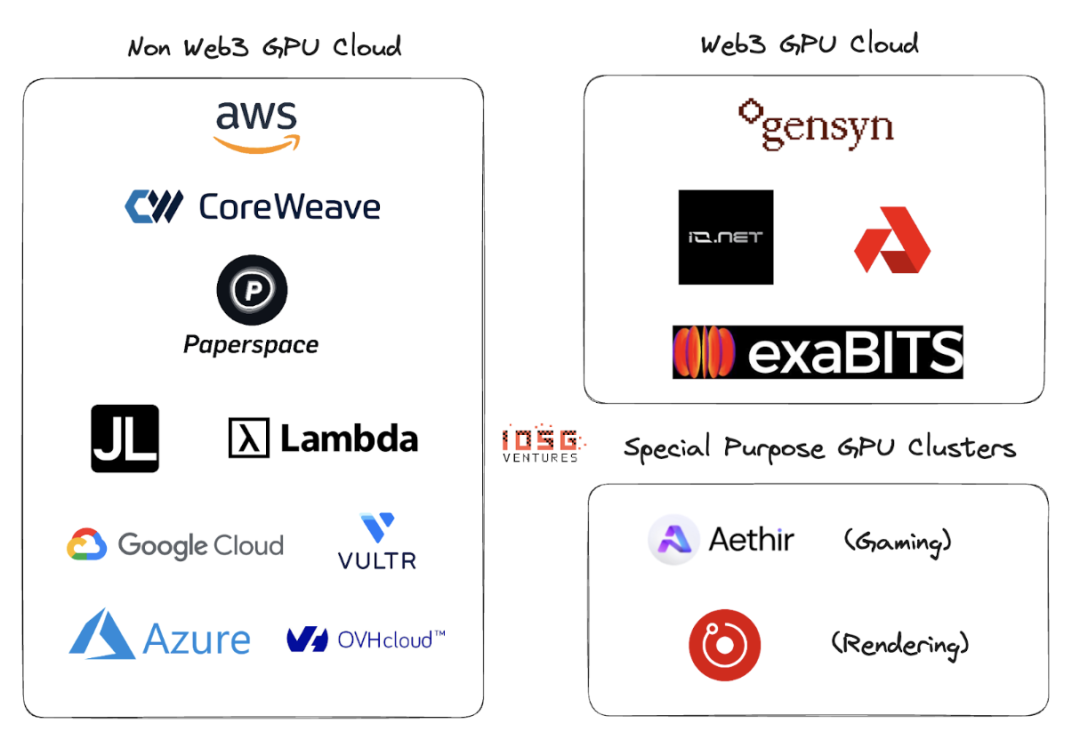

Web2 and Web3 GPU Cloud

Web2 has many players in the GPU cloud (GPU-as-a-service) space, including AWS, CoreWeave, Paperspace, Jarvis Labs, Lambda Labs, Google Cloud, Microsoft Azure, and OVHcloud.

This is the traditional cloud business model: customers rent a GPU (or multiple GPUs) by the unit of time (usually by the hour) when they need compute. There are many different solutions for different use cases.

The main differences between Web2 and Web3 GPU clouds are the following parameters:

1. Cloud setup costs

Token incentives significantly reduce the cost of setting up a GPU cloud. OpenAI is reportedly seeking to raise $1 trillion to fund the production of computing chips, which suggests that, without token incentives, it would take at least $1 trillion to challenge the market leader.

2. Computation time

A Web2 GPU cloud will be faster because the rented GPU clusters are located within one geographic area, whereas a Web3 system may be far more widely distributed, with latency coming from inefficient problem partitioning, load balancing, and, most importantly, bandwidth.
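To see why bandwidth dominates, consider the gradient synchronization step of data-parallel training. In a ring all-reduce, each of N nodes sends about 2(N-1)/N times the gradient size per step. The sketch below computes a bandwidth-only lower bound for a hypothetical 7B-parameter model with 2-byte (fp16) gradients on 8 nodes; the two link speeds are illustrative, and latency, compute overlap, and gradient compression are ignored.

```python
def ring_allreduce_bytes_per_node(payload_bytes: float, n_nodes: int) -> float:
    """Bytes each node sends in a ring all-reduce: 2 * (N - 1) / N * payload."""
    return 2 * (n_nodes - 1) / n_nodes * payload_bytes


def sync_seconds(payload_bytes: float, n_nodes: int, link_gbit_per_s: float) -> float:
    """Bandwidth-only lower bound on one gradient sync (ignores per-message latency)."""
    bytes_per_s = link_gbit_per_s * 1e9 / 8
    return ring_allreduce_bytes_per_node(payload_bytes, n_nodes) / bytes_per_s


grad_bytes = 7e9 * 2  # hypothetical 7B-parameter model, 2-byte (fp16) gradients
for label, gbit in [("datacenter interconnect, 100 Gbit/s", 100), ("home broadband, 1 Gbit/s", 1)]:
    print(f"{label}: {sync_seconds(grad_bytes, 8, gbit):.2f} s per step")
# datacenter interconnect, 100 Gbit/s: 1.96 s per step
# home broadband, 1 Gbit/s: 196.00 s per step
```

A 100x slower link means a 100x slower sync on every training step, and in practice the slowest link in the ring sets the pace.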

3. Computation costs

Thanks to token incentives, Web3 compute will cost significantly less than under the existing Web2 model.

Computational cost comparison:

These numbers may change as more GPU supply and more clusters come online. Gensyn claims to offer A100s (and equivalents) for as little as $0.55 per hour, and Exabits promises similar cost savings.

4. Compliance

Compliance is not easy in a permissionless system. However, Web3 systems like io.net and Gensyn do not position themselves as permissionless. They handle compliance requirements such as GDPR and HIPAA during GPU onboarding, data loading, data sharing, and result sharing.

Ecosystem

Gensyn, io.net, Exabits, Akash

Risks

1. Demand risk

I think the top LLM players will either continue to accumulate GPUs or use GPU clusters like NVIDIA's Selene supercomputer, which has a peak performance of 2.8 exaFLOP/s. They won't rely on consumers or long-tail cloud providers to pool GPUs. Currently, top AI organizations compete on quality more than on cost.

Organizations with lighter ML workloads will seek cheaper computing resources, such as blockchain-based, token-incentivized GPU clusters that can serve them while making better use of existing GPUs. (This assumes those organizations prefer to train their own models rather than use an existing LLM.)

2. Supply risk

With massive amounts of capital pouring into ASIC research, and inventions like the Tensor Processing Unit (TPU), the GPU supply issue may resolve itself. If these ASICs offer a good performance-to-cost trade-off, the GPUs currently hoarded by large AI organizations may return to the market.

Do blockchain-based GPU clusters solve a long-term problem? Although blockchain networks can support chips other than GPUs, the demand side will ultimately determine the direction of projects in this space.

Conclusion

A fragmented network of small GPU clusters won’t solve the problem. There is no place for long-tail GPU clusters. GPU providers (retail or smaller cloud players) will gravitate toward larger networks because those networks offer better incentives, which will be a function of a good token model and of the supply side's ability to support multiple computing types.

GPU clusters may consolidate the way CDNs did. If large players want to compete with existing leaders such as AWS, they may start sharing resources to reduce network latency and improve the geographic proximity of nodes.

As the demand side grows (more models to train, each with more parameters), Web3 players must be very active in supply-side business development. If too many clusters compete for the same customer base, supply will fragment (which invalidates the entire concept) even as demand (measured in TFLOPs) grows exponentially.

io.net has stood out from many competitors by starting with an aggregator model. It has aggregated GPUs from Render Network and Filecoin miners to provide capacity, while also bootstrapping supply on its own platform. This could be the winning direction for DePIN GPU clusters.