Original authors: Patrick Bush, Matthew Sigel

Original compilation: Lynn, Mars Finance

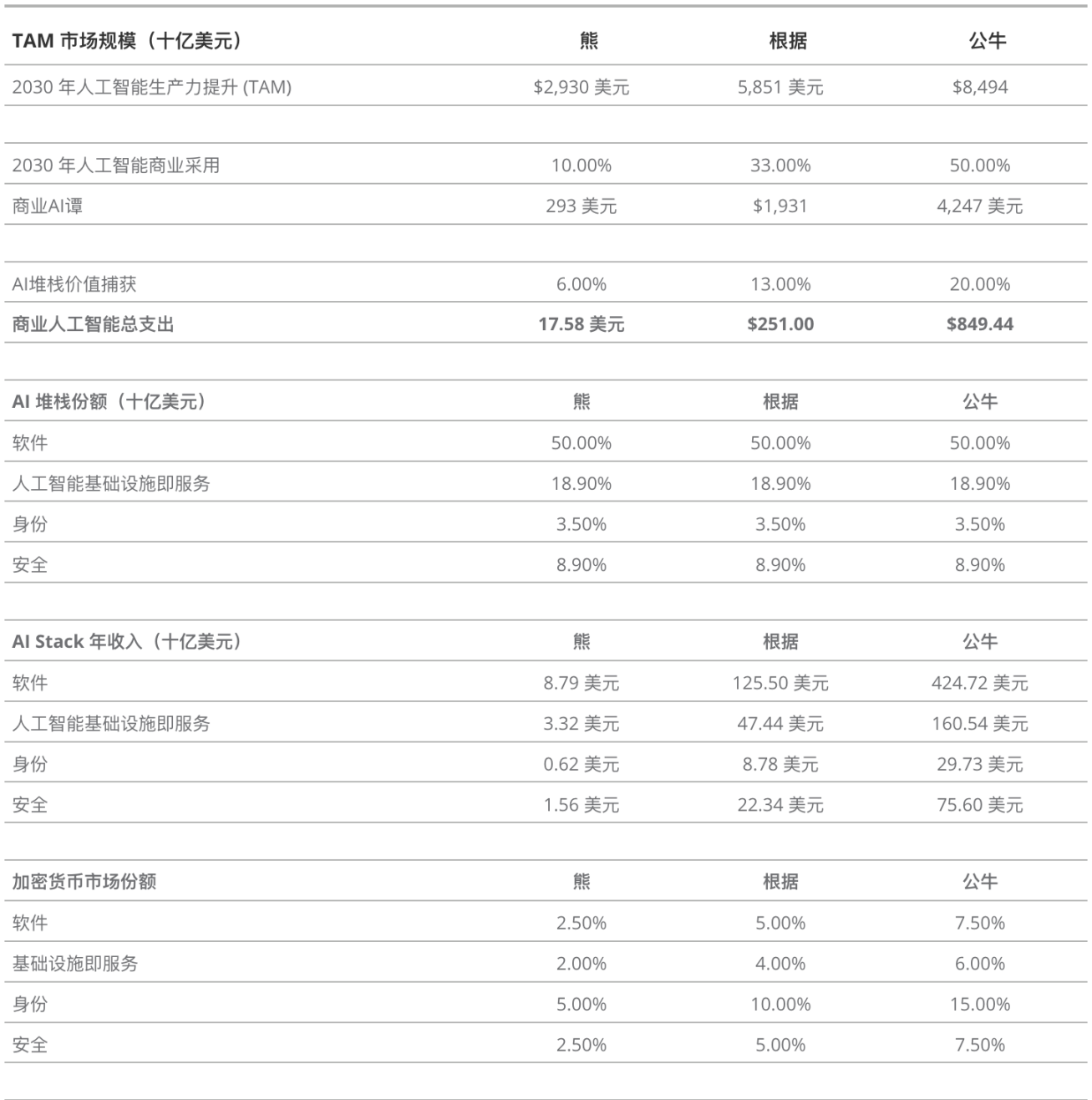

We outline AI-crypto revenue scenarios for 2030, highlighting our $10.2B base case and the critical role public blockchains play in driving AI adoption by providing essential functionality.

Please note that VanEck may hold positions in the digital assets listed below.

Key points:

Crypto AI revenue is expected to reach $10.2B by 2030, according to our base case

Blockchain technology could become a key driver of AI adoption and advancement of decentralized AI solutions

Integration with cryptographic incentives improves security and efficiency of AI models

Blockchain could be the solution to AI authentication and data integrity challenges

Public blockchains may very well be the key to unlocking the widespread adoption of artificial intelligence (AI), and AI applications will become crypto’s raison d’être. This is because crypto provides important foundational elements that AI requires, such as transparency, immutability, well-defined ownership properties, and adversarial testing environments. We believe these features will help AI reach its full potential. Based on estimates of AI growth, we project that AI-focused crypto projects will reach $10.2B in annual revenue by 2030. In this article, we speculate on the role of crypto in promoting AI adoption and the value that crypto will bring to the AI business.


We’ve found that the best applications of cryptocurrencies in artificial intelligence are:

Provide distributed computing resources

Model testing, fine-tuning and validation

Copyright protection and data integrity

AI security

Identity

Crypto is useful for AI because it already solves many of AI’s current and future challenges. In essence, crypto solves a coordination problem: it brings together human, computational, and monetary resources to run open source software. It does this by rewarding those who create, support, and use each blockchain network with tokens tied to the value of that network. This reward system can be used to bootstrap different components of the AI value stack. One important implication of combining crypto with AI is the use of token incentives to build out the necessary physical infrastructure, such as GPU clusters, dedicated to training, fine-tuning, and serving generative models. Because crypto is an adversarial environment that uses tokens to reward desired user behavior, it is the best foundation for testing and fine-tuning AI models so that their outputs meet defined quality standards.

Blockchain also brings transparency to digital ownership, which could help resolve some of the open source software issues AI will face in court, as highlighted by the New York Times’ lawsuit against OpenAI and Microsoft. That is, crypto can transparently prove ownership and copyright protection for data owners, model builders, and model users. This transparency also extends to publishing mathematical proofs of a model’s validity on a public blockchain. Finally, because of unforgeable digital signatures and data integrity, we believe public blockchains will help mitigate the identity and security issues that would otherwise undermine the effectiveness of AI.

Defining the role of cryptocurrencies in artificial intelligence enterprises

Estimated Crypto AI Revenues in 2030: Bear, Base Case, Bull Scenario

Source: Morgan Stanley, Bloomberg, VanEck Research As of January 29, 2024. Past performance is no guarantee of future results. The information, valuation scenarios and price targets provided in this blog are not intended to serve as financial advice or any call to action, recommendation to buy or sell, or as a prediction of the future performance of the AI business. Actual future performance is unknown and may differ materially from the hypothetical results described herein. There may be risks or other factors not considered in the scenarios presented that could hinder performance. These are merely simulation results based on our research and are for illustrative purposes only. Please do your own research and draw your own conclusions.

To forecast the crypto-AI market, we first estimate the total addressable market (TAM) of business productivity gains from AI, taking McKinsey’s 2022 assumptions as our baseline. We then apply economic and productivity growth assumptions to McKinsey’s forecasts to arrive at a base case TAM of $5.85T in 2030. In this base case, we assume that GDP grows at 3% and that AI productivity growth is 50% higher than GDP growth. We then forecast the market penetration of AI among global enterprises (33% in the base case) and apply it to our initial TAM, which yields $1.93T in AI-driven productivity improvements for enterprises. To calculate revenue for all AI businesses, we assume that 13% of these productivity gains are captured by AI businesses (or, equivalently, spent by enterprise customers) as revenue. We derive this share from the average share of revenue that S&P 500 companies spend on labor costs, assuming that AI spending should be similar. Next, we apply Bloomberg Intelligence’s forecasts of the AI value stack distribution to estimate annual revenue for each AI business group. Finally, we estimate crypto’s market share of each AI business to arrive at final figures for each scenario and each market.
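The base-case funnel described above can be reproduced with a few lines of arithmetic. This is a minimal sketch that takes the $5.85T TAM, the 33% penetration rate, and the 13% revenue capture rate as given; the function and variable names are illustrative and not part of the original research.

```python
# Base-case inputs as stated in the article (USD).
BASE_TAM_2030 = 5.85e12         # productivity-gain TAM in 2030
ENTERPRISE_PENETRATION = 0.33   # share of global enterprises adopting AI
REVENUE_CAPTURE = 0.13          # share of productivity gains paid to AI businesses


def ai_revenue_funnel(tam: float, penetration: float, capture: float) -> tuple[float, float]:
    """Return (enterprise productivity gain, revenue captured by AI businesses)."""
    productivity_gain = tam * penetration
    ai_revenue = productivity_gain * capture
    return productivity_gain, ai_revenue


gain, revenue = ai_revenue_funnel(BASE_TAM_2030, ENTERPRISE_PENETRATION, REVENUE_CAPTURE)
print(f"Productivity gain:   ${gain / 1e12:.2f}T")
print(f"AI business revenue: ${revenue / 1e9:.0f}B")
```

Under these inputs the funnel gives roughly $1.93T in productivity gains and about $251B in total AI business revenue, which is the figure the later market-share estimates build on.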

We envision a future where decentralized AI models built using open source public repositories are applied to every imaginable use case. In many cases, these open source models outperform centralized AI creations. This assumption rests on the observation that open source communities bring together enthusiasts and hobbyists who have unique motivations to improve things. We’ve seen open source internet projects disrupt traditional businesses. The best examples of this phenomenon are Wikipedia, which effectively ended the commercial encyclopedia business, and Twitter, which disrupted the news media. These communities succeed where traditional enterprises fail because they coordinate and inspire people to deliver value through a combination of social influence, ideology, and group solidarity. Simply put, these open source communities are successful because their members care.

Combining open source AI models with crypto incentives can extend the reach of these emerging communities, giving them the financial power to build the necessary infrastructure and attract new participants. Applying this premise to AI would be a fascinating combination of passion and financial resources. AI models will be tested in crypto-incentivized competitions, establishing an environment for benchmarking model evaluation. In this environment, the most effective models and evaluation criteria win because the value of each model is clearly quantified. Therefore, in our base case, we expect blockchain-generated AI models to account for 5% of all AI software revenue. This estimate spans hardware, software, services, advertising, games, and more, reflecting shifts in the volume of business operations. We expect AI software revenue to account for about half of all AI revenue, or about $125.5B. A 5% market share for open source models therefore equates to $6.27B in revenue from crypto-token-backed AI models.

We predict that the TAM for compute (or AI infrastructure as a service) for fine-tuning, training, and inference may reach $47.44B by 2030. As AI becomes widely adopted, it will become integral to many functions of the world economy, and the provision of compute and storage can be envisioned as a utility similar to electricity generation and distribution. In this dynamic, the vast majority of the base load will come from GPU cloud hyperscalers such as Amazon and Google, whose combined market share will approach a Pareto-style 80%. We see blockchain-distributed backend server infrastructure catering to specialized needs and acting as a peak provider during periods of high network demand. For producers of customized AI models, crypto storage and compute providers offer benefits such as on-demand service delivery, shorter SLA lock-in periods, more customized computing environments, and better handling of latency-sensitive workloads. Additionally, decentralized GPUs can be seamlessly integrated with decentralized AI models in smart contracts, enabling permissionless use cases where AI agents scale their own computing needs. Thinking of blockchain-provided GPUs as the Uber/Lyft equivalent of AI computing infrastructure, we believe that blockchain-provided compute and storage will account for 20% of the non-hyperscale market for AI infrastructure and may generate $1.90B in revenue.

Defining “identity” in the context of AI agents and models through provable on-chain humanity can be viewed as a Sybil defense mechanism for the world’s computer networks. We can estimate the cost of this service by examining the fees associated with securing different blockchain networks. In 2023, these costs for Bitcoin, Ethereum, and Solana were approximately 1.71%, 4.3%, and 5.57% of each network’s inflationary issuance value, respectively. Conservatively, we can infer that identity should account for around 3.5% of the AI market. Considering that AI software has a TAM of $125.5B, this corresponds to annual revenue of $8.78B. Because we believe crypto offers the best solution to the identity problem, we expect it to capture 10% of this end market, with annual revenue of approximately $878 million.

AI security is expected to become another important component of AI systems, with the basic requirement being to verify that models are functioning correctly with uncorrupted, relevant, and up-to-date data. As AI expands into applications where human lives are at risk, such as self-driving cars, factory robots, and healthcare systems, the tolerance for failure becomes slim. The need for accountability in the event of an accident will drive the insurance market to require concrete proof of safety. Public blockchains are ideal for this functionality because they can publish “proofs of security” on an immutable ledger that anyone can inspect. This business can be considered similar to compliance for financial institutions. Considering that U.S. commercial and investment banks generate $660B in revenue while spending $58.75B on compliance (8.9% of revenue), we expect AI security to account for approximately $22.34B of the $251B AI TAM. While crypto has the potential to enhance AI security, given the U.S. government’s focus on AI, we believe much of AI compliance will be centralized. Therefore, we estimate that crypto will account for around 5% of this market, or around $1.12B.
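As a rough cross-check, the four base-case segment estimates discussed above can be recomputed and summed. The sketch below rests on two labeled assumptions: the compute segment is read as 20% of the roughly 20% of the market not served by hyperscalers, and the identity market is computed as 3.5% of the $251B total AI revenue, because that is the base that reproduces the stated $8.78B figure. All names are illustrative.

```python
# All values in USD billions, taken from the base case described in the text.
TOTAL_AI_REVENUE = 251.0
AI_SOFTWARE_REVENUE = 125.5
COMPUTE_TAM = 47.44

segments = {
    # 5% of AI software revenue goes to crypto-backed open source models.
    "open source AI models": AI_SOFTWARE_REVENUE * 0.05,
    # Assumption: ~20% of compute is non-hyperscale, and crypto takes 20% of that.
    "decentralized compute": COMPUTE_TAM * 0.20 * 0.20,
    # Assumption: identity is 3.5% of total AI revenue (matches the stated $8.78B);
    # crypto captures 10% of it.
    "identity": TOTAL_AI_REVENUE * 0.035 * 0.10,
    # Security modeled on bank compliance spend (58.75 / 660 of revenue); crypto takes 5%.
    "AI security": TOTAL_AI_REVENUE * (58.75 / 660) * 0.05,
}

total = sum(segments.values())
for name, value in segments.items():
    print(f"{name:24s} ${value:5.2f}B")
print(f"{'total':24s} ${total:5.2f}B")
```

Under these assumptions the segments sum to roughly $10.2B, in line with the base-case headline figure.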

Organize distributed computing resources

Cryptocurrencies can apply their immense social and financial coordination benefits to democratize access to computing, thereby solving the pain points currently plaguing AI developers. In addition to high costs and limited access to quality GPUs, AI model builders currently face other thorny issues. These include vendor lock-in, lack of security, limited compute availability, poor latency, and geofencing required by state laws.

Crypto’s ability to meet AI’s demand for GPUs stems from its ability to pool resources through token incentives. The Bitcoin network, with a token value of $850B and an equity value of $20B, is a testament to this capability. Therefore, both current Bitcoin miners and the promising decentralized GPU market have the potential to add significant value to AI by providing decentralized computing.

A useful analogy for understanding the provision of GPUs via blockchain is the power generation business. Simply put, there are entities that operate large, expensive plants that can reliably generate electricity to meet most grid needs. These base load plants have stable demand but require significant capital investment in construction, resulting in relatively low but guaranteed returns on capital. Supplementing the base load is another type of generator, the peaking plant, which provides electricity when demand exceeds base load generation capacity. This involves high-cost, small-scale energy production strategically positioned close to the demand for that energy. We expect similar dynamics to emerge in the on-demand computing space.

Bitcoin miners diversify into artificial intelligence

Bitcoin and other proof-of-work (PoW) cryptocurrencies, like AI, have high energy demands. This energy must be generated, harvested, transported, and converted into usable electricity to power mining equipment and computing clusters. The supply chain requires miners to make significant investments in power plants, power purchase agreements, grid infrastructure, and data center facilities. The monetary incentives of mining PoW cryptocurrencies have led to the emergence of many globally distributed Bitcoin miners with energy and power rights and integrated grid architecture. Much of this energy comes from lower-cost, carbon-intensive sources that society avoids. Therefore, the most compelling value proposition that Bitcoin miners can offer is low-cost energy infrastructure to power AI backend infrastructure.

Hyperscale computing providers such as AWS and Microsoft have pursued strategies of investing in vertically integrated operations and building their own energy ecosystems. Big tech companies have moved upstream, designing their own chips and sourcing their own energy, much of it renewable. Currently, data centers consume two-thirds of the renewable energy available to U.S. businesses. Microsoft and Amazon have both committed to achieving 100% renewable energy supply by 2025. However, if computing demand exceeds expectations, as some predict, the number of AI-centric data centers could double by 2027 and capital expenditures could reach three times current estimates. Big tech companies already pay $0.06-0.10/kWh for electricity, which is much more than what competitive Bitcoin miners typically pay ($0.03-0.05/kWh). If the energy demands of AI exceed the current infrastructure plans of big tech companies, then Bitcoin miners’ power cost advantage over hyperscalers could increase significantly. Miners are increasingly attracted to the high-margin AI business associated with GPU supply. Notably, Hive reported in October that its HPC and AI operations generated 15 times the revenue of Bitcoin mining on a per-megawatt basis. Other Bitcoin miners seizing the AI opportunity include Hut 8 and Applied Digital.
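To put the electricity price gap in perspective, the quoted per-kWh rates can be converted into an annual cost per megawatt of load. This is a back-of-the-envelope sketch that assumes a constant 1 MW load running every hour of the year, which is an illustrative simplification rather than a figure from the article.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, assuming continuous operation


def annual_power_cost(load_mw: float, price_per_kwh: float) -> float:
    """Annual electricity cost in USD for a constant load of load_mw megawatts."""
    return load_mw * 1_000 * HOURS_PER_YEAR * price_per_kwh


# Price ranges quoted in the text (USD per kWh).
RATES = {
    "big tech, low end": 0.06,
    "big tech, high end": 0.10,
    "Bitcoin miner, low end": 0.03,
    "Bitcoin miner, high end": 0.05,
}

for label, rate in RATES.items():
    print(f"{label:24s} ${annual_power_cost(1.0, rate):>9,.0f} per MW-year")
```

Under this assumption, each cent per kWh of price difference is worth about $87,600 per megawatt per year.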

Bitcoin miners have experienced growth in this new market, which has helped diversify revenue and improve earnings reports. During Hut 8’s Q3 2023 analyst call, CEO Jaime Leverton said: “In our HPC business, we created some momentum in the third quarter with the addition of new customers and growth with existing customers. Last week, we launched an on-demand cloud service that provides Kubernetes-based applications that can support artificial intelligence, machine learning, visual effects and rendering workloads to customers seeking HPC services from our GPUs. This service puts control in the hands of our customers while reducing provisioning time from days to minutes, which is particularly attractive for those looking for short-term HPC projects.” Hut 8’s HPC business generated $4.5 million in revenue in Q3 2023, accounting for more than 25% of the company’s revenue during the same period. Growing demand for HPC services and new products should aid the future growth of this business line, and with the Bitcoin halving approaching, HPC revenue could soon exceed mining revenue, depending on market conditions.

Although their businesses sound promising, Bitcoin miners turning to artificial intelligence may run into trouble due to a lack of data center building skills or the inability to expand power supplies. These miners may also find challenges related to operational overhead due to the cost of hiring new data center-focused sales staff. Additionally, current mining operations do not have adequate network latency or bandwidth because their optimization for cheap energy results in them being located in remote locations that often lack high-speed fiber optic connections.

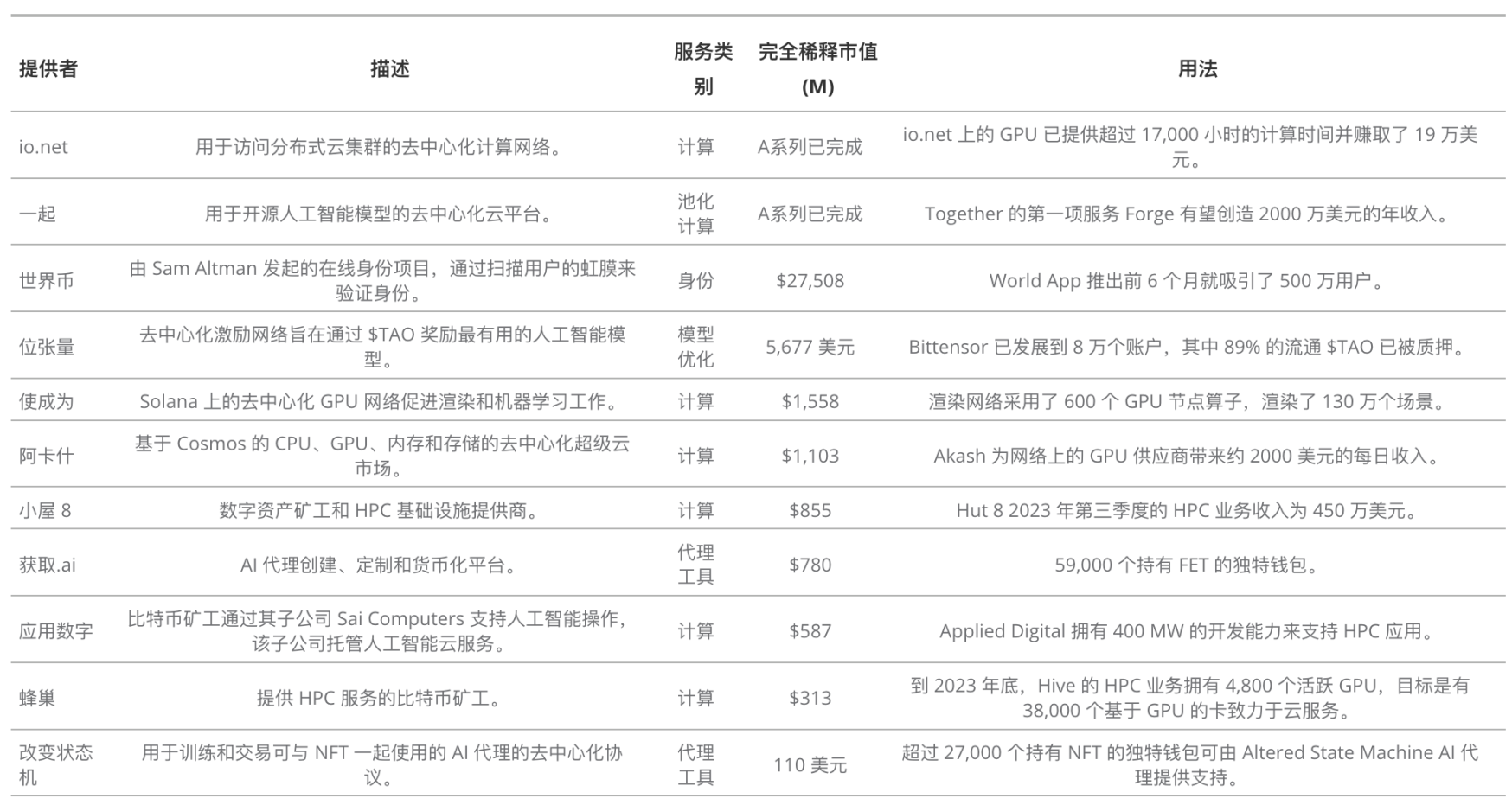

Implementing a decentralized cloud for artificial intelligence

We also see a long tail of compute-centric crypto projects that will capture a small but significant portion of the AI server resource market. These entities will coordinate computing clusters beyond the hyperscalers to deliver value propositions tailored to the needs of upstart AI builders. The benefits of decentralized computing include customizability, open access, and better contract terms. These blockchain-based computing companies enable smaller AI players to avoid the huge expense and general unavailability of high-end GPUs like the H100 and A100. Crypto AI businesses will meet demand by creating networks of physical infrastructure built around crypto token incentives, while providing proprietary IP to create software infrastructure that optimizes compute usage for AI applications. Blockchain compute projects will use market mechanisms and crypto rewards to discover cheaper compute from independent data centers, entities with excess computing power, and former PoW miners. Projects that provide decentralized computing for AI models include Akash, Render, and io.net.

Akash is a Cosmos-based project that can be considered a general-purpose decentralized “supercloud” providing CPU, GPU, memory, and storage. In effect, it is a two-sided marketplace connecting cloud service users and cloud service providers. Akash’s software is designed to coordinate compute supply and demand while creating tools that facilitate the training, fine-tuning, and running of AI models. Akash also ensures that marketplace buyers and sellers fulfill their obligations honestly. Akash is coordinated through its $AKT token, which can be used to pay for cloud services at a discount. $AKT also serves as an incentive mechanism for GPU compute providers and other network participants. On the supply side, Akash has made great strides in adding compute vendors, with 65 different vendors on the Akash marketplace. Although compute demand had been sluggish ahead of the launch of Akash’s AI Supercloud on August 31, 2023, compute buyers have spent $138,000 since the launch date.

Render, which recently moved to Solana, initially focused on connecting artists with distributed groups that would provide GPU power to render images and video. However, Render has begun to focus its decentralized GPU clusters on machine learning workloads to support deep learning models. Through network improvement proposal RNP-004, Render now has an API to connect to external networks (such as io.net), which will leverage Render’s GPU network for machine learning. A subsequent proposal from the Render community was approved to allow access to its GPUs through Beam and FEDML for machine learning tasks. As a result, Render has become a decentralized facilitator of GPU workloads, coordinated by paying providers in RNDR tokens and providing RNDR incentives to entities running the network’s backend infrastructure.

io.net GPU price comparison. Source: io.net As of January 4, 2024.

Another interesting project on Solana is io.net, which is considered a DePIN, or Decentralized Physical Infrastructure Network. The purpose of io.net is also to provide GPUs, but its focus is solely on applying GPUs to drive AI models. In addition to simply coordinating compute, io.net has added more services to its core stack. Its system claims to handle all components of AI, including creation, consumption, and fine-tuning, to properly facilitate and troubleshoot AI workloads across the network. The project also leverages other decentralized GPU networks such as Render and Filecoin as well as its own GPUs. Although io.net does not yet have a token, one is planned to launch in the first quarter of 2024.

Overcoming the bottleneck of decentralized computing

However, leveraging this distributed computing remains a challenge because of the network demands imposed by the typical 633+ TB of data required to train deep learning models. Computer systems located around the world also present new obstacles to parallel model training because of latency and differences in machine capabilities. One company that is aggressively moving into the open source foundation model market is Together, which is building a decentralized cloud to host open source AI models. Together will enable researchers, developers, and companies to leverage and improve AI through an intuitive platform that combines data, models, and computation, expanding the accessibility of AI and powering the next generation of technology companies. Working with leading academic research institutions, Together built the Together Research Computer to enable labs to centralize computing for AI research. The company also collaborated with Stanford’s Center for Research on Foundation Models (CRFM) to create the Holistic Evaluation of Language Models (HELM). HELM is a living benchmark designed to increase transparency in AI by providing a standardized framework for evaluating such foundation models.

Since Together’s inception, founder Vipul Ved Prakash has spearheaded multiple projects, including 1) GPT-JT, an open LLM with 6B parameters trained over <1 Gbps links, 2) OpenChatKit, a powerful open source foundation for creating specialized and general-purpose chatbots, and 3) RedPajama, a project to create leading open source models that aims to become the basis for research and commercial applications. The Together platform is a foundation consisting of open models on commodity hardware, a decentralized cloud, and a comprehensive developer cloud, bringing together different compute sources including consumer miners, crypto mining farms, T2-T4 cloud providers, and academic computing.

We believe that decentralized and democratized cloud computing solutions like Together can significantly cut the cost of building new models, potentially disrupting and competing with established giants such as Amazon Web Services, Google Cloud, and Azure. For context, comparing AWS Capacity Blocks and AWS p5.48xlarge instances to a Together GPU cluster configured with the same number of H100 SXM5 GPUs, Together is priced approximately 4x lower than AWS.

As open LLMs become more accurate and more widely adopted, Together may become the industry standard for open source models, much as Red Hat is for Linux. Competitors in this space include model providers Stability AI and Hugging Face, and AI cloud providers Gensyn and CoreWeave.

Augmenting AI models with cryptocurrency incentives

Blockchain and crypto incentives demonstrate that network effects, and rewards that scale with the size of the network, compel people to do useful work. In the context of Bitcoin mining, the task is to secure the Bitcoin network through the use of expensive electricity, technical manpower, and ASIC machines. This coordination of economic resources provides a Sybil defense mechanism against economic attacks on Bitcoin. In exchange, miners who coordinate these resources receive BTC. However, the greenfield for useful work in AI is much larger, and several projects are already driving improvements in AI and machine learning models.

The earliest of these projects is Numerai. Currently, Numerai can be considered a decentralized data science tournament aimed at identifying the best machine learning models for optimizing financial returns by building stock portfolios. In each epoch, anonymous Numerai participants are given access to hidden raw data and asked to use this data to build the best-performing stock portfolio. To participate, users must not only submit predictions but also stake NMR tokens behind their models’ predictions in order to prove the value of those models. Other users can also stake tokens on the model they believe performs best. The output of each staked, submitted model is then fed into a machine learning algorithm to create a meta-model that informs the Numerai One hedge fund’s investment decisions. Users who submit “inferences” with the best information coefficient or effectiveness are rewarded with NMR tokens. Meanwhile, those who staked on the worst models have their tokens slashed (confiscated and reused to reward winners).
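The stake-and-slash mechanic described above can be illustrated with a toy settlement function. This is a hypothetical sketch and not Numerai’s actual payout formula: the `Entry` type, the 25% `slash_rate`, and the rule that the slashed pool is split in proportion to stake times score are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Entry:
    name: str
    stake: float   # tokens staked behind the model
    score: float   # round score; higher is better, negative means underperformance


def settle_round(entries: list[Entry], slash_rate: float = 0.25) -> dict[str, float]:
    """Slash negative-scoring entries and redistribute the slashed tokens to winners.

    Winners share the slashed pool in proportion to stake * score (a hypothetical rule).
    If nobody has a positive score, the slashed tokens are simply not redistributed.
    """
    new_stakes: dict[str, float] = {}
    slashed_pool = 0.0
    for entry in entries:
        if entry.score < 0:
            penalty = entry.stake * slash_rate
            slashed_pool += penalty
            new_stakes[entry.name] = entry.stake - penalty
        else:
            new_stakes[entry.name] = entry.stake

    weights = {e.name: e.stake * e.score for e in entries if e.score > 0}
    total_weight = sum(weights.values())
    if total_weight > 0:
        for name, weight in weights.items():
            new_stakes[name] += slashed_pool * weight / total_weight
    return new_stakes


result = settle_round([
    Entry("model_a", stake=100.0, score=0.5),
    Entry("model_b", stake=100.0, score=-0.2),
    Entry("model_c", stake=50.0, score=0.1),
])
print(result)
```

In this toy round, `model_b` loses a quarter of its stake and the confiscated tokens flow mostly to `model_a`, which combined the largest stake with the best score. The total token supply in the round is unchanged as long as at least one entry has a positive score.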

Subnets and use cases on Bittensor. Source: https://taostats.io/api/ As of January 2, 2024.

Bittensor is a similar project that massively extends Numerai’s core concepts. Bittensor can be considered the “Bitcoin of machine intelligence” because it is a network that provides economic incentives for AI/ML models. This is done by “miners” who build AI models and “validator” entities who evaluate the quality of the output of these models. Bittensor’s architecture consists of a base network and many smaller subnetworks (subnets). Each subnet focuses on a different area of machine intelligence. Validators pose various questions or requests to miners on these subnets to assess the quality of their AI models.

The best-performing models are rewarded with the most TAO tokens, while validators are compensated for their accurate evaluation of miners. At a high level, both validators and miners must stake tokens to participate in each subnet, and each subnet’s proportion of total staking determines how many TAO tokens it receives from Bittensor’s total inflation. Therefore, each miner has an incentive not only to optimize its model to win the most rewards, but also to focus its model on the most promising AI domain subnet. Additionally, since miners and validators must maintain funds in order to participate, everyone must clear the capital cost barrier or exit the system.
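The proportional rule described above, where a subnet’s share of total stake determines its share of newly issued tokens, can be sketched as follows. This is a simplified illustration and not Bittensor’s actual emission logic; the function name, the subnet labels, and the numbers are hypothetical.

```python
def split_emission(total_emission: float, stake_by_subnet: dict[str, float]) -> dict[str, float]:
    """Allocate newly issued tokens to subnets in proportion to their staked share."""
    total_stake = sum(stake_by_subnet.values())
    if total_stake <= 0:
        raise ValueError("total stake must be positive")
    return {
        subnet: total_emission * stake / total_stake
        for subnet, stake in stake_by_subnet.items()
    }


# Hypothetical example: 100 tokens of inflation split across three subnets.
allocation = split_emission(
    100.0,
    {"text prompting": 300.0, "price prediction": 100.0, "translation": 100.0},
)
print(allocation)
```

Because the split depends only on relative stake, a subnet that attracts more staked capital pulls emissions away from the others, which is the competitive pressure the text describes.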

As of January 2024, there are 32 different subnets, each dedicated to a specific area of machine learning or AI. For example, Subnet 1 is a text-prompting LLM subnet similar to ChatGPT. On this subnet, miners run various versions of LLMs tuned to best respond to prompts from validators, who evaluate response quality. On Subnet 8, called Taoshi, miners submit short-term predictions for the price of Bitcoin and various financial assets. Bittensor also has subnets dedicated to human language translation, storage, audio, web scraping, machine translation, and image generation. Subnet creation is permissionless, and anyone with 200 TAO can create a subnet. Subnet operators are responsible for creating evaluation and reward mechanisms for each subnet’s activities. For example, Opentensor, the foundation behind Bittensor, runs Subnet 1 and recently released a model in collaboration with Cerebras to evaluate the LLM output of miners on that subnet.

While these subnets are initially fully subsidized by inflation rewards, each subnet must eventually sustain itself financially. Therefore, subnet operators and validators must coordinate to create tools that allow external users to access each subnet’s services for a fee. As inflationary TAO rewards decrease, each subnet will increasingly rely on external revenue to sustain itself. In this competitive environment, there is direct economic pressure to create the best models and to incentivize others to build profitable real-world applications on top of them. Bittensor is unlocking the potential of AI by leveraging scrappy small businesses to identify and monetize AI models. As noted Bittensor evangelist MogMachine puts it, this dynamic can be viewed as a “Darwinian competition for artificial intelligence.”

Another interesting category of projects uses crypto to incentivize the creation of AI agents that are programmed to complete tasks autonomously on behalf of humans or other computer programs. These entities are essentially adaptive computer programs designed to solve specific problems. “Agent” is a catch-all term that encompasses chatbots, automated trading strategies, game characters, and even metaverse assistants. One notable project in this space is Altered State Machine, a platform that uses NFTs to create AI agents that can be owned, powered, and trained. In Altered State Machine, users create their agents and then train them using a distributed cluster of GPUs. These agents are optimized for specific use cases. Another project, Fetch.ai, is a platform for creating agents customized to each user’s needs. Fetch.ai is also a SaaS business that allows agents to be registered and then leased or sold.

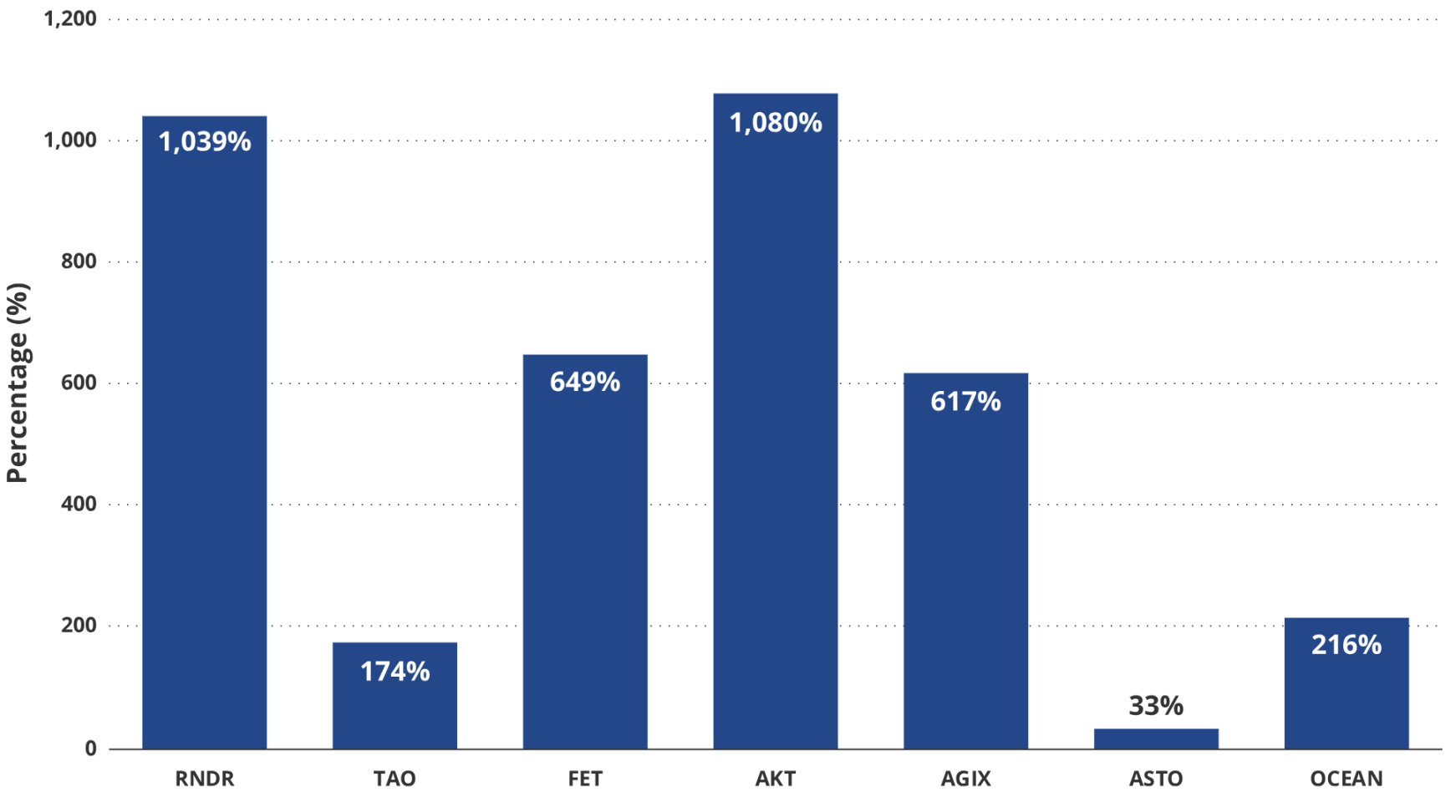

AI Token Returns from January 1, 2023

Source: Artemis XYZ As of January 10, 2024. Past performance is no guarantee of future results.

Verification via zero-knowledge (zk) proofs

2023 was a landmark year for new AI models. OpenAI launched ChatGPT, Meta launched LLAMA-2, and Google launched BERT. Because of the promise of deep learning, there were more than 18,563 AI-related startups in the United States as of June 2023. These startups and others have produced thousands of new foundation and fine-tuned models. However, in a space where $1 of every $4 in venture capital is invested in AI-related companies, the proliferation of so many new entities should raise serious questions:

Who actually creates and owns each model?

Is the output actually produced by the specified model?

Does the model really work as advertised?

What is the data source for each model and who owns that data?

Does training, fine-tuning and/or inference violate any copyright or data rights?

Both investors and users of these models should be 100% certain that they can answer these questions. Currently, many benchmarks exist for different components of LLM output, such as HumanEval for code generation, Chatbot Arena for LLM assistant tasks, and the ARC benchmark for LLM reasoning capabilities. However, despite attempts at model transparency like Hugging Face’s Open LLM Leaderboard, there is no concrete proof of a model’s validity, its ultimate provenance, or the source of its training and inference data. Not only can benchmarks be gamed, but there’s no way to determine whether a particular model is actually running (as opposed to an API that connects to another model), nor is there any guarantee that the leaderboard itself is honest.

This is where public blockchains, AI, and a cutting-edge field of mathematics called zero-knowledge (zk) proofs come together. A zk proof is an application of cryptography that allows someone to prove, to a desired level of mathematical certainty, that a statement they make about data is correct without revealing the underlying data to anyone. Statements can be simple (such as rankings) but can extend to complex mathematical calculations. For example, not only can someone demonstrate that he or she knows the relative wealth of a sample without revealing that wealth to another party, but he or she can also demonstrate the correct calculation of the mean and standard deviation of the group. Essentially, you can demonstrate that you know the data and/or that you used the data to make true assertions, without revealing the details of the data or how you performed the calculations. Outside of AI, zk proofs have been used to scale Ethereum, allowing transactions to occur off-chain on layer 2 blockchains. Recently, zk proofs have been applied to deep learning models to prove:

That specific data was used to generate the model or provide inference output (and also which data/sources were not used)

That a specific model was used to generate inferences

That the inference output has not been tampered with
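To make the idea of proving a statement without revealing the underlying data more concrete, here is a minimal sketch of a much simpler zero-knowledge-style proof than the ones used for machine learning: a non-interactive Schnorr proof that the prover knows a secret number x behind a public value y = g^x mod p. This is not the zk-SNARK machinery that ZKML projects rely on, and the tiny group parameters (P, Q, G) are toy values chosen for illustration only; they offer no real security.

```python
import hashlib
import secrets

# Toy group parameters (assumptions for illustration, NOT secure):
# P is a safe prime, Q = (P - 1) / 2 is prime, and G = 4 has order Q mod P.
P, Q, G = 2039, 1019, 4


def challenge(*values: int) -> int:
    """Derive the challenge by hashing the public transcript (Fiat-Shamir)."""
    data = "|".join(str(v) for v in values).encode()
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q


def prove(secret_x: int):
    """Return the public value y and a proof that the caller knows secret_x."""
    y = pow(G, secret_x, P)           # public value derived from the secret
    r = secrets.randbelow(Q)          # one-time random nonce
    t = pow(G, r, P)                  # commitment to the nonce
    c = challenge(G, y, t)            # challenge bound to the transcript
    s = (r + c * secret_x) % Q        # response; r masks the secret
    return y, (t, s)


def verify(y: int, proof) -> bool:
    """Check the proof using only public values; the secret is never seen."""
    t, s = proof
    c = challenge(G, y, t)
    return pow(G, s, P) == (t * pow(y, c, P)) % P
```

A verifier who receives only y and the pair (t, s) can run verify and become convinced that the prover knows x, while learning nothing that helps recover x beyond what y already reveals. Proof systems for machine learning follow the same pattern of "public statement, private witness, cheap verification," but the statement is an entire model computation rather than a single exponentiation.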

zk proofs can be published to a public, permanent blockchain and verified via smart contracts. The result is that blockchains can publicly and irrefutably prove important properties of artificial intelligence models. Two cutting-edge projects applying zk proofs to AI, a field called zero-knowledge machine learning (ZKML), are EZKL and Modulus. EZKL uses the Halo 2 proof system to generate zk-SNARKs, zero-knowledge proofs that can then be publicly verified on Ethereum’s EVM. Although the model size that EZKL can currently prove is relatively small, at about 100M parameters compared with 175B parameters for ChatGPT 4, EZKL CEO Jason Morton believes they are facing an engineering problem rather than a technological limitation. EZKL believes it can overcome the proving problem by splitting proofs for parallel execution, thereby reducing memory constraints and computation time. In fact, Jason Morton believes that one day, “validating a model will be as simple as signing a blockchain transaction.”

ZKML proofs applied to artificial intelligence can solve important pain points of AI implementation, including copyright issues and AI safety. As the New York Times’ recent lawsuit against OpenAI and Microsoft illustrates, copyright law will apply to data ownership, and AI projects will be forced to provide proof of the provenance of their data. ZKML technology could be used to quickly resolve disputes over model and data ownership in court. In fact, one of the best applications of ZKML is allowing data/model marketplaces such as Ocean Protocol and SingularityNET to prove the authenticity and validity of their listings.

AI models will eventually expand into areas where accuracy and safety are critical. It is estimated that by 2027 there will be 5.8B AI edge devices, which may include heavy machinery, robots, autonomous drones and vehicles. Since machine intelligence will be applied to things that can hurt and kill, it’s important to prove that a reputable model, built on high-quality data from reliable sources, is running on the device. While it may be economically and technically challenging to build continuous real-time proofs from these edge devices and publish them to the blockchain, it may be more feasible to validate the model upon activation or to publish proofs to the blockchain periodically. However, Zupass from the 0xPARC Foundation has built primitives derived from “proof-carrying data” that can cheaply establish proof of what happened on edge devices. Currently, this is used for event attendance, but it can be expected to migrate soon to other areas such as identity and even healthcare.

How good is your robotic surgeon’s AI model?

From the perspective of a business that could be held liable for equipment failure, having verifiable evidence that its model was not the source of a costly incident seems ideal. From an insurance perspective, it may likewise become economically necessary to validate and prove the use of reliable models trained on actual data. And in a world of AI deepfakes, relying on cameras, phones, and computers that are verified and certified via blockchain to perform various actions may become the norm. Of course, proof of the authenticity and accuracy of these devices should be posted to a public, open-source ledger to prevent tampering and fraud.

While these proofs hold great promise, they are currently limited by gas costs and computational overhead. Submitting a proof on-chain costs about 300-500k gas (about $35-58 at the current ETH price). From a computational perspective, EigenLayer’s Sreeram Kannan estimates that a computation that would cost $50 to run on AWS would cost about 1,000,000 times as much using current ZK proof technology. ZK proofs are developing much faster than anyone expected a few years ago, but there is still a long way to go before practical use cases open up. Anyone curious about the applications of ZKML can participate in a decentralized singing competition judged by a proven on-chain smart contract model and have their results permanently uploaded to the blockchain.
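The cost figures above follow from simple arithmetic: gas used, times the gas price, times the ETH price. The sketch below shows that calculation. The gas price (46.5 gwei) and ETH price ($2,500) are assumptions chosen only because they are consistent with the range quoted above; they do not come from the original article, and the function name is hypothetical.

```python
GWEI_PER_ETH = 1_000_000_000  # 1 ETH = 10^9 gwei


def proof_cost_usd(gas_used: int, gas_price_gwei: float, eth_price_usd: float) -> float:
    """Dollar cost of a transaction: gas * gas price (converted to ETH) * ETH price."""
    cost_in_eth = gas_used * gas_price_gwei / GWEI_PER_ETH
    return cost_in_eth * eth_price_usd


def zk_compute_cost_usd(native_cost_usd: float, overhead: float = 1_000_000) -> float:
    """Scale a native compute cost by the quoted ~1,000,000x ZK proving overhead."""
    return native_cost_usd * overhead


# Illustrative inputs (assumed, not from the source).
ASSUMED_GAS_PRICE_GWEI = 46.5
ASSUMED_ETH_PRICE_USD = 2_500.0

low = proof_cost_usd(300_000, ASSUMED_GAS_PRICE_GWEI, ASSUMED_ETH_PRICE_USD)
high = proof_cost_usd(500_000, ASSUMED_GAS_PRICE_GWEI, ASSUMED_ETH_PRICE_USD)
print(f"On-chain proof submission: ${low:.2f} to ${high:.2f}")
print(f"ZK cost of a $50 AWS job: ${zk_compute_cost_usd(50):,.0f}")
```

Under these assumed inputs the submission cost comes out at roughly $35 to $58, and the $50 AWS job becomes a $50 million proving job, which illustrates why computational overhead, more than gas, is the binding constraint today.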

Establishing humanity through blockchain-based identity

One possible consequence of widespread, advanced machine intelligence is that autonomous agents will become the most prolific Internet users. The release of AI agents will most likely result in entire networks being clogged by purposeful bot-generated spam or even by harmless task-based agents (“get rid of spam”). Solana’s data traffic reached 100 gigabytes per second as bots competed for an arbitrage opportunity worth approximately $100,000. Imagine the flood of web traffic that will occur when AI agents can hold millions of corporate websites hostage and extort billions of dollars. This suggests that the future Internet will impose restrictions on non-human traffic. One of the best ways to limit such attacks is to impose an economic tax on the overuse of cheap resources. But how do we determine the best framework for charging for spam, and how do we establish humanity?

Fortunately, blockchains already have built-in defenses against AI bot-style Sybil attacks. A combination of metering and charging non-human users would be an ideal implementation, while requiring slightly heavier computational work (like Hashcash) would deter bots. In terms of proof of humanity, blockchains have long struggled to overcome anonymity in order to unlock undercollateralized lending and other reputation-based activities.
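The Hashcash idea mentioned above can be sketched in a few lines: a client must find a nonce whose hash has a required number of leading zero bits before a request is accepted, which is cheap to verify but costly to produce at scale. This is a simplified sketch, not the original Hashcash stamp format (which uses SHA-1 and a structured header); the function names and the challenge string are hypothetical.

```python
import hashlib
from itertools import count


def leading_zero_bits(digest: bytes) -> int:
    """Count how many zero bits the digest starts with."""
    value = int.from_bytes(digest, "big")
    return len(digest) * 8 - value.bit_length()


def stamp_digest(challenge: str, nonce: int) -> bytes:
    """Hash the challenge together with a candidate nonce."""
    return hashlib.sha256(f"{challenge}:{nonce}".encode()).digest()


def mint(challenge: str, bits: int) -> int:
    """Search for the first nonce meeting the difficulty (the costly step)."""
    for nonce in count():
        if leading_zero_bits(stamp_digest(challenge, nonce)) >= bits:
            return nonce


def check(challenge: str, nonce: int, bits: int) -> bool:
    """Verify a stamp with a single hash (the cheap step)."""
    return leading_zero_bits(stamp_digest(challenge, nonce)) >= bits


# A client pays roughly 2**12 hashes on average; the server pays one.
nonce = mint("request-42", bits=12)
print(nonce, check("request-42", nonce, bits=12))
```

Each additional bit of difficulty doubles the expected work for the sender while leaving verification at a single hash, so a network can tune the cost of bulk traffic without charging occasional human users anything noticeable.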

One approach gaining momentum for proving identity is the use of JSON Web Tokens (JWTs). A JWT is an OAuth credential, similar to a cookie, that is generated when you log in to websites such as Google. It allows you to reveal your Google identity when you visit sites on the Internet while signed in to Google. Created by the L1 blockchain Sui, zkLogin allows users to link their wallet private keys and actions to a Google or Facebook account that generates a JWT. ZKP2P extends this concept further, leveraging JWTs to permissionlessly allow users to exchange fiat currencies for cryptocurrencies on the Base blockchain. This is done by confirming peer-to-peer cash transfers via the payment app Venmo, which, when confirmed via an email JWT, unlocks USDC tokens escrowed in a smart contract. Both projects are strongly tied to off-chain identities. For example, zkLogin connects wallet addresses to Google identities, while ZKP2P is only available to Venmo’s KYC’d users. While neither offers guarantees robust enough to enable on-chain identity, they create important building blocks that others can use.
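For readers unfamiliar with JWTs, the sketch below shows their basic shape: three base64url-encoded parts (header, claims, signature) joined by dots, where the signature lets a verifier trust the identity claims inside. It is a generic illustration using a shared-secret HS256 signature and only the Python standard library; identity providers such as Google sign their tokens with asymmetric keys instead, and this sketch does not involve any zero-knowledge proof or reproduce how zkLogin or ZKP2P work internally. The issuer, subject, and key values are hypothetical.

```python
import base64
import hashlib
import hmac
import json
from typing import Optional


def b64url(data: bytes) -> str:
    """Base64url-encode without padding, as JWTs require."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode()


def b64url_decode(text: str) -> bytes:
    """Decode base64url, restoring the stripped padding first."""
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def sign_jwt(claims: dict, key: bytes) -> str:
    """Build header.payload.signature using HMAC-SHA256 (HS256)."""
    header = {"alg": "HS256", "typ": "JWT"}
    parts = [b64url(json.dumps(p, separators=(",", ":")).encode()) for p in (header, claims)]
    signing_input = ".".join(parts)
    signature = hmac.new(key, signing_input.encode(), hashlib.sha256).digest()
    return f"{signing_input}.{b64url(signature)}"


def verify_jwt(token: str, key: bytes) -> Optional[dict]:
    """Return the claims if the signature is valid, otherwise None."""
    header_b64, payload_b64, signature_b64 = token.split(".")
    expected = hmac.new(key, f"{header_b64}.{payload_b64}".encode(), hashlib.sha256).digest()
    if not hmac.compare_digest(expected, b64url_decode(signature_b64)):
        return None
    return json.loads(b64url_decode(payload_b64))


# Hypothetical issuer and subject, for illustration only.
token = sign_jwt({"iss": "https://accounts.example", "sub": "user-123"}, b"demo-key")
print(verify_jwt(token, b"demo-key"))
```

The claims carry the off-chain identity (issuer and subject), and any change to them invalidates the signature. That binding between a signed identity claim and a verifier is the building block that wallet-linking schemes can then wrap in additional cryptography.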

While many projects seek to confirm the human identity of blockchain users, the boldest is Worldcoin, founded by OpenAI CEO Sam Altman. Although controversial because of the dystopian “Orb” machines with which users must scan their irises, Worldcoin is moving towards an immutable identity system that cannot be easily faked or overwhelmed by machine intelligence. This is because Worldcoin creates a cryptographic identifier based on each person’s unique eye “fingerprint,” which can be sampled to ensure uniqueness and authenticity. After verification, the user receives a digital passport called a World ID on the Optimism blockchain, allowing the user to prove their humanity on the blockchain. Best of all, a person’s unique signature is never revealed and cannot be traced because it is encrypted. World ID simply asserts that the blockchain address belongs to a human being. Projects like Checkmate already link World IDs with social media profiles to ensure users’ uniqueness and authenticity. In an AI-led future Internet, explicit demonstrations of humanity in every online interaction may become commonplace. When artificial intelligence overcomes the limitations of CAPTCHAs, blockchain applications can prove identities cheaply, quickly, and concretely.

Contributing to artificial intelligence through blockchain technology

There is no doubt that we are in the early stages of the artificial intelligence revolution. However, if the growth trajectory of machine intelligence matches the boldest predictions, AI will have to be pushed to excel while its potential harms are kept in check. We believe that cryptocurrencies are the ideal trellis on which to properly “train” the bountiful but potentially insidious artificial intelligence plant. Blockchain’s set of AI solutions can increase the output of machine intelligence creators by providing them with more responsive, flexible, and potentially cheaper decentralized computing. It also incentivizes builders who can create better models while providing a financial incentive for others to build useful businesses using these AI models. Equally important, model owners can demonstrate the validity of their models while demonstrating that protected data sources were not used. For AI users, crypto applications can be useful for confirming that the models they are running meet safety standards. For others, blockchain and cryptocurrencies may be the tangle of punishments and rewards that ties down the Gulliver that artificial intelligence is bound to become.

Source: VanEck Research, project website, as of January 15, 2024.

Disclosure: VanEck holds a position in Together through our strategic partnership with early-stage venture capital manager Cadenza, who was kind enough to contribute to the “Overcoming the Bottlenecks in Decentralized Computing” section.