From 0 to 1: Deploying your first AI application on Gonka

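- Key point: The Gonka decentralized AI network makes it possible to deploy applications at low cost.

- Key elements:

- Compatible with the OpenAI API, so migration costs are minimal.

- Uses GNK tokens to incentivize compute nodes.

- A Spanish learning app deployed in 30 minutes.

- Market impact: Lowers the barrier to entry and the cost of building AI applications.

- Timeliness: medium-term impact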

Introduction

In just 30 minutes, we launched a Spanish-learning AI assistant that generates an unlimited supply of practice questions and grades answers in real time. Its backend doesn't run on an expensive centralized cloud; it relies entirely on Gonka, an emerging decentralized AI computing network. Below is the complete hands-on walkthrough, the core code, and a live demo.

A New Paradigm for Decentralized AI Computing

Gonka is a proof-of-work AI computing network. It load-balances OpenAI-style inference requests across verified hardware providers, building a new decentralized AI service ecosystem. The network uses GNK tokens to pay and incentivize providers, and it relies on randomized inspection (spot checks) to deter fraud. If you already know the OpenAI API, you have what you need to connect to Gonka and deploy an application.
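To see why randomized inspection can deter cheating, consider a deliberately simplified model (an illustrative assumption, not Gonka's published protocol or parameters): each completed inference is independently re-checked with probability p, and a dishonest provider forges k results. The chance that at least one forgery is caught is 1 - (1 - p)^k, which approaches 1 quickly as k grows.

```typescript
// Simplified spot-check model (illustrative assumption, not Gonka's actual protocol):
// each result is independently re-verified with probability `checkRate`.
// Returns the probability that at least one of `fakedResults` forged results is caught.
function detectionProbability(checkRate: number, fakedResults: number): number {
  if (checkRate < 0 || checkRate > 1) throw new RangeError("checkRate must be in [0, 1]");
  if (!Number.isInteger(fakedResults) || fakedResults < 0) {
    throw new RangeError("fakedResults must be a non-negative integer");
  }
  // P(no forged result is checked) = (1 - p)^k, so P(caught) is its complement.
  return 1 - Math.pow(1 - checkRate, fakedResults);
}

// With a 1% check rate, forging 500 results is caught about 99.3% of the time.
const caughtProbability = detectionProbability(0.01, 500);
console.log(caughtProbability.toFixed(3)); // "0.993"
```

Even a low inspection rate makes sustained cheating very likely to be detected, which is what lets a network keep verification overhead small.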

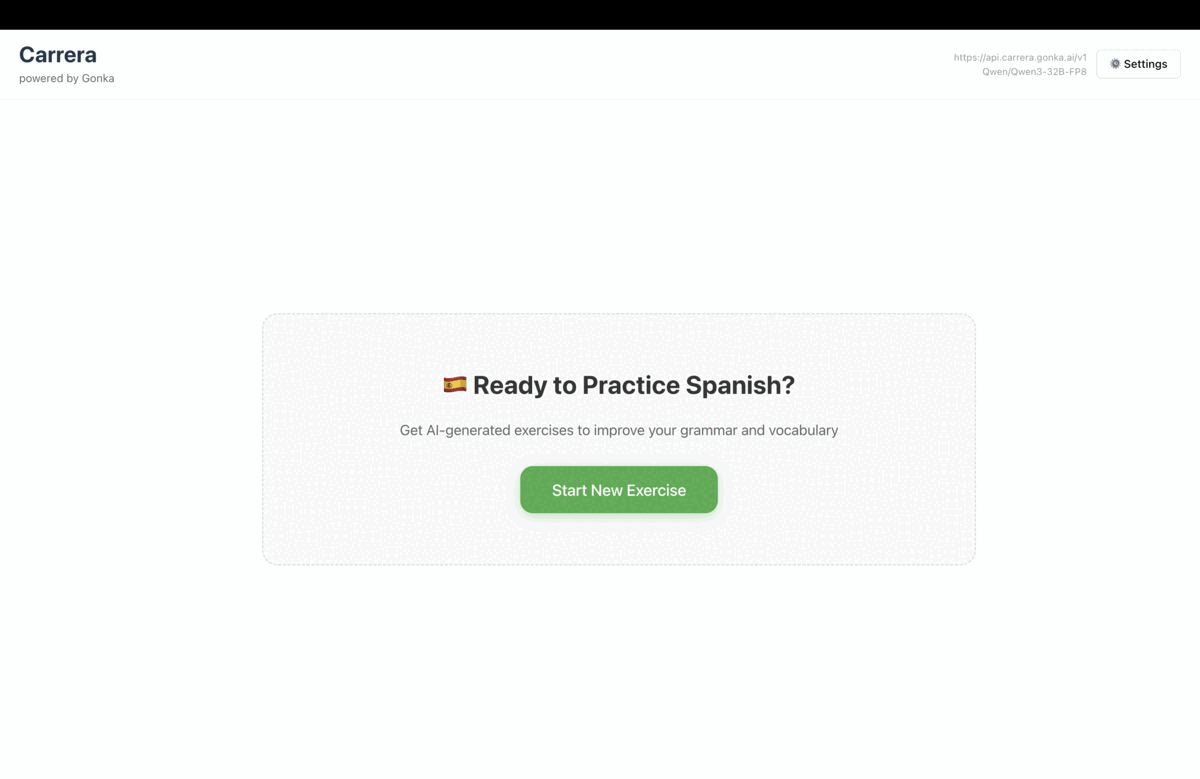

Real-world case study: Intelligent Spanish learning application

We will build a Spanish learning application that continuously generates personalized practice exercises. Imagine this use case:

- After the user clicks "Start New Exercise", the AI generates a cloze sentence containing a single blank.

- The user enters an answer and clicks "Check"; the AI immediately returns a score and a personalized explanation.

- The system automatically moves on to the next exercise, creating an immersive learning cycle.
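The practice loop above maps naturally onto a small state machine. The sketch below is a hypothetical model of that flow (the state and event names are ours, not taken from the Carrera source): generate an exercise, wait for the user's answer, show the grade, and advance.

```typescript
// Hypothetical model of the practice loop; names are illustrative, not from the repo.
type Exercise = { text: string; answer: string };
type Grade = { pass: boolean; explanation: string };

type LoopState =
  | { kind: "idle" }
  | { kind: "generating" }
  | { kind: "answering"; exercise: Exercise }
  | { kind: "grading"; exercise: Exercise; userAnswer: string }
  | { kind: "graded"; exercise: Exercise; grade: Grade };

type LoopEvent =
  | { type: "start" }                         // user clicked "Start New Exercise"
  | { type: "generated"; exercise: Exercise } // model returned a cloze sentence
  | { type: "check"; userAnswer: string }     // user clicked "Check"
  | { type: "graded"; grade: Grade }          // model returned a verdict
  | { type: "next" };                         // automatic advance to the next exercise

// Pure transition function; events that don't apply in the current state are ignored.
function step(state: LoopState, event: LoopEvent): LoopState {
  switch (event.type) {
    case "start":
      return state.kind === "idle" ? { kind: "generating" } : state;
    case "generated":
      return state.kind === "generating" ? { kind: "answering", exercise: event.exercise } : state;
    case "check":
      return state.kind === "answering"
        ? { kind: "grading", exercise: state.exercise, userAnswer: event.userAnswer }
        : state;
    case "graded":
      return state.kind === "grading"
        ? { kind: "graded", exercise: state.exercise, grade: event.grade }
        : state;
    case "next":
      // Closing the loop: a graded exercise leads straight into generating the next one.
      return state.kind === "graded" ? { kind: "generating" } : state;
    default:
      return state;
  }
}
```

Keeping the transitions pure makes the loop easy to test; the UI only dispatches events and triggers the model calls on entering the "generating" and "grading" states.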

Online demo: carrera.gonka.ai

Technical Architecture Analysis

The application uses the classic separation between front end and back end:

- React front end: built with Vite and fully compatible with the OpenAI API format

- Node proxy layer: only about 50 lines of core code, responsible for signing and forwarding requests

The only difference from a conventional OpenAI integration is an extra server-side signing step, which keeps the private key off the client. Everything else is a standard OpenAI chat completions call.

Quick Start Guide

Environment requirements: Node.js 20+

Clone the repository

git clone git@github.com:product-science/carrera.git

cd carrera

Create a Gonka account and set environment variables

# Create an account using the inferenced CLI

# See the Quickstart for how to download the CLI:

# https://gonka.ai/developer/quickstart/#2-create-an-account

# ACCOUNT_NAME can be any locally unique name, serving as a readable identifier for the account key pair.

ACCOUNT_NAME="carrera-quickstart"

NODE_URL=http://node2.gonka.ai:8000

inferenced create-client "$ACCOUNT_NAME" \
  --node-address "$NODE_URL"

# Export private key (for server use only)

export GONKA_PRIVATE_KEY=$(inferenced keys export "$ACCOUNT_NAME" --unarmored-hex --unsafe)
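Because the proxy signs every request with GONKA_PRIVATE_KEY, a quick sanity check on the exported value can catch copy-paste mistakes before the server starts. The sketch assumes a 32-byte key exported as hex (64 characters), which is what `keys export --unarmored-hex` typically yields for secp256k1 keys in Cosmos SDK-style CLIs; treat the expected length as an assumption, and the helper names as hypothetical.

```typescript
// Hypothetical startup check for GONKA_PRIVATE_KEY.
// Assumption: a 32-byte key exported as hex (64 hex characters), optionally "0x"-prefixed.
function looksLikeHexPrivateKey(value: string | undefined, expectedBytes = 32): boolean {
  if (!value) return false;
  const hex = value.trim().replace(/^0x/i, "");
  return hex.length === expectedBytes * 2 && /^[0-9a-f]+$/i.test(hex);
}

// Fail fast before starting the server; the caller passes process.env.GONKA_PRIVATE_KEY.
function assertKeyShape(value: string | undefined): void {
  if (!looksLikeHexPrivateKey(value)) {
    // Never log the key itself; report only that it is malformed.
    throw new Error("GONKA_PRIVATE_KEY is missing or not a 64-character hex string");
  }
}
```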

Start the proxy service

cd gonka-proxy

npm install && npm run build

NODE_URL=http://node2.gonka.ai:8000 ALLOWED_ORIGINS=http://localhost:5173 PORT=8080 npm start

Health Check

curl http://localhost:8080/healthz

# Expected response: {"ok":true,"ready":true}
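If you script the startup (for example in CI), you may want to wait until the proxy reports ready before launching the front end. A minimal sketch that relies only on the `/healthz` response shape shown above and Node 20's global `fetch`; the helper names and retry settings are hypothetical.

```typescript
// True only when the body matches the expected /healthz shape: {"ok":true,"ready":true}.
function isProxyReady(body: unknown): boolean {
  if (typeof body !== "object" || body === null) return false;
  const b = body as { ok?: unknown; ready?: unknown };
  return b.ok === true && b.ready === true;
}

// Poll /healthz until ready or until the attempts run out (uses Node 20's global fetch).
async function waitForProxy(baseUrl: string, attempts = 20, delayMs = 500): Promise<boolean> {
  for (let i = 0; i < attempts; i++) {
    try {
      const res = await fetch(`${baseUrl.replace(/\/+$/, "")}/healthz`);
      if (res.ok && isProxyReady(await res.json())) return true;
    } catch {
      // Connection refused while the proxy is still starting; retry after a pause.
    }
    await new Promise((resolve) => setTimeout(resolve, delayMs));
  }
  return false;
}

// Usage: const ok = await waitForProxy("http://localhost:8080");
```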

Running the front-end application

cd web

npm install

VITE_DEFAULT_API_BASE_URL=http://localhost:8080/v1 npm run dev

In the app, open "Settings"; the base URL is already filled in. Select a model (e.g., Qwen/Qwen3-235B-A22B-Instruct-2507-FP8; the list of currently available models is at https://node1.gonka.ai:8443/api/v1/models) and click "Test Connection".
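The models endpoint can also be queried from code, for example to populate a model picker. The sketch assumes the response follows the OpenAI list format (`{ "data": [{ "id": "..." }] }`); we have not verified Gonka's exact schema, so the parser is defensive and the helper name is hypothetical.

```typescript
type ModelEntry = { id?: unknown };

// Extract model IDs from an OpenAI-style list response ({ data: [{ id }] }).
// Assumption: /api/v1/models uses this shape; a bare array is accepted as a fallback.
function extractModelIds(payload: unknown): string[] {
  const list: unknown = Array.isArray(payload)
    ? payload
    : (payload as { data?: unknown } | null)?.data;
  if (!Array.isArray(list)) return [];
  return list
    .map((m: ModelEntry) => m?.id)
    .filter((id): id is string => typeof id === "string" && id.length > 0);
}

// Usage (network call, Node 20+ or a browser):
// const res = await fetch("https://node1.gonka.ai:8443/api/v1/models");
// console.log(extractModelIds(await res.json()));
```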

Core technology implementation

The Gonka proxy is the heart of the integration. It signs each request with the account's private key and forwards the OpenAI-style call to the network. Once you deploy such a proxy for your application, the application can start sending inference requests to Gonka. In practice, you should also add authentication so that only authorized users can request inference.
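The authentication recommended above could start as a shared-secret check on the `Authorization` header, which the front end already sends as `Bearer <apiKey>` when an API key is set. A minimal sketch; the function names and the server-side allowlist of client keys are our assumptions, not part of the Carrera proxy.

```typescript
// Compare two strings without short-circuiting on the first mismatch,
// so response timing does not reveal how many leading characters matched.
// (Unequal lengths return early; that only leaks the key length.)
function constantTimeEqual(a: string, b: string): boolean {
  if (a.length !== b.length) return false;
  let diff = 0;
  for (let i = 0; i < a.length; i++) diff |= a.charCodeAt(i) ^ b.charCodeAt(i);
  return diff === 0;
}

// Hypothetical check for the proxy: accept "Bearer <key>" only if <key> is in the allowlist.
function isAuthorized(authHeader: string | undefined, allowedKeys: readonly string[]): boolean {
  const match = /^Bearer\s+(\S+)$/i.exec(authHeader ?? "");
  if (!match) return false;
  const presented = match[1];
  // Compare against every key so the loop's duration doesn't depend on which key matched.
  let ok = false;
  for (const key of allowedKeys) ok = constantTimeEqual(presented, key) || ok;
  return ok;
}

// In an Express handler this might look like:
// if (!isAuthorized(req.header("authorization"), clientKeys)) {
//   return res.status(401).json({ error: { message: "Unauthorized" } });
// }
```

On Node, `crypto.timingSafeEqual` over equal-length buffers is a standard alternative to the hand-written comparison.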

Environment variables used by the proxy service:

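// gonka-proxy/src/env.ts

// Minimal stand-ins for the repository's `str`/`num` helpers (assumed behavior; the real ones may differ).
// Required string variable: fail fast at startup if it is missing.
function str(name: string): string {
  const value = process.env[name];
  if (!value) throw new Error(`Missing required environment variable: ${name}`);
  return value;
}

// Optional numeric variable with a default.
function num(name: string, fallback: number): number {
  const raw = process.env[name];
  const value = raw ? Number(raw) : fallback;
  if (!Number.isFinite(value)) throw new Error(`Environment variable ${name} must be a number`);
  return value;
}

export const env = {
  PORT: num("PORT", 8080),
  GONKA_PRIVATE_KEY: str("GONKA_PRIVATE_KEY"),
  NODE_URL: str("NODE_URL"),
  // Comma-separated list of CORS origins; defaults to "*" (any origin).
  ALLOWED_ORIGINS: (process.env.ALLOWED_ORIGINS ?? "*")
    .split(",")
    .map((s) => s.trim())
    .filter(Boolean),
};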
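ALLOWED_ORIGINS is parsed into a list with `*` as the default, but how the proxy applies that list is not shown. One plausible rule, sketched below with hypothetical helper names, is to echo the request's `Origin` back in `Access-Control-Allow-Origin` only when it matches an entry, and to allow everything when the list contains `*`.

```typescript
// Hypothetical CORS decision based on the parsed ALLOWED_ORIGINS list.
// Returns the value for Access-Control-Allow-Origin, or null to omit the header.
function allowOriginHeader(requestOrigin: string | undefined, allowed: readonly string[]): string | null {
  if (allowed.includes("*")) return "*";
  if (!requestOrigin) return null; // same-origin or non-browser request: no CORS header needed
  return allowed.includes(requestOrigin) ? requestOrigin : null;
}

// Mirrors the env.ts parsing: comma-separated, trimmed, empty entries dropped, "*" by default.
function parseOrigins(raw: string | undefined): string[] {
  return (raw ?? "*").split(",").map((s) => s.trim()).filter(Boolean);
}
```

When echoing a specific origin, also send `Vary: Origin` so that shared caches don't serve one origin's response to another.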

Create the client with the Gonka OpenAI TypeScript SDK (gonka-openai). Go and Python versions are also available, and support for more languages is on the way; check the repository for updates:

// gonka-proxy/src/gonka.ts

import { GonkaOpenAI, resolveEndpoints } from "gonka-openai";
import { env } from "./env";

export async function createGonkaClient() {
  const endpoints = await resolveEndpoints({ sourceUrl: env.NODE_URL });
  return new GonkaOpenAI({ gonkaPrivateKey: env.GONKA_PRIVATE_KEY, endpoints });
}
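`createGonkaClient` is async, presumably because `resolveEndpoints` queries NODE_URL for the current endpoint list, and the `/healthz` handler's `ready` flag suggests the client is initialized once at startup. A generic way to do that is to memoize the initialization promise, so concurrent callers share one attempt and a failed attempt can be retried. The `once` helper below is hypothetical, and a stand-in factory replaces the SDK so the sketch runs on its own.

```typescript
// Memoize an async initializer: concurrent callers share one in-flight attempt,
// and a rejected attempt is forgotten so the next call can retry.
function once<T>(init: () => Promise<T>): () => Promise<T> {
  let pending: Promise<T> | undefined;
  return () => {
    if (!pending) {
      pending = init().catch((err) => {
        pending = undefined; // allow a retry after failure
        throw err;
      });
    }
    return pending;
  };
}

// With the real factory this would be: const getClient = once(createGonkaClient);
// Here a stand-in factory counts how often initialization actually runs.
let initCalls = 0;
const getClient = once(async () => {
  initCalls++;
  return { label: "stand-in client" };
});
```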

Expose an OpenAI-compatible chat completion endpoint (/v1/chat/completions):

// gonka-proxy/src/server.ts
// (excerpt: `app`, `ready`, `client`, and `ChatCompletionRequest` are defined or imported elsewhere, not shown)

app.get("/healthz", (_req, res) => res.json({ ok: true, ready }));

app.post("/v1/chat/completions", async (req, res) => {
  // Reject requests until the Gonka client has been initialized.
  if (!ready || !client) {
    return res.status(503).json({ error: { message: "Proxy not ready" } });
  }

  const body = req.body as ChatCompletionRequest;
  if (!body || !body.model || !Array.isArray(body.messages)) {
    return res.status(400).json({ error: { message: "The 'model' and 'messages' parameters must be provided" } });
  }

  try {
    const streamRequested = Boolean(body.stream);
    // Drop the client's `stream` flag; it is set explicitly on the upstream call.
    const { stream: _ignored, ...rest } = body;

    if (!streamRequested) {
      const response = await client.chat.completions.create({ ...rest, stream: false });
      return res.status(200).json(response);
    }

    // …

server.ts also passes streaming responses (SSE) through, so clients that support streaming can enable it by setting `stream: true`. The proxy also ships with a Dockerfile for reproducible builds and easy deployment:

# gonka-proxy/Dockerfile

# ---- Build stage ----
FROM node:20-alpine AS build
WORKDIR /app
COPY package*.json tsconfig.json ./
COPY src ./src
RUN npm ci && npm run build

# ---- Runtime stage ----
FROM node:20-alpine
WORKDIR /app
ENV NODE_ENV=production
COPY --from=build /app/package*.json ./
# Install production dependencies only
RUN npm ci --omit=dev
COPY --from=build /app/dist ./dist
EXPOSE 8080
CMD ["node", "dist/server.js"]
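The streaming pass-through mentioned above is elided from the server.ts excerpt. OpenAI-compatible streaming uses Server-Sent Events: each chunk goes out as a `data: <json>` line followed by a blank line, and the stream ends with `data: [DONE]`. A minimal sketch of that framing; the helper names and the `SseSink` interface are hypothetical, chosen so the logic can be tested without Express, and the chunk source is assumed to be async-iterable as in the OpenAI SDK when `stream: true` is set.

```typescript
// Format one chunk as an SSE event in the OpenAI streaming style.
function sseFrame(chunk: unknown): string {
  return `data: ${JSON.stringify(chunk)}\n\n`;
}

const SSE_DONE = "data: [DONE]\n\n";

// Minimal writable surface; an Express `res` satisfies it.
interface SseSink {
  setHeader(name: string, value: string): unknown;
  write(data: string): unknown;
  end(): unknown;
}

// Forward an async stream of completion chunks to the client, then signal completion.
async function pipeAsSse(chunks: AsyncIterable<unknown>, res: SseSink): Promise<void> {
  res.setHeader("Content-Type", "text/event-stream");
  res.setHeader("Cache-Control", "no-cache");
  res.setHeader("Connection", "keep-alive");
  for await (const chunk of chunks) res.write(sseFrame(chunk));
  res.write(SSE_DONE);
  res.end();
}

// Possible use in the handler: await pipeAsSse(await client.chat.completions.create({ ...rest, stream: true }), res);
```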

Technical Analysis: Front-End (Vendor-Agnostic Design)

The React app stays OpenAI-compatible without needing to know anything about Gonka's implementation. It simply calls the OpenAI-style endpoint and renders the practice loop described above.

All backend interaction happens in web/src/llmClient.ts, which makes a single call to the chat completions endpoint:

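// web/src/llmClient.ts
// (excerpt: `s` holds the user's settings; `messages`, `temperature`, and `signal` come from the enclosing function)

// Normalize the base URL (strip trailing slashes) and target the chat completions endpoint.
const url = `${s.baseUrl.replace(/\/+$/, "")}/chat/completions`;
const headers: Record<string, string> = { "Content-Type": "application/json" };
if (s.apiKey) headers.Authorization = `Bearer ${s.apiKey}`; // API key is optional

const res = await fetch(url, {
  method: "POST",
  headers,
  body: JSON.stringify({ model: s.model, messages, temperature }),
  signal,
});

if (!res.ok) {
  // Surface the server's error body to help with debugging.
  const body = await res.text().catch(() => "");
  throw new Error(`LLM Error ${res.status}: ${body}`);
}

const data = await res.json();
// Take the first choice's message content; fall back to an empty string.
const text = data?.choices?.[0]?.message?.content ?? "";
return { text, raw: data };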
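The excerpt above is the body of a larger function whose signature isn't shown. Pulling the request construction into a pure helper makes the URL normalization and the optional auth header easy to unit-test. `ChatSettings`, `ChatMessage`, and `buildChatRequest` are hypothetical names mirroring the fields the excerpt reads (`baseUrl`, `apiKey`, `model`).

```typescript
type ChatMessage = { role: "system" | "user" | "assistant"; content: string };
type ChatSettings = { baseUrl: string; apiKey?: string; model: string };
type ChatRequest = {
  url: string;
  init: { method: "POST"; headers: Record<string, string>; body: string };
};

// Build the URL and fetch options for POST {baseUrl}/chat/completions, as the excerpt does:
// strip trailing slashes, and add Bearer auth only when a key is set.
function buildChatRequest(s: ChatSettings, messages: ChatMessage[], temperature: number): ChatRequest {
  const url = `${s.baseUrl.replace(/\/+$/, "")}/chat/completions`;
  const headers: Record<string, string> = { "Content-Type": "application/json" };
  if (s.apiKey) headers.Authorization = `Bearer ${s.apiKey}`;
  return {
    url,
    init: { method: "POST", headers, body: JSON.stringify({ model: s.model, messages, temperature }) },
  };
}

// Usage: const { url, init } = buildChatRequest(settings, msgs, 0.7);
//        const res = await fetch(url, { ...init, signal: AbortSignal.timeout(30_000) });
```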

To choose an API provider, set the base URL in the "Settings" dialog at the top of the page. You can also choose the model there; the default is Qwen/Qwen3-235B-A22B-Instruct-2507-FP8. For offline local testing, set the base URL to `mock:`; for real calls, set it to your proxy address (pre-filled if you followed the Quick Start):

// web/src/settings.ts

export function getDefaultSettings(): Settings {
  // Use the build-time base URL if one was provided; otherwise fall back to offline mock mode.
  const prodBase = (import.meta as any).env?.VITE_DEFAULT_API_BASE_URL || "";
  const baseUrlDefault = prodBase || "mock:";
  return { baseUrl: baseUrlDefault, apiKey: "", model: "Qwen/Qwen3-235B-A22B-Instruct-2507-FP8" };
}
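How the app handles the `mock:` base URL isn't shown in the excerpt. One way to support offline testing, sketched here with hypothetical names, is to short-circuit before any network call and return a canned completion in the same `{ text, raw }` shape as the real client, so the rest of the pipeline (JSON extraction, rendering) runs unchanged.

```typescript
// Hypothetical offline mode: any base URL starting with "mock:" skips the network.
function isMockBase(baseUrl: string): boolean {
  return baseUrl.trim().toLowerCase().startsWith("mock:");
}

// A canned OpenAI-style response whose content matches the exercise schema used by the prompt.
function mockCompletion(): { text: string; raw: unknown } {
  const exercise = {
    type: "cloze",
    text: "Mañana ____ a la playa con mis amigos del trabajo.",
    answer: "voy",
    instructions: "Fill in the blank with the correct present-tense form of 'ir'.",
    difficulty: "beginner",
  };
  const text = JSON.stringify(exercise);
  return { text, raw: { choices: [{ message: { role: "assistant", content: text } }] } };
}
```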

Application prompts and grading

We use two prompt templates:

Generation prompt: "You are a Spanish teacher... Output only in strict JSON format... Generate a cloze sentence with exactly one blank (____) and include an answer, explanation, and difficulty level."

Grading prompt: "You are a Spanish teacher grading answers... Output in strict JSON format, including a pass/fail verdict and an explanation."

Code excerpt for the generation prompt:

// web/src/App.tsx
// (ChatMsg is a { role, content } message type defined elsewhere in the app)

const sys: ChatMsg = {
  role: "system",
  content: `You are a Spanish teacher who designs interactive exercises.
Output only strict JSON (no explanatory text, no code fences). The object must contain the following keys:
{
  "type": "cloze",
  "text": "<a Spanish sentence with exactly one blank marked ____>",
  "answer": "<the single correct answer for the blank>",
  "instructions": "<clear instructions>",
  "difficulty": "beginner|intermediate|advanced"
}

Content guidelines:
- Use natural, everyday situations
- Leave exactly one blank (use the exact ____ marker in the sentence)
- Keep sentences between 8 and 20 words
- Sentences must be in Spanish only; explanations may be in English
- Vary the practice focus: ser/estar, preterite vs. imperfect, subjunctive, por/para, agreement, common prepositions, core vocabulary`,
};

We added several few-shot examples to help the model learn the output format, then parse the JSON and render the exercise. Grading also uses strict JSON output: a pass/fail result plus a brief explanation.
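The few-shot examples and the grading call are not shown in the article. A common way to assemble them, sketched below, is to place worked (request, ideal reply) pairs between the system prompt and the real request, and to give the grader the sentence, the expected answer, and the learner's answer. The example content, the grading prompt wording, and the `pass`/`explanation` field names are our assumptions.

```typescript
type ChatMsg = { role: "system" | "user" | "assistant"; content: string };

// Few-shot prompting: each example is a (request, ideal JSON reply) pair placed before the real request.
function buildGenerationMessages(sys: ChatMsg, examples: Array<{ request: string; reply: object }>): ChatMsg[] {
  const shots = examples.flatMap((ex): ChatMsg[] => [
    { role: "user", content: ex.request },
    { role: "assistant", content: JSON.stringify(ex.reply) },
  ]);
  return [sys, ...shots, { role: "user", content: "Generate a new exercise." }];
}

// Hypothetical grading request; the field names in the requested JSON are assumptions.
function buildGradingMessages(sentence: string, expected: string, userAnswer: string): ChatMsg[] {
  return [
    {
      role: "system",
      content:
        "You are a Spanish teacher grading answers. Output strict JSON only: " +
        '{"pass": true|false, "explanation": "<one or two sentences>"}',
    },
    { role: "user", content: JSON.stringify({ sentence, expected, userAnswer }) },
  ];
}
```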

The parser is fault-tolerant: it strips Markdown code fences and, if direct parsing fails, falls back to the text between the first `{` and the last `}`.

// web/src/App.tsx

// Fault-tolerant JSON extractor for model output that may be wrapped in prose or code fences.
function extractJsonObject<TExpected>(raw: string): TExpected {
  const trimmed = String(raw ?? "").trim();

  // If the model wrapped its answer in a Markdown code fence, keep only the fenced content.
  const fence = trimmed.match(/```(?:json)?\s*([\s\S]*?)\s*```/i);
  const candidate = fence ? fence[1] : trimmed;

  const tryParse = (s: string) => {
    try {
      return JSON.parse(s) as TExpected;
    } catch {
      return undefined;
    }
  };

  // 1) Try the candidate as-is.
  const direct = tryParse(candidate);
  if (direct) return direct;

  // 2) Fall back to the span from the first "{" to the last "}".
  const start = candidate.indexOf("{");
  const end = candidate.lastIndexOf("}");
  if (start !== -1 && end !== -1 && end > start) {
    const block = candidate.slice(start, end + 1);
    const parsed = tryParse(block);
    if (parsed) return parsed;
  }

  throw new Error("Unable to parse JSON from model response");
}
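`extractJsonObject` recovers some JSON object, but the cast to `TExpected` is not checked at runtime. A type guard that enforces the schema from the generation prompt (exactly one `____`, a non-empty answer, a known difficulty) lets the app reject a malformed exercise and request a new one. A minimal sketch with hypothetical names:

```typescript
type Difficulty = "beginner" | "intermediate" | "advanced";
type ClozeExercise = {
  type: "cloze";
  text: string;
  answer: string;
  instructions: string;
  difficulty: Difficulty;
};

const DIFFICULTIES: readonly string[] = ["beginner", "intermediate", "advanced"];

// Runtime check for the schema requested in the system prompt.
function isClozeExercise(x: unknown): x is ClozeExercise {
  if (typeof x !== "object" || x === null) return false;
  const { type, text, answer, instructions, difficulty } = x as Record<string, unknown>;
  return (
    type === "cloze" &&
    typeof text === "string" &&
    text.split("____").length === 2 && // exactly one ____ marker
    typeof answer === "string" &&
    answer.trim().length > 0 &&
    typeof instructions === "string" &&
    typeof difficulty === "string" &&
    DIFFICULTIES.includes(difficulty)
  );
}

// Usage: const obj = extractJsonObject<unknown>(text); if (!isClozeExercise(obj)) { /* regenerate */ }
```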

Follow-up suggestions for developers:

• Add custom exercise types and grading rules

• Enable a streaming UI (the proxy already supports SSE)

• Add authentication and rate limiting to the Gonka proxy

• Deploy the proxy on your own infrastructure and set VITE_DEFAULT_API_BASE_URL when building the web app.
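For the rate limiting suggested above, a per-client token bucket is a common starting point. The sketch keeps state in memory (so it only covers a single proxy instance) and takes the clock as a parameter to make it testable; the class name, the client key (for example client IP or API key), and the limits are all assumptions.

```typescript
// In-memory token bucket per client key. Each bucket holds up to `capacity` tokens
// and refills continuously at `refillPerSec`; every request spends one token.
class TokenBucketLimiter {
  private readonly buckets = new Map<string, { tokens: number; updatedMs: number }>();
  private readonly capacity: number;
  private readonly refillPerSec: number;

  constructor(capacity: number, refillPerSec: number) {
    this.capacity = capacity;
    this.refillPerSec = refillPerSec;
  }

  // Returns true if the request is allowed, false if the client should get HTTP 429.
  tryConsume(clientKey: string, nowMs: number = Date.now()): boolean {
    const b = this.buckets.get(clientKey) ?? { tokens: this.capacity, updatedMs: nowMs };
    // Refill based on elapsed time, capped at capacity.
    const elapsedSec = Math.max(0, nowMs - b.updatedMs) / 1000;
    b.tokens = Math.min(this.capacity, b.tokens + elapsedSec * this.refillPerSec);
    b.updatedMs = nowMs;
    const allowed = b.tokens >= 1;
    if (allowed) b.tokens -= 1;
    this.buckets.set(clientKey, b);
    return allowed;
  }
}
```

A multi-instance deployment would need shared state (for example a central store) instead of the in-process `Map`.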

Summary and Outlook

Why choose Gonka?

- Cost advantage: Compared to traditional cloud services, decentralized computing significantly reduces inference costs.

- Key protection: Requests are signed by the server-side proxy, so the private key is never exposed to clients.

- Compatibility: Fully compatible with the OpenAI ecosystem, with extremely low migration costs.

- Reliability: Distributed networks ensure high service availability.

Gonka offers AI developers a smooth path into decentralized computing. With the integration approach described in this article, developers can keep their existing development practices while gaining the cost advantages and technical benefits of a decentralized network. As decentralized AI infrastructure matures, this development model is expected to become standard practice for the next generation of AI applications. Gonka will also launch more practical features and work with developers to explore further AI use cases, including but not limited to intelligent education, content generation platforms, personalized recommendation systems, and automated customer service.

Original link: https://what-is-gonka.hashnode.dev/build-a-productionready-ai-app-on-gonka-endtoend-guide

About Gonka.ai

Gonka is a decentralized network designed to provide efficient AI computing power, maximizing the use of global GPU computing power to accomplish meaningful AI workloads. By eliminating centralized gatekeepers, Gonka provides developers and researchers with permissionless access to computing resources, while rewarding all participants through its native token, GNK.

Gonka was incubated by Product Science Inc., a US AI developer founded by the Liberman siblings, Web 2 industry veterans and former core product directors at Snap Inc. In 2023 the company raised $18 million from investors including Coatue Management (an OpenAI investor), Slow Ventures (a Solana investor), K5, Insight, and Benchmark Partners. Early contributors to the project include well-known names in the Web 2 and Web 3 space, such as 6 Blocks, Hard Yaka, Gcore, and Bitfury.
