A Complete Guide to Gonka Miner Deployment

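- Core takeaway: Gonka uses PoW 2.0 to put GPU computing power to work mining through AI tasks.

- Key elements:

- AI inference and training replace meaningless hash computation.

- Rewards are distributed in proportion to each participant's computing power contribution.

- A reputation system penalizes cheating and rewards honest behavior.

- Market impact: Helps turn decentralized AI computing power into a market-priced resource.

- Time horizon: Long-term impact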

Preface

Welcome to mining on the Gonka decentralized AI network! Gonka uses a Proof of Work 2.0 consensus mechanism that dedicates nearly 100% of its computing power to AI inference and training tasks, rather than to the meaningless hashing of traditional blockchains. As a miner (Host), you earn rewards by contributing GPU computing power while also supplying useful compute to the global AI ecosystem.

What is Gonka Mining?

Core Concepts of Mining

- AI-driven mining: Your GPU performs real AI inference and training tasks instead of meaningless computation

- Sprint mechanism: Hosts take part in time-limited computing races and complete the tasks using Transformer models

- Voting weight: The number of successful calculations determines your network voting rights and task allocation ratio

- Randomized verification: Only 1-10% of tasks require verification, significantly reducing overhead

- Reputation system: Honest behavior increases reputation points, and cheating behavior will be severely punished
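To illustrate how randomized verification and reputation can interact, the minimal Python sketch below assumes a simple rule. Each completed task is independently chosen for verification with a probability between 1% and 10%. A higher reputation, expressed as a hypothetical score in [0, 1], pulls that probability toward the 1% floor. The linear mapping and the function names are illustrative assumptions, not Gonka's actual formula.

```python
import random

MIN_RATE = 0.01  # lower end of the 1-10% verification range
MAX_RATE = 0.10  # upper end of the range


def verification_rate(reputation: float) -> float:
    """Map a hypothetical reputation score in [0, 1] to a sampling rate.

    Assumption: linear interpolation, so a new host (0.0) is checked at 10%
    and a fully trusted host (1.0) at 1%.
    """
    r = min(max(reputation, 0.0), 1.0)  # clamp out-of-range scores
    return MAX_RATE - (MAX_RATE - MIN_RATE) * r


def select_for_verification(task_ids, reputation, rng=None):
    """Return the subset of task IDs randomly chosen for verification."""
    rng = rng or random.Random()
    rate = verification_rate(reputation)
    return [t for t in task_ids if rng.random() < rate]


if __name__ == "__main__":
    rng = random.Random(42)
    tasks = list(range(10_000))
    for rep in (0.0, 0.5, 1.0):
        picked = select_for_verification(tasks, rep, rng)
        print(f"reputation={rep:.1f} rate={verification_rate(rep):.3f} verified={len(picked)}")
```

Because each task is sampled independently, a cheating host cannot predict which results will be checked. That unpredictability lets a low average rate still act as a deterrent.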

Reward Mechanism

- Receive rewards based on the proportion of computing power contribution

- Earn extra rewards by verifying other hosts' results

- Hosts with a long record of honest behavior are verified less often and therefore operate more efficiently

- Rewards for participating in geographically distributed training
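A sketch can make proportional rewards concrete. The Python below splits an integer reward pool among hosts in proportion to their contributions, for example the number of successful computations each host completed in a Sprint. The pool size, the host names, and the largest-remainder rounding rule are illustrative assumptions. The extra rewards for verification are not modeled.

```python
from fractions import Fraction


def split_rewards(epoch_reward: int, contributions: dict) -> dict:
    """Split an integer reward pool in proportion to each host's contribution.

    Leftover units from integer division go to the hosts with the largest
    fractional remainders, so the payouts always sum to epoch_reward.
    """
    total = sum(contributions.values())
    if total == 0:
        return {host: 0 for host in contributions}
    # Exact rational shares avoid floating-point drift.
    shares = {h: Fraction(epoch_reward * c, total) for h, c in contributions.items()}
    payouts = {h: int(s) for h, s in shares.items()}  # floor (shares are non-negative)
    leftover = epoch_reward - sum(payouts.values())
    by_remainder = sorted(shares, key=lambda h: shares[h] - payouts[h], reverse=True)
    for h in by_remainder[:leftover]:
        payouts[h] += 1
    return payouts


if __name__ == "__main__":
    # Hypothetical hosts and successful-computation counts for one Sprint.
    print(split_rewards(1_000, {"host-a": 600, "host-b": 300, "host-c": 100}))
```

The sketch uses exact fractions rather than floats so that no reward units are created or lost through rounding.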

Hardware requirements

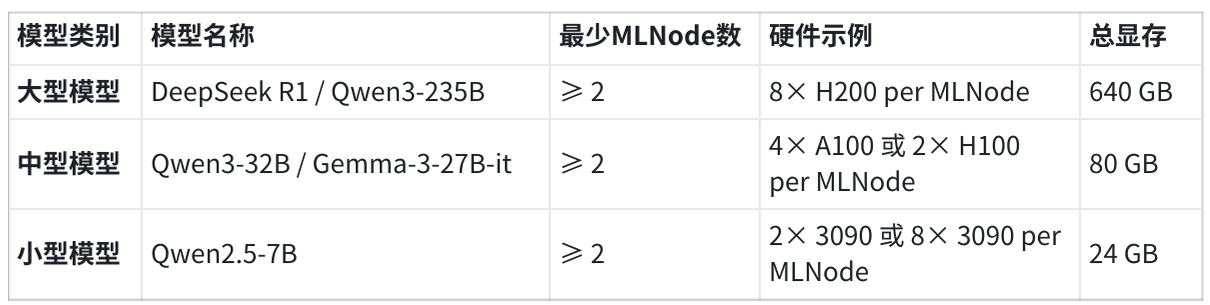

Supported model categories

The protocol currently supports the following model categories:

Optimal reward configuration

To achieve the highest rewards and maintain reliability, each network node should serve all three model categories, with at least 2 MLNodes per category. This setup:

- Improves protocol-level redundancy and fault tolerance

- Enhances model-level validation performance

- Aligns with the planned logic for future reward expansion
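The recommended layout can be checked mechanically: exactly three categories, with at least two MLNodes serving each one. This Python sketch assumes you keep a simple mapping from MLNode name to the category it serves. The category labels in the usage example are hypothetical placeholders for the real category names.

```python
from collections import Counter

REQUIRED_CATEGORIES = 3     # "all three model categories"
MIN_NODES_PER_CATEGORY = 2  # "at least 2 MLNodes per category"


def check_layout(mlnodes: dict, categories: list) -> list:
    """Return a list of problems with an MLNode layout (empty list means OK).

    mlnodes maps an MLNode name to the model category it serves.
    """
    problems = []
    if len(categories) != REQUIRED_CATEGORIES:
        problems.append(f"expected {REQUIRED_CATEGORIES} categories, got {len(categories)}")
    counts = Counter(mlnodes.values())
    for cat in categories:
        if counts[cat] < MIN_NODES_PER_CATEGORY:
            problems.append(
                f"category {cat!r}: {counts[cat]} MLNode(s), need {MIN_NODES_PER_CATEGORY}"
            )
    return problems


if __name__ == "__main__":
    cats = ["category-1", "category-2", "category-3"]  # hypothetical labels
    layout = {"ml-1": "category-1", "ml-2": "category-1", "ml-3": "category-2"}
    for problem in check_layout(layout, cats):
        print(problem)
```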

NetworkNode Server Requirements

- CPU: 16 cores (amd64)

- Memory: 64 GB+ RAM

- Storage: 1 TB NVMe SSD

- Network: 100 Mbps minimum (1 Gbps recommended)

MLNode server requirements

- System RAM of at least 1.5 times the GPU VRAM

- 16-core CPU

- NVIDIA Container Toolkit (CUDA 12.6-12.9)

- NetworkNode and MLNode can be deployed on the same server
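The RAM guideline for MLNodes is a ratio between system memory and GPU memory. Gonka's official hardware notes state it as at least 1.5x RAM relative to GPU VRAM. The Python sketch below assumes that direction and sums VRAM across all GPUs on the MLNode server. Both points are our reading, so confirm them against the current documentation.

```python
RAM_TO_VRAM_FACTOR = 1.5  # assumed: system RAM >= 1.5 x combined GPU VRAM


def min_system_ram_gb(gpu_vram_gb: list, factor: float = RAM_TO_VRAM_FACTOR) -> float:
    """Minimum system RAM (GB) for a server with the given per-GPU VRAM sizes."""
    return factor * sum(gpu_vram_gb)


def meets_ram_requirement(system_ram_gb: float, gpu_vram_gb: list) -> bool:
    return system_ram_gb >= min_system_ram_gb(gpu_vram_gb)


if __name__ == "__main__":
    # Example: four 24 GB cards (e.g. RTX 3090) need at least 144 GB of RAM.
    print(min_system_ram_gb([24, 24, 24, 24]))
```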

Open Ports

- 5000: Tendermint P2P communication

- 26657: Tendermint RPC (querying the blockchain, broadcasting transactions)

- 8000: Application service (configurable)
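Before registering, confirm that these ports are reachable. The Python sketch below attempts a plain TCP connection to each port. Run it from a different machine than the node so that firewalls and NAT are actually exercised. Port 8000 is only the default, so pass your own mapping if you changed it.

```python
import socket

# Default ports from the list above (8000 is configurable).
GONKA_PORTS = {5000: "Tendermint P2P", 26657: "Tendermint RPC", 8000: "Application service"}


def is_port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, unreachable, DNS failure, ...
        return False


def check_ports(host: str, ports=GONKA_PORTS, timeout: float = 2.0) -> dict:
    return {port: is_port_open(host, port, timeout) for port in ports}


if __name__ == "__main__":
    import sys

    target = sys.argv[1] if len(sys.argv) > 1 else "127.0.0.1"
    for port, ok in check_ports(target).items():
        print(f"{port:>5}  {GONKA_PORTS[port]:<20} {'open' if ok else 'closed/filtered'}")
```

A successful connection only shows that something is listening. It does not prove the service behind the port is healthy.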

Environmental Preparation

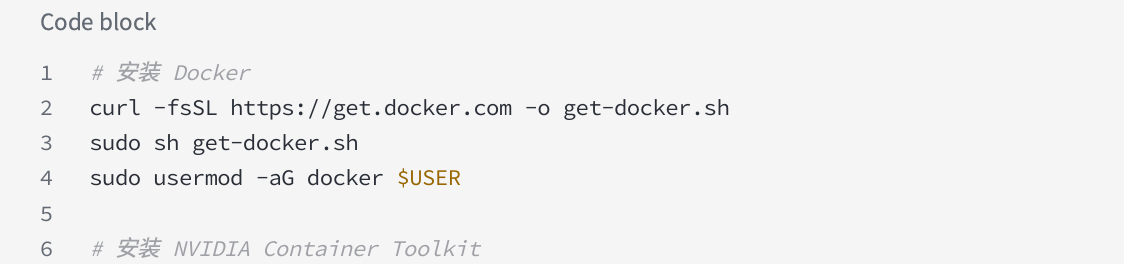

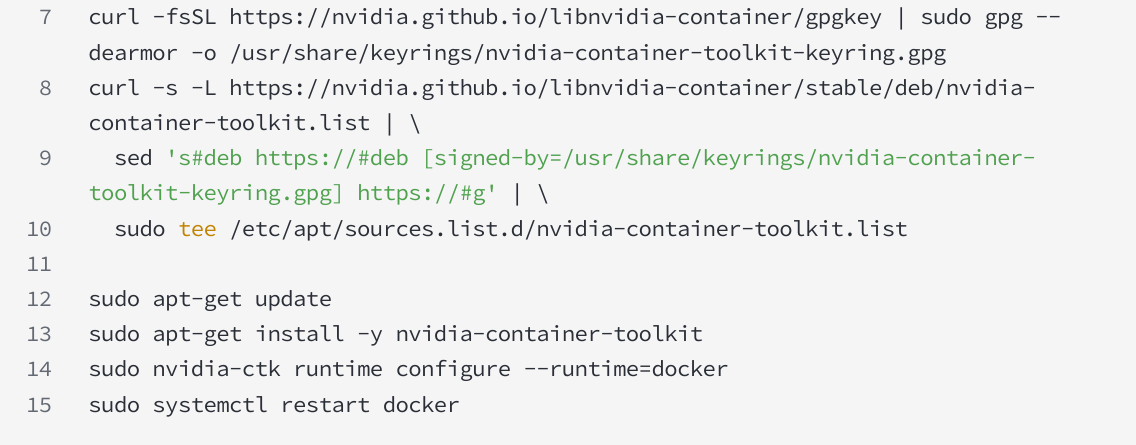

Install Docker and NVIDIA Container Toolkit

Ubuntu/Debian:

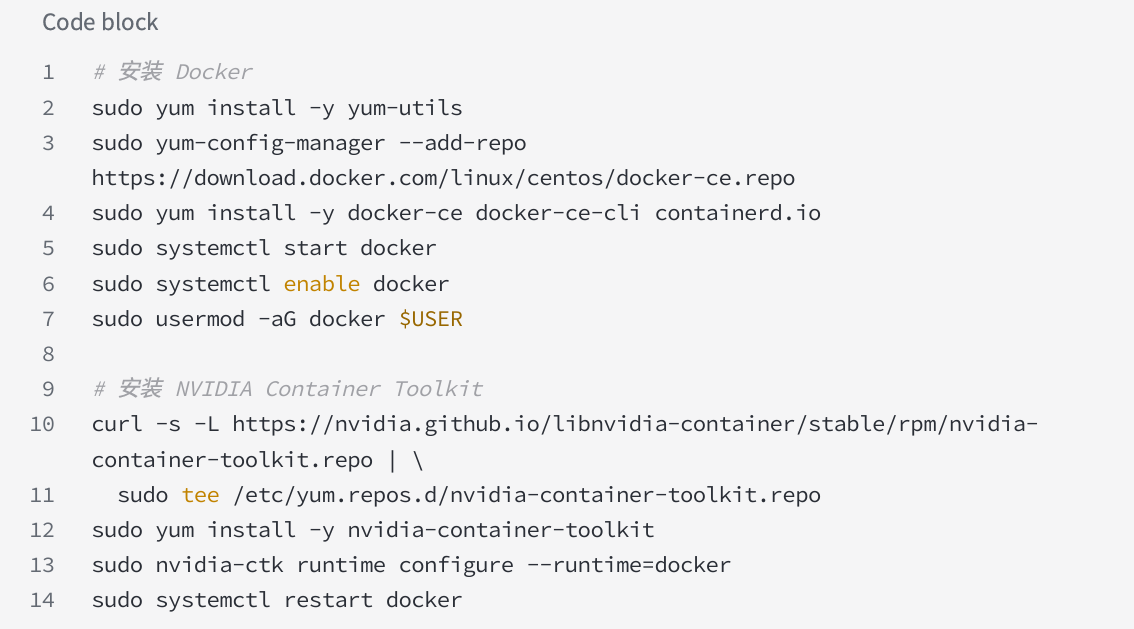

CentOS/RHEL:

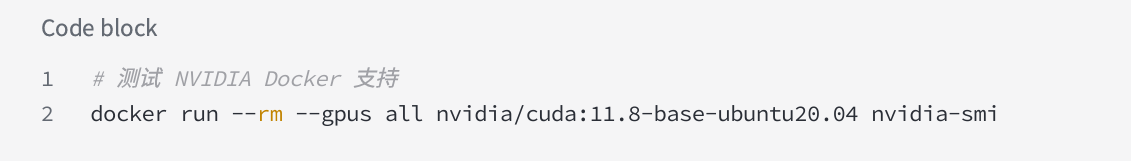

Verifying GPU support

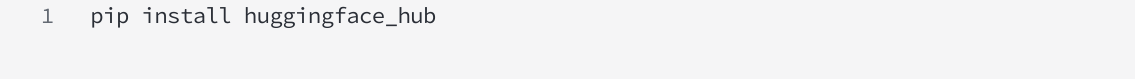

Install the Hugging Face CLI

Deployment Guide

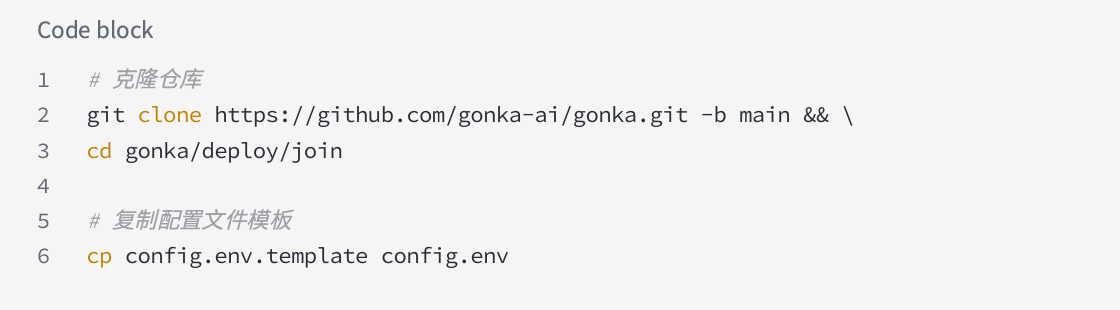

Download the deployment file

Install the CLI tool on the local machine

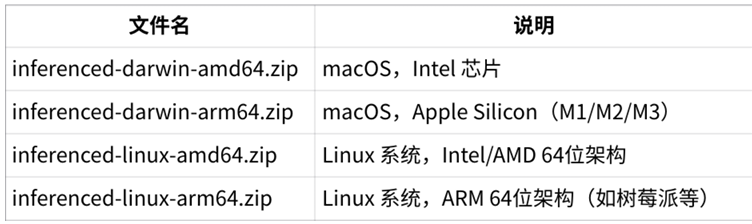

Choose the CLI build that matches your machine

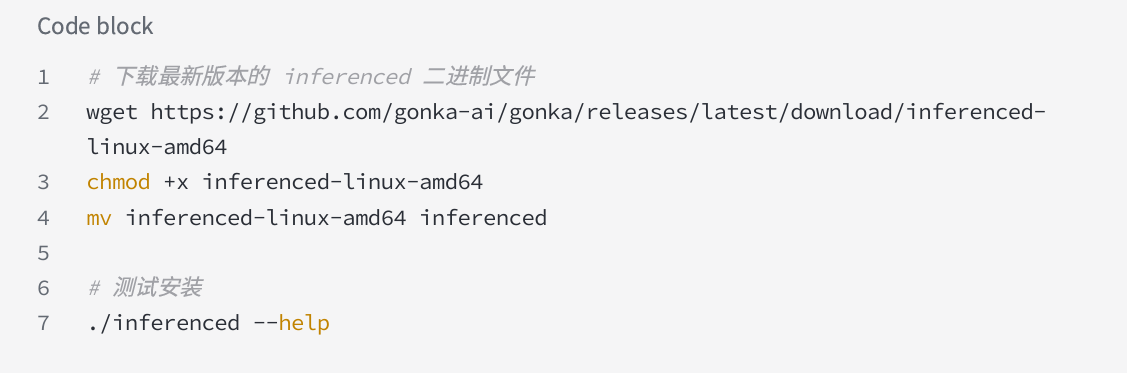

Download and install the inferenced CLI on your secure local machine (not the server). Linux amd64 is used as the example:

Note for macOS users: You may need to allow the downloaded binary to run in System Settings → Privacy & Security.

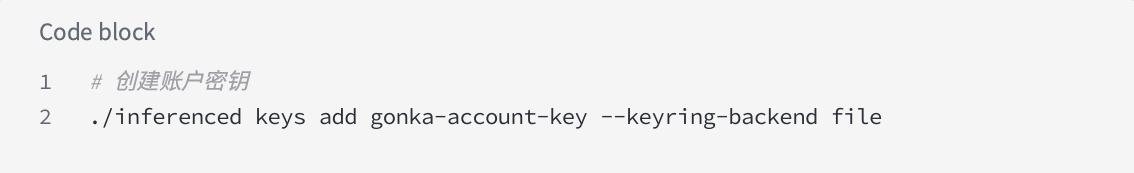

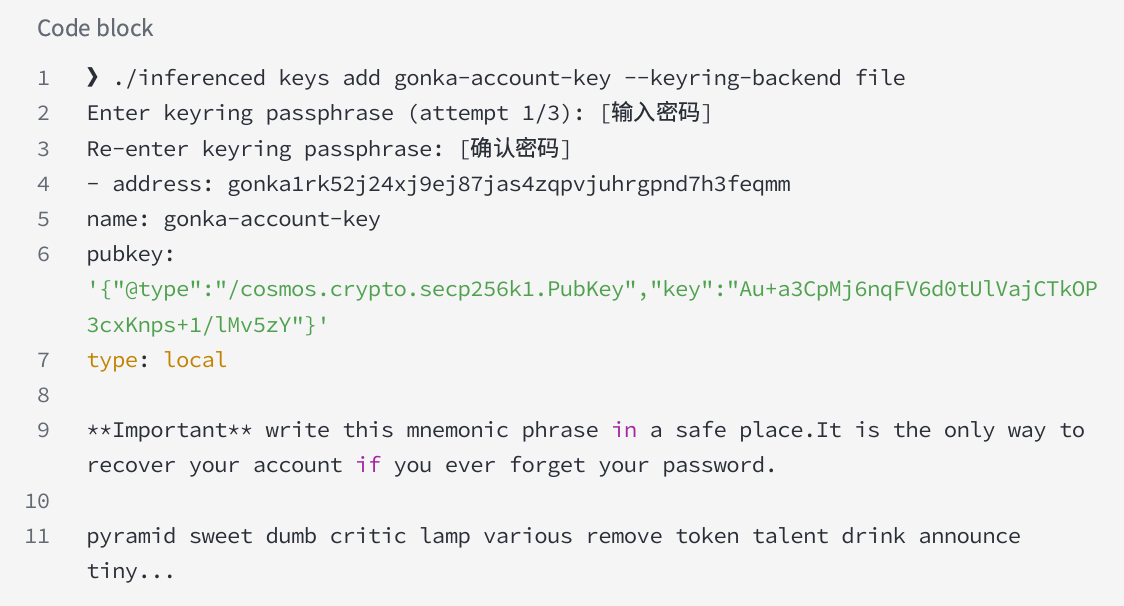

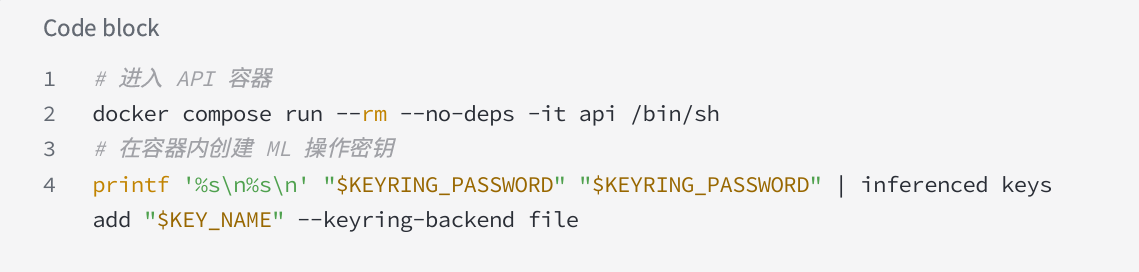
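Picking the right build comes down to your OS and CPU architecture. This Python sketch normalizes both into an "os-arch" string such as linux-amd64 using only the standard platform module. The string format is a hypothetical convention for matching release assets. Check the actual file names on the release page.

```python
import platform

# Normalize the various names platform.machine() can return.
ARCH_ALIASES = {"x86_64": "amd64", "amd64": "amd64", "aarch64": "arm64", "arm64": "arm64"}


def cli_target(system: str = None, machine: str = None) -> str:
    """Return a hypothetical 'os-arch' label, e.g. 'linux-amd64' or 'darwin-arm64'."""
    system = (system or platform.system()).lower()    # 'Linux' -> 'linux', 'Darwin' -> 'darwin'
    machine = (machine or platform.machine()).lower()
    arch = ARCH_ALIASES.get(machine)
    if arch is None:
        raise ValueError(f"unsupported architecture: {machine}")
    return f"{system}-{arch}"


if __name__ == "__main__":
    print(cli_target())
```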

Create an account key on the local machine (cold wallet)

⚠ IMPORTANT: Perform this step on a secure local machine, not on your server.

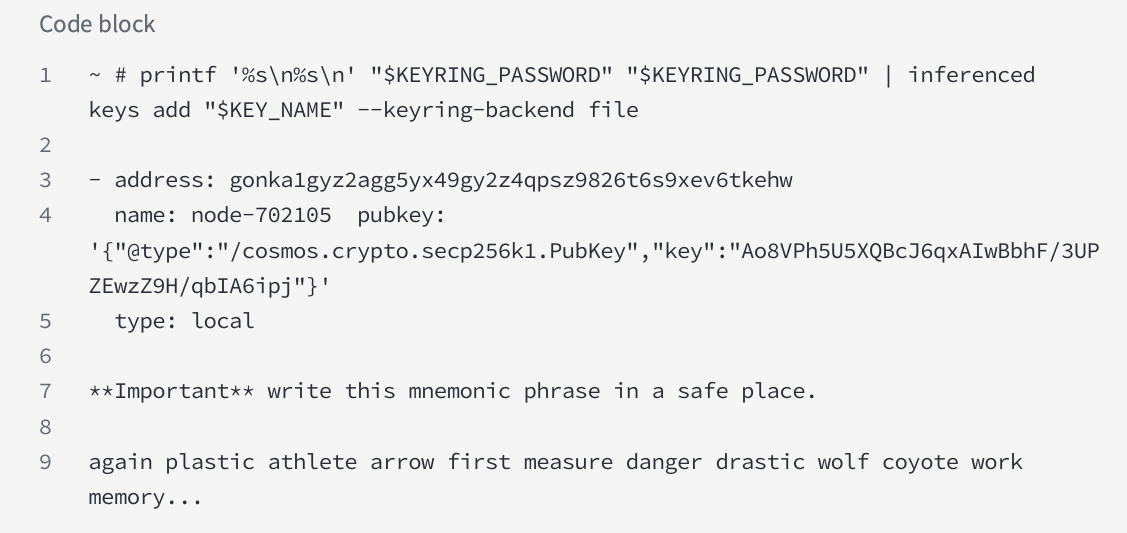

Example output:

EXTREMELY IMPORTANT: Write down your recovery phrase and store it in a secure offline location. This is the only way to recover your account keys.

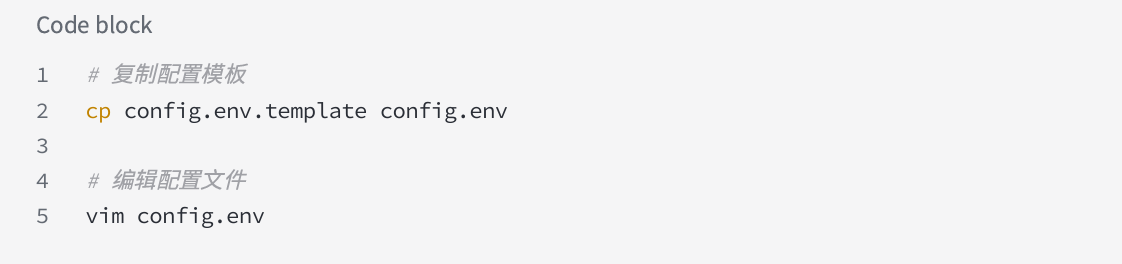

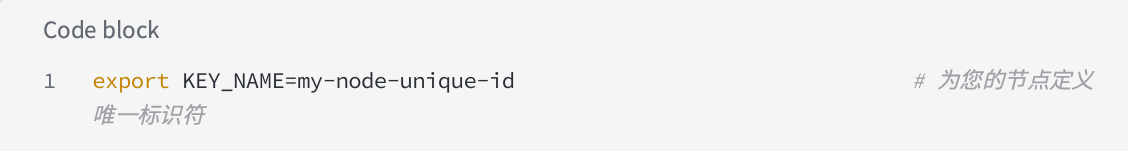

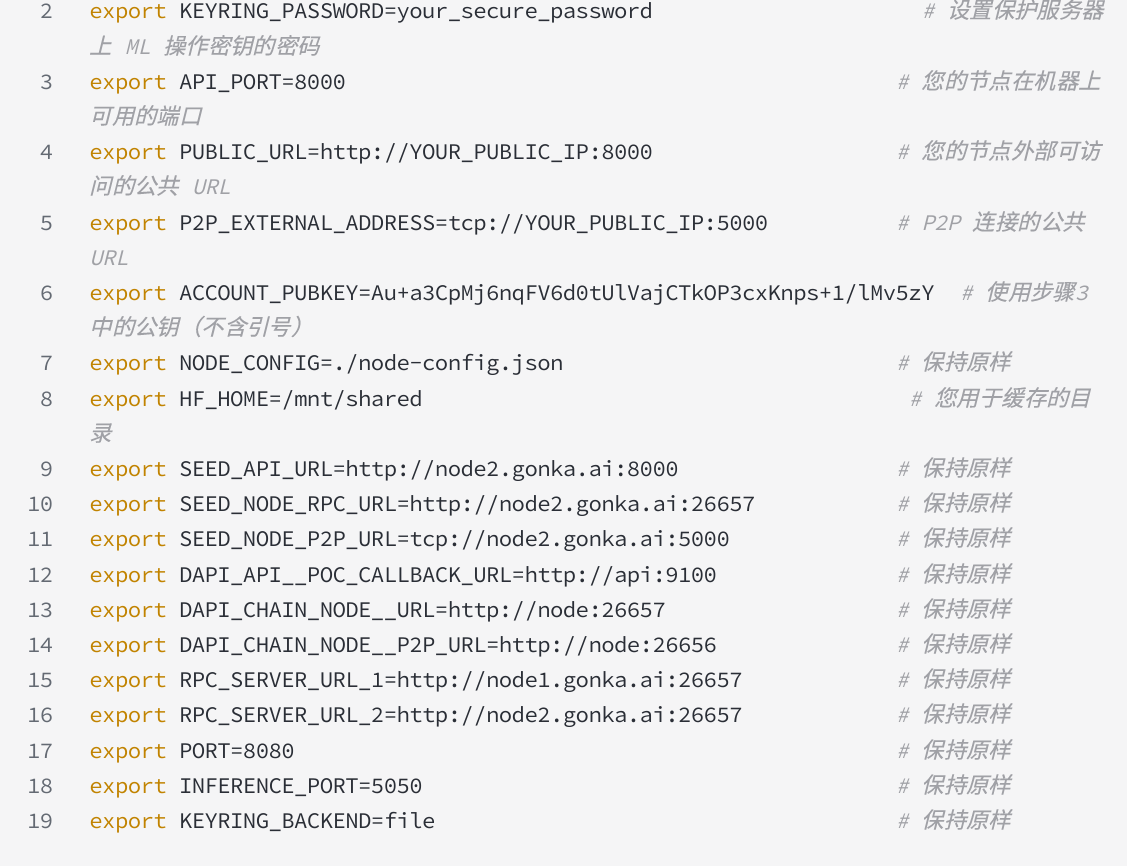

Configure environment variables

Fill in the following information:
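A forgotten variable is one of the easiest mistakes to make here. This Python sketch parses a KEY=value environment file, accepting an optional "export" prefix and ignoring comments, and then reports required keys that are missing or empty. The names in REQUIRED_KEYS are hypothetical placeholders. Replace them with the exact variable names in your deployment's config template.

```python
# Hypothetical placeholder names: substitute the keys from your config template.
REQUIRED_KEYS = ["KEY_NAME", "PUBLIC_URL", "P2P_EXTERNAL_ADDRESS", "ACCOUNT_PUBKEY", "HF_HOME"]


def parse_env(text: str) -> dict:
    """Parse simple KEY=value lines, ignoring blanks, comments and 'export '."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        if line.startswith("export "):
            line = line[len("export "):]
        key, sep, value = line.partition("=")  # split on the first '=' only
        if not sep:
            continue
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def missing_keys(values: dict, required=REQUIRED_KEYS) -> list:
    return [k for k in required if not values.get(k)]


if __name__ == "__main__":
    import sys

    with open(sys.argv[1], encoding="utf-8") as f:
        print(missing_keys(parse_env(f.read())) or "all required keys set")
```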

Configure the node model on the server

Choose the appropriate configuration based on your hardware:

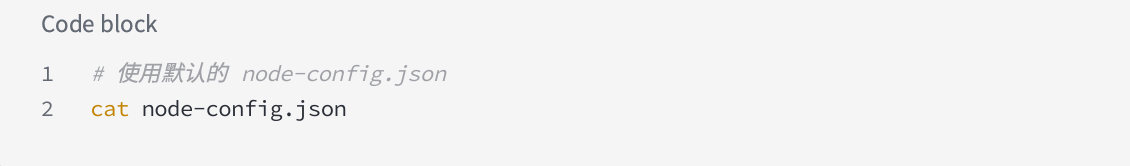

Standard configuration (Qwen2.5-7B):

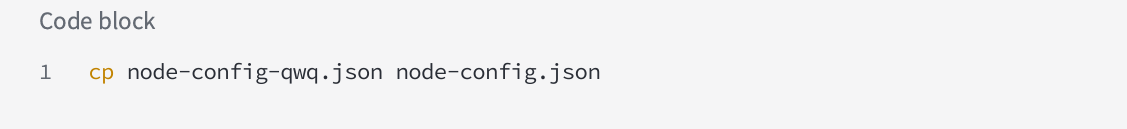

QwQ-32B on A100/H100:

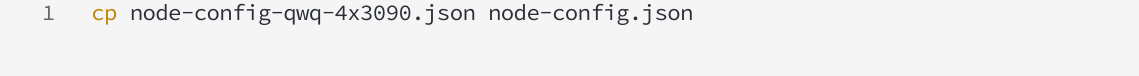

QwQ-32B on 4x 3090:

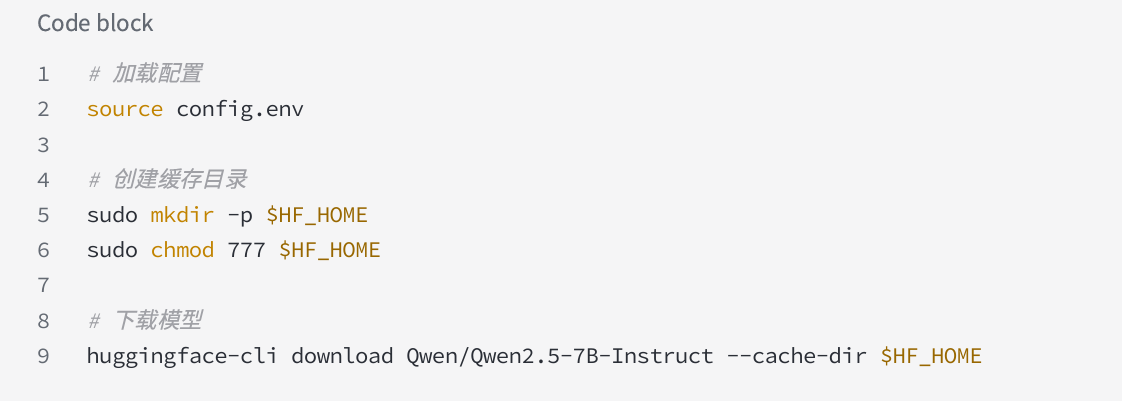

Pre-download model weights on the server

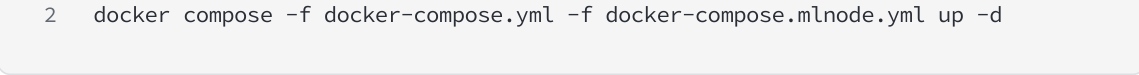
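To confirm where pre-downloaded weights ended up, it helps to know the Hugging Face cache layout. Files fetched with huggingface-cli download or huggingface_hub.snapshot_download land under $HF_HOME/hub (or $HF_HUB_CACHE if set), in a folder named models--{org}--{name}. The Python sketch below reproduces that path logic from the environment. The repository ID in the usage example is an assumption, so use the one from your node configuration.

```python
import os
from pathlib import Path


def hf_home(env=None) -> Path:
    """Resolve HF_HOME the way huggingface_hub does by default."""
    env = os.environ if env is None else env
    if env.get("HF_HOME"):
        return Path(env["HF_HOME"]).expanduser()
    xdg = env.get("XDG_CACHE_HOME")
    base = Path(xdg).expanduser() if xdg else Path("~/.cache").expanduser()
    return base / "huggingface"


def model_cache_dir(repo_id: str, env=None) -> Path:
    """Directory holding a model repo in the hub cache, e.g. models--Qwen--QwQ-32B."""
    env = os.environ if env is None else env
    hub = Path(env["HF_HUB_CACHE"]).expanduser() if env.get("HF_HUB_CACHE") else hf_home(env) / "hub"
    return hub / ("models--" + repo_id.replace("/", "--"))


if __name__ == "__main__":
    path = model_cache_dir("Qwen/QwQ-32B")  # assumed repo ID
    print(path, "exists" if path.exists() else "missing")
```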

Start the node on the server

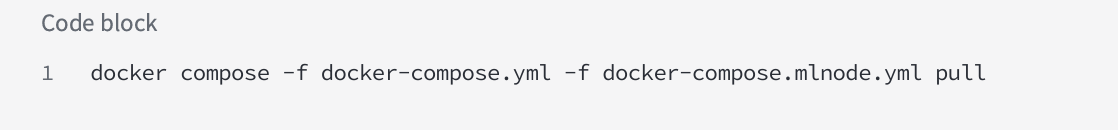

Pull the Docker image

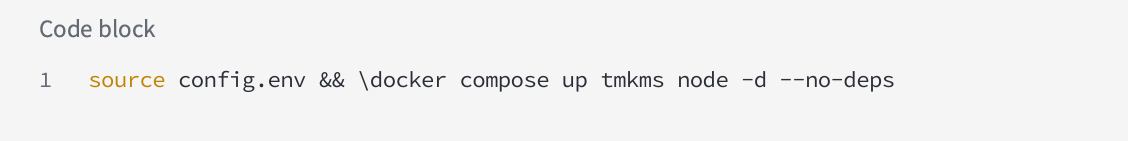

Start the initial service

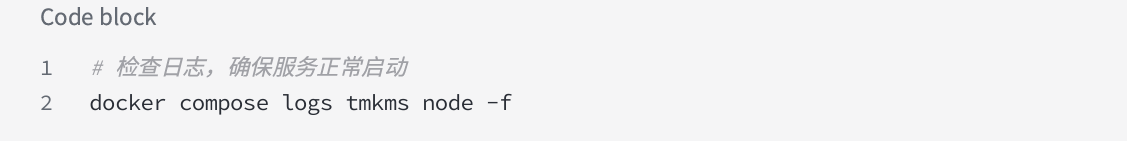

Verify initial service:

If you see the chain node continuously processing block events, then the setup is working properly.
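Besides watching the logs, you can confirm block processing through the Tendermint RPC on port 26657. The standard /status endpoint reports sync_info.latest_block_height. This Python sketch samples that height a few times and treats the node as healthy if the height never decreases and advances overall. The sampling interval and count are arbitrary choices, and the sketch assumes the RPC is reachable at localhost:26657.

```python
import json
import time
import urllib.request


def latest_height(rpc_url: str = "http://localhost:26657") -> int:
    """Read the latest block height from the Tendermint /status endpoint."""
    with urllib.request.urlopen(f"{rpc_url}/status", timeout=5) as resp:
        status = json.load(resp)
    return int(status["result"]["sync_info"]["latest_block_height"])  # returned as a string


def is_producing_blocks(heights: list) -> bool:
    """Heights must never go backwards and must advance between first and last sample."""
    return (
        len(heights) >= 2
        and all(b >= a for a, b in zip(heights, heights[1:]))
        and heights[-1] > heights[0]
    )


def watch(rpc_url: str = "http://localhost:26657", samples: int = 3, interval: float = 10.0) -> bool:
    heights = []
    for i in range(samples):
        heights.append(latest_height(rpc_url))
        if i < samples - 1:
            time.sleep(interval)
    print("heights:", heights)
    return is_producing_blocks(heights)


if __name__ == "__main__":
    print("OK" if watch() else "height is not advancing")
```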

Complete key setup and host registration

Create the ML operational key on the server (hot wallet)

Example output:

Record the ML operational key address, for example: gonka1gyz2agg5yx49gy2z4qpsz9826t6s9xev6tkehw
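Gonka addresses are Bech32 strings with the gonka prefix, as in the example above, so a mistyped address can usually be caught before you use it. The Python sketch below implements the standard Bech32 checksum from BIP-173, which Cosmos SDK chains use for addresses. It assumes Gonka uses original Bech32 rather than Bech32m. The check only catches typos. It does not confirm that the key belongs to you.

```python
CHARSET = "qpzry9x8gf2tvdw0s3jn54khce6mua7l"
GEN = [0x3B6A57B2, 0x26508E6D, 0x1EA119FA, 0x3D4233DD, 0x2A1462B3]


def _polymod(values):
    """BCH checksum polynomial from BIP-173."""
    chk = 1
    for v in values:
        top = chk >> 25
        chk = ((chk & 0x1FFFFFF) << 5) ^ v
        for i in range(5):
            if (top >> i) & 1:
                chk ^= GEN[i]
    return chk


def _hrp_expand(hrp):
    return [ord(c) >> 5 for c in hrp] + [0] + [ord(c) & 31 for c in hrp]


def bech32_encode(hrp, data):
    """Encode a list of 5-bit values with a Bech32 checksum (handy for tests)."""
    poly = _polymod(_hrp_expand(hrp) + data + [0] * 6) ^ 1
    checksum = [(poly >> 5 * (5 - i)) & 31 for i in range(6)]
    return hrp + "1" + "".join(CHARSET[d] for d in data + checksum)


def is_valid_address(addr: str, expected_hrp: str = "gonka") -> bool:
    if addr.lower() != addr and addr.upper() != addr:
        return False  # mixed case is never valid Bech32
    addr = addr.lower()
    pos = addr.rfind("1")  # separator between prefix and data
    if pos < 1 or pos + 7 > len(addr):
        return False
    hrp, data_part = addr[:pos], addr[pos + 1:]
    if hrp != expected_hrp or any(c not in CHARSET for c in data_part):
        return False
    data = [CHARSET.index(c) for c in data_part]
    return _polymod(_hrp_expand(hrp) + data) == 1
```

Bech32 is guaranteed to detect any single-character substitution, which covers the most common copy-paste and transcription errors.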

Register the host from the server

In the same container:

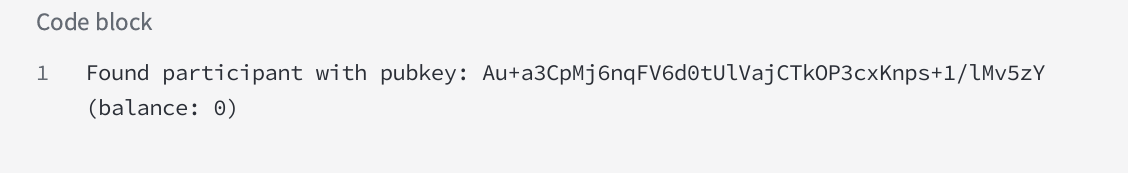

Expected output:

Then exit the container:

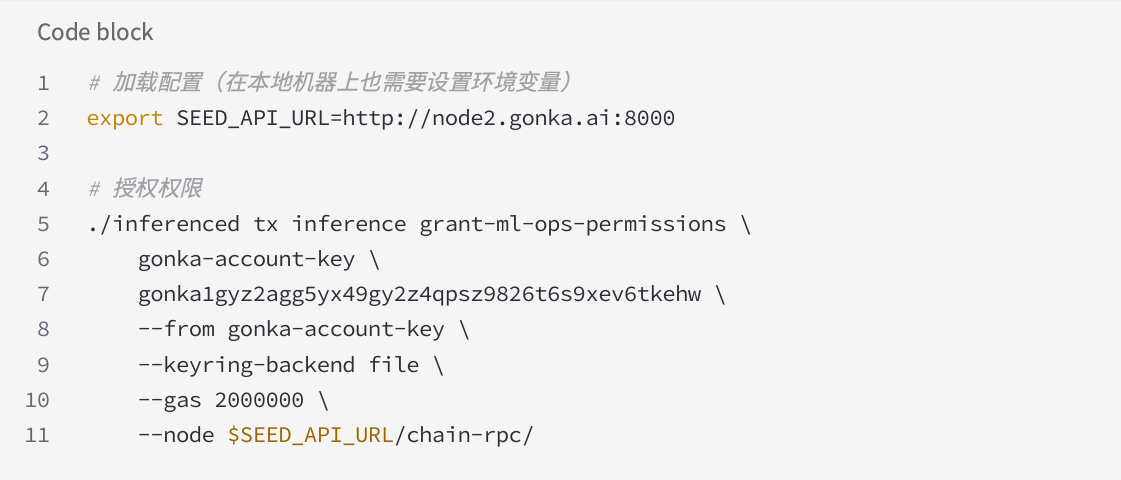

Authorize the ML operational key from the local machine

⚠ Important: Perform this step on the secure local machine where you created the account key.

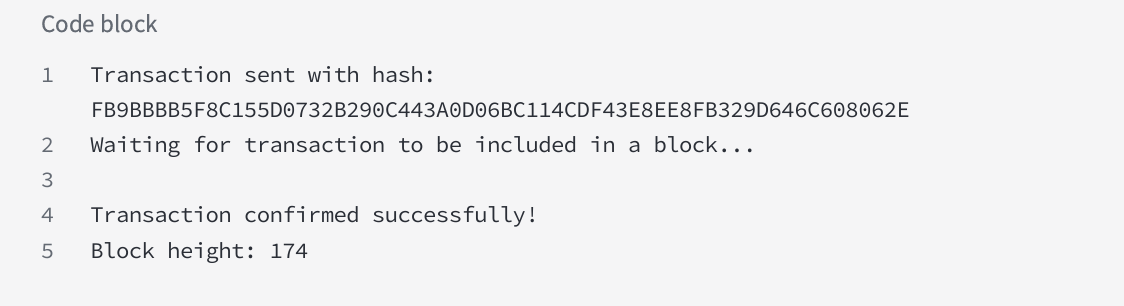

Expected output:

Start the full node on the server

Verify node status

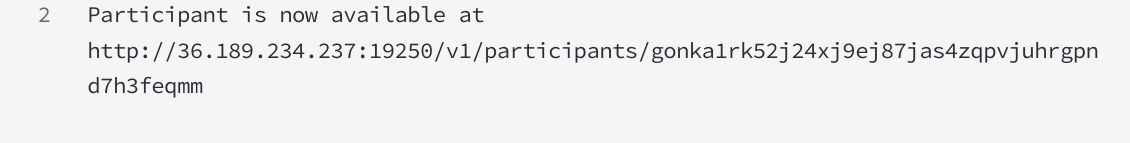

After waiting a few minutes, you should see your node at the following URL:

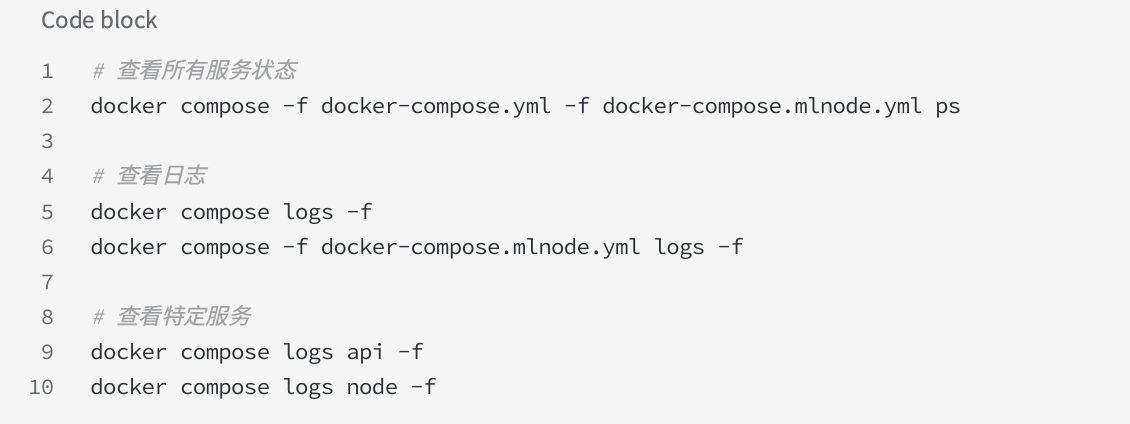

Monitoring and Management

Check service status

Key monitoring metrics

1. Node synchronization status: Confirm that the node is in sync with the network

2. GPU utilization: Monitor how much computing power is in use

3. MLNode health: Confirm that the inference service is responding normally

4. P2P peer count: Monitor network connectivity

5. Block height: Confirm that it keeps advancing in step with the network

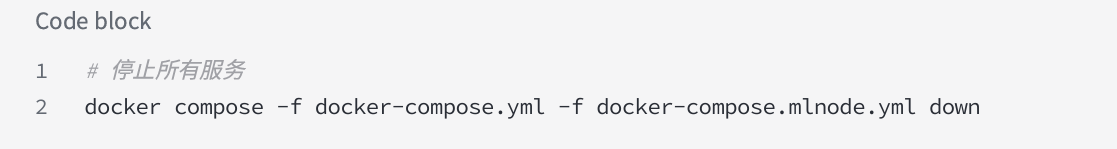
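Several of these metrics can be collected with a short script. The Python sketch below reads per-GPU utilization from nvidia-smi's CSV query mode and summarizes sync state, block height, and peer count from the standard Tendermint /status and /net_info RPC results. MLNode health is not covered because this guide does not specify its endpoint. The RPC URL is an assumption based on the default port 26657.

```python
import json
import subprocess
import urllib.request

GPU_QUERY = "index,utilization.gpu,memory.used,memory.total"


def parse_gpu_csv(text: str) -> list:
    """Parse `nvidia-smi --query-gpu=... --format=csv,noheader,nounits` output."""
    gpus = []
    for line in text.strip().splitlines():
        idx, util, used, total = (f.strip() for f in line.split(","))
        gpus.append({"index": int(idx), "util_pct": int(util),
                     "mem_used_mib": int(used), "mem_total_mib": int(total)})
    return gpus


def read_gpus() -> list:
    out = subprocess.run(
        ["nvidia-smi", f"--query-gpu={GPU_QUERY}", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_gpu_csv(out)


def rpc_result(rpc_url: str, path: str) -> dict:
    with urllib.request.urlopen(f"{rpc_url}{path}", timeout=5) as resp:
        return json.load(resp)["result"]


def chain_summary(status: dict, net_info: dict) -> dict:
    """Combine the /status and /net_info results into one health summary."""
    sync = status["sync_info"]
    return {"catching_up": bool(sync["catching_up"]),
            "height": int(sync["latest_block_height"]),
            "peers": int(net_info["n_peers"])}


if __name__ == "__main__":
    rpc = "http://localhost:26657"  # assumed RPC address
    print(chain_summary(rpc_result(rpc, "/status"), rpc_result(rpc, "/net_info")))
    for gpu in read_gpus():
        print(gpu)
```

A node that reports catching_up as true, or that has zero peers for an extended period, is not yet fully participating in the network.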

Stopping and cleaning up nodes

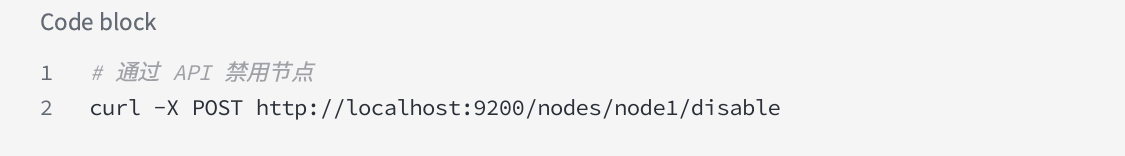

How to stop an MLNode

1. Disable each MLNode:

2. Wait for the next cycle: The disable flag will only take effect after the next cycle starts.

3. Verify removal: Confirm that the node no longer appears in the list of active participants and that its effective weight is 0

4. Stop MLNode:

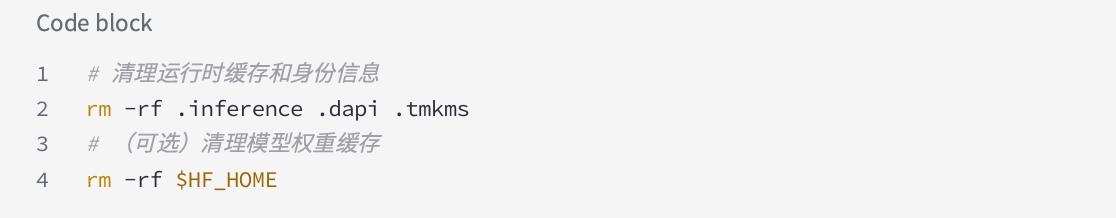
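Stopping an MLNode too early can cost you rewards, so it helps to gate the final step on the two conditions above. The Python sketch below encodes them. At least one new cycle must have started since you set the disable flag, and the node must be absent from the active participants or have weight 0. The participant record shape, with node_id and weight keys, is a hypothetical placeholder for whatever your query returns.

```python
def removal_confirmed(node_id: str, participants: list) -> bool:
    """True if node_id is absent from the active list or listed with weight 0.

    participants: list of dicts with hypothetical keys 'node_id' and 'weight'.
    """
    for p in participants:
        if p.get("node_id") == node_id:
            return p.get("weight", 0) == 0
    return True  # not listed at all


def safe_to_stop(node_id: str, participants: list,
                 disabled_at_cycle: int, current_cycle: int) -> bool:
    # The disable flag only takes effect after the next cycle starts.
    if current_cycle <= disabled_at_cycle:
        return False
    return removal_confirmed(node_id, participants)


if __name__ == "__main__":
    active = [{"node_id": "ml-1", "weight": 0}, {"node_id": "ml-2", "weight": 120}]
    print(safe_to_stop("ml-1", active, disabled_at_cycle=10, current_cycle=11))
```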

Cleaning up data

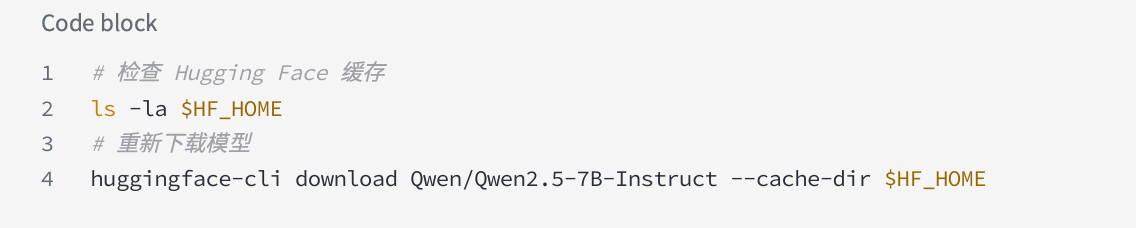

⚠ Note: Deleting $HF_HOME will require re-downloading large model files.
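Before deleting $HF_HOME, it is worth checking how much data you would have to download again. This Python sketch totals file sizes under a directory with os.walk. It skips symlinks because the Hugging Face cache links files in snapshots/ to the actual data in blobs/, and counting both would double the total.

```python
import os


def dir_size_bytes(path: str) -> int:
    """Total size of regular files under path, not counting symlinks."""
    total = 0
    for root, _dirs, files in os.walk(path):  # does not follow directory symlinks
        for name in files:
            fp = os.path.join(root, name)
            if not os.path.islink(fp):
                total += os.path.getsize(fp)
    return total


def human(n: float) -> str:
    for unit in ("B", "KiB", "MiB", "GiB", "TiB"):
        if n < 1024 or unit == "TiB":
            return f"{n:.1f} {unit}"
        n /= 1024


if __name__ == "__main__":
    target = os.environ.get("HF_HOME", os.path.expanduser("~/.cache/huggingface"))
    print(target, human(dir_size_bytes(target)))
```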

Advanced Configuration

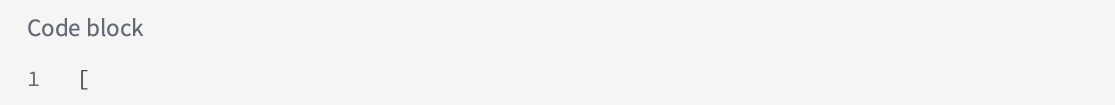

Multi-GPU Optimized Configuration Example

QwQ-32B on 8x 3090 configuration:


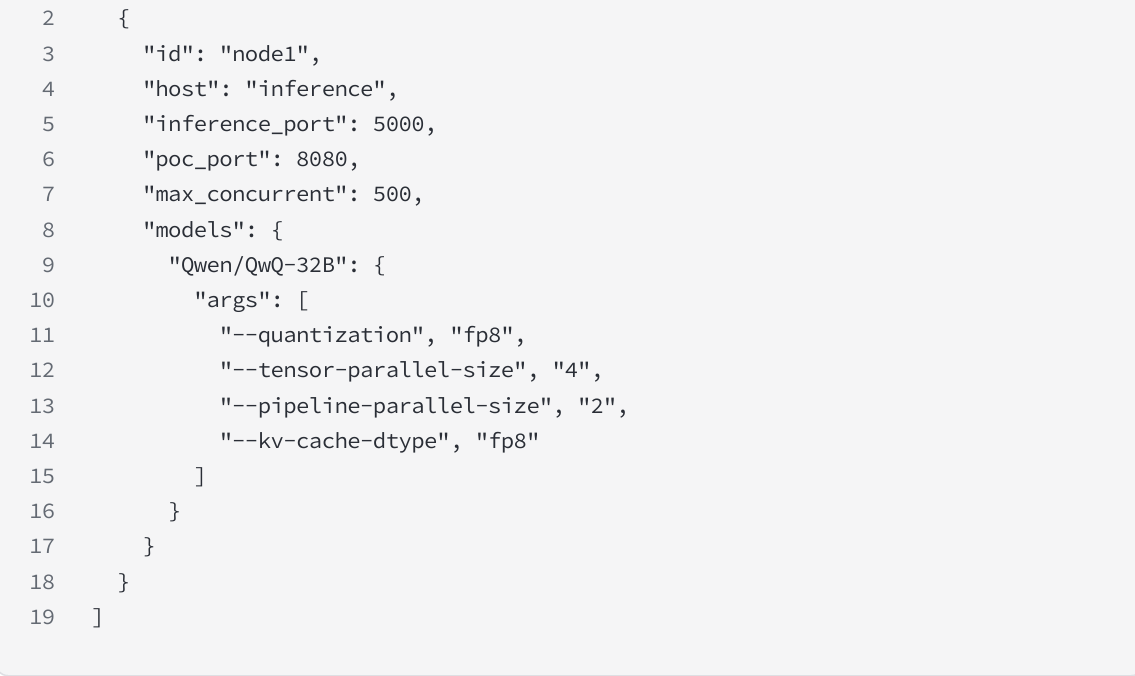
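When spreading a model across many GPUs, two simple rules help narrow the options. The tensor-parallel size times the pipeline-parallel size must equal the GPU count, and in vLLM the number of attention heads must be divisible by the tensor-parallel size. This Python sketch enumerates valid layouts and estimates per-GPU weight memory. The head count of 40 for QwQ-32B and the 2 bytes per parameter for BF16 are assumptions, so read num_attention_heads from the model's config.json. The sketch does not describe the layout used in the configuration above.

```python
def parallel_layouts(num_gpus: int, num_attention_heads: int) -> list:
    """(tensor_parallel, pipeline_parallel) pairs with tp * pp == num_gpus
    where tp evenly divides the number of attention heads."""
    return [
        (tp, num_gpus // tp)
        for tp in range(1, num_gpus + 1)
        if num_gpus % tp == 0 and num_attention_heads % tp == 0
    ]


def weights_per_gpu_gb(params_billion: float, bytes_per_param: float, num_gpus: int) -> float:
    """Approximate weight memory per GPU when weights are split evenly.

    Ignores KV cache, activations and framework overhead.
    """
    return params_billion * bytes_per_param / num_gpus


if __name__ == "__main__":
    print(parallel_layouts(8, 40))        # assumed 40 attention heads
    print(weights_per_gpu_gb(32, 2, 8))   # ~32B params in BF16 over 8 GPUs
```

On 24 GB cards, roughly 8 GB of weights per GPU leaves most of each card's memory for KV cache. That headroom is a major reason to use all eight GPUs rather than fewer.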

Performance tuning parameters

Troubleshooting

FAQ

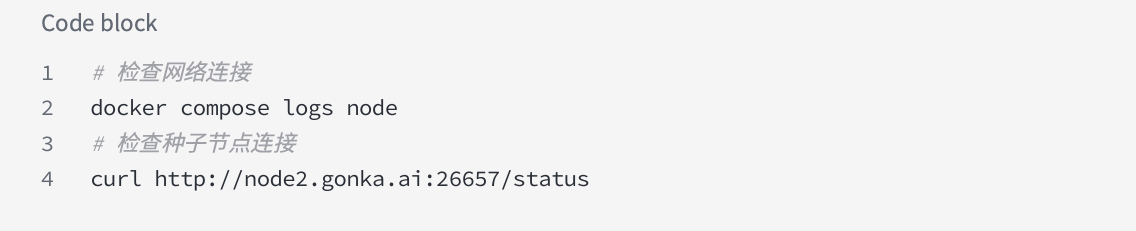

1. Node synchronization failed

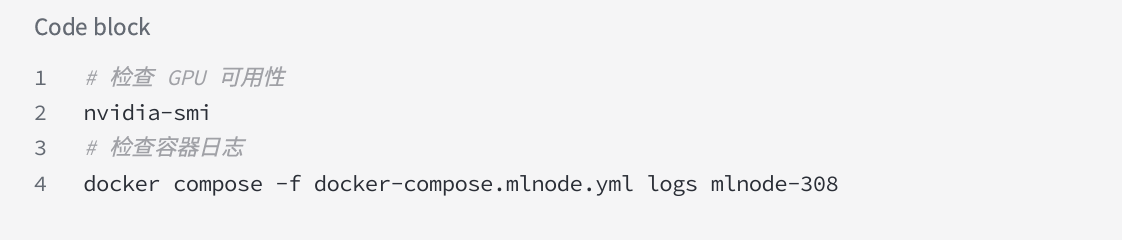

2. MLNode startup failure

3. Model download failed
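Large model downloads often fail because of transient network errors. This generic Python sketch retries any download callable with exponential backoff and jitter. The usage comment assumes huggingface_hub is installed and that the repository ID matches your node configuration. huggingface_hub reuses already-downloaded files, so retrying does not start a completed download over from scratch. For brevity, the sketch catches every exception. A real script would limit retries to network-related errors.

```python
import random
import time


def retry(fn, attempts: int = 5, base_delay: float = 2.0, max_delay: float = 60.0,
          sleep=time.sleep):
    """Call fn() until it succeeds, backing off exponentially with jitter.

    Re-raises the last exception once all attempts are used.
    """
    for attempt in range(1, attempts + 1):
        try:
            return fn()
        except Exception as exc:  # sketch: catches everything
            if attempt == attempts:
                raise
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            delay *= random.uniform(0.5, 1.0)  # jitter avoids synchronized retries
            print(f"attempt {attempt} failed ({exc}); retrying in {delay:.1f}s")
            sleep(delay)


# Usage (assumes huggingface_hub is installed; repo ID is an assumption):
# from huggingface_hub import snapshot_download
# retry(lambda: snapshot_download("Qwen/QwQ-32B"))
```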

Technical Support

Official Resources

Official website: https://gonka.ai

Documentation: https://gonka.ai/introduction/

Discord: https://discord.gg/aJDBXt2XJ5

Email: hello@productscience.ai

Get Help

If you need help, please join our Discord server for general inquiries, technical issues, or security questions.

Start your mining journey!

Congratulations on completing your Gonka mining node deployment! You have now become a vital component of the decentralized AI network. By contributing your GPU computing power, you not only earn mining rewards but also contribute to global AI development.

Remember to keep your nodes running stably and participate honestly in network activities. Your reputation and earnings will increase over time. Happy mining!

This tutorial is based on the official documentation. If you have any questions, please refer to the latest official documentation or contact technical support.

About Gonka.ai

Gonka is a decentralized network designed to provide efficient AI computing power. Its design goal is to maximize the use of global GPU computing power to complete meaningful AI workloads. By eliminating centralized gatekeepers, Gonka provides developers and researchers with permissionless access to computing resources while rewarding all participants with its native GNK token.

Gonka was incubated by Product Science Inc., a US AI developer founded by the Liberman siblings, Web2 industry veterans and former core product directors at Snap Inc. The company raised $18 million in 2023 from investors including OpenAI investor Coatue Management, Solana investor Slow Ventures, K5, Insight, and Benchmark Partners. Early contributors to the project include well-known names from the Web2 and Web3 space, such as 6 Blocks, Hard Yaka, Gcore, and Bitfury.
