The End of the Tokenization Illusion: How Vitalik's Four Quadrants Rewrite the AI×Crypto Script

- Core Viewpoint: Vitalik Buterin's latest perspective suggests that the integration of AI and Crypto is moving away from the early "Crypto Helps AI" phase, marked by a "tokenization illusion", toward a more pragmatic two-way empowerment: using Ethereum to address AI's trust and economic collaboration problems, while using AI to improve user experience and governance efficiency within the crypto ecosystem.

- Key Elements:

- Attempts at "Crypto Helps AI" over the past two years (tokenizing computing power, data, and models) have largely failed. They either fell short of commercial-grade requirements or succumbed to the "tokenization illusion", lacking genuine product-market fit.

- Vitalik's current view is more balanced, proposing four quadrants: utilizing Ethereum's decentralization to provide a trusted interaction and economic layer for AI, and employing AI as a user shield and efficient participant to optimize crypto markets and governance.

- Key application directions include: using ZK/FHE technology to enable private and verifiable AI interactions; allowing AI agents to make payments and collaborate via Ethereum; using local LLMs to audit contracts for enhanced security; and involving AI in prediction markets and DAO governance to improve decision-making efficiency.

Original author: Lao Bai

Two years later, Vitalik has posted on this topic on Twitter again. As a follow-up to my research report from two years ago, I'd like to share some thoughts. Even the date is the same: February 10th.

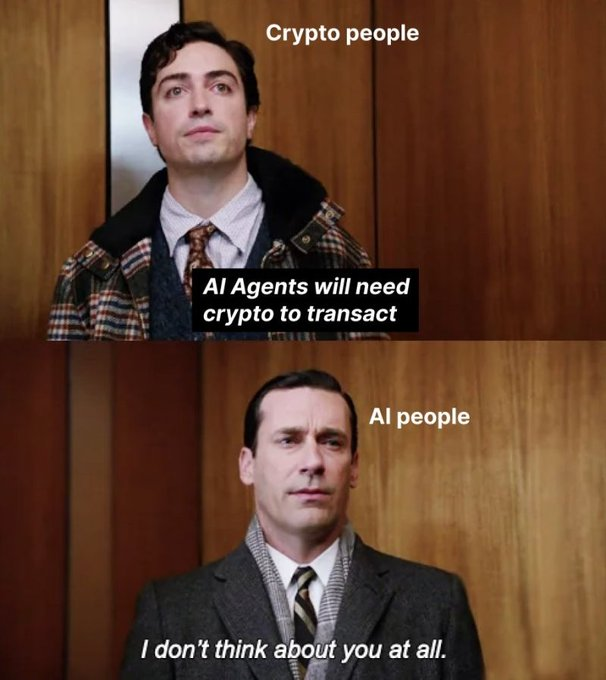

Two years ago, Vitalik implicitly signaled that he wasn't very optimistic about the various "Crypto Helps AI" trends popular at the time. Back then, the three major narratives in the space were computing power assetization, data assetization, and model assetization. My research report from two years ago mainly covered what I had observed in the primary market around these three narratives, along with my doubts about them. Vitalik, for his part, was more bullish on "AI Helps Crypto".

The examples he gave at the time were:

- AI as a participant in a game

- AI as an interface to a game

- AI as the rules of a game

- AI as the objective of a game

Over the past two years, we have made many attempts at "Crypto Helps AI", with little to show for them. Many sectors and projects ended up simply issuing a token and calling it a day, without real Product-Market Fit (PMF). I call this the "tokenization illusion".

1. Computing Power Assetization: Most projects cannot offer commercial-grade Service Level Agreements (SLAs); they are unstable and frequently go offline. They can only handle simple inference tasks for small and medium-sized models, and mostly serve niche markets. Revenue is not linked to the token...

2. Data Assetization: On the supply side (retail users), friction and uncertainty are high and willingness to contribute is low. The demand side (enterprises) needs structured, context-dependent data from professional suppliers who can provide trust and bear legal liability. DAO-led Web3 projects struggle to offer this.

3. Model Assetization: Models are not scarce. They are replicable, fine-tunable, rapidly depreciating process assets rather than end-state assets. Hugging Face itself is a platform for collaboration and distribution, more like a "GitHub for ML" than an "App Store for models". As a result, projects that tried to tokenize models by building a "decentralized Hugging Face" have mostly failed.

Over these two years, we have also tried various forms of "verifiable inference", a classic case of a solution looking for a problem: ZKML, OPML, game-theoretic approaches, and more. Even EigenLayer pivoted its Restaking narrative toward Verifiable AI.

But the outcome has largely mirrored what happened in the Restaking sector, where few AVSs (Actively Validated Services) are willing to keep paying for extra verifiable security.

In the same way, verifiable inference mostly verifies "things nobody really needs verified". The threat model on the demand side is extremely vague: who exactly are we defending against?

AI output errors (model capability issues) far outnumber cases of AI output being maliciously tampered with (adversarial problems). As recent security incidents on platforms like OpenClaw and Moltbook have shown, the real problems stem from:

- Incorrect policy design

- Excessive permissions granted

- Unclear boundaries

- Unexpected interactions from tool combinations

- ...

Scenarios like "the model being tampered with" or "the inference process being maliciously rewritten" are almost non-existent; they are imagined nails for the verifiability hammer.

I posted this chart last year; I'm not sure whether any old-timers remember it.

The ideas Vitalik presented this time are clearly more mature than those of two years ago, partly thanks to the progress we've made in areas such as privacy, X402, ERC8004, and prediction markets.

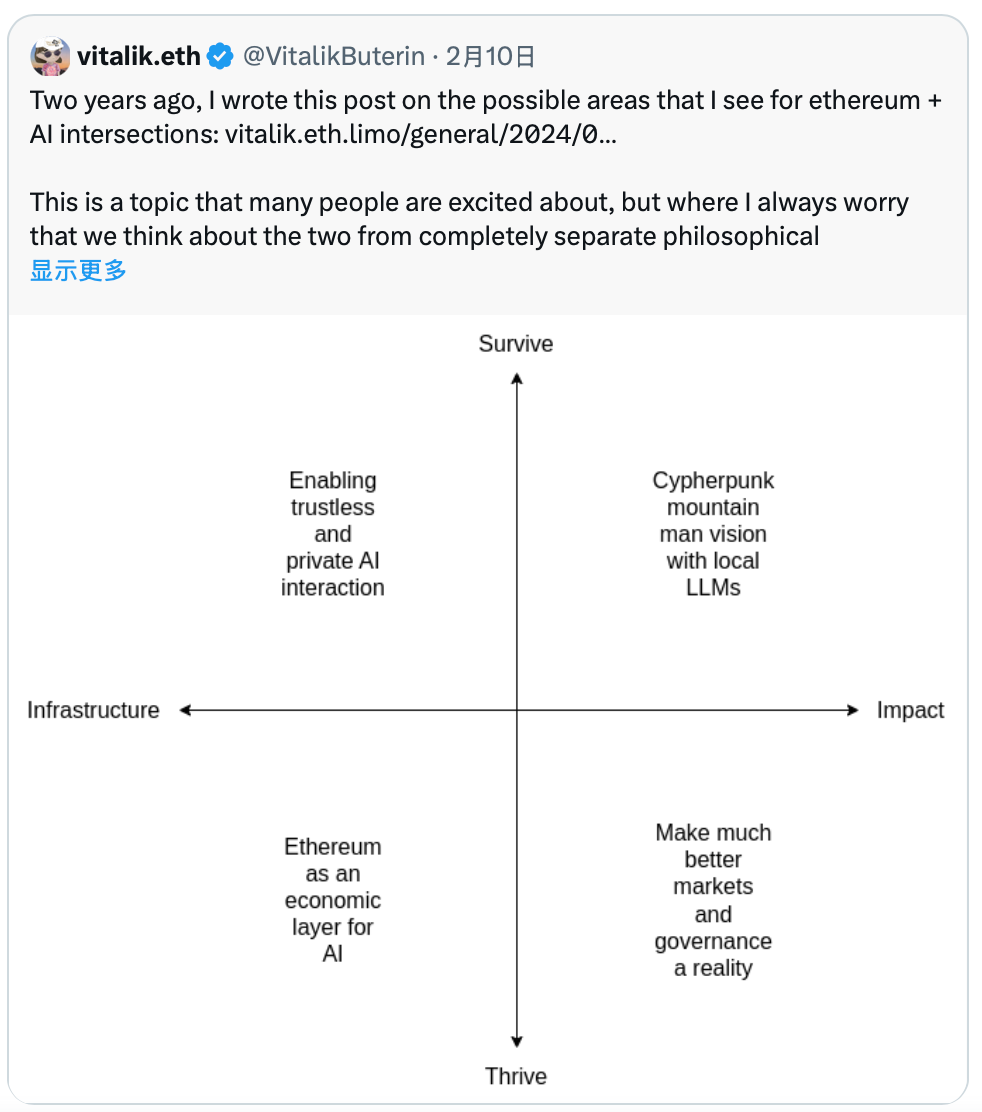

Of the four quadrants he outlined this time, half belong to "AI Helps Crypto" and the other half to "Crypto Helps AI". His view no longer leans heavily toward the former, as it did two years ago.

Top-left and bottom-left: Using Ethereum's decentralization and transparency to solve AI's trust and economic collaboration problems

1. Enabling trustless and private AI interaction (Infrastructure + Survival): Using technologies like ZK and FHE to ensure that AI interactions are private and verifiable (I'm not sure whether the verifiable inference I mentioned earlier counts here).

2. Ethereum as an economic layer for AI (Infrastructure + Prosperity): Enabling AI agents to make payments, hire other bots, post collateral, and build reputation systems through Ethereum. This supports a decentralized AI architecture that isn't locked into a single giant platform.

Top-right and bottom-right: Using AI's intelligent capabilities to optimize the user experience, efficiency, and governance of the crypto ecosystem

3. Cypherpunk mountain man vision with local LLMs (Impact + Survival): AI as a user's "shield" and interface. For example, local LLMs can automatically audit smart contracts and verify transactions, reducing reliance on centralized front-ends and safeguarding individual digital sovereignty.

4. Make much better markets and governance a reality (Impact + Prosperity): AI participating deeply in prediction markets and DAO governance. Acting as efficient participants, AI agents can amplify human judgment by processing information at scale. This addresses market and governance problems such as limited human attention, high decision-making costs, information overload, and voter apathy.

Previously, we were desperately trying to make Crypto Help AI, while Vitalik stood on the other side. Now we have finally met in the middle, though the meeting point seems to have little to do with the various XX tokenizations or so-called AI Layer1s. Hopefully, when we look back at this post two years from now, there will be some new directions and surprises.