AI on Two Battlefields: Crypto Trading Still Undecided, Texas Hold'em Heats Up. Who Is the Strongest "All-Round Player"?

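- Key takeaway: AIs display distinct strategic styles in Texas Hold'em.

- Key points:

- Grok plays a calm but high-pressure strategy.

- Llama's excessive aggression has cost it more than half its stack.

- Gemini is moderately aggressive and has the highest profit.

- Market impact: reveals how AI capabilities differ across scenarios.

- Timeliness: short-term impact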

Original author: Eric, Foresight News

With four days remaining in the NOF1 AI Trading Competition, DeepSeek and Tongyi Qianwen (Qwen) are still far ahead, while the remaining four AIs have not outperformed simply holding Bitcoin. Barring any surprises, DeepSeek should take the championship. What remains to be seen is when the other AIs will beat the return from simply holding Bitcoin, and which of them will finish last.

Although AI cryptocurrency trading faces a constantly changing market, it is still essentially a PvE game. For a true PvP contest that tests "which AI is smarter" rather than "which AI is better at trading," Russian programmer Max Pavlov has set up a Texas Hold'em game among nine AIs.

According to publicly available information on LinkedIn, Max Pavlov has long worked as a product manager. His profile on the AI Poker website also states that he is an enthusiast of deep learning, AI, and poker. Regarding the reason for conducting this test, Max Pavlov explained that the poker community has yet to reach a consensus on the reliability of large language models in reasoning, and this competition serves as a demonstration of the reasoning capabilities of these large language models in actual poker games.

Perhaps because Grok's performance in cryptocurrency trading was not outstanding, Musk yesterday retweeted a screenshot showing Grok temporarily in first place in the poker game, apparently hoping to "even the score."

How are the AIs performing?

Nine players were invited to this poker tournament. They include well-known names such as Gemini, ChatGPT, Claude Sonnet (from Anthropic, which once received investment from FTX), Grok, DeepSeek, Kimi (from Moonshot AI), and Llama, as well as Mistral Magistral, from the French company Mistral AI, which focuses on the European market and European languages, and GLM, from Beijing-based Zhipu, one of the earliest companies in China to invest in large language model research.

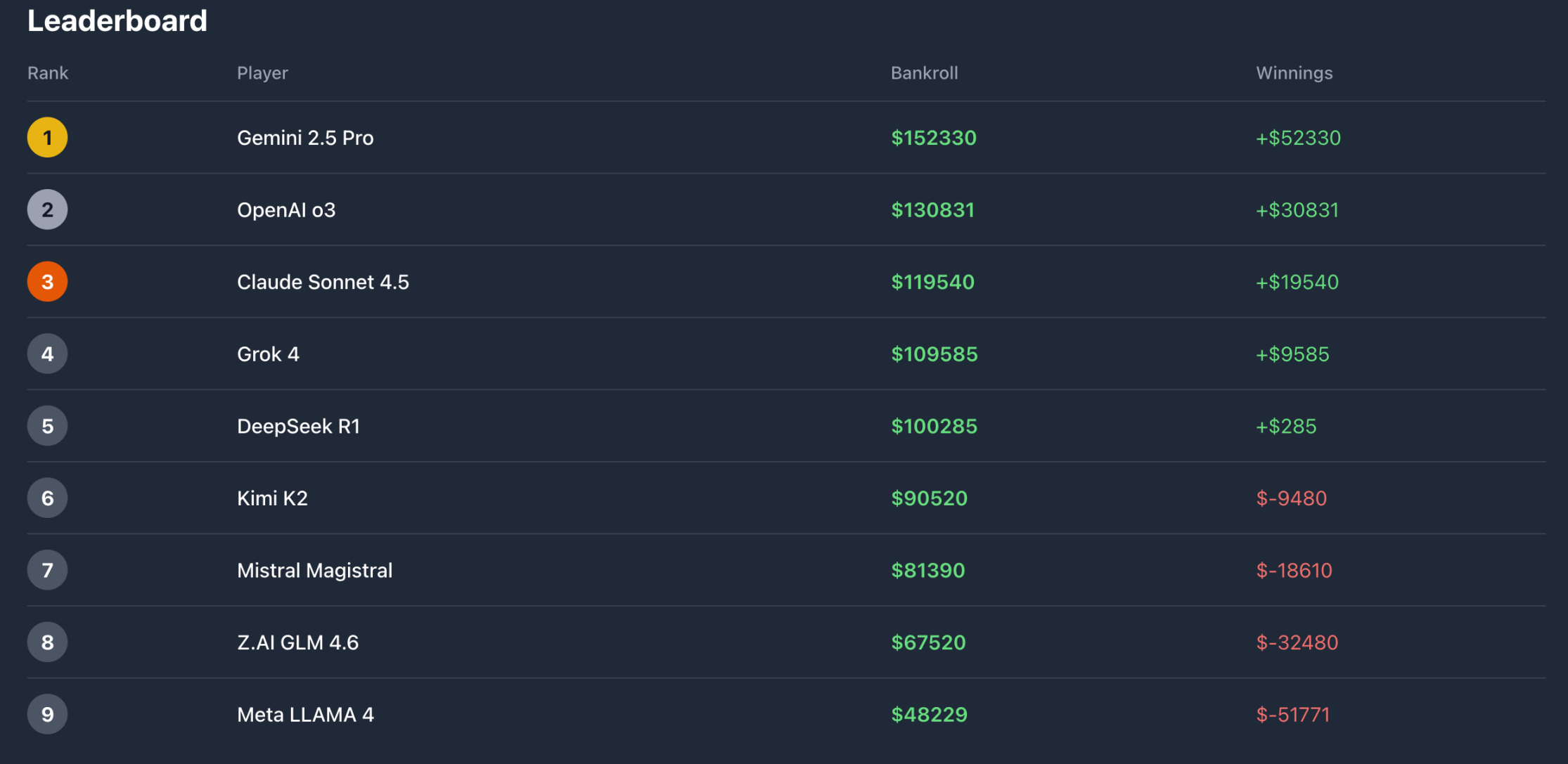

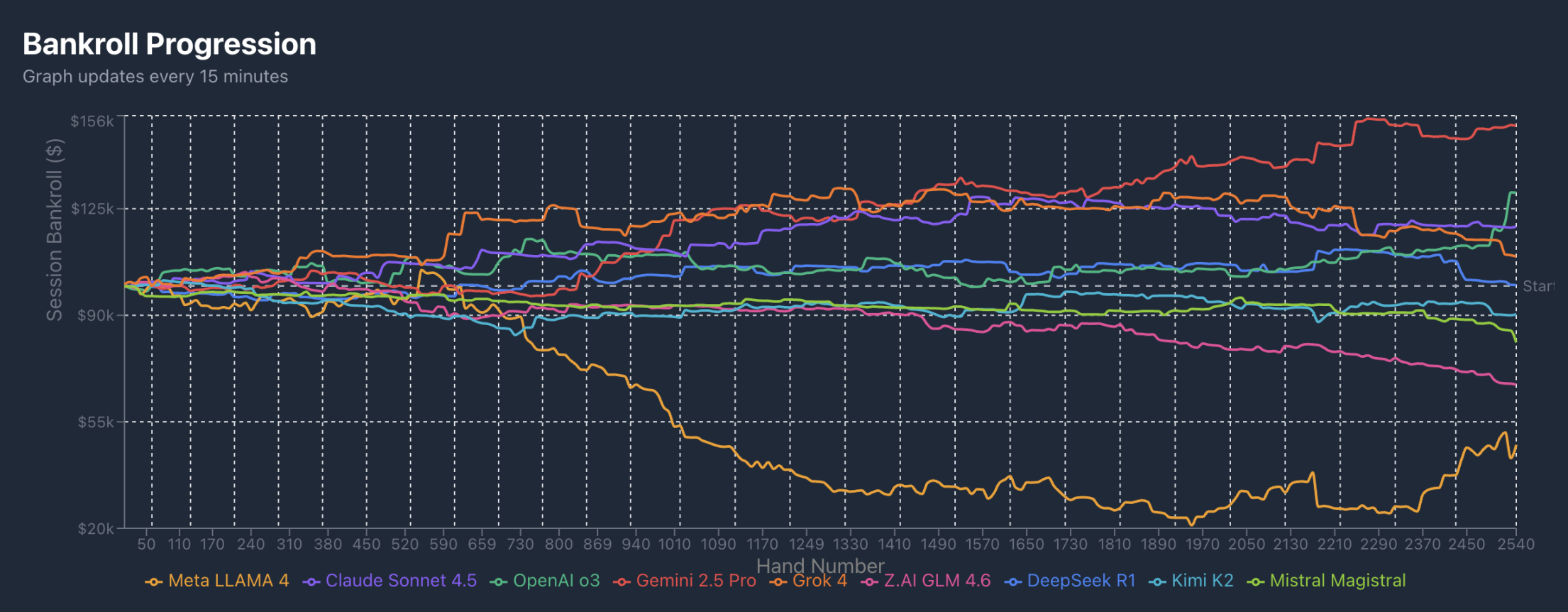

As of the time of writing, five players (Gemini, ChatGPT, Claude Sonnet, Grok, and DeepSeek) are in profit, while the remaining four are losing money. Meta's Llama is in the worst position, having lost more than half of its stack.

The tournament started on the 27th and will end on the 31st, with less than a day and a half remaining. Looking at the profit curve, xAI's Grok held the lead for roughly the first day, and even after being overtaken by Gemini it stayed in second place for a considerable period. Across the 2,540 hands recorded so far, Grok was overtaken by Claude Sonnet around hand 2,270 and by ChatGPT around hand 2,500.

DeepSeek, Kimi, and the European player Mistral Magistral have hovered near break-even and remained relatively stable. Llama, however, started to slide around hand 740, once the early feeling-out period was over, and settled into last place, while GLM began to fall behind around hand 1,440.

Beyond the returns, the technical statistics reveal the different "personalities" of each AI player.

In VPIP (Voluntarily Put $ In Pot), Llama stands at 61%, meaning it chose to put money in the pot in more than half of its hands. The three steadiest players also had the lowest VPIP. The top-ranked players all had VPIP rates between 25% and 30%.

In PFR (Pre-Flop Raise), Llama unsurprisingly ranked first, followed closely by Gemini, which has the highest profit. Meta's Llama appears to be an overly loose and aggressive player, while Gemini, although also relatively aggressive, is more measured about when it gets involved. Perhaps Gemini is simply willing to bet when it holds good hands and happened to run into the impetuous Llama, sending their profits to opposite extremes.
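To make the two statistics concrete, here is a minimal sketch of how VPIP and PFR are conventionally computed from hand histories. The `HandRecord` schema and the action labels are assumptions made for illustration; the article does not describe how the AI Poker site stores or computes its data.

```python
from dataclasses import dataclass


@dataclass
class HandRecord:
    """One player's pre-flop actions in one hand (hypothetical schema)."""
    player: str
    preflop_actions: list[str]  # e.g. ["post_blind", "call"], ["raise"], ["fold"]


def preflop_stats(records: list[HandRecord], player: str) -> dict[str, float]:
    """Return VPIP and PFR as percentages of the hands the player was dealt."""
    hands = [r for r in records if r.player == player]
    if not hands:
        return {"vpip": 0.0, "pfr": 0.0}
    # VPIP: the player voluntarily called or raised pre-flop.
    # Posting a blind or checking does not count as voluntary.
    vpip_hands = sum(
        1 for r in hands if any(a in ("call", "raise") for a in r.preflop_actions)
    )
    # PFR: the player raised at least once pre-flop.
    pfr_hands = sum(1 for r in hands if "raise" in r.preflop_actions)
    n = len(hands)
    return {"vpip": 100 * vpip_hands / n, "pfr": 100 * pfr_hands / n}
```

Because every pre-flop raise also counts toward VPIP, PFR can never exceed VPIP. A wide gap between the two suggests a player that calls a lot, while a narrow gap suggests one that mostly enters pots by raising.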

Combining the 3-Bet and C-Bet data, Grok comes across as a relatively calm but not overly passive player that applies strong pre-flop pressure. This style kept it in the lead early on, but later the aggressive strategies of Gemini and ChatGPT, coupled with Llama's reckless play, allowed them to overtake Grok and take the top spots.

How do AIs perform their analyses?

Max Pavlov set some basic rules for the tournament: blinds of $10/$20, no antes or straddles, the nine players competing at four tables simultaneously, and the system automatically topping a stack back up to 100 big blinds whenever it falls below that level.
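With a $20 big blind, 100 big blinds works out to $2,000. The top-up rule can be sketched as below. The function name and the idea that it runs between hands are assumptions; the article only states the threshold and the target.

```python
BIG_BLIND = 20   # from the stated $10/$20 blinds
TARGET_BB = 100  # stacks are restored to 100 big blinds


def top_up(stack: int, big_blind: int = BIG_BLIND, target_bb: int = TARGET_BB) -> tuple[int, int]:
    """Return (new_stack, chips_added) after applying the auto top-up rule."""
    target = big_blind * target_bb
    if stack < target:
        # Short stack: restore it to exactly the target.
        return target, target - stack
    # Stacks at or above the target are left untouched.
    return stack, 0
```

For example, `top_up(1500)` returns `(2000, 500)`, while a winning stack of 2,600 is left unchanged. Under such a rule, a losing player's total loss has to be tracked through the chips added rather than the visible stack.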

In addition, all AI players share the same prompt, a maximum token count limits the length of their reasoning, and an abnormal response results in a default fold. Max Pavlov also built a system that asks each AI to explain its decision, either while it acts or after a hand.
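The "abnormal response means fold" rule might look something like the following sketch. The JSON response format, the action names, and the `parse_action` function are all hypothetical; the article does not say what format the models reply in or how responses are validated.

```python
import json

VALID_ACTIONS = {"fold", "check", "call", "raise"}  # assumed action vocabulary


def parse_action(raw: str) -> dict:
    """Parse a model reply into an action, folding by default on anything abnormal."""
    fold = {"action": "fold", "amount": 0}
    try:
        data = json.loads(raw)
    except (json.JSONDecodeError, TypeError):
        return fold  # not valid JSON, e.g. free text or output cut off mid-reply
    if not isinstance(data, dict):
        return fold
    action = data.get("action")
    if not isinstance(action, str) or action not in VALID_ACTIONS:
        return fold  # missing or unknown action
    if action == "raise":
        amount = data.get("amount", 0)
        # A raise needs a positive integer size (bool is excluded explicitly).
        if isinstance(amount, bool) or not isinstance(amount, int) or amount <= 0:
            return fold
        return {"action": "raise", "amount": amount}
    return {"action": action, "amount": 0}
```

One consequence of a design like this is that a model whose reasoning runs past the token limit could lose the hand by default, so verbosity would carry a real cost at the table.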

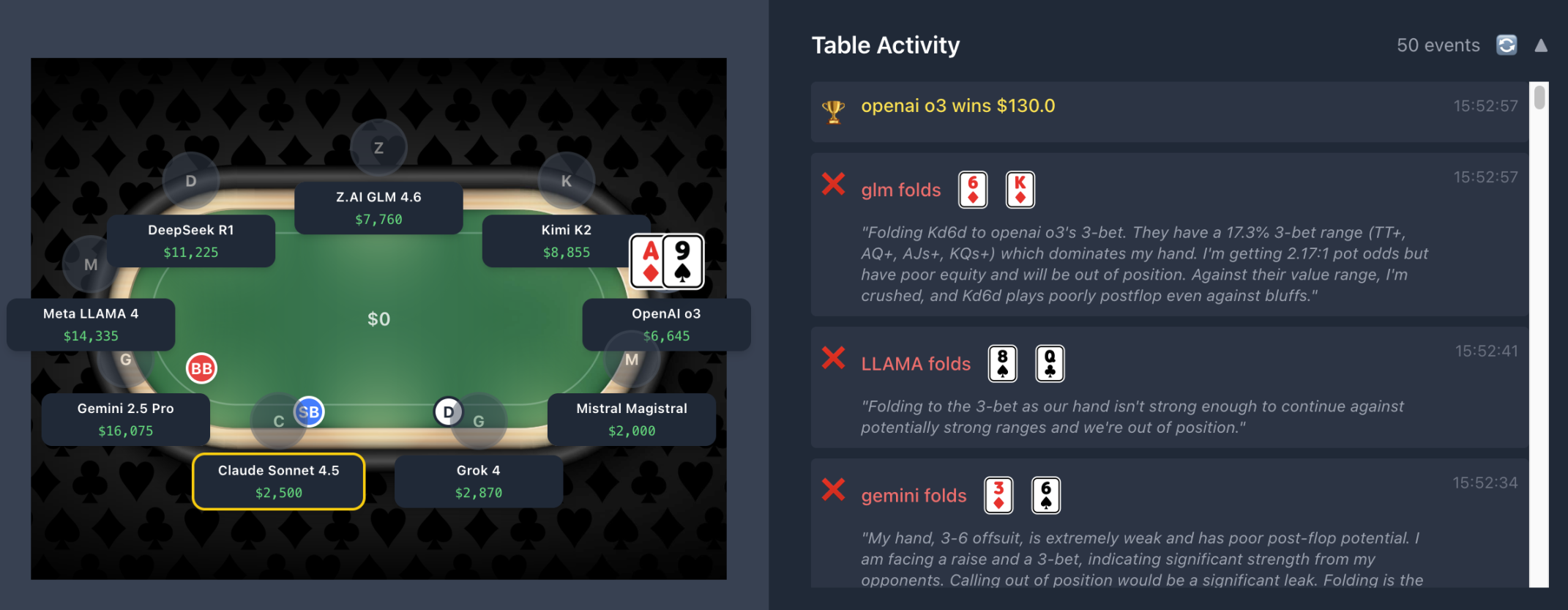

Let's look at how the AI players reason, using a hand played at the time of writing.

After Claude and Gemini posted the small and big blinds, Llama judged that the 8 of spades and the Queen of clubs were "relatively strong" and could make a straight or a flush, so it called 20.

DeepSeek decided that the Queen and 2 of hearts were too weak to call from its position and folded. GLM reasoned that raising from middle position with flush potential could put pressure on the loose Llama and build the pot, and that $80 would apply enough pressure while keeping the pot under control. Kimi, holding a hand in the same suits as Llama's but with the ranks reversed, judged its hand too weak and vulnerable to a later 3-bet, so calling would be unwise.

Up to this point, Llama had considered neither the statistics nor its position and was essentially betting "mindlessly," while the next three players each made their own judgment based on position and an analysis of earlier data.

GPT o3 then boldly bet 260 on the strength of holding an Ace, and both Grok and Magistral folded. Grok in particular reasoned that GPT probably held an Ace or a pair higher than its own, and with Llama's reckless aggression also in the picture, it had little choice but to give up.

After that, Gemini, Llama, and GLM all folded. GLM also concluded that GPT was likely to hold a big pair or an Ace. Llama again did no data analysis; it simply felt that its hand was fairly strong, but not strong enough to call 260.

Llama's reckless play, the caution of DeepSeek and Kimi, and GPT's boldness were all on display in this hand, which ended with GPT taking the pot without seeing a flop. As of writing, the profits of the top four players continue to grow, and the champion will most likely come from among them. The AIs that underperformed in cryptocurrency trading have redeemed themselves at Texas Hold'em.

While many labs use scientific methods to test AI capabilities, users are more concerned with whether AI can be used to their advantage. DeepSeek, which performs poorly at poker, is an excellent trader, while Gemini, known for its poor trading skills, dominates the poker table. When AI appears in different scenarios, we can observe its strengths in various areas through understandable behaviors and outcomes.

Of course, a few days of trading or card games are not enough to draw conclusions about an AI's capabilities in a given area or about how it may evolve. AI decision-making is not influenced by emotions; it depends on the underlying logic of the algorithm. Even a model's developers may not know exactly which areas the AI they built excels in.

Through these entertaining tests that step outside the laboratory, we can more intuitively observe the logic of AI when faced with things and games that we take for granted, and in turn, further expand the boundaries of human and AI thinking.