Original article by @BlazingKevin_, researcher at Movemaker

Storage was once one of the industry's top narratives. Filecoin, the sector leader in the last bull market, had a market value of over 10 billion US dollars. Arweave, a competing storage protocol whose selling point is permanent storage, reached a market value as high as 3.5 billion US dollars. However, as the practical usefulness of cold data storage has been discredited and the necessity of permanent storage has been questioned, a big question mark now hangs over whether the decentralized storage narrative can work at all. The emergence of Walrus has stirred the long-dormant storage narrative, and now Aptos has joined hands with Jump Crypto to launch Shelby, aiming to take decentralized storage to the next level in the hot data track. So can decentralized storage make a comeback and serve a wide range of use cases, or is it just another round of hype? Starting from the development paths of Filecoin, Arweave, Walrus and Shelby, this article analyzes how the narrative of decentralized storage has changed and tries to answer one question: how far are we from the popularization of decentralized storage?

Filecoin: Storage is the appearance, mining is the essence

Filecoin was one of the first altcoins to rise, and its development direction naturally revolves around decentralization. This is a common trait of early altcoins: looking for the meaning of decentralization in various traditional sectors. Filecoin is no exception. It ties storage to decentralization, which naturally points at the weakness of centralized storage: the trust assumption placed on centralized data storage providers. What Filecoin does, therefore, is move storage from a centralized model to a decentralized one. However, some of the things sacrificed along the way to achieve decentralization became the pain points that later projects such as Arweave and Walrus set out to solve. To understand why Filecoin is merely a mining coin, one needs to understand the objective limitations that make its underlying technology, IPFS, unsuitable for hot data.

IPFS: Decentralized architecture, but stopped at the transmission bottleneck

IPFS (InterPlanetary File System) was launched as early as 2015. It aims to replace the traditional HTTP protocol through content addressing. The biggest drawback of IPFS is that retrieving data is extremely slow. In an era when traditional data service providers achieve millisecond-level responses, IPFS can still take more than ten seconds to fetch a file, which makes it hard to promote in practical applications. This also explains why, apart from a few blockchain projects, it is rarely adopted by traditional industries.
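
To make "content addressing" concrete, here is a minimal sketch in which data is looked up by the hash of its bytes rather than by a server location. The `ContentStore` class is hypothetical and uses a plain SHA-256 hex digest as the address; real IPFS uses CIDs with multihash encoding and a distributed lookup, which this toy does not model.

```python
import hashlib


class ContentStore:
    """Toy content-addressed store (hypothetical, not the IPFS API)."""

    def __init__(self):
        self._blocks = {}

    def put(self, data: bytes) -> str:
        # The address is derived from the content itself.
        address = hashlib.sha256(data).hexdigest()
        self._blocks[address] = data
        return address

    def get(self, address: str) -> bytes:
        data = self._blocks[address]
        # The reader can re-hash the bytes to verify integrity
        # without trusting whoever served them.
        if hashlib.sha256(data).hexdigest() != address:
            raise ValueError("content does not match its address")
        return data


store = ContentStore()
addr = store.put(b"hello decentralized storage")
same_addr = store.put(b"hello decentralized storage")  # identical content, identical address
```

Because the address depends only on the bytes, identical content is deduplicated automatically. The flip side is that finding which peer actually holds a given address requires a network lookup, which is where the latency described above comes from.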

The underlying P2P protocol of IPFS is mainly suitable for "cold data", that is, static content that does not change frequently, such as videos, pictures, and documents. However, when it comes to processing hot data, such as dynamic web pages, online games, or artificial intelligence applications, the P2P protocol has no obvious advantages over traditional CDNs.

However, although IPFS itself is not a blockchain, its directed acyclic graph (DAG) design is highly compatible with many public chains and Web3 protocols, making it a natural fit as an underlying framework for blockchain projects. So even without practical value, it was good enough as a base layer to carry the blockchain narrative. Early altcoin projects only needed a framework that could run smoothly in order to start chasing grand ambitions. But once Filecoin had developed to a certain stage, the shortcomings inherited from IPFS began to hold it back.

Mining Coin Logic Under the Storage Cloak

The original intention of IPFS is to allow users to store data while also being part of the storage network. However, without economic incentives, it is difficult for users to voluntarily use this system, let alone become active storage nodes. This means that most users will only store files on IPFS, but will not contribute their own storage space or store other people's files. It is in this context that Filecoin came into being.

There are three main roles in Filecoin's token economic model: users are responsible for paying fees to store data; storage miners receive token incentives for storing user data; and retrieval miners provide data when users need it and receive incentives.

This model leaves room for malicious behavior. After providing storage space, storage miners may fill it with garbage data to collect rewards. Since this garbage data will never be retrieved, losing it does not trigger the miner's penalty mechanism, so miners can delete the garbage data and repeat the process. Filecoin's Proof-of-Replication consensus can only ensure that user data is not secretly deleted; it cannot prevent miners from filling space with garbage data.

The operation of Filecoin relies heavily on miners' continued investment in the token economy, rather than on real end-user demand for distributed storage. Although the project is still being iterated on, at this stage Filecoin's ecosystem fits the definition of a storage project driven by "mining coin logic" rather than by applications.

Arweave: Success and failure due to long-termism

If the design goal of Filecoin is to build an incentivized and provable decentralized "data cloud" shell, then Arweave goes to the extreme in another direction of storage: providing the ability to permanently store data. Arweave does not attempt to build a distributed computing platform. Its entire system revolves around a core assumption that important data should be stored once and remain in the network forever. This extreme long-termism makes Arweave very different from Filecoin from the mechanism to the incentive model, from the hardware requirements to the narrative perspective.

Arweave uses Bitcoin as a learning object, trying to continuously optimize its own permanent storage network over a long period of years. Arweave does not care about marketing, competitors, and market trends. It just keeps moving forward on the road of iterative network architecture, even if no one cares, because this is the essence of the Arweave development team: long-termism. Thanks to long-termism, Arweave was enthusiastically sought after in the last bull market; also because of long-termism, even if it hits the bottom, Arweave may still survive several rounds of bull and bear markets. But does Arweave have a place in the future of decentralized storage? The value of permanent storage can only be proven by time.

From version 1.5 to the latest version 2.9, the Arweave mainnet has worked to let a wider range of miners participate in the network at the lowest possible cost and to motivate miners to store as much data as possible, so that the robustness of the whole network keeps improving, even though it has lost the market's attention. Arweave is well aware that it does not match market preferences and takes a conservative approach. It does not court mining groups, and its ecosystem is completely stagnant. It upgrades the mainnet at minimal cost and keeps lowering the hardware threshold without compromising network security.

Review of the upgrade process from 1.5 to 2.9

Arweave 1.5 exposed a vulnerability that allowed miners to rely on GPU stacking rather than real storage to optimize the probability of generating blocks. To curb this trend, version 1.7 introduced the RandomX algorithm, which restricted the use of specialized computing power and instead required general-purpose CPUs to participate in mining, thereby weakening the centralization of computing power.

In version 2.0, Arweave used SPoA (Succinct Proofs of Access) to convert the data proof into a concise Merkle tree path, and introduced format 2 transactions to reduce the synchronization burden. This architecture relieved network bandwidth pressure and significantly improved node coordination. However, some miners could still avoid the responsibility of holding real data by relying on centralized high-speed storage pools.
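
The idea of proving possession of a chunk with "a concise path of a Merkle tree" can be sketched as follows. This is a generic Merkle inclusion proof, not Arweave's actual SPoA format: the hash function, the pairing rule for odd levels, and all function names are assumptions made for illustration.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def build_levels(chunks):
    """Return every tree level, leaves first. An odd node is paired with itself."""
    level = [h(c) for c in chunks]
    levels = [level]
    while len(level) > 1:
        if len(level) % 2 == 1:
            level = level + [level[-1]]
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        levels.append(level)
    return levels


def merkle_proof(levels, index):
    """Collect one sibling hash per level: the 'concise path' for a chunk."""
    proof = []
    for level in levels[:-1]:
        sibling = index ^ 1
        if sibling >= len(level):
            sibling = index  # odd node paired with itself
        proof.append((level[sibling], index % 2 == 0))  # (hash, node_is_left)
        index //= 2
    return proof


def verify(chunk, proof, root):
    """Recompute the root from the chunk and its path."""
    node = h(chunk)
    for sibling, node_is_left in proof:
        node = h(node + sibling) if node_is_left else h(sibling + node)
    return node == root


chunks = [f"chunk-{i}".encode() for i in range(5)]
levels = build_levels(chunks)
root = levels[-1][0]
proof = merkle_proof(levels, 4)
```

The proof grows with the logarithm of the number of chunks, which is why a verifier only needs the root and a handful of hashes rather than the whole data set.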

To correct this bias, version 2.4 launched the SPoRA mechanism, which introduced a global index and slow-hash random access so that miners must actually hold data blocks to take part in effective block generation, weakening the effect of stacking computing power at the mechanism level. As a result, miners began to pay attention to storage access speed, driving the adoption of SSDs and other high-speed read/write devices. Version 2.6 introduced hash chains to control the rhythm of block generation, limiting the marginal benefit of high-performance equipment and giving small and medium-sized miners room to participate fairly.

Subsequent versions further enhanced network collaboration capabilities and storage diversity: 2.7 added collaborative mining and mining pool mechanisms to enhance the competitiveness of small miners; 2.8 introduced a composite packaging mechanism to allow large-capacity, low-speed devices to participate flexibly; 2.9 introduced a new packaging process in the replica_2_9 format, greatly improving efficiency and reducing computing dependence, completing the closed loop of the data-oriented mining model.

Overall, Arweave's upgrade path clearly presents its storage-oriented long-term strategy: while continuously resisting the trend of computing power concentration, it continues to lower the threshold for participation and ensure the possibility of long-term operation of the protocol.

Walrus: Is embracing hot data hype or hidden secrets?

Walrus is completely different from Filecoin and Arweave in terms of design ideas. Filecoin’s starting point is to create a decentralized and verifiable storage system, at the cost of cold data storage; Arweave’s starting point is to create an on-chain Alexandria Library that can store data permanently, at the cost of too few scenarios; Walrus’ starting point is to optimize the hot data storage protocol for storage overhead.

Magical modification of erasure code: cost innovation or old wine in a new bottle?

In terms of storage cost design, Walrus believes that the storage costs of Filecoin and Arweave are unreasonable. Both adopt a fully replicated architecture, whose main advantage is that each node holds a complete copy, with strong fault tolerance and independence between nodes. This type of architecture ensures that the network still has data availability even if some nodes are offline. However, it also means that the system needs multiple redundant copies to stay robust, which in turn pushes up storage costs. In Arweave's design in particular, the consensus mechanism itself encourages redundant storage across nodes to enhance data security. Filecoin, by contrast, is more flexible in cost control, but the trade-off is that some low-cost storage may carry a higher risk of data loss. Walrus tries to find a balance between the two. Its mechanism controls replication costs while enhancing availability through structured redundancy, thereby establishing a new compromise between data availability and cost efficiency.

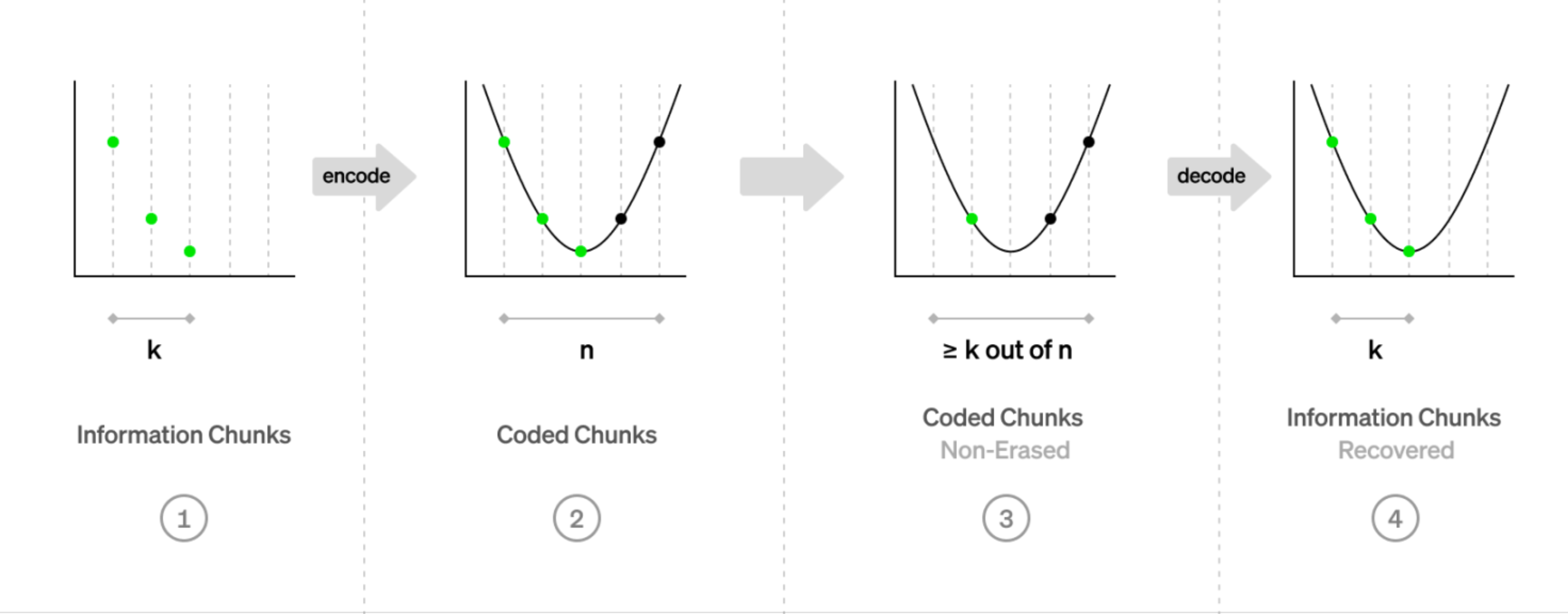

Walrus's in-house RedStuff is a key technology for reducing node redundancy. It is derived from Reed-Solomon (RS) coding, a very traditional erasure code algorithm. Erasure coding is a technique that expands a data set by adding redundant fragments (the erasure code), from which the original data can be reconstructed. From CD-ROMs to satellite communications to QR codes, it is used frequently in daily life.

Erasure codes allow the user to take a block, say 1 MB in size, and "enlarge" it to 2 MB in size, where the extra 1 MB is special data called an erasure code. If any bytes in the block are lost, the user can easily recover those bytes through the code. Even if up to 1 MB of a block is lost, you can recover the entire block. The same technology allows a computer to read all the data on a CD-ROM, even if it is damaged.

The most commonly used erasure code is RS coding. It starts with k information blocks, constructs a related polynomial, and evaluates it at different x coordinates to obtain the coded blocks. With RS erasure codes, the probability that random losses destroy enough blocks to make the data unrecoverable is very small.

For example: a file is divided into 6 data blocks and 4 check blocks, 10 blocks in total. As long as any 6 of them survive, the original data can be completely restored.

Advantages: It has strong fault tolerance and is widely used in CD/DVD, fault-tolerant disk arrays (RAID), and cloud storage systems (such as Azure Storage and Facebook F4).

Disadvantages: Decoding calculations are complex and costly; not suitable for frequently changing data scenarios. Therefore, it is usually used for data recovery and scheduling in off-chain centralized environments.
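
The 6-data, 4-check example above can be sketched with polynomial interpolation. This is a minimal illustration over the small prime field GF(257), with one symbol per block and hypothetical function names. Production RS implementations typically work over GF(2^8) with far faster arithmetic.

```python
P = 257  # small prime field, large enough to hold one byte per symbol


def lagrange_eval(points, x):
    """Evaluate at x the unique polynomial of degree < len(points) through points (mod P)."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        total = (total + yi * num * pow(den, -1, P)) % P
    return total


def encode(data, n):
    """Systematic encoding: shards 0..k-1 carry the data, shards k..n-1 are check symbols."""
    points = list(enumerate(data))
    return [(x, lagrange_eval(points, x)) for x in range(n)]


def decode(shards, k):
    """Rebuild the k data symbols from any k surviving (x, y) shards."""
    if len(shards) < k:
        raise ValueError("not enough shards to recover the data")
    points = shards[:k]
    return [lagrange_eval(points, x) for x in range(k)]


data = list(b"walrus")                                # 6 data symbols
shards = encode(data, 10)                             # 6 data + 4 check symbols
survivors = [shards[i] for i in (1, 3, 4, 6, 8, 9)]   # four shards lost
recovered = bytes(decode(survivors, 6))
```

Any 6 of the 10 shards pin down the same degree-5 polynomial, so the choice of survivors does not matter. The nested loops in `lagrange_eval` also hint at the decoding cost listed under the disadvantages.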

Under the decentralized architecture, Storj and Sia have adjusted the traditional RS coding to adapt to the actual needs of the distributed network. Walrus also proposed its own variant, the RedStuff coding algorithm, to achieve a lower-cost and more flexible redundant storage mechanism.

What is the biggest feature of RedStuff? **By improving the erasure coding algorithm, Walrus is able to quickly and robustly encode unstructured data blocks into smaller shards, which are distributed and stored in a network of storage nodes. Even if up to two-thirds of the shards are lost, the original data blocks can be quickly reconstructed using some of the shards.** This is possible while keeping the replication factor at only 4x to 5x.

Therefore, it is reasonable to define Walrus as a lightweight redundancy and recovery protocol redesigned around decentralized scenarios. Compared with traditional erasure codes (such as Reed-Solomon), RedStuff no longer pursues strict mathematical consistency, but makes realistic trade-offs in data distribution, storage verification, and computational costs. This model abandons the instant decoding mechanism required for centralized scheduling and instead verifies whether a node has a specific data copy through on-chain proof, thereby adapting to a more dynamic and marginalized network structure.

The core of RedStuff's design is to split the data into two categories: primary slices and secondary slices. The primary slices are used to restore the original data. Their generation and distribution are strictly constrained, the recovery threshold is f+1, and 2f+1 signatures are required as an availability endorsement. The secondary slices are generated through simple operations such as XOR combinations. Their function is to provide elastic fault tolerance and improve the robustness of the overall system. This structure essentially relaxes the requirements for data consistency, allowing different nodes to store different versions of data for a short period of time and emphasizing the practical path of "eventual consistency". Like the relaxed requirements for recall blocks in systems such as Arweave, this reduces the burden on the network to some extent, but it also weakens the immediate availability and integrity of data.
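
To see what the f+1 and 2f+1 thresholds mean in numbers, here is a small arithmetic sketch. It assumes a committee of n = 3f+1 storage nodes, which is the usual Byzantine fault tolerance setting. The text above does not state n, so treat that relation and the function name as assumptions.

```python
def thresholds(n: int) -> dict:
    """Derive the thresholds for n nodes, assuming n >= 3f + 1 (an assumption, see above)."""
    f = (n - 1) // 3  # largest f such that 3f + 1 <= n
    return {
        "faulty_tolerated": f,
        "recovery_threshold": f + 1,       # primary slices needed to rebuild the data
        "availability_quorum": 2 * f + 1,  # signatures needed as availability endorsement
    }


small = thresholds(10)
large = thresholds(100)
# Fraction of slices that may be lost while recovery is still possible
tolerable_loss = (100 - large["recovery_threshold"]) / 100
```

Under this assumption, a 100-node committee needs 34 slices to recover and 67 signatures to endorse availability, which is consistent with the claim that roughly two-thirds of the shards can be lost.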

It cannot be ignored that although RedStuff achieves effective storage in low-computing power and low-bandwidth environments, it is essentially still a "variant" of the erasure code system. It sacrifices some data reading certainty in exchange for cost control and scalability in a decentralized environment. However, at the application level, whether this architecture can support large-scale, high-frequency interactive data scenarios remains to be seen. Furthermore, RedStuff has not really broken through the long-standing coding and computing bottleneck of erasure codes, but has avoided the high coupling points of traditional architectures through structural strategies. Its innovation is more reflected in the combinatorial optimization on the engineering side, rather than the subversion of the basic algorithm level.

Therefore, RedStuff is more like a "reasonable modification" for the current decentralized storage reality. It does bring improvements in redundancy costs and operating loads, allowing edge devices and non-high-performance nodes to participate in data storage tasks. However, in business scenarios with higher requirements for large-scale applications, general computing adaptation and consistency, its capability boundaries are still relatively obvious. This makes Walrus' innovation more like an adaptive transformation of the existing technology system rather than a decisive breakthrough in promoting the migration of the decentralized storage paradigm.

Sui and Walrus: Can high-performance public chains drive the practical application of storage?

From Walrus’ official research article, you can see its target scenario: “Walrus was originally designed to provide a solution for storing large binary files (Blobs), which are the lifeblood of many decentralized applications.”

So-called large blob data usually refers to binary objects with large volume and unstable structure, such as videos, audios, images, model files or software packages.

In the crypto context, it refers mostly to the images and videos in NFTs and social media content. This is also the main application direction of Walrus.

Although the article also mentions the potential uses of AI model dataset storage and data availability layer (DA), the phased decline of Web3 AI has left few related projects, and the number of protocols that truly adopt Walrus in the future may be very limited.

As for the DA layer, whether Walrus can serve as an effective alternative will have to wait until mainstream projects such as Celestia regain market attention before its feasibility can be verified.

Therefore, the core positioning of Walrus can be understood as a hot storage system that serves content assets such as NFTs, emphasizing dynamic calling, real-time updating and version management capabilities.

This also explains why Walrus needs to rely on Sui: with the help of Sui's high-performance chain capabilities, Walrus can build a high-speed data retrieval network, significantly reducing operating costs without developing a high-performance public chain on its own, thereby avoiding direct competition with traditional cloud storage services in terms of unit cost.

According to official data, Walrus' storage cost is about one-fifth of traditional cloud services. Although it is dozens of times more expensive than Filecoin and Arweave, its goal is not to pursue extremely low costs, but to build a decentralized hot storage system that can be used in real business scenarios. Walrus itself runs on a PoS network, and its core responsibility is to verify the honesty of storage nodes and provide the most basic security for the entire system.

As for whether Sui really needs Walrus, that question still sits mostly at the level of ecosystem narrative. **If the main purpose is only financial settlement, Sui does not urgently need off-chain storage support.** However, if it hopes to carry more complex on-chain scenarios in the future, such as AI applications, content assetization, and composable agents, the storage layer will be indispensable for providing context and indexing capabilities. High-performance chains can handle complex state models, but these states need to be bound to verifiable data to build a trustworthy content network.

Shelby: Dedicated fiber network completely unleashes Web3 application scenarios

Among the biggest technical bottlenecks currently facing Web3 applications, "read performance" has always been a shortcoming that is difficult to overcome.

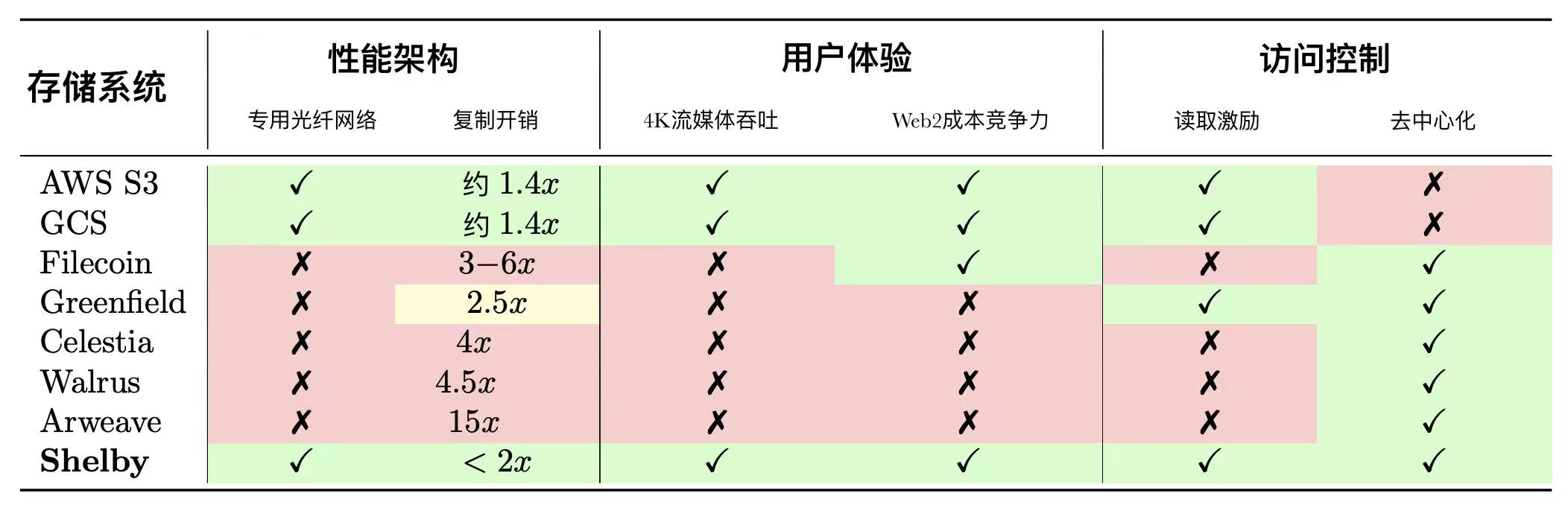

Whether it is video streaming, RAG systems, real-time collaboration tools, or AI model inference engines, they all rely on low-latency, high-throughput access to hot data. Although decentralized storage protocols (from Arweave, Filecoin to Walrus) have made progress in data persistence and trustlessness, they are still unable to get rid of the limitations of high latency, unstable bandwidth, and uncontrollable data scheduling because they run on the public Internet.

Shelby tried to solve the problem at its source.

First, the Paid Reads mechanism directly reshapes the "read operation" dilemma in decentralized storage. In traditional systems, reading data is almost free, and the lack of an effective incentive mechanism means service nodes are generally slow to respond and cut corners, leaving the actual user experience far behind Web2.

Shelby directly links user experience with service node revenue by introducing a pay-per-read model: the faster and more stably a node returns data, the more rewards it can earn.

This model is not an "incidental economic design" but the core logic of Shelby's performance design - without incentives, there will be no reliable performance; only with incentives can there be sustainable improvement in service quality.

Secondly, one of the biggest technological breakthroughs proposed by Shelby is the introduction of a dedicated fiber network, which is equivalent to building a high-speed rail network for instant reading of Web3 hot data.

This architecture completely bypasses the public transport layer that Web3 systems generally rely on, and directly deploys storage nodes and RPC nodes on a high-performance, low-congestion, physically isolated transmission backbone. This not only significantly reduces the latency of cross-node communication, but also ensures the predictability and stability of transmission bandwidth. Shelby's underlying network structure is closer to the dedicated line deployment mode between AWS internal data centers, rather than the "upload to a miner node" logic of other Web3 protocols.

Source: Shelby White Paper

This network-level architectural reversal makes Shelby the first decentralized hot storage protocol that is truly capable of carrying a Web2-level experience. When users read a 4K video on Shelby, call the embedding data of a large language model, or trace back a transaction log, they no longer have to endure the second-level delays common in cold data systems, but can get sub-second responses. For service nodes, the dedicated network not only improves service efficiency, but also greatly reduces bandwidth costs, making the "pay-per-read" mechanism truly economically feasible, thereby incentivizing the system to evolve towards higher performance rather than higher storage.

It can be said that the introduction of a dedicated fiber optic network is the key support for Shelby to "look like AWS, but be Web3 at heart". It not only breaks the natural contradiction between decentralization and performance, but also opens up the possibility of real implementation of Web3 applications in terms of high-frequency reading, high-bandwidth scheduling, and low-cost edge access.

In addition, to balance data durability against cost, Shelby uses an Efficient Coding Scheme built on Clay Codes. Through a coding structure that is mathematically optimal in both the MSR (minimum storage regenerating) and MDS (maximum distance separable) senses, it achieves storage redundancy below 2x while still maintaining eleven nines of durability and 99.9% availability. At a time when most Web3 storage protocols are still stuck at redundancy rates of 5x to 15x, Shelby is not only more efficient technically but also more cost-competitive. This also means that for dApp developers who really care about cost optimization and resource scheduling, Shelby offers a realistic option that is both cheap and fast.
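
The trade-off between redundancy and durability can be illustrated with a rough calculation. The sketch below assumes an MDS code in which any k of n shards suffice, and that each shard is lost independently with probability p. The parameters (n=16, k=10, p=0.01) are hypothetical and are not Shelby's published configuration; real durability figures also depend on repair speed and correlated failures, which this ignores.

```python
from math import comb


def overhead(n: int, k: int) -> float:
    """Storage redundancy factor of an (n, k) code."""
    return n / k


def loss_probability(n: int, k: int, p: float) -> float:
    """Probability that more than n - k shards are lost, i.e. the data is unrecoverable."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(n - k + 1, n + 1))


# Hypothetical erasure-coded layout: 10 data shards + 6 parity shards
coded_overhead = overhead(16, 10)
coded_loss = loss_probability(16, 10, 0.01)

# Plain 3x replication is the special case n=3, k=1
replica_overhead = overhead(3, 1)
replica_loss = loss_probability(3, 1, 0.01)
```

Under these assumptions the coded layout stores less data than 3x replication yet is far less likely to lose it, which is the intuition behind pairing sub-2x redundancy with a high durability target.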

Summary

Looking at the evolution of Filecoin, Arweave, Walrus to Shelby, we can clearly see that: **The narrative of decentralized storage has gradually moved from the technological utopia of "existence is reasonable" to the realistic route of "availability is justice".** Early Filecoin used economic incentives to drive hardware participation, but real user needs have been marginalized for a long time; Arweave chose extreme permanent storage, but it became more and more isolated in the silence of the application ecosystem; Walrus tried to find a new balance between cost and performance, but there are still questions about the construction of landing scenarios and incentive mechanisms. It was not until the emergence of Shelby that decentralized storage made a systematic response to "Web2-level availability" for the first time - from the dedicated fiber optic network at the transmission layer, to the efficient erasure code design at the computing layer, to the pay-per-read incentive mechanism, these capabilities that were originally exclusive to centralized cloud platforms began to be reconstructed in the Web3 world.

The emergence of Shelby does not mean the end of the problem. It does not solve all challenges: developer ecology, permission management, terminal access and other issues are still ahead. But its significance lies in that it has opened up a possible path of "no compromise in performance" for the decentralized storage industry, breaking the binary paradox of "either anti-censorship or easy to use".

The popularization of decentralized storage will not only rely on the popularity of concepts or token speculation, but must move towards the "usable, integrable, and sustainable" application-driven stage. At this stage, whoever can first solve the real pain points of users will be able to reshape the pattern of the next round of infrastructure narrative. From mining coin logic to usage logic, Shelby's breakthrough may mark the end of an era - and the beginning of another era.

About Movemaker

Movemaker is the first official community organization authorized by the Aptos Foundation and jointly initiated by Ankaa and BlockBooster, focusing on promoting the construction and development of the Aptos Chinese ecosystem. As the official representative of Aptos in the Chinese region, Movemaker is committed to building a diverse, open and prosperous Aptos ecosystem by connecting developers, users, capital and many ecological partners.

Disclaimer:

This article/blog is for informational purposes only and represents the personal opinions of the author and does not necessarily represent the position of Movemaker. This article is not intended to provide: (i) investment advice or investment recommendations; (ii) an offer or solicitation to buy, sell or hold digital assets; or (iii) financial, accounting, legal or tax advice. Holding digital assets, including stablecoins and NFTs, is extremely risky and may fluctuate in price and become worthless. You should carefully consider whether trading or holding digital assets is appropriate for you based on your financial situation. If you have questions about your specific situation, please consult your legal, tax or investment advisor. The information provided in this article (including market data and statistical information, if any) is for general information only. Reasonable care has been taken in the preparation of these data and charts, but no responsibility is assumed for any factual errors or omissions expressed therein.