The AI boom brought by ChatGPT: How blockchain technology can solve the challenges and bottlenecks of AI development

Original compilation: BlockTurbo

The field of generative artificial intelligence (AI) has been the undisputed hot spot for the past two weeks, with groundbreaking new releases and cutting-edge integrations emerging. OpenAI released the highly anticipated GPT-4 model, Midjourney released its latest V5 model, and Stanford released the Alpaca 7B language model. Meanwhile, Google rolled out generative AI across its Workspace suite, Anthropic launched its AI assistant Claude, and Microsoft integrated its powerful generative AI tool, Copilot, into its Microsoft 365 suite.

The pace of AI development and adoption is accelerating as businesses begin to realize the value of AI and automation and the need to adopt these technologies to remain competitive in the marketplace.

Although AI development appears to be progressing smoothly, there are still some underlying challenges and bottlenecks that need to be addressed. As more businesses and consumers embrace AI, bottlenecks in computing power are emerging. The amount of computing required for AI systems is doubling every few months, while the supply of computing resources struggles to keep pace. Additionally, the cost of training large-scale AI models continues to soar, increasing by about 3,100% annually over the past decade.

Artificial Intelligence (AI) and Machine Learning (ML) Fundamentals

The field of AI can be daunting, with technical terms like deep learning, neural networks, and foundation models adding to its complexity. For now, let's simplify these concepts for easier understanding.

Artificial intelligence is a branch of computer science that involves developing algorithms and models that enable computers to perform tasks that normally require human intelligence, such as perception, reasoning, and decision making.

Machine learning (ML) is a subset of AI that involves training algorithms to recognize patterns in data and make predictions based on those patterns.

Deep learning is a type of ML that involves the use of neural networks, which consist of layers of interconnected nodes that work together to analyze input data and generate output.
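
To make "layers of interconnected nodes" concrete, here is a minimal sketch of a forward pass through a tiny two-layer neural network in plain Python. The weights are hypothetical hand-picked values; in a real network they would be learned during training.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]  # one row of weights per node in the layer


def dense(inputs: Vector, weights: Matrix, biases: Vector) -> Vector:
    """Fully connected layer: every node takes a weighted sum of all inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, biases)]


def relu(v: Vector) -> Vector:
    """Non-linearity applied between layers."""
    return [max(0.0, x) for x in v]


def sigmoid(v: Vector) -> Vector:
    """Squashes the final output into the range (0, 1)."""
    return [1.0 / (1.0 + math.exp(-x)) for x in v]


# Hypothetical weights: 2 inputs -> 3 hidden nodes -> 1 output node.
HIDDEN_W = [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]]
HIDDEN_B = [0.0, 0.1, 0.0]
OUTPUT_W = [[1.0, -1.0, 0.5]]
OUTPUT_B = [0.0]


def forward(x: Vector) -> Vector:
    hidden = relu(dense(x, HIDDEN_W, HIDDEN_B))        # layer 1 analyzes the input
    return sigmoid(dense(hidden, OUTPUT_W, OUTPUT_B))  # layer 2 generates the output


print(forward([1.0, 2.0]))  # a single value, about 0.438
```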

AI and ML Industry Issues

Advances in AI are primarily driven by three factors:

Algorithm innovation: Researchers develop new model architectures and training techniques.

Data: AI models rely on large datasets as fuel for training, enabling them to learn from patterns and relationships in the data.

Compute: The complex calculations required to train AI models demand enormous processing power.

However, two main problems hinder the development of artificial intelligence. The first concerns compute. In 2021, access to data was the number one challenge AI businesses faced in their AI development. Over the past year, computing-related issues have overtaken data, especially the inability to obtain computing resources on demand as demand has surged.

The second problem is the inefficiency of algorithmic innovation. While researchers continue to make incremental improvements by building on previous model designs, the intelligence, or patterns, extracted by those models is not reused and is effectively lost.

Computing bottleneck

Training foundation models is resource-intensive, often involving large numbers of GPUs running for extended periods. For example, Stability AI uses 4,000 Nvidia A100 GPUs running in AWS's cloud to train its AI models, at a cost of over $50 million a month. OpenAI's GPT-3, meanwhile, cost $12 million to train using 1,000 Nvidia V100 GPUs.

AI companies typically face two choices: invest in their own hardware and sacrifice scalability, or choose a cloud provider and pay top dollar. While larger companies can afford the latter option, smaller companies may not have that luxury. As the cost of capital rises, startups are being forced to cut back on cloud spending, even as the cost of scaling infrastructure for large cloud providers remains largely the same.

Inefficiency and lack of collaboration

More and more AI development is being done in secret at big tech companies rather than in academia. This trend has reduced collaboration in the field, with companies such as Microsoft-backed OpenAI and Google's DeepMind competing with each other and keeping their models private.

This lack of collaboration leads to inefficiency. For example, an independent research team that wanted to develop a more powerful version of OpenAI's GPT-4 would need to retrain the model from scratch, essentially relearning everything GPT-4 has already learned. Given that GPT-3 alone cost $12 million to train, this puts smaller ML research labs at a disadvantage and pushes the future of AI development further into the hands of big tech companies.

Decentralized Computing Networks for Machine Learning

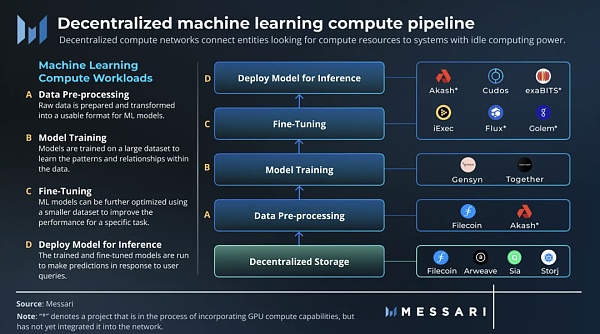

A decentralized computing network connects entities seeking computing resources to systems with spare computing power by incentivizing the contribution of CPU and GPU resources to the network. Since there is no additional cost for individuals or organizations to provide their idle resources, decentralized networks can offer lower prices compared to centralized providers.
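
The matching role described above can be sketched as a simple order book: providers post idle GPUs with an asking price, and a request is filled from the cheapest offers first. This is a hypothetical illustration; the `Offer` type, the greedy cheapest-first rule, and the prices are assumptions, not any specific network's protocol.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Offer:
    provider: str
    gpus: int               # idle GPUs this provider contributes
    price_per_hour: float   # asking price per GPU-hour (hypothetical units: USD)


def match(gpus_needed: int, max_price: float, offers: List[Offer]) -> Dict[str, int]:
    """Fill a request greedily from the cheapest offers priced at or below max_price."""
    allocation: Dict[str, int] = {}
    remaining = gpus_needed
    for offer in sorted(offers, key=lambda o: o.price_per_hour):
        # Offers are sorted by price, so once one is too expensive, all later ones are too.
        if remaining == 0 or offer.price_per_hour > max_price:
            break
        take = min(offer.gpus, remaining)
        if take > 0:
            allocation[offer.provider] = take
            remaining -= take
    if remaining > 0:
        raise ValueError(f"only {gpus_needed - remaining} of {gpus_needed} GPUs available")
    return allocation


offers = [
    Offer("datacenter", gpus=64, price_per_hour=1.10),
    Offer("idle-gaming-pc", gpus=1, price_per_hour=0.30),
    Offer("former-eth-miner", gpus=8, price_per_hour=0.35),
]
print(match(5, max_price=0.50, offers=offers))  # {'idle-gaming-pc': 1, 'former-eth-miner': 4}
```

A real network would also need to handle provider reliability, job scheduling, and payment, which this sketch leaves out.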

There are two main types of decentralized computing networks: general-purpose and special-purpose. A general-purpose computing network operates like a decentralized cloud, providing computing resources for a wide range of applications. Special-purpose computing networks, on the other hand, are tailored to specific use cases. For example, a rendering network is a dedicated computing network focused on rendering workloads.

Machine Learning Computing Workloads

Machine learning can be broken down into four main computational workloads:

Data preprocessing: Raw data is prepared and transformed into a format usable by ML models, which typically involves activities such as data cleaning and normalization.

Training: Machine learning models are trained on large datasets to learn patterns and relationships in the data. During training, the model's parameters (weights) are adjusted to minimize error.

Fine-tuning: ML models can be further optimized with smaller datasets to improve performance on specific tasks.

Inference: The trained and fine-tuned model is run to make predictions in response to user queries.
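
The four workloads can be illustrated end to end on a toy one-feature linear model, y = w * x + b, in plain Python. This is a minimal sketch: the dataset, learning rates, and step counts are hypothetical, and real ML workloads involve far larger models and datasets.

```python
from typing import List, Optional, Tuple

Row = Tuple[Optional[float], Optional[float]]  # raw (feature, label); None marks a missing value
Example = Tuple[float, float]                   # cleaned, normalized (feature, label)
Params = Tuple[float, float]                    # (weight, bias) of the model y = w * x + b


def preprocess(raw: List[Row], lo: float, hi: float) -> List[Example]:
    """Data preprocessing: drop incomplete rows (cleaning) and min-max scale x (normalization)."""
    return [((x - lo) / (hi - lo), y) for x, y in raw if x is not None and y is not None]


def train(data: List[Example], params: Params = (0.0, 0.0), lr: float = 0.5, steps: int = 500) -> Params:
    """Training: gradient descent that adjusts w and b to minimize mean squared error."""
    w, b = params
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b


def fine_tune(data: List[Example], params: Params) -> Params:
    """Fine-tuning: start from trained weights and run a shorter, gentler pass on a small dataset."""
    return train(data, params, lr=0.2, steps=200)


def infer(params: Params, x: float, lo: float, hi: float) -> float:
    """Inference: preprocess the query the same way, then evaluate the model."""
    w, b = params
    return w * (x - lo) / (hi - lo) + b


raw = [(0.0, 1.0), (1.0, 3.0), (None, 4.0), (2.0, 5.0), (3.0, 7.0), (4.0, 9.0)]  # y = 2x + 1
lo, hi = 0.0, 4.0  # feature range of the training data, reused for fine-tuning and inference
base = train(preprocess(raw, lo, hi))
tuned = fine_tune(preprocess([(0.0, 1.5), (4.0, 9.5)], lo, hi), base)  # small dataset: y = 2x + 1.5
print(round(infer(base, 2.5, lo, hi), 3), round(infer(tuned, 2.5, lo, hi), 3))  # 6.0 6.5
```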

Machine Learning Dedicated Computing Network

Training requires a special-purpose computing network because of two challenges: parallelization and validation.

Training ML models is state-dependent: the result of each computation step depends on the current state of the model, which makes it harder to take advantage of distributed GPU networks. A network designed specifically for parallel training of ML models is therefore required.
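
The state dependence can be seen in a minimal data-parallel sketch for a one-parameter model y = w * x. Within a single step, the gradient can be split across several workers and summed; but each step needs the weight produced by the previous step, so the steps themselves must run in order. The model, data, and learning rate are hypothetical, and a real network would also have to move gradients between machines.

```python
from typing import List, Tuple

Batch = List[Tuple[float, float]]  # (x, y) pairs for the one-parameter model y = w * x


def shard_gradient(w: float, shard: Batch) -> float:
    """Sum of squared-error gradients over one shard; each worker can compute this independently."""
    return sum(2 * (w * x - y) * x for x, y in shard)


def train_step(w: float, batch: Batch, workers: int, lr: float = 0.01) -> float:
    """One step: split the batch across workers, then combine their partial gradients.

    The split is parallel, but the update needs the current w, so step t + 1
    cannot begin until step t has finished. That is the state dependence.
    """
    shards = [batch[i::workers] for i in range(workers)]
    total = sum(shard_gradient(w, s) for s in shards)  # in a real network: gathered from remote GPUs
    return w - lr * total / len(batch)


def train(batch: Batch, workers: int, steps: int = 100) -> float:
    w = 0.0
    for _ in range(steps):
        w = train_step(w, batch, workers)  # each iteration consumes the previous state
    return w


batch = [(float(x), 3.0 * x) for x in range(1, 9)]  # hypothetical data with true w = 3
print(train(batch, workers=1), train(batch, workers=4))  # both converge to approximately 3.0
```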

The more important challenge is validation. To build a trust-minimized ML model training network, the network needs a way to verify computational work without repeating the entire computation, which would waste time and resources.
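
One generic way to check work without redoing all of it is probabilistic spot-checking: the worker publishes intermediate checkpoints, and a verifier recomputes only a few randomly chosen segments. The sketch below is a hypothetical illustration of that idea on a toy update rule; it is not Gensyn's protocol, and the `cheat_at` parameter exists only to simulate a dishonest worker.

```python
import random
from typing import Callable, List

Update = Callable[[float], float]


def run_segment(state: float, steps: int, update: Update) -> float:
    """Apply the training update `steps` times starting from `state`."""
    for _ in range(steps):
        state = update(state)
    return state


def solve(init: float, segments: int, k: int, update: Update, cheat_at: int = -1) -> List[float]:
    """Worker: train and publish a checkpoint after every k steps.

    cheat_at (hypothetical) forges the checkpoint at the end of that segment,
    simulating a worker that skipped the work.
    """
    checkpoints = [init]
    for i in range(segments):
        nxt = run_segment(checkpoints[-1], k, update)
        if i == cheat_at:
            nxt += 1.0
        checkpoints.append(nxt)
    return checkpoints


def spot_check(checkpoints: List[float], k: int, update: Update, samples: int, rng: random.Random) -> bool:
    """Verifier: recompute only `samples` random segments instead of the whole run."""
    for i in rng.sample(range(len(checkpoints) - 1), samples):
        if run_segment(checkpoints[i], k, update) != checkpoints[i + 1]:
            return False
    return True


def update(w: float) -> float:
    # Toy gradient step on the loss (w - 3)^2 / 2 with learning rate 0.1.
    return w - 0.1 * (w - 3.0)


rng = random.Random(0)
honest = solve(0.0, segments=20, k=50, update=update)
forged = solve(0.0, segments=20, k=50, update=update, cheat_at=7)
print(spot_check(honest, 50, update, samples=3, rng=rng))   # True: recomputes 150 of 1,000 steps
print(spot_check(forged, 50, update, samples=20, rng=rng))  # False when every segment is checked
```

With one forged segment out of N and s sampled segments, a single check catches the forgery with probability s/N, so spot-checking on its own is not enough; it needs to be combined with economic penalties such as the staking and slashing mentioned for Gensyn.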

Gensyn

Gensyn is an ML-specific computing network that proposes a solution to the parallelization and validation problems of training models in a decentralized, distributed manner. The protocol uses parallelization to split large computational workloads into tasks and pushes them asynchronously to the network. To solve the verification problem, Gensyn uses probabilistic proof-of-learning, a graph-based pinpoint protocol, and an incentive system based on staking and slashing.
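
The idea behind pinpointing a dispute can be illustrated with a simplified, Truebit-style bisection game: when a solver and a challenger disagree on the final result, a binary search over their intermediate states finds the first step where they diverge, and a referee recomputes only that one step. This is an assumption-laden sketch, not Gensyn's actual graph-based protocol; the toy update rule and the `forge_at` parameter are hypothetical.

```python
from typing import List

M = 1_000_003  # prime modulus for the toy update rule


def update(s: int) -> int:
    """Toy deterministic 'training step'; a bijection mod M, so diverged traces stay diverged."""
    return (s * 31 + 7) % M


def trace(init: int, steps: int, forge_at: int = -1) -> List[int]:
    """All intermediate states; forge_at (hypothetical) corrupts the state produced at that step."""
    states = [init]
    for t in range(1, steps + 1):
        s = update(states[-1])
        if t == forge_at:
            s = (s + 1) % M
        states.append(s)
    return states


def first_divergence(a: List[int], b: List[int]) -> int:
    """Binary search for the first index where two traces disagree (O(log n) comparisons)."""
    lo, hi = 0, len(a) - 1  # invariant: a[lo] == b[lo] and a[hi] != b[hi]
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if a[mid] == b[mid]:
            lo = mid
        else:
            hi = mid
    return hi


def arbitrate(solver: List[int], challenger: List[int]) -> str:
    """Referee: recompute a single step from the last agreed state to decide who is wrong."""
    if solver[-1] == challenger[-1]:
        return "no dispute"
    i = first_divergence(solver, challenger)
    expected = update(solver[i - 1])  # both traces agree on state i - 1
    return "slash solver" if solver[i] != expected else "slash challenger"


honest = trace(42, 1_000)
cheating = trace(42, 1_000, forge_at=600)
print(first_divergence(cheating, honest), arbitrate(cheating, honest))  # 600 slash solver
```

The referee's work is one step plus about log2(1,000) comparisons, instead of replaying all 1,000 steps.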

Although the Gensyn network is not live yet, the team predicts an hourly cost of about $0.40 for a V100-equivalent GPU on its network. This estimate is based on Ethereum miners earning $0.20 to $0.35 per hour with similar GPUs before the Merge. Even if this estimate were off by 100%, Gensyn's compute costs would still be significantly lower than the on-demand services offered by AWS and GCP.
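
To put the estimate in concrete terms, the arithmetic below prices a hypothetical month-long job on 1,000 V100-equivalent GPUs at the projected $0.40 per GPU-hour and at double that rate (the "off by 100%" case). The job size is an assumption; AWS and GCP prices are not included because the article does not cite them.

```python
def job_cost(gpus: int, hours: float, usd_per_gpu_hour: float) -> float:
    """Total cost of renting `gpus` GPUs for `hours` hours at a flat hourly rate."""
    return gpus * hours * usd_per_gpu_hour


PROJECTED_RATE = 0.40          # Gensyn's projected $/hour for a V100-equivalent GPU
GPUS, HOURS = 1_000, 30 * 24   # hypothetical job: 1,000 GPUs for 30 days

for label, rate in [("projected", PROJECTED_RATE), ("off by 100%", PROJECTED_RATE * 2)]:
    print(f"{label}: ${job_cost(GPUS, HOURS, rate):,.0f}")
# projected: $288,000
# off by 100%: $576,000
```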

Together

Bittensor: Decentralized Machine Intelligence

Bittensor aims to address inefficiencies in machine learning and transform the way researchers collaborate. It incentivizes knowledge production on an open-source network and uses standardized input and output encodings so that models can interoperate.

On Bittensor, miners are rewarded with the network's native asset, TAO, for providing intelligence services to the network through unique ML models. While training their models on the network, miners exchange information with other miners, which speeds up their learning. By staking TAO, users can tap the intelligence of the entire Bittensor network and steer its activity toward their needs, forming a peer-to-peer (P2P) intelligence market. Additionally, applications can be built on top of the network's intelligence layer via the network's validators.

How Bittensor works

Bittensor is an open-source P2P protocol that implements a decentralized Mixture-of-Experts (MoE), an ML technique that combines multiple models specialized for different problems to create a more accurate overall model. This is done by training a routing model called a gating layer on a set of expert models, so that it learns how to route inputs intelligently to produce optimal outputs. To achieve this, validators dynamically form federations of mutually complementary models. Sparse computation is used to address latency bottlenecks.
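
A Mixture-of-Experts forward pass with a gating layer and sparse top-k routing can be sketched in a few lines of Python. This is a generic, single-machine illustration under stated assumptions: the experts and gating weights are hypothetical, the gating layer is a fixed linear scorer rather than a trained one, and nothing here reflects Bittensor's actual network protocol.

```python
import math
from typing import Callable, List

Expert = Callable[[List[float]], float]


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: List[float], experts: List[Expert], gate: List[List[float]], k: int = 2) -> float:
    """Route x to the k experts the gating layer scores highest and blend their outputs.

    gate[i] is a row of (hypothetical) gating weights scoring expert i for input x.
    Experts outside the top k are never called: that is the sparse-computation saving.
    """
    scores = [sum(w * xi for w, xi in zip(row, x)) for row in gate]
    top = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    mix = softmax([scores[i] for i in top])
    return sum(weight * experts[i](x) for weight, i in zip(mix, top))


def sum_expert(x: List[float]) -> float:
    return sum(x)


def max_expert(x: List[float]) -> float:
    return max(x)


def unused_expert(x: List[float]) -> float:
    raise RuntimeError("not routed to, so never evaluated")


experts = [sum_expert, max_expert, unused_expert]
gate = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
print(moe_forward([1.0, 2.0], experts, gate, k=2))  # blends max_expert and sum_expert only
```

In a trained MoE the gating weights are learned so that inputs reach the experts that handle them best; here they are fixed to keep the routing logic visible.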

Bittensor's incentives attract specialized models into the mix, each playing a niche role in solving larger problems defined by stakeholders. Each miner represents a unique model (neural network), and Bittensor operates as a self-coordinating model of models, governed by a permissionless intelligence market system.

The protocol is algorithm-agnostic: validators only define the locks and let the market find the keys. The intelligence of the miners is the only component that is shared and measured, while the models themselves remain private, which removes potential bias from the measurement.

Validators

On Bittensor, validators act as the gating layer for the network's MoE model, serve as a trainable API, and enable applications to be built on top of the network. Their stake governs the incentive landscape and determines which problems miners solve. Validators assess the value each miner provides so they can reward miners accordingly, and they reach consensus on the miners' rankings. Higher-ranked miners receive a larger share of the inflationary block reward.
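
The step from validator scores to miner rewards can be sketched as a stake-weighted average: each validator's scores are normalized, weighted by its share of total stake, and the resulting ranking splits the block reward. This is a deliberately simplified assumption for illustration, not Bittensor's actual consensus algorithm; the validator names, stakes, and scores are hypothetical.

```python
from typing import Dict


def split_block_reward(stakes: Dict[str, float],
                       scores: Dict[str, Dict[str, float]],
                       block_reward: float) -> Dict[str, float]:
    """Return each miner's share of block_reward.

    stakes[v] is validator v's stake; scores[v][m] is v's (non-negative) score for miner m.
    """
    total_stake = sum(stakes.values())
    rank: Dict[str, float] = {}
    for validator, stake in stakes.items():
        given = scores[validator]
        norm = sum(given.values())
        for miner, score in given.items():
            # A validator's influence on the ranking is proportional to its stake.
            rank[miner] = rank.get(miner, 0.0) + (stake / total_stake) * (score / norm)
    return {miner: block_reward * r for miner, r in rank.items()}


rewards = split_block_reward(
    stakes={"validator_a": 300.0, "validator_b": 100.0},
    scores={"validator_a": {"miner_1": 3.0, "miner_2": 1.0},
            "validator_b": {"miner_1": 1.0, "miner_2": 1.0}},
    block_reward=1.0,
)
print(rewards)  # {'miner_1': 0.6875, 'miner_2': 0.3125}
```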

Validators are also incentivized to discover and evaluate models honestly and efficiently, because they earn bonds in their top-ranked miners and receive a portion of those miners' future rewards. This effectively creates a mechanism in which validators economically "bind" themselves to their miner rankings. The protocol's consensus mechanism is designed to resist collusion by up to 50% of network stake, making it financially infeasible to dishonestly rank one's own miners highly.
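
One simple way to see why a stake-weighted consensus resists a minority coalition is a stake-weighted median: if colluding validators hold less than half the stake, inflating their own miner's score cannot move the median. This is a hypothetical illustration of the principle, not Bittensor's exact consensus rule; all stakes and scores are made up.

```python
from typing import List, Tuple


def stake_weighted_median(votes: List[Tuple[float, float]]) -> float:
    """votes are (score, stake) pairs; return the lowest score backed by at least half the stake."""
    total = sum(stake for _, stake in votes)
    cumulative = 0.0
    for score, stake in sorted(votes):
        cumulative += stake
        if cumulative >= total / 2:
            return score
    raise ValueError("no votes")


honest = [(0.10, 30.0), (0.12, 30.0)]                  # honest validators' scores for one miner
minority = honest + [(1.00, 40.0)]                     # colluders with 40% of stake inflate the score
majority = [(0.10, 20.0), (0.12, 20.0), (1.00, 60.0)]  # colluders with 60% of stake

print(stake_weighted_median(minority))  # 0.12: stays within the honest range
print(stake_weighted_median(majority))  # 1.0: a stake majority can move it
```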

Miners

Miners on the network perform training and inference. They selectively exchange information with peers based on their expertise and update their model weights accordingly. When handling messages, miners prioritize validator requests according to each validator's stake. At the time of writing, 3,523 miners were online.
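
Stake-based prioritization of incoming requests maps naturally onto a priority queue. The sketch below uses Python's `heapq` to serve the highest-stake validator first, with ties broken by arrival order; the class and validator names are hypothetical and this is not Bittensor's actual implementation.

```python
import heapq
import itertools
from typing import List, Tuple


class StakePriorityQueue:
    """Queue of validator requests served highest-stake first, FIFO among equal stakes."""

    def __init__(self) -> None:
        self._heap: List[Tuple[float, int, str, str]] = []
        self._arrival = itertools.count()  # tie-breaker preserving arrival order

    def push(self, validator: str, stake: float, request: str) -> None:
        # heapq is a min-heap, so negate the stake to pop the largest stake first.
        heapq.heappush(self._heap, (-stake, next(self._arrival), validator, request))

    def pop(self) -> Tuple[str, str]:
        _, _, validator, request = heapq.heappop(self._heap)
        return validator, request

    def __len__(self) -> int:
        return len(self._heap)


queue = StakePriorityQueue()
queue.push("small_validator", 10.0, "query-1")
queue.push("large_validator", 500.0, "query-2")
queue.push("medium_validator", 100.0, "query-3")
queue.push("large_validator", 500.0, "query-4")
while queue:
    print(queue.pop())  # query-2, query-4, query-3, then query-1
```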

The exchange of information between miners on the Bittensor network allows for the creation of more powerful AI models, as miners can leverage the expertise of their peers to improve their own models. This essentially brings composability to the AI space, where different ML models can be connected to create more complex AI systems.

Compound intelligence

Summary

As the decentralized machine learning ecosystem matures, there will likely be synergies between various computing and intelligence networks. For example, Gensyn and Together could serve as the hardware coordination layer of the AI ecosystem, while Bittensor could serve as the intelligence coordination layer.

On the supply side, large public crypto miners that previously mined ETH have shown great interest in contributing resources to decentralized computing networks. For example, Akash has received commitments for 1 million GPUs from large miners ahead of its GPU network launch. Additionally, Foundry, one of the larger private Bitcoin miners, already mines on Bittensor.

The teams behind the projects discussed in this report are not building crypto-based networks for the hype; they are AI researchers and engineers who have recognized crypto's potential to solve problems in their own industry.

By improving training efficiency, pooling resources, and giving more people the opportunity to contribute to large-scale AI models, decentralized ML networks can accelerate AI development and help unlock artificial general intelligence sooner.