Modular Blockchain: The Importance of Data Availability

Specialization and disaggregation: the conceptual origin of modularity

From programming languages to DeFi protocols, "composability" is a favorite term in Web 3.0. But composability is more than a narrative device. Once a working model matures to a certain point, it inevitably becomes specialized and disaggregated. It is therefore only natural that blockchains, struggling with the headache of scaling, are gradually moving toward modularization.

A blockchain stack has four main layers: data availability, consensus, settlement, and transaction execution. A monolithic blockchain performs the work of all four layers within a single network, so every node must handle everything from data verification to transaction execution.

However, as transaction volume grows, a monolithic blockchain inevitably runs into bottlenecks. Today, users who hold to the vision of decentralization put up with Ethereum's high transaction fees and a throughput (transactions per second) far below Visa's.

At its core, the bottleneck of a monolithic blockchain stems from the accumulation of data. Because a blockchain is tamper-resistant, data availability is a prerequisite for scaling that cannot be sacrificed.

But to enable mass adoption, monolithic blockchains are bound to evolve toward modularity.

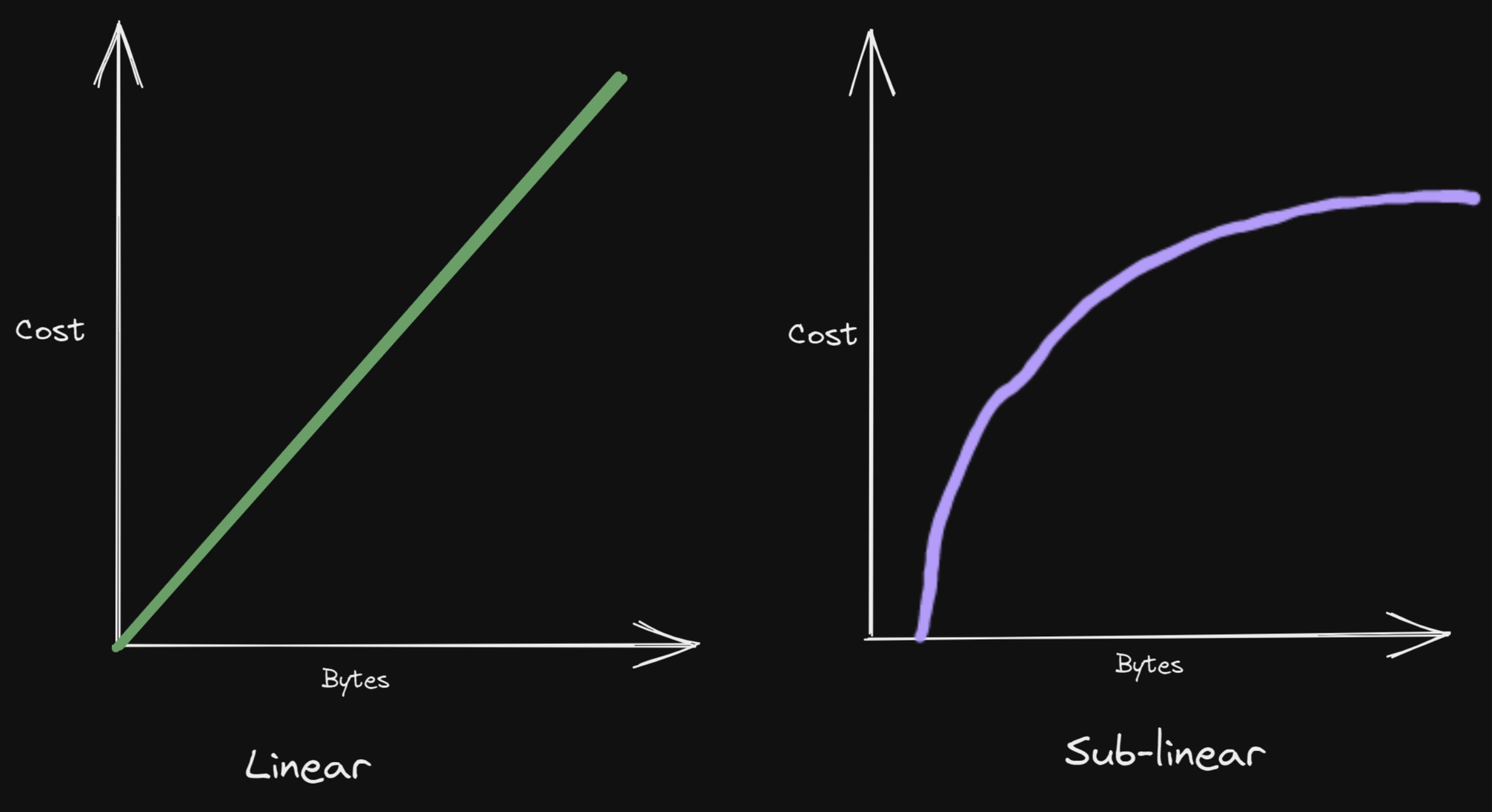

Looking at Ethereum's development, Vitalik has said that Ethereum will evolve into a rollup-centric ecosystem. A rollup is a scaling solution that separates out the execution layer and performs computation off-chain, but still posts part of each transaction's data on-chain. The amount of data a rollup must post grows linearly with its transaction volume: the more transactions it processes, the more data it must broadcast to the main network. The bottleneck of rollup throughput therefore still lies in the data bandwidth of the underlying network.
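To make this bottleneck concrete, here is a back-of-the-envelope sketch in Python. It assumes the base layer offers rollups a fixed data bandwidth and that each rollup transaction posts a fixed number of bytes; the input numbers and the function name `max_rollup_tps` are hypothetical placeholders, not measured Ethereum figures.

```python
def max_rollup_tps(da_bytes_per_second: float, bytes_per_tx: float) -> float:
    """Upper bound on rollup throughput imposed by data availability alone.

    Each rollup transaction must publish `bytes_per_tx` bytes to the base
    layer, so published data grows linearly with transaction count and the
    rollup can never exceed (DA bandwidth) / (bytes per transaction).
    """
    if bytes_per_tx <= 0:
        raise ValueError("bytes_per_tx must be positive")
    return da_bytes_per_second / bytes_per_tx


# Hypothetical inputs for illustration, not measured Ethereum figures:
# ~100 KB of rollup data per 12-second block, ~100 bytes per compressed tx.
DA_BYTES_PER_BLOCK = 100_000
BLOCK_TIME_S = 12
bandwidth = DA_BYTES_PER_BLOCK / BLOCK_TIME_S

print(round(max_rollup_tps(bandwidth, bytes_per_tx=100)))      # → 83
print(round(max_rollup_tps(2 * bandwidth, bytes_per_tx=100)))  # → 167
```

Doubling the data bandwidth doubles the ceiling, while execution speed does not appear in the formula at all. This is why rollup scaling ultimately depends on the base layer's data bandwidth.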

To overcome this bottleneck, the initial implementation of sharding, Ethereum's ultimate scaling plan, shifts shard chains from executing transactions to providing data availability.

Clearly, monolithic blockchains are moving toward modularization, and modular blockchains and solutions focused on data availability deserve particular attention. Ethereum's goal is to scale computation without compromising decentralization and security. Given the limitations of monolithic blockchain architecture, data availability is critical to achieving decentralized scalability.


Modular Blockchain: Definition, Features and Benefits

In general, a blockchain network can be called a modular blockchain once it outsources any one of its core functions, be it transaction execution/settlement, consensus, or data availability.

In this section, we use Celestia as an example to illustrate the features and advantages of modularity. Its testnet has drawn strong interest since launching in May, and many articles have analyzed its mechanism and compared it with Ethereum. As the first dedicated modular blockchain network, Celestia is scheduled to launch its mainnet in 2023.

On October 20, Celestia closed a US$55 million financing round led by Bain Capital Crypto and Polychain Capital. According to reports, the $55 million is the combined total of Celestia's Series A and Series B rounds, and the latest round made Celestia a unicorn valued at $1 billion.

Celestia is a pluggable consensus and data availability layer that uses Tendermint as its consensus protocol. It is responsible only for ordering transaction data and guaranteeing its availability, not for checking whether transactions are valid. Celestia's workload may appear reduced, but data availability is in fact the core task a blockchain performs to ensure security.


Decentralization: Because Celestia's consensus layer focuses only on data availability, light nodes in the network can use data availability sampling to confirm availability without downloading entire blocks. These light client nodes can even run on mobile phones, which greatly lowers the barrier to running a node.

Scalability

Security

In addition, modular blockchains have the following advantages:

Pluggable and flexible: First, users can deploy their own execution layer on top of Celestia and rely on Celestia's data availability as a security guarantee. In addition, because Celestia is execution-layer agnostic, it opens up the virtual machine design space: an execution layer does not need to be EVM compatible, which gives up-and-coming VMs such as LLVM, MoveVM, CosmWasm, and FuelVM ample room to showcase themselves and compete.

Cheap

Source: https://rainandcoffee.substack.com/p/the-modular-world

Sovereignty: In earlier L1s, hard forks were usually avoided because they carry significant risk. A hard fork splits the network's consensus layer and dilutes its underlying security, so more nodes must join to restore the original level of security. However, because Celestia provides a shared data availability layer, rollups built on Celestia can independently decide on node upgrades and token supply, or define their own validity rules. This sovereignty gives rollups great freedom and room for experimentation.

Solving the scaling problem: the principle and importance of data availability

Trustlessness is the premise of blockchain thinking: data is accepted through verification rather than trust. From a blockchain perspective, if something cannot be reproduced from the available data, it does not exist. Proving that all of the data can be recovered from a portion of it is called a data availability proof. A monolithic blockchain achieves data availability by having full nodes download all of the data. This approach scales poorly and places high demands on nodes. Modular scaling solutions separate data availability from the consensus and execution layers. This is also considered the most ideal solution, since it does not require node upgrades.

Data availability can be achieved in two ways, on-chain and off-chain:

On-chain: Block producers are required to publish all transaction data on-chain, and validators download it. This is typical of monolithic blockchains and supports both full nodes and light clients. Danksharding, moreover, takes a new approach to on-chain DA.

Off-chain: Block producers do not publish transaction data on-chain; instead, they provide cryptographic commitments that prove the data is available. Ethereum's rollup solutions and modular solutions both adopt this approach.

Implementation: The block proposer publishes the information for each block, and validators reconstruct the transaction information from the available data, checking that what the block proposer published matches what they reconstruct after downloading all the data. Because the block proposer publishes only part of the data, it could hide or tamper with data and use this to attack transactions. This is known as a "data withholding attack".

Currently, there are four ways to defend against data withholding attacks:

Data Availability Committees (DACs): In a pure Validium, block producers store transaction data off-chain, which is somewhat centralized. The DAC keeps an offline copy of the off-chain data but must make it available in the event of a dispute. DAC members also publish on-chain attestations that the data is indeed available.

Proof-of-stake data availability committees: Anyone can become a validator and store data off-chain, but they must post a bond, which is deposited in a smart contract. PoS DACs are much more secure than regular DACs: they are permissionless and trustless, and they have carefully designed incentives that encourage honest behavior. This mitigates the centralization risk of DACs to some extent.

Data Availability Sampling (DAS): In DAS, nodes verify data availability by randomly sampling small chunks of a block over multiple rounds. Because many nodes sample different parts of a block at the same time, together they reach statistical certainty that the block is available. DAS is not only suitable for light-client data availability but is also widely used in modular DA schemes.

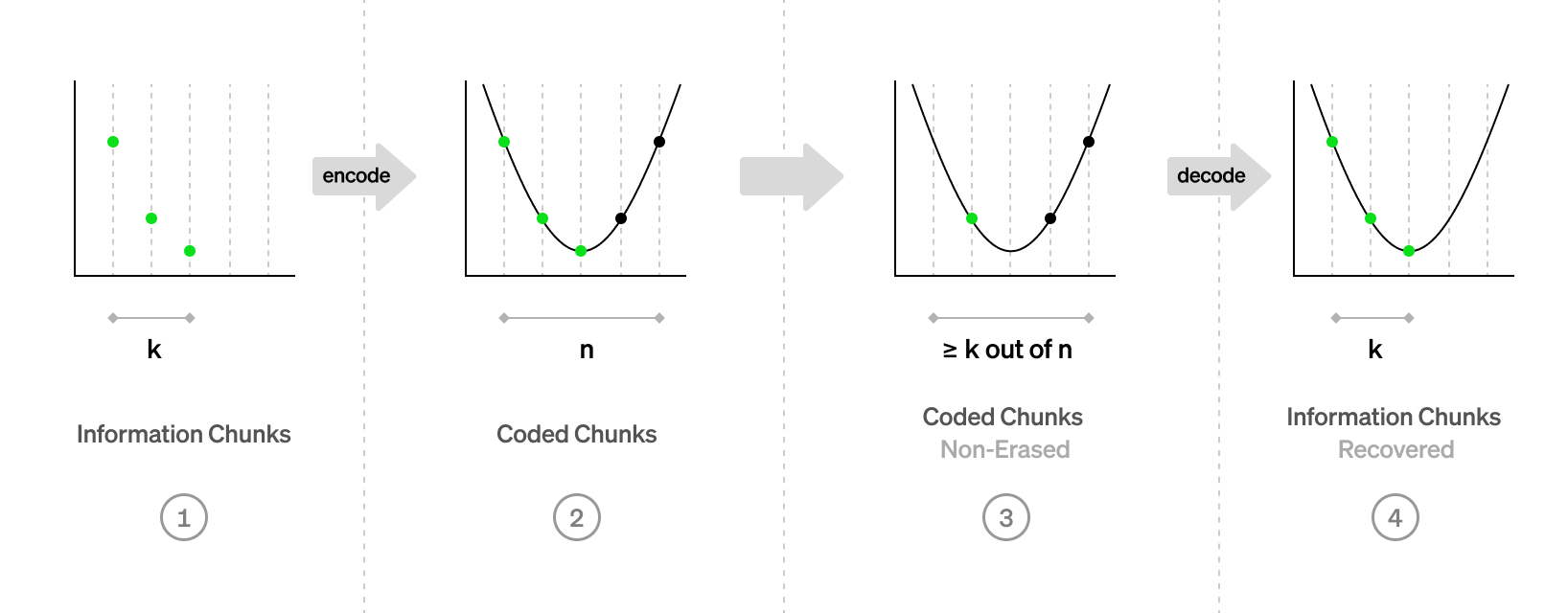

Data Availability Proofs: These combine DAS with erasure coding. Because DAS does not check all of the data, DAS alone still leaves block proposers able to mount a data withholding attack. Erasure coding adds redundant data to the original data so that the whole can be restored from a subset. With erasure coding, a block proposer who wants to attack must withhold at least 50% of the block data; without erasure coding, withholding just 1% is enough.
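The 1% versus 50% contrast can be quantified with a short Python sketch. It assumes each sample picks a chunk uniformly at random and independently (with replacement), a simplification of real DAS designs; the function name is illustrative.

```python
def detection_probability(withheld_fraction: float, samples: int) -> float:
    """Probability that at least one of `samples` independent, uniformly random
    chunk queries lands on a withheld chunk (and so exposes the attack)."""
    if not 0.0 <= withheld_fraction <= 1.0:
        raise ValueError("withheld_fraction must be between 0 and 1")
    # Each query misses the withheld part with probability (1 - f);
    # the attack goes unnoticed only if every query misses.
    return 1.0 - (1.0 - withheld_fraction) ** samples


for s in (10, 20, 30):
    # Without erasure coding, hiding 1% of the block already makes it unrecoverable.
    raw = detection_probability(0.01, s)
    # With 2x erasure coding, the attacker must hide at least 50% of the extended block.
    coded = detection_probability(0.50, s)
    print(f"{s} samples: no coding {raw:.4f}, with coding {coded:.7f}")
```

With 20 samples, the chance of catching a 1% withholding attack is under 20%, while the chance of catching the 50% withholding that erasure coding forces is above 99.9999%. This is why a handful of samples gives light clients strong guarantees, but only once the data is erasure coded.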

Erasure coding is a technique that doubles a dataset by adding redundant pieces (erasure codes) that can be used to reconstruct the original data. It is ubiquitous in information technology, from CD-ROMs to satellite communications to QR codes. As Mustafa Al-Bassam explains in an article on what data availability is, erasure codes let a user take a block of, say, 1 MB and "extend" it to 2 MB, where the extra 1 MB is special data called erasure codes. If any bytes of the block are lost, the user can easily recover them using the code. Even if up to 1 MB of the extended block is lost, the entire block can be recovered. The same technique lets a computer read all the data on a CD-ROM even when the disc is damaged.

The most commonly used code today is the Reed-Solomon code. Starting from k information chunks, it constructs the associated polynomial and evaluates it at additional x-coordinates to obtain the coded chunks. With RS erasure coding, there is only a very small chance that random sampling fails to notice the loss of a large portion of the data. KZG polynomial commitments allow Reed-Solomon-encoded chunks to be verified directly against a commitment to the unencoded information chunks, leaving no room for invalid encodings.

Source: https://www.paradigm.xyz/2022/08/das
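The Reed-Solomon construction described above can be sketched in Python as a toy: it works over a small prime field using Lagrange interpolation, treats each "chunk" as a single field element, and uses illustrative names (`rs_encode`, `rs_recover`). Production systems use much larger fields and optimized encoders, and the KZG commitment step is not shown.

```python
# Toy Reed-Solomon erasure code over a prime field (illustrative only).
P = 2**31 - 1  # a Mersenne prime; real systems use much larger fields


def lagrange_eval(points, x):
    """Evaluate at x the unique polynomial of degree < len(points)
    that passes through `points`, a list of (xi, yi) pairs, mod P."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if j != i:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        # pow(den, -1, P) is the modular inverse (Python 3.8+).
        total = (total + yi * num * pow(den, -1, P)) % P
    return total


def rs_encode(data):
    """Extend k data symbols to 2k symbols. The data are taken as the
    polynomial's values at x = 0..k-1; the k extra symbols are its values
    at x = k..2k-1, so any k of the 2k symbols determine the polynomial."""
    k = len(data)
    base = list(enumerate(data))
    return list(data) + [lagrange_eval(base, x) for x in range(k, 2 * k)]


def rs_recover(shares, k):
    """Rebuild the k original symbols from any k surviving (x, y) shares."""
    if len(shares) < k:
        raise ValueError("need at least k shares to reconstruct")
    points = shares[:k]
    return [lagrange_eval(points, x) for x in range(k)]


block = [11, 22, 33, 44]            # k = 4 data symbols
coded = rs_encode(block)            # 8 symbols: the block "doubled"
print(coded)                        # → [11, 22, 33, 44, 55, 66, 77, 88]

# Lose half of the coded symbols; keep only positions 1, 4, 6 and 7.
survivors = [(x, coded[x]) for x in (1, 4, 6, 7)]
print(rs_recover(survivors, k=4) == block)  # → True
```

Any 4 of the 8 coded symbols would work equally well, which is exactly the property that forces an attacker to withhold more than half of the extended block.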


Interpretation: Modular Projects and Solutions

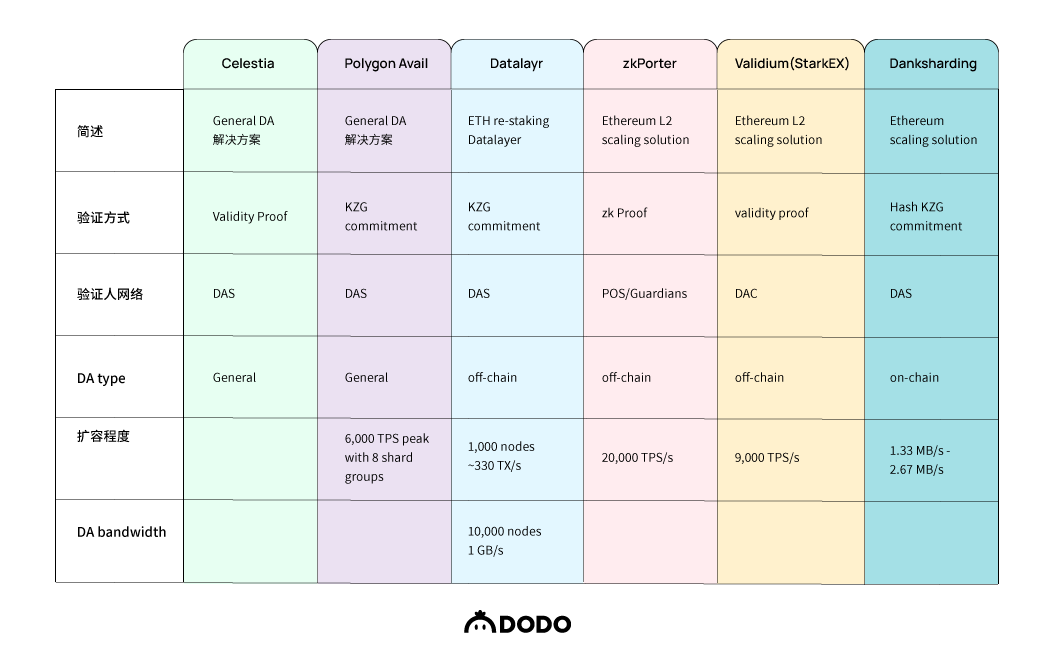

Danksharding

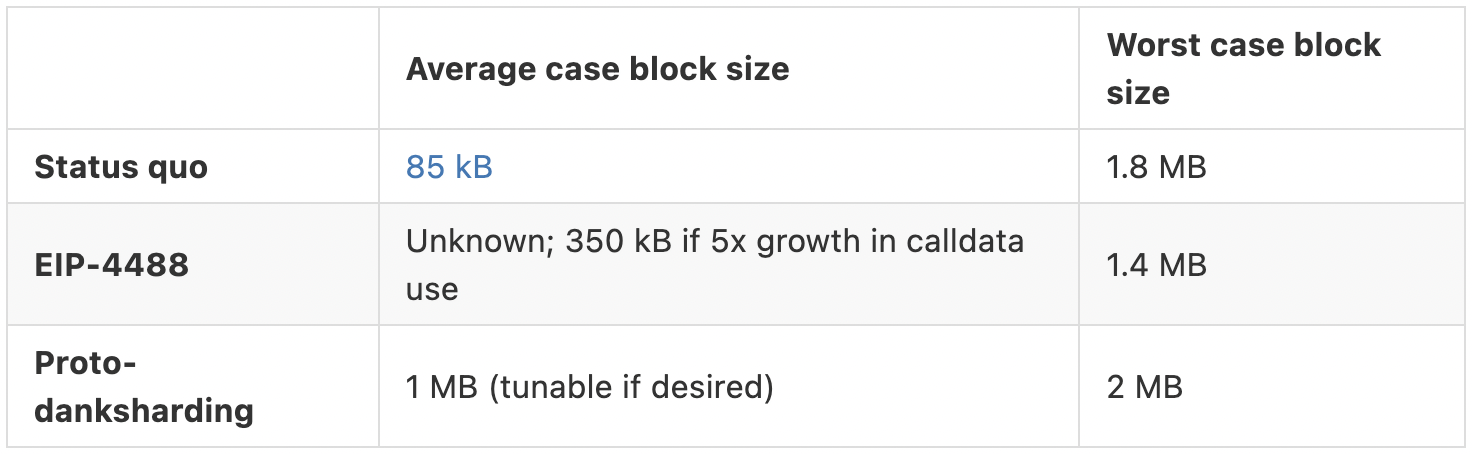

Danksharding is Ethereum's modular scaling solution. Unlike plain sharding, Danksharding uses DAS to verify data availability, which addresses the risk that validators on a single shard hide data in order to act maliciously. Sharding distributes blocks and block proposers across different shards, whereas Danksharding introduces a merged fee market in which a single block proposer processes all transaction data. At the same time, proposer-builder separation (PBS) keeps this design from raising node requirements.

Proto-danksharding (EIP-4844) is a step on the path to the full sharding roadmap. It introduces a new transaction type, the "blob-carrying transaction". Blobs have a fixed data size, are much cheaper than calldata, and offer more data capacity than calldata.

Rather than using plain KZG polynomial commitments directly, Proto-danksharding references them through hashes (versioned hashes) of the KZG commitments, for EVM compatibility and compatibility with future changes.


Source: Ethereum.org
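To illustrate what a "hashed" KZG commitment means, the Python below reproduces the versioned-hash rule and blob-size constants from the EIP-4844 specification (`kzg_to_versioned_hash`, a version byte of 0x01, and 4096 field elements of 32 bytes per blob). The all-zero 48-byte commitment is a placeholder; computing a real commitment requires a KZG library and the trusted setup, which this sketch omits.

```python
import hashlib

# Constants as specified in EIP-4844.
VERSIONED_HASH_VERSION_KZG = b"\x01"
FIELD_ELEMENTS_PER_BLOB = 4096
BYTES_PER_FIELD_ELEMENT = 32
BLOB_SIZE = FIELD_ELEMENTS_PER_BLOB * BYTES_PER_FIELD_ELEMENT  # fixed size for every blob


def kzg_to_versioned_hash(commitment: bytes) -> bytes:
    """Turn a 48-byte KZG commitment into the 32-byte versioned hash used to
    reference a blob. The leading version byte lets the commitment scheme
    change later while the 32-byte format stays the same."""
    if len(commitment) != 48:
        raise ValueError("a KZG commitment is a 48-byte compressed G1 point")
    return VERSIONED_HASH_VERSION_KZG + hashlib.sha256(commitment).digest()[1:]


# Placeholder commitment, not a real KZG commitment.
dummy_commitment = bytes(48)
vh = kzg_to_versioned_hash(dummy_commitment)
print(len(vh), vh[0], BLOB_SIZE)  # → 32 1 131072
```

Because transactions and the EVM reference a blob by this 32-byte versioned hash, the commitment scheme behind it could later be replaced by bumping the version byte.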

Validium

Simply put, Validium is an Ethereum-centered L2 scaling solution. Validium is similar to zkRollup; the only difference is that it keeps data availability off-chain. As a result, Validium greatly increases capacity, reaching 9,000 transactions per second.

To better understand Validium, we have to mention StarkEx, a project that uses Validium as its data availability solution. StarkEx is a STARK-powered scalability engine developed by StarkWare that can be applied to cryptocurrency exchanges. Launched in the summer of 2019, StarkEx is a standalone, customizable scaling solution whose validity is proven with zk-STARKs. It currently powers four products: dYdX, Sorare, Immutable, and DeversiFi.

Data availability in StarkEx has three optional modes: zk-Rollup, Validium, and Volition. Validium is the off-chain data availability option; in this mode, data security is maintained by the eight members of a Data Availability Committee (DAC).
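A DAC's role can be sketched as a k-of-n attestation check: a state update is accepted only if enough committee members vouch that they hold the batch data. The Python below is a toy under stated assumptions: HMAC tags stand in for the public-key signatures a real committee would use, and the quorum of 5 out of 8 is hypothetical, not StarkEx's actual parameter.

```python
import hashlib
import hmac

# Hypothetical committee of 8 members. HMAC tags stand in for real signatures
# so the sketch needs only the standard library; unlike a real scheme, the
# verifier here must know each member's secret key.
MEMBER_KEYS = {f"member-{i}": hashlib.sha256(f"demo-key-{i}".encode()).digest() for i in range(8)}
THRESHOLD = 5  # hypothetical quorum, not StarkEx's actual parameter


def attest(member: str, batch_data: bytes) -> bytes:
    """A member vouches that it stores `batch_data` by tagging its hash."""
    digest = hashlib.sha256(batch_data).digest()
    return hmac.new(MEMBER_KEYS[member], digest, hashlib.sha256).digest()


def batch_accepted(batch_data: bytes, attestations: dict) -> bool:
    """Accept a state update only if at least THRESHOLD distinct
    registered members produced a valid attestation for this data."""
    digest = hashlib.sha256(batch_data).digest()
    valid = 0
    for member, tag in attestations.items():
        key = MEMBER_KEYS.get(member)
        if key is None:
            continue  # not a committee member
        expected = hmac.new(key, digest, hashlib.sha256).digest()
        if hmac.compare_digest(tag, expected):
            valid += 1
    return valid >= THRESHOLD


batch = b"state diff for batch 1"
sigs = {m: attest(m, batch) for m in list(MEMBER_KEYS)[:5]}
print(batch_accepted(batch, sigs))                          # → True (5 of 8)
print(batch_accepted(batch, dict(list(sigs.items())[:4])))  # → False (4 of 8)
```

The sketch also makes the trust problem visible: if THRESHOLD members collude or their keys are compromised, they can approve an update whose data nobody else holds, and the check cannot tell the difference.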

The benefits of this approach are lower transaction costs and privacy. First, users no longer need to pay for posting data on-chain, which is where most of the gas cost of updating state on-chain goes. In addition, Validium keeps users' account balance information off-chain, where it is stored and maintained by the DAC, which preserves privacy.

But users must weigh a trade-off: although Validium lowers transaction fees and offers relative privacy, it requires trusting DAC members. This is the biggest weakness of the Validium design.

First, the DAC's signing keys are stored on-chain, which exposes them to attack. For example, attackers could move the Validium into a state known only to themselves, freezing assets and demanding a ransom. A DAC of only eight members resembles a Proof-of-Authority network, whose security is relatively weak.

In addition, StarkEx's Validium is relatively centralized, because Validium operators (the data availability managers) can freeze users' assets entirely. They can change the hashed state without disclosing the underlying data to users, and without that data, users cannot produce proofs of ownership for their accounts.

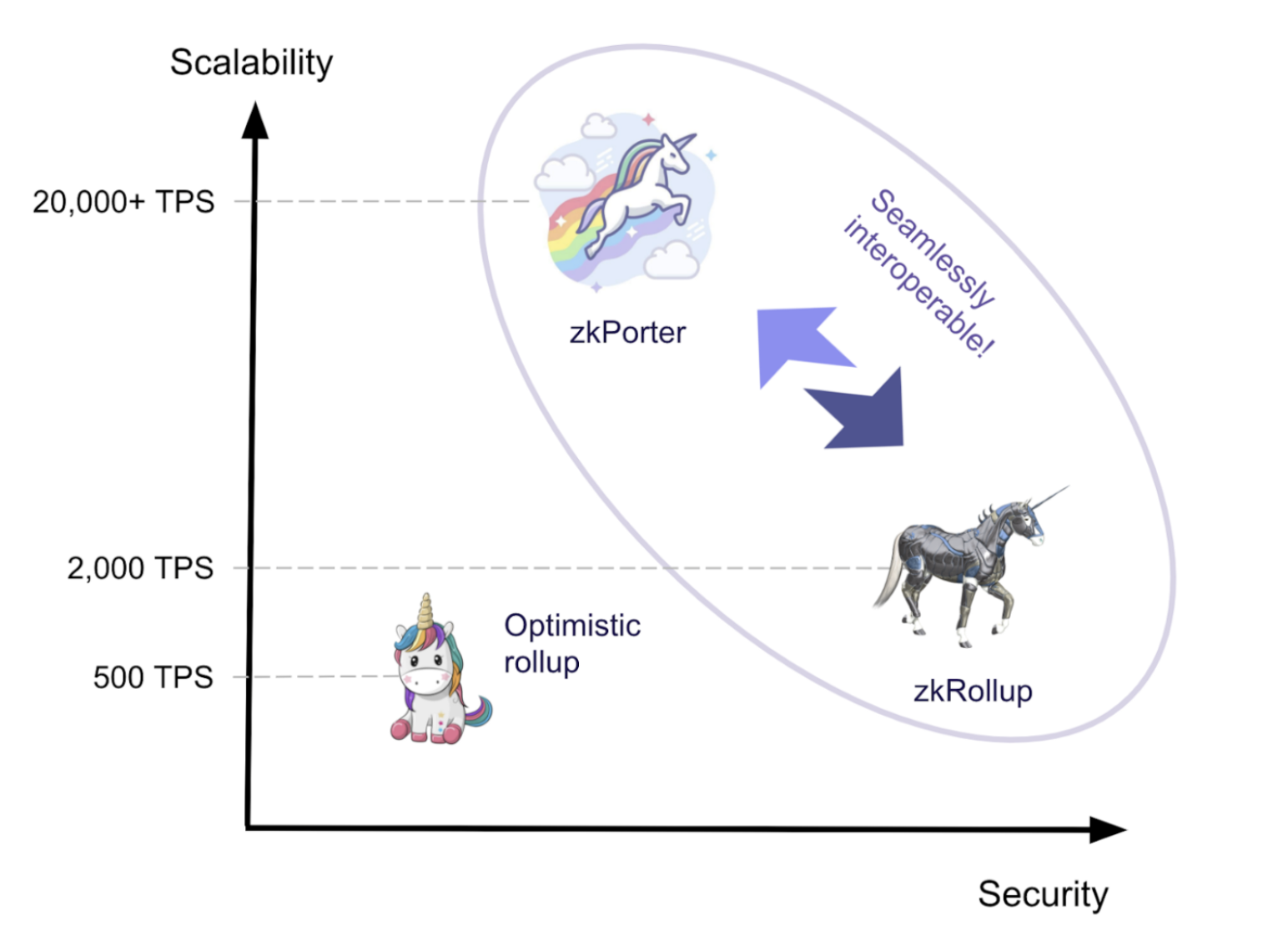

In contrast, a zkRollup cannot freeze user funds. For a zkRollup, the data needed to rebuild the state must be posted as Ethereum transaction data; otherwise, the zkRollup refuses to change the state (Blue Fox Notes, 2020). In other words, zkRollup's security and state are guaranteed by Ethereum mainnet: users can withdraw their assets simply by submitting a request to the zkRollup contract on Ethereum. However, submitting data to Ethereum mainnet for verification, and the verification itself, cost gas, and this cost grows linearly with the number of transactions. As a result, zkRollup reaches only 2,000 transactions per second, compared with Validium's 9,000.
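The linear growth of the data cost can be illustrated with Ethereum's calldata pricing (16 gas per non-zero byte and 4 gas per zero byte since EIP-2028). The Python sketch below assumes a hypothetical 12-byte compressed transaction and deliberately leaves out the proof-verification cost, which zkRollup and Validium both pay.

```python
# Calldata pricing since EIP-2028: 16 gas per non-zero byte, 4 per zero byte.
GAS_PER_NONZERO_BYTE = 16
GAS_PER_ZERO_BYTE = 4


def calldata_gas(data: bytes) -> int:
    """Gas charged for including `data` as transaction calldata."""
    zeros = data.count(0)
    return zeros * GAS_PER_ZERO_BYTE + (len(data) - zeros) * GAS_PER_NONZERO_BYTE


def batch_da_gas(num_txs: int, tx_data: bytes, on_chain_da: bool) -> int:
    """Data-availability gas for a batch of identical transactions.
    A zkRollup posts every transaction's data, so the cost grows linearly
    with num_txs; a Validium keeps the data off-chain and posts none of it."""
    return num_txs * calldata_gas(tx_data) if on_chain_da else 0


# Hypothetical 12-byte compressed transfer with no zero bytes.
tx = bytes(range(1, 13))
for n in (1_000, 10_000):
    print(n, batch_da_gas(n, tx, on_chain_da=True), batch_da_gas(n, tx, on_chain_da=False))
# → 1000 192000 0
# → 10000 1920000 0
```

Ten times the transactions means ten times the data gas for the rollup, while the Validium's data cost on Ethereum stays at zero, which is the source of its throughput advantage and of its trust assumption.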

It is worth mentioning that StarkEx also offers a third data availability mode, Volition, which is relatively simple to understand. It is a hybrid data availability system that lets users choose whether their data is made available off-chain or on-chain, weighing the pros and cons of each.

zkPorter

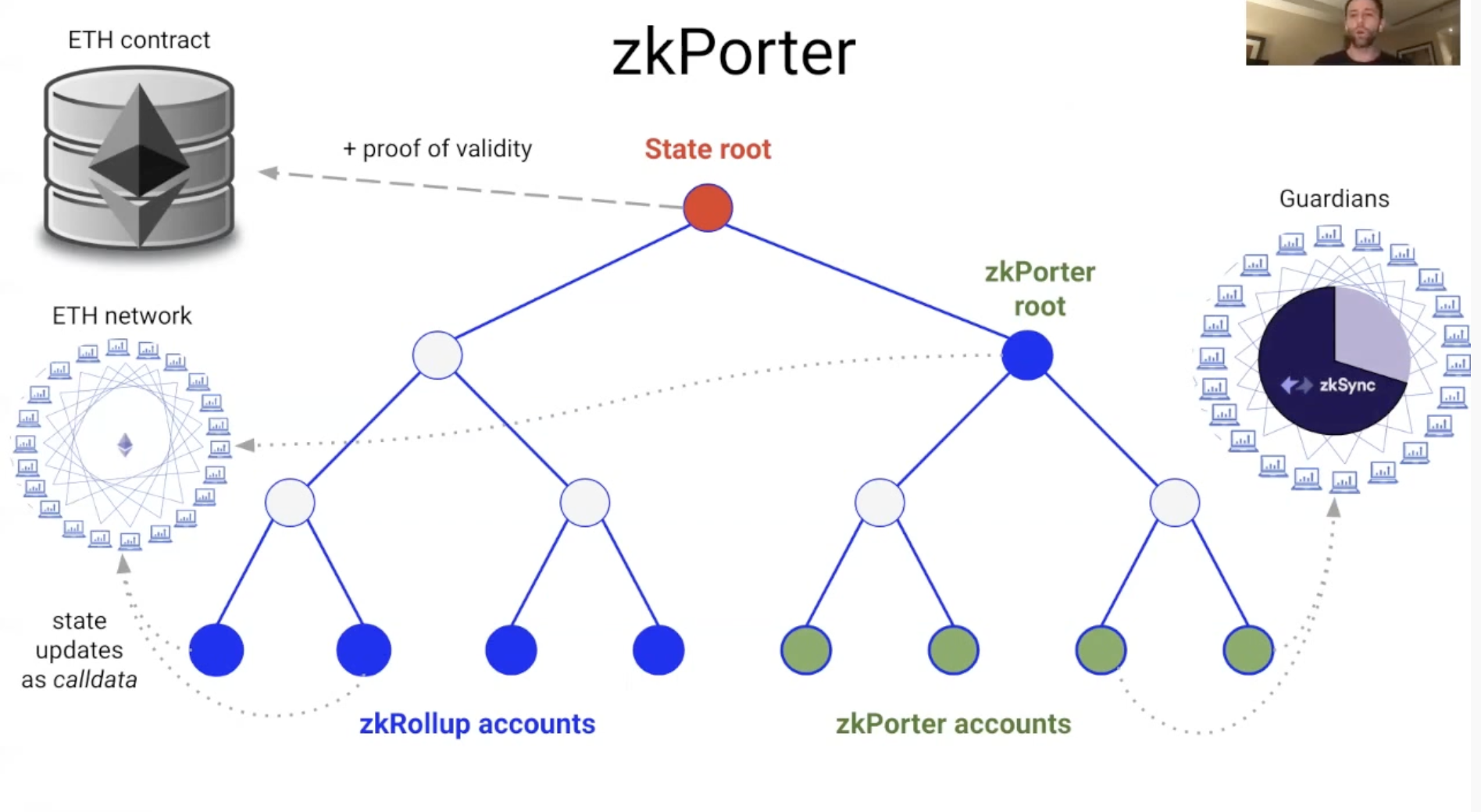

As mentioned before, zkRollup scales by processing transactions off-chain and then synchronizing the results to full nodes on Ethereum, with data availability kept on-chain.

Specifically, if the holder of a zkRollup account wants to prove ownership of the assets in that account, they can provide a Merkle path, while the state changes or final state are broadcast to every full node of the Ethereum network (see the left side of the figure). This way, even if the zkRollup operator tries to hide certain data, users can retrieve the data directly from the Ethereum network. This scheme gives users autonomy over data availability, or, as it is often put, "anyone can rebuild the Layer 2 state on their own, which ensures censorship resistance". But it comes with a scaling bottleneck, because verifying state changes on the Ethereum network consumes block space.

zkPorter is a more advanced scaling solution built on zkRollup. The difference is that zkPorter accounts do not post state changes as calldata to Ethereum mainnet; instead, they send them to a network of Guardians (see the right side of the figure).

Source: zkPorter by zkSync @ ETH Global, https://www.youtube.com/watch?v=dukgSVE6fxc&ab_channel=MatterLabs
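The Merkle path that a zkRollup user provides to prove ownership can be sketched in Python: the user holds a leaf plus its sibling hashes, and anyone who knows only the committed root can check the claim. This is a generic SHA-256 binary tree with made-up account encodings, not zkSync's actual state-tree format.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def build_levels(leaves):
    """All tree levels, from hashed leaves up to the single root hash.
    An unpaired last node is hashed with itself (one common convention)."""
    level = [h(leaf) for leaf in leaves]
    levels = [level]
    while len(level) > 1:
        padded = level + [level[-1]] if len(level) % 2 else level
        level = [h(padded[i] + padded[i + 1]) for i in range(0, len(padded), 2)]
        levels.append(level)
    return levels


def merkle_path(levels, index):
    """Sibling hashes from leaf to root, each flagged with its side."""
    path = []
    for level in levels[:-1]:
        sibling = index ^ 1
        sibling_hash = level[sibling] if sibling < len(level) else level[index]
        path.append((sibling_hash, index % 2 == 0))  # True: sibling is on the right
        index //= 2
    return path


def verify(leaf, path, root):
    """Recompute the root from a leaf and its path; only the root is trusted."""
    node = h(leaf)
    for sibling_hash, sibling_on_right in path:
        node = h(node + sibling_hash) if sibling_on_right else h(sibling_hash + node)
    return node == root


accounts = [b"alice:100", b"bob:50", b"carol:75", b"dave:20", b"erin:5"]
levels = build_levels(accounts)
root = levels[-1][0]                      # the state root committed on L1
proof = merkle_path(levels, 2)            # carol's Merkle path
print(verify(b"carol:75", proof, root))   # → True
print(verify(b"carol:999", proof, root))  # → False (forged balance)
```

The proof is only useful if the data behind the root is available: if an operator withholds the other leaves, a user whose own path changed can no longer rebuild it, which is the gap that on-chain data availability closes.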

Although zkRollup and zkPorter are two separate scaling solutions, contracts and accounts on both sides can still interact seamlessly. In addition, from a user-experience perspective, transaction fees for zkPorter accounts are reduced by a factor of 100.

Source: https://twitter.com/zksync/status/1381955843428605958

Eigenlayer

Source: https://messari.io/report/eigenlayer-to-stake-and-re-stake-again

Datalayr is a data availability middleware protocol built on top of Eigenlayer. It uses KZG polynomial commitments and erasure coding, combined with fraud proofs and mandatory disclosures, to provide integrity guarantees for nodes. It has reached a throughput of 10 MB/s on testnet, not counting historical data. As the number of nodes grows, more throughput becomes available and verification becomes cheaper. With 1,000 nodes, it would enable Ethereum to handle 330 TX/s.

The downside of Datalayr is that it is not yet known how many ETH stakers will be incentivized to provide data availability services, so no firm conclusions can be drawn about its scalability and security.

Polygon Avail

Polygon Avail is a modular data availability blockchain designed to provide scalable data availability for independent chains, sidechains, and off-chain scaling solutions. Using KZG polynomial commitments, Data Availability Sampling (DAS), and erasure coding, it lets light clients also act as data availability verifiers without relying on fraud proofs. Test data released by Polygon on August 30 shows that Avail currently produces a block every 20 seconds, and each block holds about 2 MB of data. Assuming an average transaction size of 250 bytes, each Polygon Avail block can hold approximately 8,400 transactions (about 420 transactions per second).
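The Avail capacity figures are simple arithmetic, reproduced below in Python. The only inputs are those quoted above (20-second blocks, about 2 MB per block, 250-byte transactions); because "2 MB" could mean binary or decimal megabytes, both readings are shown, and the function name is illustrative.

```python
def avail_capacity(block_bytes: int, avg_tx_bytes: int = 250, block_time_s: float = 20.0):
    """Transactions per block and per second when every block is filled
    with transactions of a fixed average size."""
    txs_per_block = block_bytes // avg_tx_bytes
    return txs_per_block, txs_per_block / block_time_s


# "About 2 MB" is ambiguous, so try both readings.
for label, size in (("2 MiB", 2 * 1024 * 1024), ("2 MB (decimal)", 2_000_000)):
    txs, tps = avail_capacity(size)
    print(f"{label}: {txs} txs per block, {tps:.1f} TPS")
# → 2 MiB: 8388 txs per block, 419.4 TPS
# → 2 MB (decimal): 8000 txs per block, 400.0 TPS
```

The binary reading matches the quoted figures of roughly 8,400 transactions per block and 420 TPS.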

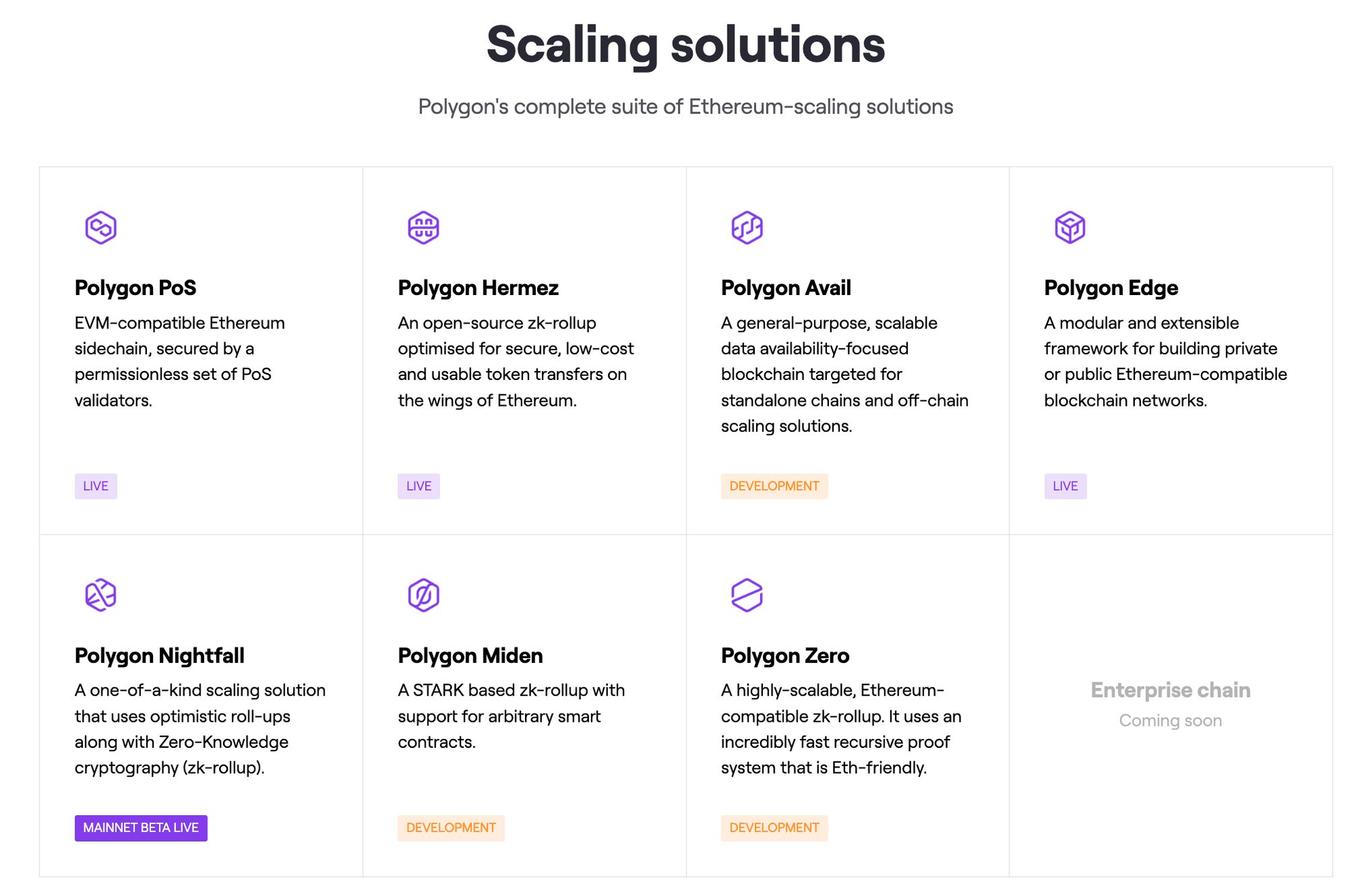

In addition to Polygon Avail for data availability, the Polygon ecosystem's scaling solutions include:

Polygon PoS: an EVM-compatible Ethereum sidechain secured by a set of permissionless PoS nodes;

Polygon Hermez: a zk-rollup-based Ethereum Layer 2;

Polygon Edge: a customizable modular framework for building private or public Ethereum-compatible blockchains;

Polygon Nightfall: an Optimistic Rollup designed to reduce the cost of transferring ERC20, ERC721, and ERC1155 tokens privately;

Polygon Miden: a zk-STARK-based Layer 2 scaling solution for Ethereum built around Miden VM, a Turing-complete, STARK-based virtual machine that provides a degree of security and supports advanced features;

Polygon Zero

Project comparison

References:

https://medium.com/@Jon_Charbonneau/celestia-the-foundation-of-a-modular-blockchain-world-95900fe2cfb0

https://twitter.com/ptrwtts/status/1509869606906650626

https://mp.weixin.qq.com/s/mpHSH-L48jJebtFZQrg3kw

https://rainandcoffee.substack.com/p/the-modular-world

https://twitter.com/apolynya/status/1517137608253485059

https://twitter.com/zksync/status/1381955843428605958

https://www.tuoluo.cn/article/detail-10012090.html

https://coinmarketcap.com/alexandria/article/what-is-data-availability

https://www.chaincatcher.com/article/2077770

https://messari.io/report/rollups-execution-through-the-modular-lens

https://ethereum.org/en/developers/docs/data-availability/

https://www.paradigm.xyz/2022/08/das

https://messari.io/report/progression-of-the-data-availability-problem?referrer=author:eshita-nandini

Disclaimer

More information

Copyright Notice

Without the authorization of DODO Research Institute, no one may use the above intellectual property (including but not limited to copying, disseminating, displaying, mirroring, uploading, downloading, reprinting, or excerpting it) or allow others to use it. Where use of the work has been authorized, it must stay within the scope of the authorization and credit the author as the source. Otherwise, legal liability will be pursued in accordance with the law.

About Us

"DODO Research Institute", led by its dean "Dr.DODO", is a group of DODO researchers diving into the Web 3.0 world to do reliable, in-depth research, aiming to decode the crypto world, offer clear opinions, and discover its future value. "DODO" is a decentralized trading platform driven by the Proactive Market Maker (PMM) algorithm, which aims to provide efficient on-chain liquidity for Web3 assets, allowing everyone to issue and trade easily.

More information

Official Website: https://dodoex.io/

GitHub: https://github.com/DODOEX

Telegram: t.me/dodoex_official

Discord: https://discord.gg/tyKReUK

Twitter: https://twitter.com/DodoResearch

Notion: https://dodotopia.notion.site/Dr-DODO-is-Researching-6c18bbca8ea0465ab94a61ff5d2d7682

Mirror: https://mirror.xyz/0x70562F91075eea0f87728733b4bbe00F7e779788