Kalshi's first research report is out: How collective intelligence can outperform Wall Street think tanks when predicting CPI.

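- Core view: Prediction markets outperform the Wall Street consensus at forecasting inflation.

- Key elements:

- Overall forecast error is 40.1% lower than the consensus expectation.

- In major shock events, the forecasting advantage widens to 60%.

- Divergence between the market and the consensus is a strong early-warning signal of a shock event.

- Market impact: Provides a better forecasting tool for investment and policy decisions.

- Timeliness note: Long-term impact.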

This article is from Kalshi Research.

Compiled by Odaily Planet Daily (@OdailyChina); Translated by Azuma (@azuma_eth)

Editor's Note: Leading prediction market platform Kalshi announced yesterday the launch of a new research section, Kalshi Research, designed to give academics and researchers interested in prediction markets access to Kalshi's internal data. The section's first research report has been released, titled "Beyond Consensus: Prediction Markets and the Forecasting of Inflation Shocks."

The following is the original text of the report, translated by Odaily Planet Daily.

Overview

Typically, a week before the release of important economic statistics, analysts and senior economists at major financial institutions provide their forecasts for the expected figures. These forecasts, when compiled, are known as "consensus expectations" and are widely regarded as an important reference for understanding market changes and adjusting portfolio allocation.

In this research report, we compare how well consensus expectations and the pricing implied by Kalshi's markets (hereinafter referred to as "market forecasts") predict the actual value of the same core macroeconomic signal: the year-over-year headline inflation rate (YoY CPI).

Key Highlights

- Overall accuracy is superior: In all market environments (including normal and shock environments), Kalshi's mean absolute error (MAE) is 40.1% lower than the consensus forecast.

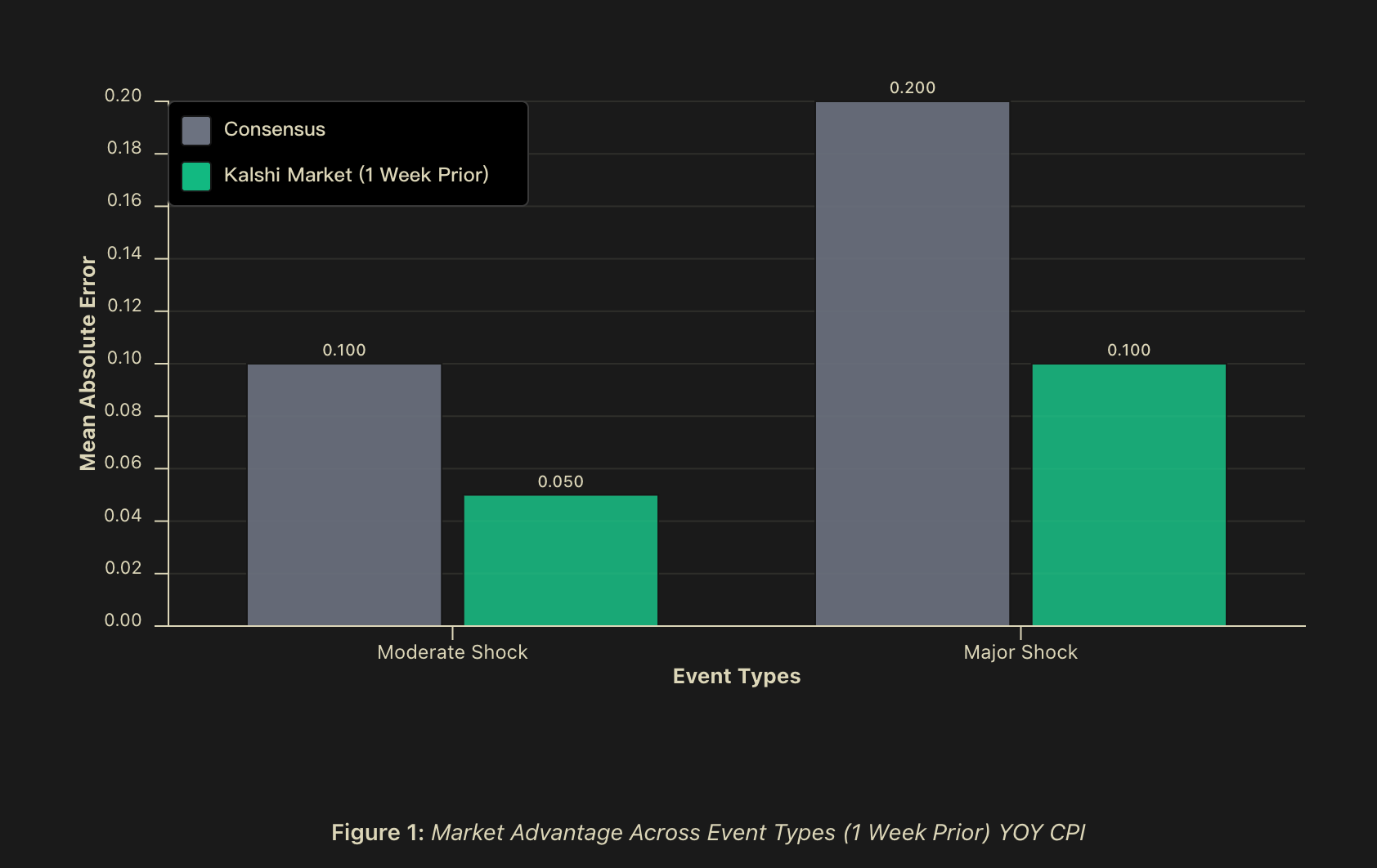

- "Shock Alpha": In major shock events (greater than 0.2 percentage points), Kalshi's forecast MAE is 50% lower than the consensus MAE at the one-week forecast window, and the gap widens to 60% the day before the data release. In moderate shock events (between 0.1 and 0.2 percentage points), Kalshi's forecast MAE is likewise 50% lower than the consensus MAE at the one-week forecast window, and the gap widens to 56.2% the day before the data release.

- Predictive Signal: When the market forecast deviates from the consensus expectation by more than 0.1 percentage points, the probability that a shock occurs is approximately 81.2%, rising to approximately 82.4% the day before the data release. When market forecasts and consensus expectations differ, the market forecast is the more accurate one in 75% of cases.

Background

Macroeconomic forecasters face an inherent challenge: the moments that matter most (when markets are in disarray, policies are shifting, and structural disruptions occur) are precisely when historical models are most prone to failure. Financial market participants typically release consensus forecasts days before key economic data is published, summarizing expert opinions into market expectations. While these consensus views have value, they often share similar methodological approaches and information sources.

For institutional investors, risk managers, and policymakers, the stakes of forecast accuracy are asymmetric. In calm periods, slightly better forecasts offer only limited value. In times of market turmoil, when volatility soars, correlations break down, or historical relationships stop holding, superior accuracy can deliver significant alpha and limit drawdowns.

Therefore, understanding how forecasts behave during periods of market volatility is crucial. We focus on a key macroeconomic indicator, the year-over-year headline inflation rate (YoY CPI), which is a core reference for future interest rate decisions and an important signal of the health of the economy.

We compared and evaluated the accuracy of predictions across multiple time windows preceding the release of official data. Our core finding is that the so-called "shock alpha" does exist—that is, in tail events, market-based predictions achieve additional accuracy compared to consensus benchmarks. This excess performance is not merely academic in nature, but significantly improves signal quality at critical moments when prediction error has the highest economic cost. In this context, the truly important question is not whether market predictions are "always right," but whether they provide a differentiated signal worthy of inclusion in traditional decision-making frameworks.

Methodology

Data

We analyzed the daily implied forecasts from prediction market traders on the Kalshi platform at three horizons: one week before the data release (matching the time at which consensus expectations are released), the day before the release, and the morning of the release day. Each market used is (or was) a real, tradable, operational market, reflecting actual funded positions at different liquidity levels. For consensus expectations, we collected institutional-level YoY CPI consensus forecasts, which are typically released approximately one week before the official data release from the U.S. Bureau of Labor Statistics.

The sample period is from February 2023 to mid-2025, covering more than 25 monthly CPI release cycles and spanning a variety of different macroeconomic environments.

Shock Classification

We categorized events into three types based on their "unexpected magnitude" relative to historical levels. "Shock" was defined as the absolute difference between consensus expectations and the actual published data:

- Normal event: The forecast error of YoY CPI is less than 0.1 percentage points;

- Moderate shock: The forecast error for YoY CPI is between 0.1 and 0.2 percentage points;

- Major shock: The forecast error for YoY CPI exceeds 0.2 percentage points.

This classification method allows us to examine whether predictive advantage exhibits a systematic difference as the difficulty of prediction changes.
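The three buckets above can be sketched as a small function. This is only an illustration, not the report's code: the function name `classify_shock`, the rounding to two decimals (to absorb floating-point noise at the 0.1 and 0.2 boundaries), and the choice to treat exactly 0.1 and exactly 0.2 as moderate are assumptions made here.

```python
def classify_shock(consensus: float, actual: float) -> str:
    """Bucket a CPI release by the size of the consensus miss (percentage points).

    Assumption: the miss is rounded to two decimals before comparison so that
    values such as 0.1 are not misclassified because of float representation.
    """
    surprise = round(abs(actual - consensus), 2)
    if surprise < 0.1:
        return "normal"      # consensus error below 0.1 pp
    if surprise <= 0.2:
        return "moderate"    # consensus error between 0.1 and 0.2 pp
    return "major"           # consensus error above 0.2 pp
```

For example, with hypothetical inputs, `classify_shock(3.1, 3.4)` falls in the major bucket because the consensus missed by 0.3 percentage points.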

Performance indicators

To evaluate predictive performance, we use the following metrics:

- Mean Absolute Error (MAE): A key accuracy metric, calculated as the average of the absolute differences between predicted and actual values.

- Win rate: When the difference between consensus expectation and market forecast reaches or exceeds 0.1 percentage points (rounded to one decimal place), we record which forecast is closer to the final actual result.

- Forecast horizon analysis: We track how the accuracy of market forecasts evolves from the week before the release to the release date, to reveal the value added by continuously incorporated information.
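The first two metrics can be sketched as follows. This is a minimal illustration under stated assumptions, not the report's implementation: the function names are hypothetical, the win-rate rule reflects one reading of "rounded to one decimal place" (both forecasts are rounded before their gap is compared with the threshold), and ties in distance are not counted as market wins.

```python
from statistics import mean

def mae(forecasts, actuals):
    """Mean absolute error: the average of |forecast - actual|."""
    return mean([abs(f - a) for f, a in zip(forecasts, actuals)])

def mae_reduction(market, consensus, actuals):
    """Fraction by which the market MAE sits below the consensus MAE.

    Assumes the consensus MAE is non-zero.
    """
    return 1 - mae(market, actuals) / mae(consensus, actuals)

def win_rate(market, consensus, actuals, threshold=0.1):
    """Share of contested releases where the market forecast is closer to actual.

    A release is contested when the one-decimal forecasts differ by at least
    `threshold` percentage points (assumed reading of the source's rule).
    Returns None when no release is contested.
    """
    wins = 0
    contested = 0
    for m, c, a in zip(market, consensus, actuals):
        gap = round(abs(round(m, 1) - round(c, 1)), 1)
        if gap < threshold:
            continue  # forecasts agree to one decimal: not counted
        contested += 1
        if abs(m - a) < abs(c - a):
            wins += 1
    return wins / contested if contested else None
```

A result of 0.401 from `mae_reduction` would correspond to the "40.1% lower MAE" phrasing used in this report.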

Results: CPI forecast performance

Overall accuracy is superior

Across all market environments, market-based CPI forecasts have a mean absolute error (MAE) that is 40.1% lower than that of consensus forecasts. The advantage holds at every forecast horizon, ranging from 40.1% (one week ahead) to 42.3% (one day ahead).

Furthermore, when there is a discrepancy between consensus expectations and market implied values, Kalshi's market-based predictions demonstrate a statistically significant win rate, ranging from 75.0% a week in advance to 81.2% on the day of release. If the cases where they match consensus expectations (accurate to one decimal place) are also included, market-based predictions match or outperform consensus expectations in approximately 85% of cases a week in advance.

Such a high directional accuracy rate indicates that when market forecasts differ from consensus expectations, this discrepancy itself has significant informational value regarding "whether a shock event may occur".

"Shock Alpha" does indeed exist

The difference in forecast accuracy is particularly pronounced during shock events. In moderate shock events, at the same forecast horizon as the consensus (one week ahead), the market's forecast MAE is 50% lower than the consensus expectation, and this advantage widens to 56.2% or more the day before the data release. In major shock events, the market's forecast MAE is also 50% lower than the consensus expectation at the same horizon, and the advantage can reach 60% or more the day before the data release. In normal environments without shocks, market forecasts and consensus expectations perform roughly the same.

Although the sample size of the shock events was small (which is reasonable in a world where shocks are inherently highly unpredictable), the overall pattern was very clear: the market’s information aggregation advantage was most valuable when the forecasting environment was most difficult.

However, more important than Kalshi's superior performance during shock periods is that the divergence between market forecasts and consensus expectations can itself signal an impending shock. When the two diverged, market forecasts were the more accurate in 75% of cases (within comparable time windows). Furthermore, threshold analysis shows that when the market's deviation from the consensus exceeds 0.1 percentage points, the probability that a shock occurs is approximately 81.2%, and this probability rises further to approximately 84.2% the day before the data release.

This significant difference in practice indicates that prediction markets can not only serve as a competing prediction tool alongside consensus expectations, but also as a "meta-signal" about prediction uncertainty, transforming the divergence between the market and consensus into a quantifiable early indicator for warning of potential unexpected outcomes.
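The threshold analysis can be read as a conditional frequency: among releases where the market and the consensus diverge by more than 0.1 percentage points, how often does the consensus end up missing by a shock-sized amount? The sketch below is an assumed reading of that calculation, with a hypothetical function name and rounding to two decimals to avoid floating-point edge cases.

```python
def divergence_hit_rate(market, consensus, actuals, gap=0.1, shock=0.1):
    """Share of flagged releases that turn out to be shocks.

    A release is flagged when |market - consensus| exceeds `gap`.
    A shock is a consensus miss of at least `shock` percentage points
    (the moderate or major bucket). Returns None if nothing is flagged.
    """
    flagged = 0
    shocks = 0
    for m, c, a in zip(market, consensus, actuals):
        if round(abs(m - c), 2) <= gap:
            continue  # market and consensus are close: no warning signal
        flagged += 1
        if round(abs(a - c), 2) >= shock:
            shocks += 1
    return shocks / flagged if flagged else None
```

Under this reading, the 81.2% figure quoted above would be the value of this ratio at the one-week horizon.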

Further Discussion

An obvious question arises: why do market forecasts outperform consensus forecasts during shocks? We propose three complementary mechanisms to explain this phenomenon.

Heterogeneity of market participants and "collective intelligence"

While traditional consensus expectations integrate the views of multiple institutions, they often share similar methodological assumptions and information sources. Econometric models, Wall Street research reports, and government data releases constitute a highly overlapping common knowledge base.

In contrast, prediction markets aggregate positions held by participants with diverse information bases: including proprietary models, industry-level insights, alternative data sources, and experience-based intuition. This diversity of participants has a solid theoretical foundation in the "wisdom of crowds" theory, which suggests that when participants possess relevant information and their prediction errors are not perfectly correlated, aggregating independent predictions from diverse sources often yields better estimates.
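The condition that errors are "not perfectly correlated" can be made concrete with a standard textbook result, shown here as a stylized sketch rather than anything taken from the report. Assume n unbiased forecasters whose errors share the same variance and the same pairwise correlation; the error variance of their simple average is then the individual variance times (rho + (1 - rho) / n). The function name and the equal-variance, equal-correlation setup are assumptions.

```python
def crowd_error_variance(sigma2: float, rho: float, n: int) -> float:
    """Error variance of the simple average of n unbiased forecasts.

    Assumes every forecaster has error variance `sigma2` and every pair of
    errors has correlation `rho`:
        Var(mean) = sigma2 * (rho + (1 - rho) / n)
    """
    return sigma2 * (rho + (1 - rho) / n)
```

With rho equal to 1 the average is no better than a single forecaster, while lower correlation lets the averaged error shrink as n grows. This is why a pool of forecasters drawing on the same models and data gains less from aggregation than a pool with diverse information.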

The value of this information diversity is particularly prominent when the macro environment undergoes a "state switch"—individuals with scattered and localized information interact in the market, and their fragmented information is combined to form a collective signal.

Differences in participant incentive structures

Consensus forecasters at the institutional level are often situated within complex organizational and reputational systems whose incentives systematically deviate from the goal of "purely pursuing predictive accuracy." The career risks faced by professional forecasters create an asymmetric reward structure: significant prediction errors carry substantial reputational costs, while even extremely accurate predictions, especially those achieved by deviating significantly from peer consensus, may not yield proportional professional rewards.

This asymmetry induces "herding," where forecasters tend to cluster their predictions around a consensus value, even if their personal information or model output suggests different outcomes. This is because, within professional systems, the cost of "being wrong in isolation" often outweighs the benefits of "being right in isolation."

In stark contrast, the incentive mechanism faced by participants in prediction markets achieves a direct alignment between prediction accuracy and economic outcomes—accurate predictions mean profits, and incorrect predictions mean losses. In this system, reputational factors are virtually nonexistent; the only cost of deviating from market consensus is economic loss, entirely dependent on the accuracy of the prediction. This structure exerts stronger selective pressure on prediction accuracy—participants who can systematically identify errors in consensus predictions accumulate capital and enhance their influence in the market through larger positions; while those who mechanically follow the consensus suffer continuous losses when the consensus is proven wrong.

This differentiation in incentive structures is often most pronounced and economically significant during periods of significantly increased uncertainty, when the professional costs of institutional forecasters deviating from expert consensus reach their peak.

Information aggregation efficiency

A noteworthy empirical fact is that even a week before the data release—a timeframe that coincides with the typical window for consensus forecasts—market predictions still demonstrate a significant advantage in accuracy. This suggests that the market advantage does not solely stem from the "speed of information acquisition" often cited by prediction market participants.

Instead, market forecasts may more efficiently aggregate fragments of information that are too dispersed, too industry-specific, or too vague to be formally incorporated into traditional econometric forecasting frameworks. The relative advantage of prediction markets may lie not in earlier access to public information but in their ability to synthesize heterogeneous information more effectively within the same time window, whereas survey-based consensus mechanisms, even with the same window, often struggle to process this information efficiently.

Limitations and precautions

Our findings come with an important caveat. The overall sample covers only about 30 months, and major shock events are by definition quite rare, so statistical power remains limited for larger tail events. Longer time series would strengthen future inferences, although the current results already strongly suggest both the superiority of market forecasts and the signal value of their divergence from consensus.

Conclusion

We documented significant, systematic, and economically meaningful outperformance of market-based forecasts relative to expert consensus expectations, particularly during shock periods, when forecast accuracy is most critical. Market-based CPI forecasts had roughly 40% lower error overall, and during periods of significant structural change the error reduction could reach approximately 60%.

Based on these findings, several future research directions become particularly important: first, to study, with a larger sample and across multiple macroeconomic indicators, whether "Shock Alpha" events can themselves be predicted using volatility and forecast-divergence indicators; second, to determine the liquidity threshold above which the market consistently outperforms traditional forecasting methods; and third, to explore the relationship between market forecasts and the forecasts implied by financial instruments that trade at high frequency.

In environments where consensus predictions heavily rely on highly correlated model assumptions and shared information sets, prediction markets offer an alternative information aggregation mechanism that can capture state transitions earlier and process heterogeneous information more efficiently. For entities that need to make decisions in economic environments characterized by structural uncertainty and an increasing frequency of tail events, "shock alpha" may not only represent a gradual improvement in predictive capabilities but should also become a fundamental component of their robust risk management infrastructure.