Is data infrastructure ready for the era of crypto super apps?

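- Core view: Data infrastructure must become real-time to support profitable trading.

- Key elements:

  - Block times on high-performance public chains have reached the millisecond level.

  - AI-driven signal execution improves return efficiency.

  - Fusing on-chain and off-chain data reveals alpha.

- Market impact: Pushes data platforms to transform toward the execution layer.

- Timeliness: Medium-term impact.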

Original author: Story, IOSG Ventures

TL;DR

Data challenges: The race for shorter block times on high-performance public chains has entered the sub-second era. High concurrency, volatile traffic, and multi-chain heterogeneity on the consumer side have increased data complexity, forcing data infrastructure to shift toward real-time incremental processing and dynamic scalability. Traditional batch ETL pipelines have latencies ranging from minutes to hours, which makes them inadequate for real-time trading. Platforms such as The Graph, Nansen, and Pangea are introducing stream processing to bring latency down to real-time tracking levels.

The paradigm shift in data competition: The previous cycle focused on "understanding"; this cycle emphasizes "profitability." Under the Bonding Curve model, a one-minute delay can multiply the cost of entry several times over. Tools have iterated from manual slippage settings to sniping bots to GMGN's integrated terminal. As on-chain trade execution becomes commoditized, the competitive frontier shifts to the data itself: whoever captures signals faster will help users profit.

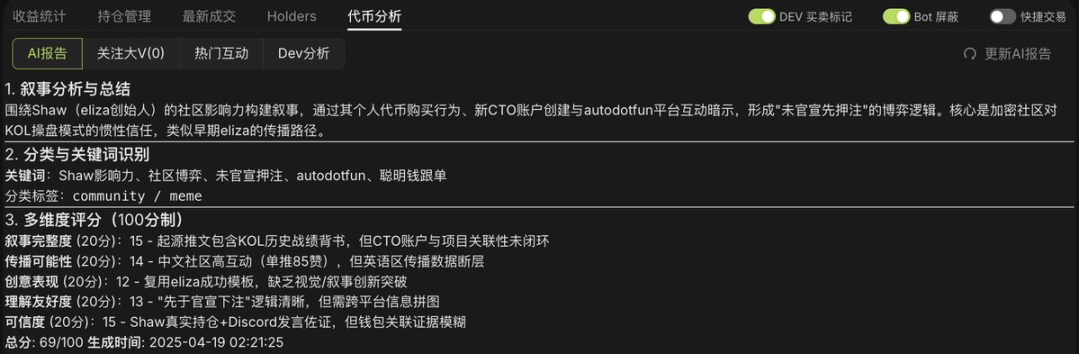

Expanding the dimensions of trading data: Memes are essentially the financialization of attention, so narrative, attention, and subsequent spread are crucial. Closing the loop between off-chain public opinion and on-chain data: narrative tracking and summarization, together with sentiment quantification, are becoming central to trading. "Underwater data": capital flows, role profiling, and smart money/KOL address labeling reveal the hidden manipulation behind anonymous on-chain addresses. A new generation of trading terminals integrates multi-dimensional on-chain and off-chain signals within fractions of a second, improving entry and risk-avoidance decisions.

AI-driven actionable signals: from information to profits. The competitive objectives in this new phase are speed, automation, and excess returns. LLMs and multimodal AI automatically extract decision signals and integrate them with copy trading and stop-loss/take-profit execution. Risks and challenges include hallucinations, short signal lifespans, execution delays, and risk control. Balancing speed and accuracy is essential, and reinforcement learning and simulation backtesting are key to doing so.

The survival choice for data dashboards: Lightweight data aggregation and dashboard applications lack a competitive moat, and their niche is shrinking. Downward: deepen high-performance underlying pipelines and integrated data research. Upward: expand into the application layer, address user scenarios directly, and increase data-access activity. The future landscape: either become Web 3's "water, electricity, and gas" infrastructure, or become a user platform in the mold of a crypto Bloomberg.

The moat is shifting towards "executable signals" and "underlying data capabilities." The closed loop of long-tail assets and transaction data presents a unique opportunity for crypto-native entrepreneurs. The window of opportunity for the next 2-3 years:

- Upstream infrastructure: Web 2-level processing power + Web 3 native requirements → Web 3 Databricks/AWS.

- Downstream execution platform: AI Agent + multi-dimensional data + seamless execution → Crypto Bloomberg Terminal.

Thanks to Hubble AI, Space & Time, OKX DEX and other projects for their support of this research report!

Introduction: The Triple Resonance of Meme, High-Performance Public Chain, and AI

In the previous cycle, growth in on-chain transactions relied primarily on infrastructure iteration. In the new cycle, as that infrastructure matures, super apps like Pump.fun are becoming new growth engines for the crypto industry. This asset issuance model, with its unified issuance mechanism and carefully designed liquidity, has created a fair, level trading environment in which get-rich-quick stories abound. The replicability of this high-multiple wealth effect is profoundly changing users' return expectations and trading habits. Users demand not only faster entry but also the ability to acquire, parse, and act on multi-dimensional data in record time. Existing data infrastructure is struggling to meet these demands for data density and real-time performance.

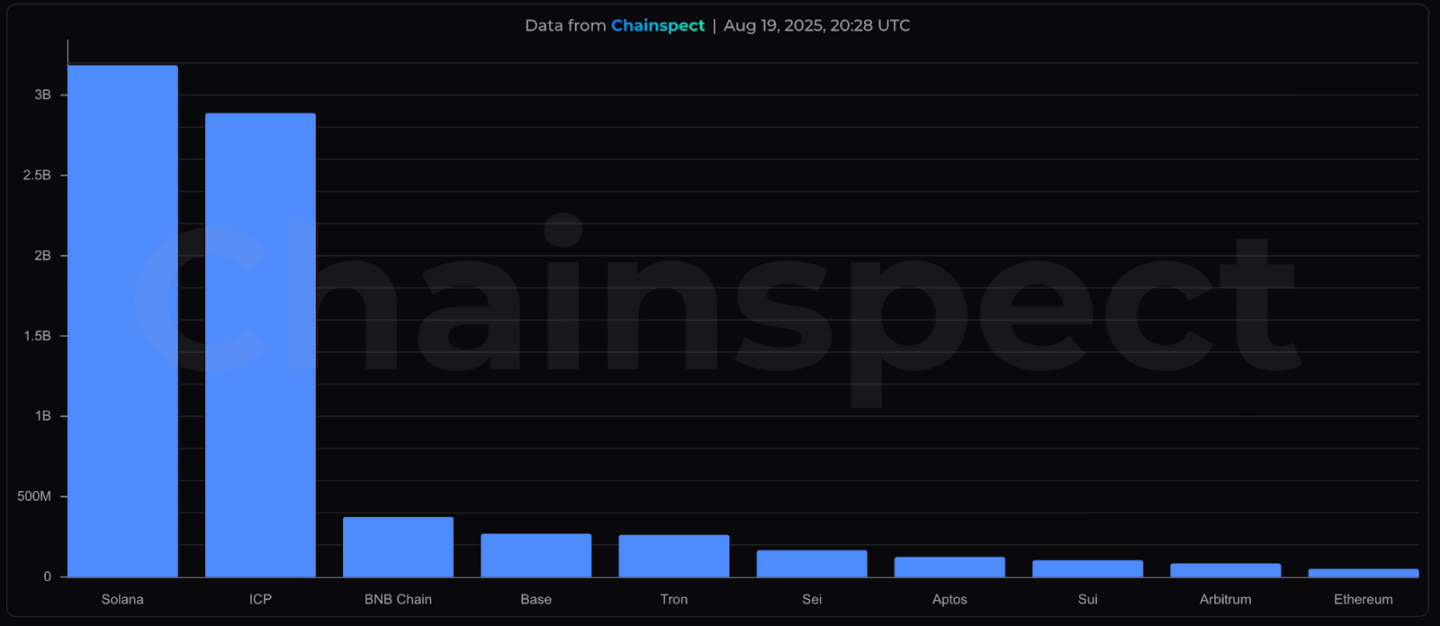

This has created demand for a more advanced trading environment: lower friction, faster confirmations, and deeper liquidity. Trading venues are rapidly migrating to high-performance public chains and Layer 2 rollups, represented by Solana and Base respectively. Transaction data volumes on these chains are more than 10 times those of Ethereum in the previous cycle, posing even more severe performance challenges for existing data providers. With a new generation of high-performance public chains such as Monad and MegaETH about to launch, demand for on-chain data processing and storage will grow exponentially.

At the same time, the rapid maturation of AI is democratizing access to intelligence. GPT-5 is billed as offering PhD-level intelligence, and large multimodal models such as Gemini can readily interpret candlestick charts. With AI tools, trading signals that were once complex can now be understood and executed by ordinary users. Traders are therefore beginning to rely on AI for trading decisions, and those decisions depend on multi-dimensional, high-quality data. AI is evolving from an auxiliary analysis tool into a core trading decision platform, and its widespread adoption has further intensified the demand for real-time, interpretable, and scalable data.

Under the triple resonance of the meme trading craze, the expansion of high-performance public chains and the commercialization of AI, the on-chain ecosystem has an increasingly urgent need for a new data infrastructure.

Facing the data challenges of 100,000 TPS and millisecond-level block generation

With the rise of high-performance public chains and high-performance Rollups, the scale and speed of on-chain data have entered a new stage.

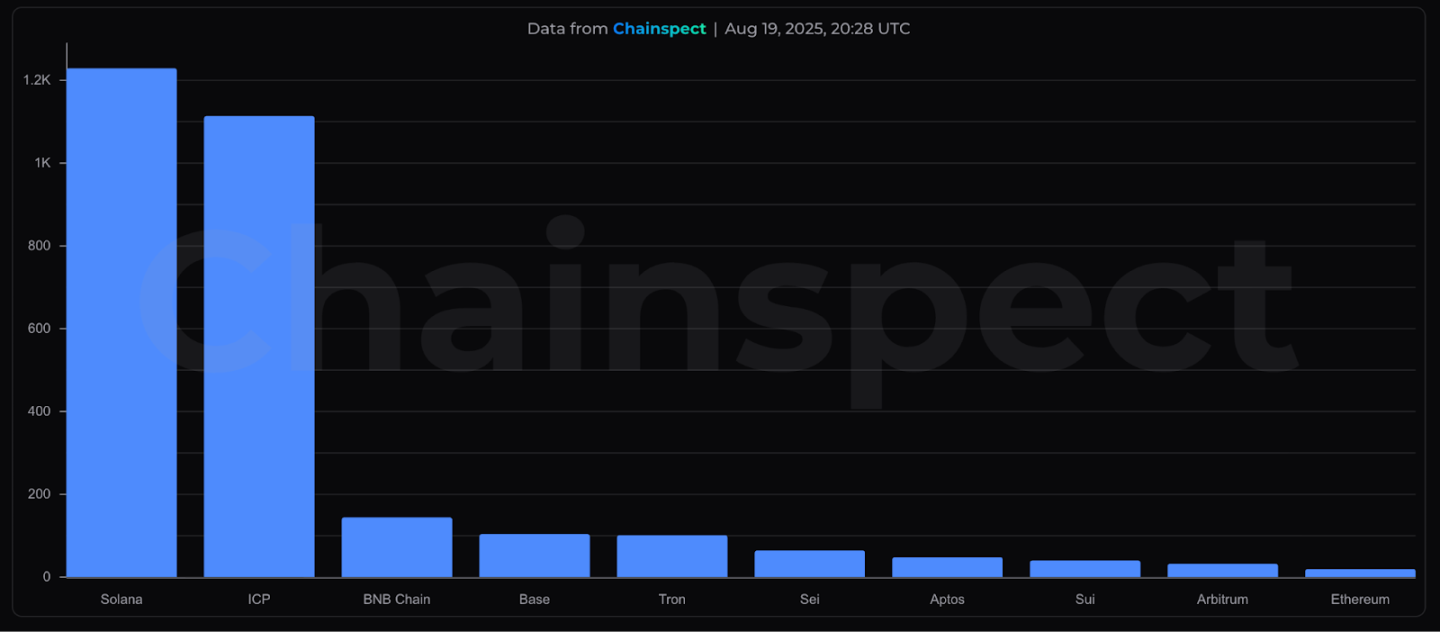

With high-concurrency, low-latency architectures now widespread, daily transaction counts easily exceed 10 million, and raw data volumes are measured in hundreds of GB. For example, Solana has averaged over 1,200 TPS over the past 30 days, with more than 100 million transactions per day, and on August 17 it reached a record high of 107,664 TPS. According to available statistics, Solana's ledger data is growing by 80-95 TB per year, or roughly 220-260 GB per day.

▲ Chainspect, 30-day average TPS

▲ Chainspect, 30-day trading volume
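The throughput and storage figures above can be sanity-checked with simple unit conversions. This is a minimal sketch that assumes decimal units (1 TB = 1,000 GB) and a 365-day year; the inputs are the figures quoted in the paragraph, and the helper names are illustrative.

```python
SECONDS_PER_DAY = 86_400
DAYS_PER_YEAR = 365
GB_PER_TB = 1_000  # decimal units assumed (not TiB/GiB)


def daily_transactions(avg_tps: float) -> float:
    """Transactions per day implied by a sustained average TPS."""
    return avg_tps * SECONDS_PER_DAY


def daily_ledger_growth_gb(tb_per_year: float) -> float:
    """Average ledger growth per day in GB, given annual growth in TB."""
    return tb_per_year * GB_PER_TB / DAYS_PER_YEAR


print(f"{daily_transactions(1_200):,.0f} tx/day")  # 103,680,000 tx/day
for tb in (80, 95):
    # 80 TB/yr -> 219 GB/day, 95 TB/yr -> 260 GB/day
    print(f"{tb} TB/yr -> {daily_ledger_growth_gb(tb):.0f} GB/day")
```

A sustained 1,200 TPS works out to roughly 104 million transactions per day, consistent with the 100-million figure, and 80-95 TB per year corresponds to roughly 219-260 GB per day.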

Throughput is not the only thing rising: block times on emerging public chains have fallen into the sub-second, even millisecond, range. BNB Chain's Maxwell upgrade reduced block times to 0.8 seconds, while Base's Flashblocks technology brings effective block times down to 200 ms. In the second half of this year, Solana plans to replace PoH with the Alpenglow consensus protocol, cutting block confirmation times to about 150 ms, while the MegaETH mainnet is targeting real-time block times of 10 ms. These consensus and engineering breakthroughs have greatly improved real-time transaction performance, but they also place unprecedented demands on block data synchronization and decoding.

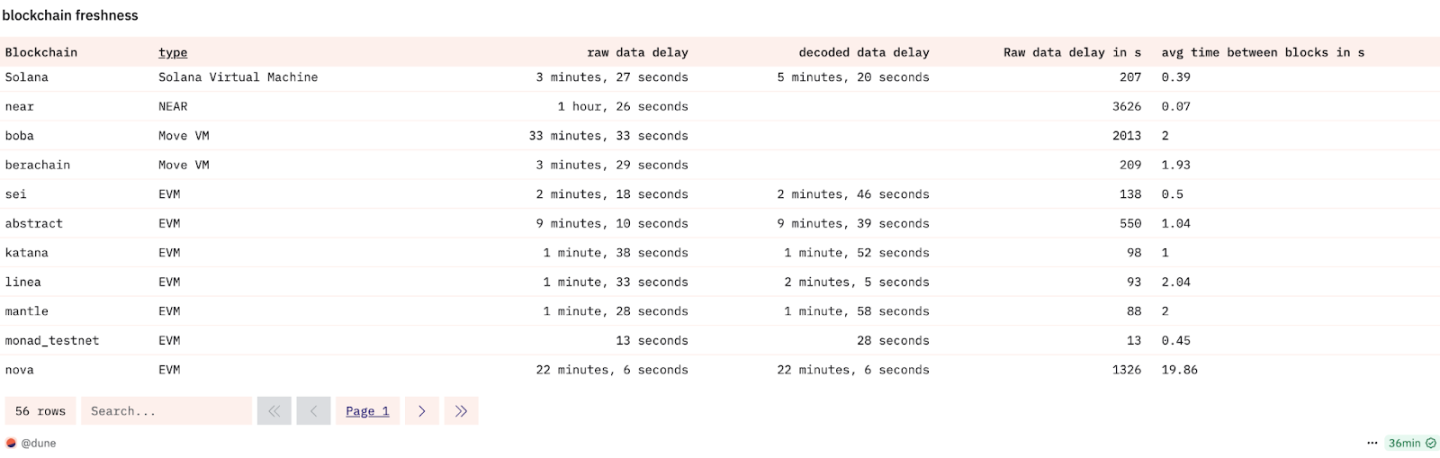

However, downstream data infrastructure still relies largely on batch ETL pipelines, which inevitably introduce data delays. On Solana, for example, contract interaction event data is typically delayed by about five minutes, and protocol-level aggregated data can take up to an hour. Transactions that are confirmed on-chain within 400 milliseconds thus become visible in analytics tools only after a delay hundreds to thousands of times longer, which is unacceptable for real-time trading applications.

▲ Dune, Blockchain Freshness
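The size of that gap is easy to quantify. A minimal sketch, assuming the roughly 400 ms confirmation time and the five-minute and one-hour pipeline delays quoted above (working in milliseconds keeps the arithmetic exact):

```python
CONFIRMATION_MS = 400  # approximate on-chain confirmation time quoted above


def delay_multiple(pipeline_delay_ms: int, confirmation_ms: int = CONFIRMATION_MS) -> float:
    """How many times longer the analytics delay is than on-chain confirmation."""
    return pipeline_delay_ms / confirmation_ms


event_multiple = delay_multiple(5 * 60 * 1_000)       # contract event data, ~5 minutes
aggregate_multiple = delay_multiple(60 * 60 * 1_000)  # protocol-level aggregates, up to ~1 hour
print(f"events: ~{event_multiple:.0f}x, aggregates: ~{aggregate_multiple:.0f}x")
# events: ~750x, aggregates: ~9000x
```

In other words, the data reaches analytics tools hundreds to thousands of times later than the transaction itself is confirmed.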

To address these supply-side challenges, some platforms have moved to streaming and real-time architectures. The Graph uses Substreams and Firehose to reduce data latency to near real time. Nansen, by adopting real-time analytics technologies such as ClickHouse, has achieved a tenfold performance improvement in Smart Alerts and real-time dashboards. Pangea aggregates compute, storage, and bandwidth contributed by community nodes to deliver real-time streaming data with sub-100 ms latency to business customers such as market makers, quantitative traders, and central limit order books (CLOBs).

▲ Chainspect
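The difference between batch and streaming consumption can be illustrated with a small alert rule that is evaluated on every event as it arrives, instead of on a periodic batch. This is a hypothetical sketch: the `Swap` event shape, the 60-second window, and the USD threshold are assumptions, and it does not describe how The Graph, Nansen, or Pangea implement their pipelines.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Swap:
    ts: float       # event timestamp in seconds (hypothetical field)
    token: str      # token identifier (hypothetical field)
    buy_usd: float  # USD value bought in this swap (hypothetical field)


class WindowedBuyAlert:
    """Per-token sliding-window buy volume, updated as each event arrives (events assumed in time order)."""

    def __init__(self, window_s: float, threshold_usd: float) -> None:
        self.window_s = window_s
        self.threshold_usd = threshold_usd
        self.events: dict[str, deque] = {}
        self.totals: dict[str, float] = {}

    def on_event(self, swap: Swap) -> bool:
        """Ingest one swap; return True while the token's windowed buy volume meets the threshold."""
        q = self.events.setdefault(swap.token, deque())
        q.append(swap)
        self.totals[swap.token] = self.totals.get(swap.token, 0.0) + swap.buy_usd
        # Evict events that fell out of the window, so each update touches only the window edges.
        while q and q[0].ts <= swap.ts - self.window_s:
            self.totals[swap.token] -= q.popleft().buy_usd
        return self.totals[swap.token] >= self.threshold_usd


alert = WindowedBuyAlert(window_s=60, threshold_usd=10_000)
stream = [Swap(0, "MEME", 4_000), Swap(30, "MEME", 5_000), Swap(70, "MEME", 2_000), Swap(80, "MEME", 4_000)]
print([alert.on_event(s) for s in stream])  # [False, False, False, True]
```

Because the running total is adjusted incrementally, the alert fires on the event that crosses the threshold rather than at the next batch run.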

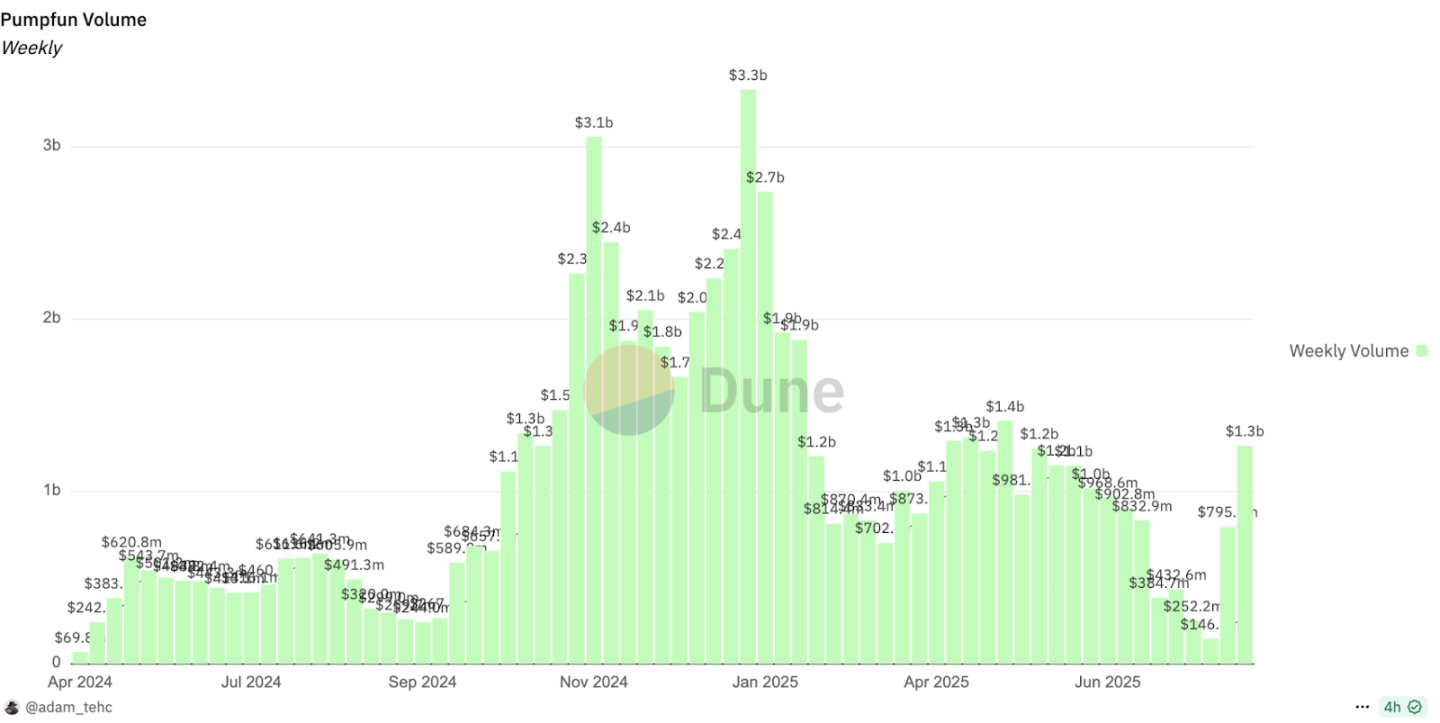

Beyond sheer volume, on-chain trading also shows severe traffic imbalances. Over the past year, Pump.fun's weekly trading volume has swung nearly 30-fold between its low and its high. In 2024, the meme trading platform GMGN suffered six server outages in four days, which forced it to migrate its underlying database from AWS Aurora to TiDB, an open-source distributed SQL database. The migration significantly improved the system's horizontal scalability and compute elasticity, improved business agility by roughly 30%, and substantially relieved pressure during peak trading periods.

▲ Dune, Pumpfun Weekly Volume

▲ Odaily, TiDB's Web 3 service case
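Elastic capacity for such swings is often driven by a proportional scaling rule. This sketch borrows the replica formula documented for the Kubernetes Horizontal Pod Autoscaler, desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric), clamped to assumed bounds; the QPS figures and per-replica target are hypothetical, and nothing here describes GMGN's or TiDB's actual setup.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 2, max_replicas: int = 200) -> int:
    """HPA-style proportional scaling, clamped to [min_replicas, max_replicas].

    current_metric and target_metric are per-replica averages (e.g. queries per second).
    """
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# Hypothetical service sized at 1,000 QPS per replica, currently running 4 replicas.
print(desired_replicas(4, 500, 1_000))     # quiet week: 2,000 QPS total -> 2
print(desired_replicas(4, 15_000, 1_000))  # 30x peak: 60,000 QPS total -> 60
```

The Kubernetes controller itself also applies a tolerance band and stabilization windows to avoid flapping between sizes; those refinements are omitted here.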

The multi-chain ecosystem compounds this complexity. Log formats, event structures, and transaction fields differ across public chains, so each new chain requires custom parsing logic, which strains the flexibility and scalability of data infrastructure. As a result, some data providers have adopted a "customer-first" strategy: they prioritize support for chains with the most active trading, balancing flexibility against scalability.
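One common way to contain per-chain parsing logic is an adapter registry that maps each chain's raw payload into a single normalized schema, so downstream consumers never see chain-specific fields. This is a minimal sketch; the chain keys and raw field names are hypothetical stand-ins, not the actual RPC formats of any chain.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Transfer:
    """Chain-agnostic record that downstream analytics consume."""
    chain: str
    tx_id: str
    sender: str
    receiver: str
    amount: int  # in the chain's smallest unit


Parser = Callable[[dict], Transfer]
PARSERS: dict[str, Parser] = {}


def register(chain: str) -> Callable[[Parser], Parser]:
    """Decorator that adds a per-chain parser to the registry."""
    def wrap(fn: Parser) -> Parser:
        PARSERS[chain] = fn
        return fn
    return wrap


@register("evm_like")
def parse_evm_like(raw: dict) -> Transfer:
    # Hypothetical log-style payload with the amount as a hex string.
    return Transfer("evm_like", raw["hash"], raw["from"], raw["to"], int(raw["value"], 16))


@register("svm_like")
def parse_svm_like(raw: dict) -> Transfer:
    # Hypothetical instruction-style payload with nested account fields.
    ix = raw["instruction"]
    return Transfer("svm_like", raw["signature"], ix["source"], ix["destination"], ix["lamports"])


def normalize(chain: str, raw: dict) -> Transfer:
    """Route a raw payload to its chain's parser; unknown chains fail loudly."""
    parser = PARSERS.get(chain)
    if parser is None:
        raise ValueError(f"no parser registered for chain {chain!r}")
    return parser(raw)


print(normalize("evm_like", {"hash": "0xabc", "from": "0x1", "to": "0x2", "value": "0x64"}).amount)  # 100
```

Under this design, supporting a new chain means registering one more parser; everything downstream of `Transfer` stays unchanged.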

If data processing remains stuck in fixed-interval batch ETL (Extract, Transform, Load) even as high-performance blockchains proliferate, it will suffer from latency backlogs, decoding bottlenecks, and query lag, and will fail to meet the demand for real-time, fine-grained, interactive data consumption. On-chain data infrastructure must therefore evolve toward streaming incremental processing and real-time computing, coupled with load-balancing mechanisms that can absorb the concurrency spikes caused by the crypto market's periodic trading peaks. This is not only a natural extension of the technology roadmap but also a key step toward stable real-time queries, and it will be a genuine differentiator among next-generation on-chain data platforms.
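The contrast between fixed-interval batch ETL and streaming incremental processing can be shown with a toy per-token volume metric: the batch version rescans the full history on every run, while the incremental version folds in only the newest block. The block shape is a hypothetical simplification, and a production pipeline would also need to handle chain reorganizations, which this sketch ignores.

```python
from collections import Counter

Block = list[tuple[str, int]]  # (token, volume) pairs in one block; hypothetical shape


def batch_volume(history: list[Block]) -> Counter:
    """Batch ETL style: rescan every block each time the job runs."""
    totals = Counter()
    for block in history:
        for token, volume in block:
            totals[token] += volume
    return totals


class IncrementalVolume:
    """Streaming style: keep state and apply each new block exactly once."""

    def __init__(self) -> None:
        self.totals = Counter()

    def apply(self, block: Block) -> None:
        for token, volume in block:
            self.totals[token] += volume


blocks = [[("MEME", 5), ("DOGE", 2)], [("MEME", 3)], [("DOGE", 7), ("MEME", 1)]]
inc = IncrementalVolume()
for b in blocks:
    inc.apply(b)  # cost proportional to the new block only
print(inc.totals["MEME"], inc.totals["DOGE"])  # 9 9
```

Both paths produce the same totals, but the incremental one does work proportional to each new block rather than to the whole history, which is what keeps latency flat as the chain grows.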

Speed is wealth: a paradigm shift in on-chain data competition

The core proposition of on-chain data has shifted from visualization to actionability. In the last cycle, Dune was the standard tool for on-chain analysis. It met researchers' and investors' need for comprehensibility, letting them piece together on-chain narratives with SQL queries and charts.

- GameFi and DeFi players relied on Dune to track capital inflows and outflows, calculate farming yields, and exit before market turning points.

- NFT players used Dune to analyze trading volume trends, whale holdings, and holder distribution to gauge market heat.

In this cycle, however, meme traders are the most active consumer group. They have driven the breakout app Pump.fun to $700 million in cumulative revenue, nearly double the total revenue of OpenSea, the leading consumer app of the previous cycle.

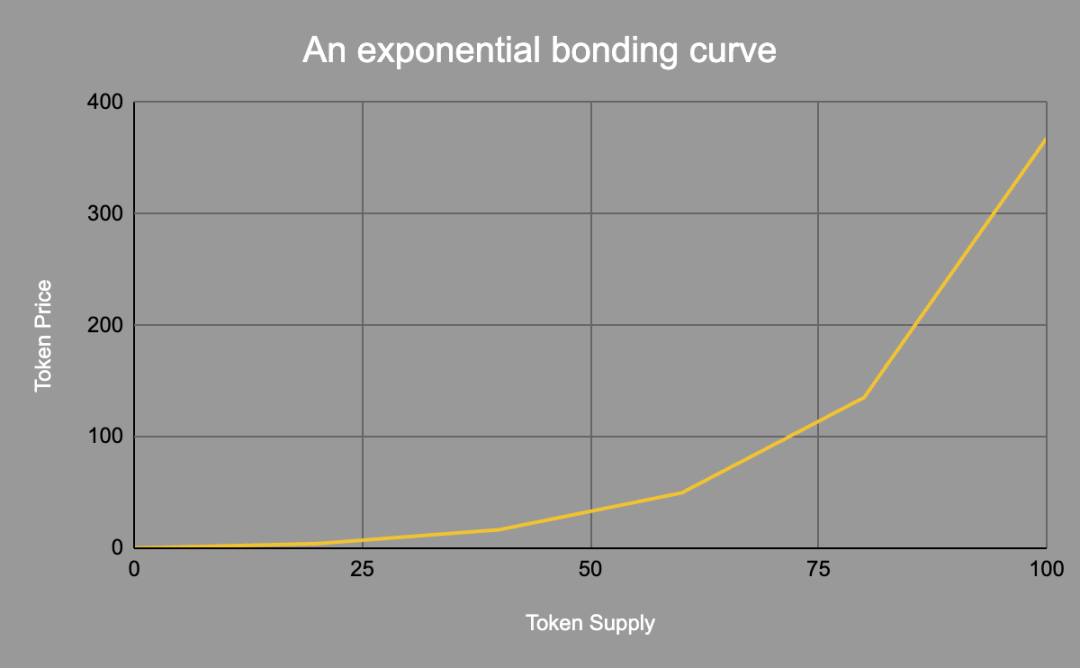

In the meme market, time sensitivity is magnified to the extreme. Speed is no longer a nice-to-have but a core variable that determines profit and loss. In a primary market priced by a Bonding Curve, speed is cost: token prices climb steeply as buying demand accumulates, so even a one-minute delay can multiply the cost of entry several times. According to Multicoin research, the most profitable players often pay 10% slippage just to get in three blocks ahead of their competitors. The wealth effect and the "get-rich-quick myth" drive players to chase second-level candlestick charts, same-block execution engines, and one-stop decision dashboards, competing on the speed of both information gathering and order placement.

▲ Binance
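A constant-product curve makes the "speed is cost" point concrete. This sketch assumes an x·y = k bonding curve with virtual reserves, a design commonly associated with Pump.fun-style launchpads; the 30 SOL / 1.073 billion token starting reserves are illustrative assumptions, fees are ignored, and none of the numbers come from this article. Because the spot price is x/y and y = k/x, the price equals x²/k, so doubling the SOL reserve quadruples the price.

```python
def buy(sol_reserve: float, token_reserve: float, sol_in: float) -> tuple[float, float, float]:
    """Constant-product buy (fees ignored): returns (tokens_out, new_sol_reserve, new_token_reserve)."""
    k = sol_reserve * token_reserve
    new_sol = sol_reserve + sol_in
    new_tok = k / new_sol
    return token_reserve - new_tok, new_sol, new_tok


def spot_price(sol_reserve: float, token_reserve: float) -> float:
    """Marginal price in SOL per token on a constant-product curve."""
    return sol_reserve / token_reserve


SOL0, TOK0 = 30.0, 1_073_000_000.0  # illustrative virtual reserves (assumption)

early_tokens, _, _ = buy(SOL0, TOK0, 1.0)  # buy 1 SOL immediately
_, sol1, tok1 = buy(SOL0, TOK0, 30.0)      # meanwhile, others buy 30 SOL
late_tokens, _, _ = buy(sol1, tok1, 1.0)   # the same 1 SOL, one minute later

price_multiple = spot_price(sol1, tok1) / spot_price(SOL0, TOK0)
cost_multiple = early_tokens / late_tokens  # late cost per token / early cost per token
print(f"spot price x{price_multiple:.2f}, entry cost per token x{cost_multiple:.2f}")
# spot price x4.00, entry cost per token x3.94
```

Under these assumptions, 30 SOL of competing demand arriving during a one-minute hesitation makes the same 1 SOL buy almost four times more expensive per token.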

In the manual-trading era of Uniswap, users had to set their own slippage and gas, and prices were not visible on the front end, so trading felt more like buying a lottery ticket. In the era of the BananaGun sniper bot, automated sniping and slippage settings put retail players on the same starting line as "scientists" (technically skilled script traders). In the PepeBoost era, bots pushed pool-launch alerts together with data on the earliest buyers' positions. This has culminated in today's GMGN era: a terminal that integrates candlestick charts, multi-dimensional data analysis, and trade execution, making it the "Bloomberg Terminal" of meme trading.

As trading tools continue to iterate and execution thresholds gradually disappear, the forefront of competition inevitably slides towards the data itself: whoever can capture signals faster and more accurately will be able to establish a trading advantage in the ever-changing market and help users make money.

Dimensionality is advantage: the truth beyond the K-line

Memecoins are, at their core, the financialization of attention. High-quality narratives keep breaking out to new audiences, attracting attention and driving up price and market capitalization. For meme traders, real-time performance is crucial, but achieving outsized results hinges on answering three questions: what the token's narrative is, who is paying attention, and how that attention can be amplified. The candlestick chart only reflects the answers; the real drivers lie in multi-dimensional data, namely off-chain sentiment, on-chain addresses and holdings, and the precise mapping between the two.

On-chain × Off-chain: A Closed Loop from Attention to Transaction

Users generate attention off-chain and complete transactions on-chain, and data that closes the loop between the two is becoming the core advantage in meme trading.

#Narrative tracking and propagation-chain identification

On social platforms like Twitter, tools such as XHunt help meme traders analyze which KOLs follow a project, revealing its connections and potential paths for spreading attention. 6551 DEX aggregates Twitter feeds, official websites, tweet comments, offer history, KOL followers, and more to generate comprehensive AI-powered reports that update in real time as public opinion evolves, helping traders capture narratives accurately.

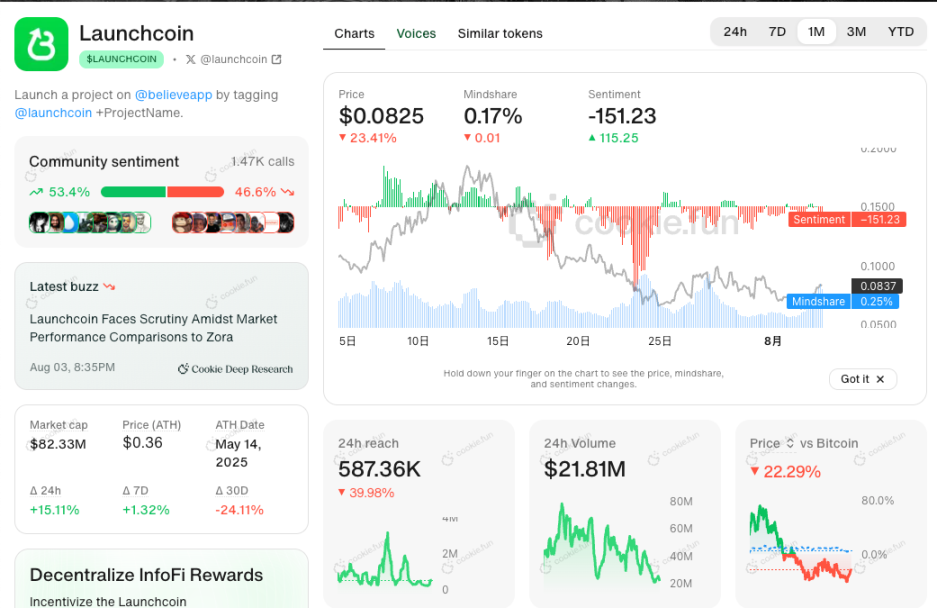

#Sentiment indicator quantification

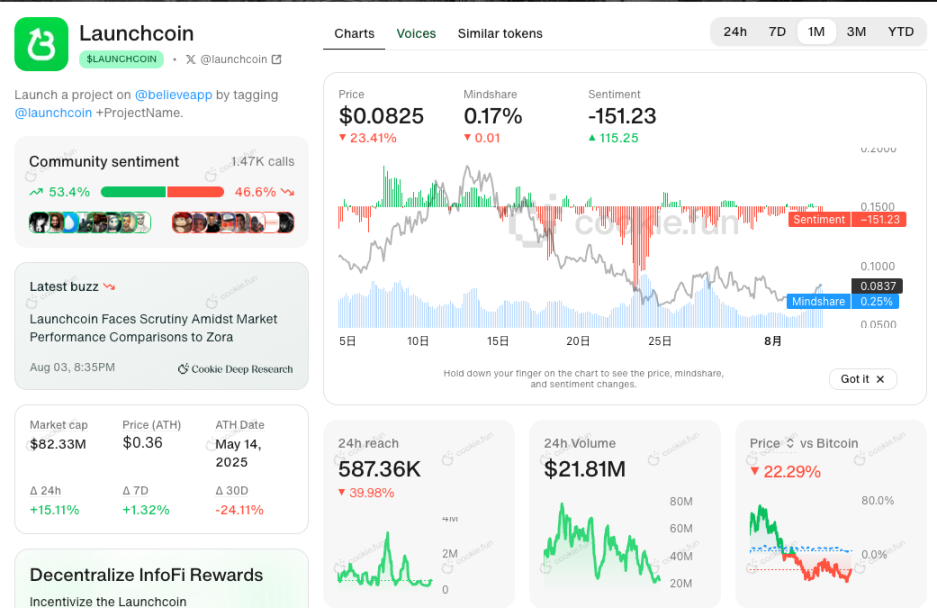

InfoFi tools such as Kaito and Cookie.fun aggregate and analyze Crypto Twitter content and provide quantifiable metrics for mindshare, sentiment, and influence. Cookie.fun, for example, overlays these metrics directly on price charts, turning off-chain sentiment into readable technical indicators.

▲ Cookie.fun
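Metrics such as mindshare are, broadly, a share of voice. This hypothetical sketch computes an influence-weighted mention share per token over one time window; the log-scaled follower weighting is an illustrative choice, not Kaito's or Cookie.fun's actual methodology.

```python
import math
from collections import defaultdict


def mindshare(mentions: list[tuple[str, int]]) -> dict[str, float]:
    """Influence-weighted share of mentions per token within one time window.

    mentions: (token, author_follower_count) pairs (hypothetical input format).
    Each mention is weighted by log10(1 + followers), so bigger accounts count
    more, but sublinearly; shares sum to 1.
    """
    weights: dict[str, float] = defaultdict(float)
    for token, followers in mentions:
        weights[token] += math.log10(1 + followers)
    total = sum(weights.values())
    if total == 0:
        return {}
    return {token: w / total for token, w in weights.items()}


window = [("MEME", 99), ("MEME", 9), ("DOGE", 999)]
print({t: round(s, 3) for t, s in mindshare(window).items()})  # {'MEME': 0.5, 'DOGE': 0.5}
```

Here two small accounts talking about one token carry the same weight as a single large account talking about another; plotting this share per interval next to price is one way to turn off-chain chatter into a chart-readable indicator.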

#On-chain and off-chain are equally important

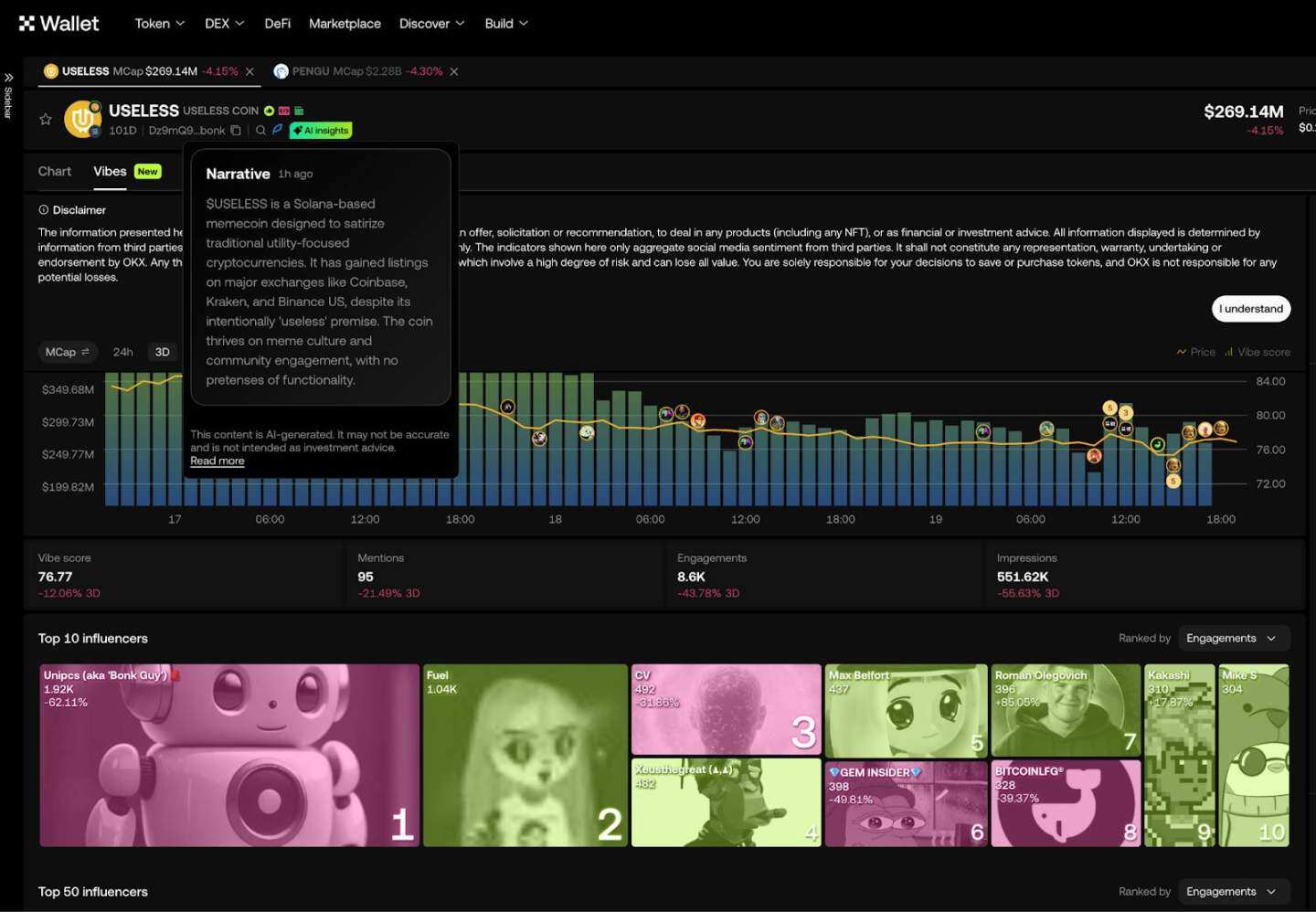

OKX DEX displays Vibes analytics alongside market data, aggregating KOL call times, top KOLs, narrative summaries, and composite scores to cut the time spent gathering off-chain information. Narrative summaries have become one of the most popular AI features among users.

Underwater Data Display: Turning “Visible Ledger” into “Usable Alpha”

In traditional finance, order flow data is controlled by large brokers, forcing quantitative firms to pay hundreds of millions of dollars annually to access it in order to optimize their trading strategies. In contrast, crypto's trading ledgers are completely public and transparent, effectively making valuable intelligence "open source," creating an open-pit gold mine waiting to be mined.

The value of underwater data lies in extracting invisible intentions from visible transactions. This includes capital flows and role profiling: market makers accumulating or distributing positions, KOL alt-account addresses, concentrated or dispersed holdings, bundled transactions, and unusual capital flows. It also involves address profiling: labeling addresses as smart money, KOL/VC, developer, phishing, or insider, and then linking them to off-chain identities so that on-chain and off-chain data connect.

These signals are often difficult for ordinary users to detect, yet they can significantly influence short-term market trends. By analyzing address tags, position characteristics, and bundled transactions in real time, trading assistance tools are revealing underlying market trends, helping traders mitigate risks and seek alpha in these second-by-second market fluctuations.

GMGN, for example, builds on its real-time on-chain transaction and token contract datasets by adding labels for smart money, KOL/VC addresses, developer wallets, insider trading, phishing addresses, and bundled transactions. It maps on-chain addresses to social media accounts and aligns capital flows, risk signals, and price action at one-second granularity, helping users make faster entry and risk-avoidance decisions.

▲ GMGN
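Two of the underwater signals mentioned above, holder concentration and bundled launch buys, reduce to simple computations once trades are decoded. The heuristics and thresholds below (top-N supply share, distinct buyers landing in the token's creation slot) are hypothetical illustrations, not GMGN's or any other platform's detection rules.

```python
from collections import defaultdict


def top_n_share(balances: dict[str, int], n: int = 10) -> float:
    """Fraction of supply held by the n largest holders (0.0 if there is no supply)."""
    total = sum(balances.values())
    top = sum(sorted(balances.values(), reverse=True)[:n])
    return top / total if total else 0.0


def bundled_launch_buyers(buys: list[tuple[int, str]], creation_slot: int,
                          min_wallets: int = 3) -> set[str]:
    """Distinct wallets that bought in the token's creation slot, if there are at least min_wallets.

    buys: (slot, wallet) pairs from decoded swaps. Many distinct wallets landing in
    the very slot the token was created is treated here as a hint of a coordinated
    (bundled) launch; the threshold is an assumption.
    """
    by_slot: dict[int, set[str]] = defaultdict(set)
    for slot, wallet in buys:
        by_slot[slot].add(wallet)
    same_slot = by_slot.get(creation_slot, set())
    return same_slot if len(same_slot) >= min_wallets else set()


balances = {"a": 500, "b": 300, "c": 100, "d": 100}
buys = [(100, "w1"), (100, "w2"), (100, "w3"), (101, "w4")]
print(top_n_share(balances, n=2))                              # 0.8
print(sorted(bundled_launch_buyers(buys, creation_slot=100)))  # ['w1', 'w2', 'w3']
```

Real systems would combine such flags with funding-source tracing and address labels, but even these two numbers already separate a dispersed holder base from a launch controlled by a handful of wallets.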

AI-driven actionable signals: From information to revenue

“In the next wave of AI, selling isn’t about tools, it’s about revenue.” — Sequoia Capital

This assessment also holds in crypto trading. Once data speed and dimensionality meet the bar, the next competitive goal is the ability to turn complex, multi-dimensional data directly into actionable trading signals at the decision stage. The criteria for evaluating data-driven decisions can be summarized in three points: speed, automation, and excess returns.

Fast enough: As AI capabilities keep advancing, the strengths of natural language processing and multimodal LLMs will increasingly come into play. These models can not only integrate and understand massive amounts of data but also build semantic links across it and automatically extract decision-ready conclusions. In the fast-moving, low-capacity environment of on-chain trading, each signal has a very short shelf life and limited capital capacity, so speed directly determines the return a signal can deliver.
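The link between latency and return can be illustrated with a simple decay model. Assume, hypothetically, that a signal's remaining edge halves every h seconds; the edge still capturable after a reaction latency t is then edge0 × 0.5^(t/h). The 8% edge and 30-second half-life below are made-up parameters, not measurements.

```python
def captured_edge(initial_edge: float, latency_s: float, half_life_s: float) -> float:
    """Edge remaining after reacting with the given latency, under an assumed exponential decay."""
    return initial_edge * 0.5 ** (latency_s / half_life_s)


# Hypothetical signal: 8% initial edge that halves every 30 seconds.
for latency in (1, 30, 60, 120):
    print(f"{latency:>4}s -> {captured_edge(0.08, latency, 30):.2%}")
```

Under these assumptions a one-minute delay leaves a quarter of the edge and two minutes leaves a sixteenth, before slippage and fees are even considered.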

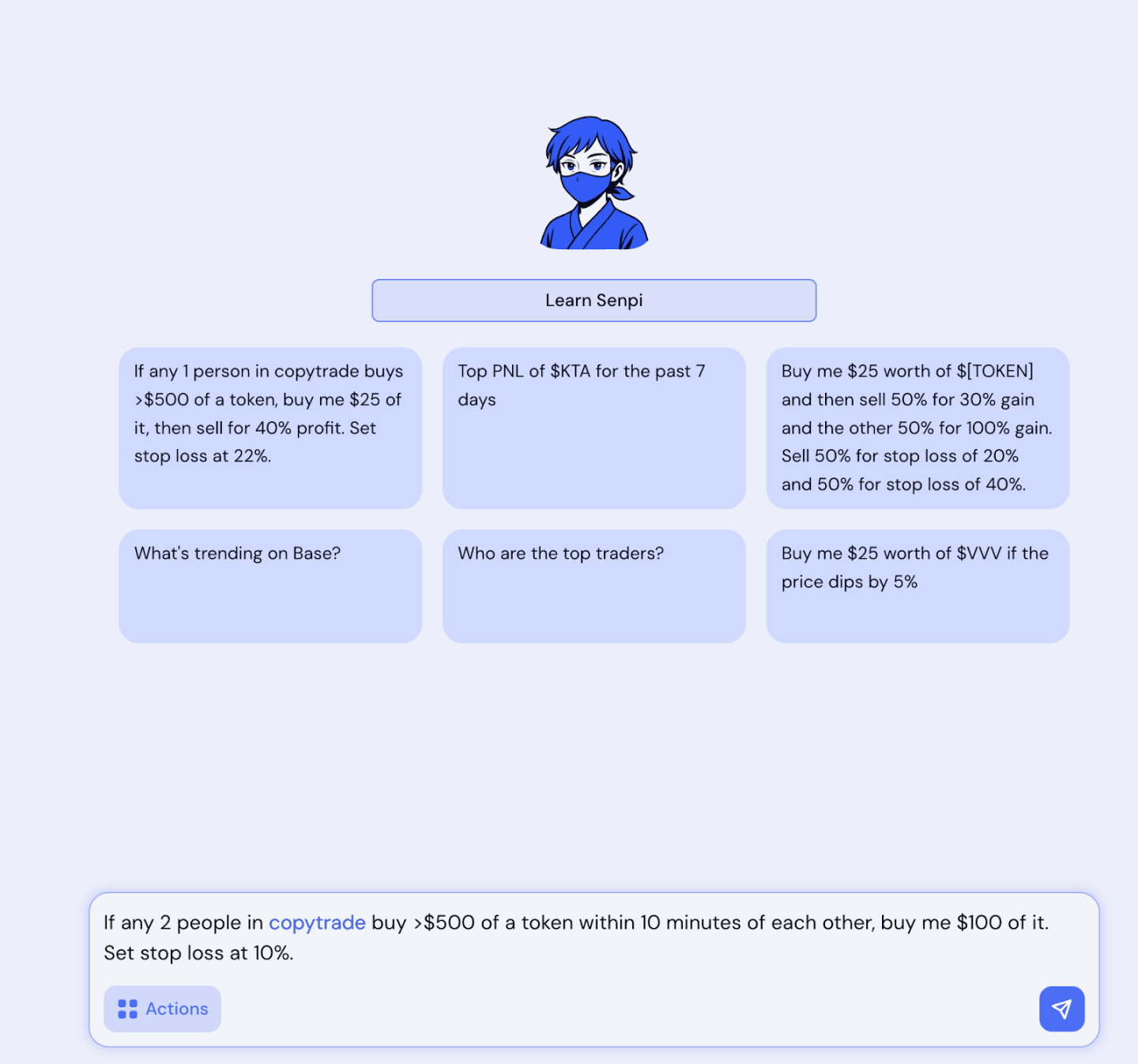

Automation: Humans can't watch the market 24/7, but AI can. On the Senpi platform, for example, a user can instruct an agent to place a conditional copy-trading buy order with stop-loss and take-profit settings. This requires the AI to poll or stream data in real time in the background and place orders automatically when it detects a signal.
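The background loop such an agent runs can be sketched as a small state machine fed by market observations: wait for the followed wallet to buy, enter, then exit on take-profit or stop-loss. The `CopyTradeOrder` class, its thresholds, and the tick format are hypothetical and do not reflect Senpi's API; real execution (signing, routing, slippage limits) is omitted.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CopyTradeOrder:
    leader: str                          # wallet being copied (hypothetical)
    take_profit: float                   # e.g. 0.5 means exit at +50%
    stop_loss: float                     # e.g. 0.2 means exit at -20%
    entry_price: Optional[float] = None
    exit_price: Optional[float] = None

    def on_tick(self, price: float, leader_bought: bool = False) -> Optional[str]:
        """Process one observation from the background monitor; return an action or None."""
        if self.exit_price is not None:
            return None  # order already closed
        if self.entry_price is None:
            if leader_bought:  # trigger condition: the followed wallet just bought
                self.entry_price = price
                return "BUY"
            return None
        change = price / self.entry_price - 1
        if change >= self.take_profit:
            self.exit_price = price
            return "SELL_TP"
        if change <= -self.stop_loss:
            self.exit_price = price
            return "SELL_SL"
        return None


order = CopyTradeOrder(leader="wallet_123", take_profit=0.5, stop_loss=0.2)
ticks = [(1.00, False), (1.10, True), (1.30, False), (1.70, False), (2.00, False)]
print([order.on_tick(p, bought) for p, bought in ticks])
# [None, 'BUY', None, 'SELL_TP', None]
```

Keeping the order as explicit state makes each tick cheap to evaluate, which matters when one agent watches many wallets at once.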

Return: Ultimately, a trading signal is only as good as its ability to generate excess returns consistently. AI needs not only a robust understanding of on-chain signals but also built-in risk control to maximize risk-adjusted returns in highly volatile conditions. This includes accounting for return factors unique to on-chain trading, such as slippage and execution latency.

This capability is reshaping the business logic of data platforms: from selling "data access" to selling "revenue-driving signals." The competitive focus of next-generation tools is no longer on data coverage, but on the actionability of signals—the ability to truly complete the last mile from "insight" to "execution."

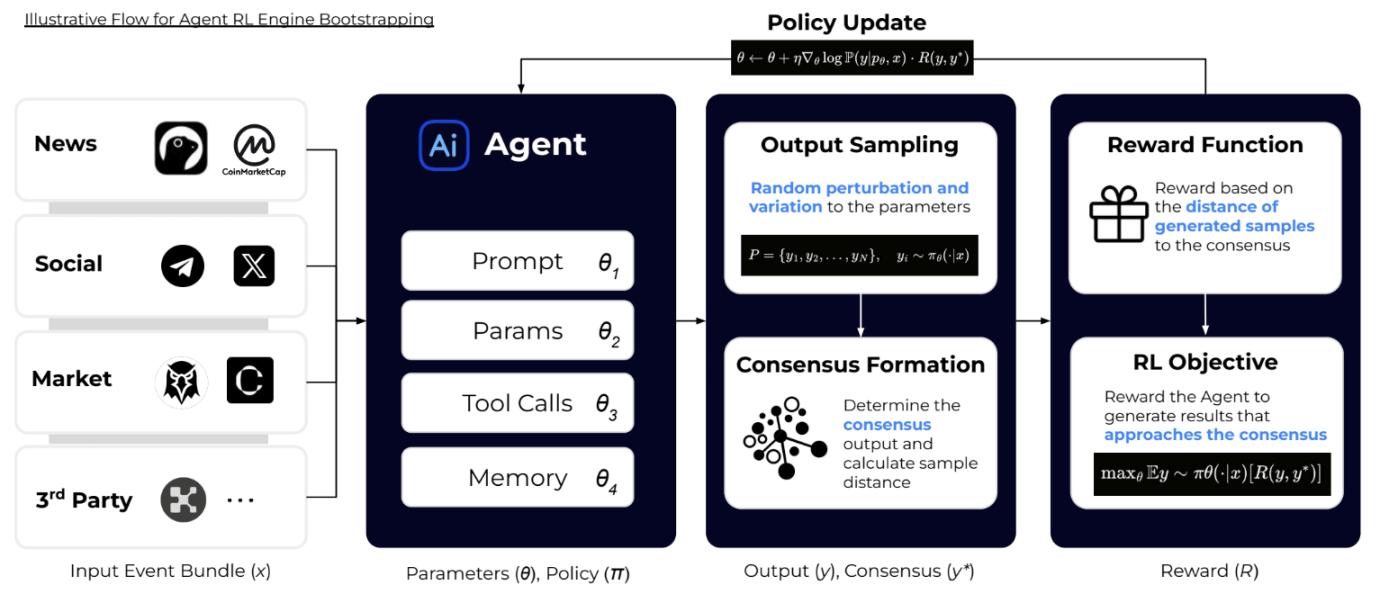

Some emerging projects have begun exploring this direction. Truenorth, an AI-driven discovery engine, for example, incorporates a "decision execution rate" into how it assesses information effectiveness. It uses reinforcement learning to continuously optimize its output, minimize noise, and help users build information feeds they can act on directly when placing orders.

▲ Truenorth
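Truenorth's actual method is not described here. As a stand-in for the general idea of learning from execution feedback, this sketch uses an epsilon-greedy multi-armed bandit, a basic reinforcement-learning technique, to learn which hypothetical signal sources users actually act on, with "executed or not" as the reward. The source names and simulated execution rates are made up.

```python
import random


class EpsilonGreedySources:
    """Epsilon-greedy bandit over signal sources; reward is 1 when a surfaced signal gets executed."""

    def __init__(self, sources: list[str], epsilon: float = 0.1, seed: int = 0) -> None:
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.shown = {s: 0 for s in sources}     # times each source's signal was surfaced
        self.executed = {s: 0 for s in sources}  # times the user acted on it

    def execution_rate(self, source: str) -> float:
        shown = self.shown[source]
        return self.executed[source] / shown if shown else 0.0

    def choose(self) -> str:
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.shown))    # explore a random source
        return max(self.shown, key=self.execution_rate)  # exploit best observed rate

    def update(self, source: str, executed: bool) -> None:
        self.shown[source] += 1
        self.executed[source] += int(executed)


# Simulated users who act on "smart_money" signals far more often (made-up rates).
true_rates = {"kol_calls": 0.05, "smart_money": 0.30, "news": 0.10}
bandit = EpsilonGreedySources(list(true_rates), epsilon=0.1, seed=42)
users = random.Random(7)
for _ in range(5_000):
    src = bandit.choose()
    bandit.update(src, users.random() < true_rates[src])
print(max(bandit.shown, key=bandit.shown.get))  # the source surfaced most often
```

The small exploration rate keeps testing weaker sources in case their usefulness changes, while most of the feed goes to whatever users actually execute.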

While AI has great potential for generating actionable signals, it faces multiple challenges.

Hallucinations: On-chain data is highly heterogeneous and noisy. LLMs are prone to hallucination or overfitting when parsing natural language queries or multimodal signals, which hurts signal yield and accuracy. For example, when several tokens share the same name, AI often fails to find the contract address that a Crypto Twitter (CT) ticker refers to. Similarly, many AI signal products misattribute general CT discussion of AI to the Sleepless AI token, whose ticker is AI.
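One mitigation for the ticker-collision problem is to resolve tickers deterministically against indexed token metadata instead of letting the model guess, and to abstain when the match is ambiguous. This is a hypothetical sketch: the `TokenInfo` fields, the liquidity-dominance rule, and the 10x margin are illustrative choices.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class TokenInfo:
    symbol: str
    contract: str
    liquidity_usd: float


def resolve_ticker(ticker: str, tokens: list[TokenInfo], dominance: float = 10.0) -> Optional[str]:
    """Map a $TICKER to one contract address, or None when it is unknown or ambiguous.

    The top candidate is accepted only if its liquidity is more than `dominance` times
    the runner-up's, so same-name copycats force an explicit "unresolved" instead of a guess.
    """
    symbol = ticker.lstrip("$").upper()
    candidates = sorted((t for t in tokens if t.symbol.upper() == symbol),
                        key=lambda t: t.liquidity_usd, reverse=True)
    if not candidates:
        return None
    if len(candidates) == 1 or candidates[0].liquidity_usd > dominance * candidates[1].liquidity_usd:
        return candidates[0].contract
    return None


tokens = [TokenInfo("MEME", "AddrA", 5_000_000), TokenInfo("MEME", "AddrB", 20_000),
          TokenInfo("CAT", "AddrC", 300_000), TokenInfo("cat", "AddrD", 250_000)]
print(resolve_ticker("$MEME", tokens), resolve_ticker("$CAT", tokens))  # AddrA None
```

Returning `None` lets the pipeline ask for a contract address or skip the signal, which is usually cheaper than trading the wrong token.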

Signal Lifespan: The trading environment changes constantly, and any delay erodes profits. AI must complete data extraction, reasoning, and execution in a very short time. Even the simplest copy-trading strategy can turn positive returns negative if it follows smart money too slowly.

Risk Control: In highly volatile scenarios, if the AI's transactions repeatedly fail to land on-chain or slippage is too high, it will not only fail to generate excess returns but may also lose the entire principal within minutes.

Therefore, striking a balance between speed and accuracy, and reducing error rates through mechanisms such as reinforcement learning, transfer learning, and simulation backtesting, is where AI implementations in this field will compete.
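Simulation backtesting is how the speed/accuracy trade-off can be measured before risking capital. This toy backtest replays a hypothetical price series and a followed wallet's entry/exit ticks, and shows how a fixed execution delay plus slippage changes a copy-trading strategy's result; the data, the delay-in-ticks model, and the flat 1% slippage are all assumptions.

```python
def copy_trade_return(prices: list[float], trades: list[tuple[int, int]],
                      delay_ticks: int, slippage: float) -> float:
    """Sum of simple per-trade returns when each (entry_tick, exit_tick) is copied delay_ticks late.

    Buys fill at price * (1 + slippage) and sells at price * (1 - slippage);
    delayed ticks past the end of the series are clamped to the last price.
    """
    last = len(prices) - 1
    total = 0.0
    for entry_tick, exit_tick in trades:
        buy = prices[min(entry_tick + delay_ticks, last)] * (1 + slippage)
        sell = prices[min(exit_tick + delay_ticks, last)] * (1 - slippage)
        total += sell / buy - 1
    return total


prices = [1.00, 1.20, 1.50, 1.60, 1.40, 1.10, 1.00]  # hypothetical price path
leader_trades = [(0, 3)]  # the followed wallet buys at tick 0 and sells at tick 3
for delay in (0, 2):
    print(f"delay {delay} ticks: {copy_trade_return(prices, leader_trades, delay, 0.01):+.1%}")
# delay 0 ticks: +56.8%
# delay 2 ticks: -28.1%
```

The same trade that earns the leader a large gain becomes a loss for a follower who is two ticks late, which is exactly the failure mode a backtest should surface before deployment.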

Up or Down? The Survival Decision of Data Dashboards

With AI now able to generate actionable signals directly and even assist with order placement, "light middle-layer applications" that rely solely on data aggregation face a critical crisis. Whether they are tools that assemble on-chain data into dashboards or trading bots that layer execution logic on top of aggregation, they inherently lack a sustainable moat. In the past, these tools thrived on convenience or user habit; users, for example, habitually check Dexscreener for a token's community takeover (CTO) status. However, as the same data becomes available in many places, execution engines grow increasingly commoditized, and AI can generate decision signals and trigger execution from that same data, their competitiveness is rapidly eroding.

In the future, efficient on-chain execution engines will continue to mature, further lowering transaction barriers. In this trend, data providers must make a choice: either focus on developing faster data acquisition and processing infrastructure, or expand their reach to the application layer to directly control user scenarios and consumer traffic. Those stuck in the middle, focusing solely on data aggregation and lightweight packaging, will continue to face challenges in their survival.

Moving downward means building an infrastructure moat. While developing trading products, Hubble AI realized that relying solely on Telegram bots would not create a lasting advantage. It therefore shifted its focus to upstream data processing, aiming to build a "Crypto Databricks." Having achieved extreme data processing speeds on Solana, Hubble AI is now evolving from data processing into an integrated data and research platform, securing an upstream position in the value chain and providing underlying support for the data needs of the US "finance on-chain" narrative and on-chain AI agent applications.

Moving upward means expanding into application scenarios and targeting end users. Space and Time initially focused on sub-second SQL indexing and oracle push, but recently began exploring consumer scenarios with the launch of Dream.Space, a "vibe coding" product on Ethereum. Users can create smart contracts or generate data-analysis dashboards using natural language. This shift not only increases the frequency of data service calls but also builds direct user engagement through the end-user experience.

This shows that those caught in the middle, relying solely on selling data interfaces, are losing their niche. The future B2B2C data landscape will be dominated by two types of players: infrastructure companies that control the underlying pipelines and become "on-chain water, electricity, and gas"; and platforms that closely align with user decision-making scenarios and transform data into application experiences.

Summary

Driven by the triple resonance of the meme craze, the explosion of high-performance public blockchains, and the commercialization of AI, the on-chain data sector is undergoing a structural shift. Advances in transaction speed, data dimensionality, and execution signals are making "visible charts" no longer the core competitive advantage. The true moat is shifting to "actionable signals that help users monetize" and the underlying data capabilities that underpin it all.

Over the next two to three years, the most attractive entrepreneurial opportunities in the crypto data space will emerge where mature Web 2 infrastructure meets Web 3's on-chain native execution models. Data on major assets such as BTC and ETH is highly standardized and resembles that of traditional financial futures products, so it is gradually being covered by traditional financial institutions and some Web 2 fintech platforms.

In contrast, data on meme coins and long-tail on-chain assets is highly non-standard and fragmented. From community narratives and on-chain sentiment to cross-chain liquidity, this information requires integration with on-chain address profiles, off-chain social signals, and even sub-second transaction execution. It is precisely this difference that creates a unique window of opportunity for crypto-native entrepreneurs in the processing and trading of long-tail assets and meme data.

We are optimistic about projects building for the long term in the following two areas:

Upstream infrastructure: On-chain data companies with streaming data pipelines, ultra-low-latency indexing, and unified cross-chain parsing frameworks whose processing power rivals that of Web 2 giants. These projects have the potential to become the Web 3 equivalent of Databricks/AWS. As users gradually migrate on-chain, transaction volume is expected to grow exponentially, and the B2B2C model offers long-term compounding value.

Downstream execution platforms: Applications that integrate multi-dimensional data, AI agents, and seamless trade execution. By turning fragmented on-chain and off-chain signals into directly executable trades, these products have the potential to become crypto-native Bloomberg Terminals. Their business model relies not on data access fees but on monetizing excess returns and signal delivery.

We believe these two types of players will dominate the next generation of crypto data and build sustainable competitive advantages.