0G: Redefining the Performance Peak and Technical Paradigm of Decentralized AI Operating Systems

- Core Viewpoint: 0G (Zero Gravity) aims to remove the bottleneck that keeps traditional blockchains from supporting large-scale AI applications. It does so through a disruptive modular architecture and an extremely high-performance Data Availability (DA) layer, providing infrastructure for decentralized artificial intelligence.

- Key Elements:

- Performance Breakthrough: Its Data Availability layer claims to achieve throughput as high as 50 Gbps, far surpassing traditional solutions like Ethereum, aiming to support real-time distribution of ultra-large-scale AI models.

- Architectural Innovation: It adopts a four-layer dAIOS architecture (Settlement, Storage, DA, Computation) that decouples AI workflows so each part can scale independently, and it integrates TEE and PoRA technologies to ensure privacy and verifiability.

- Storage Paradigm Revolution: 0G Storage separates the log layer from the key-value layer and combines this with Proof of Random Access (PoRA), aiming to move access performance from "cold archiving" to "hot performance" comparable to centralized cloud services.

- Competitive Positioning: The report emphasizes that 0G achieves "dimensional superiority" in throughput over existing mainstream DA solutions and builds differentiated advantages through programmable DA and vertically integrated storage.

- Token Economics: The $0G token is used to pay for resources, stake for security, and allocate task priority, aiming to capture the value of network usage and incentivize ecosystem development.

Original Author: Jtsong.eth (Ø,G) (X: @Jtsong2)

Recently, the crypto research think tank @MessariCrypto released a comprehensive in-depth research report on 0G. This article is a Chinese summary of the key points:

[Core Summary]

With the decentralized AI (DeAI) track expected to explode in 2026, 0G (Zero Gravity) sets out, with its disruptive technical architecture, to end Web3's long-standing inability to support large-scale AI models. Its core killer features can be summarized as follows:

Ultra-Fast Performance Engine (50 Gbps Throughput): Through logical decoupling and multi-level parallel sharding, 0G claims a performance leap of over 600,000 times compared with traditional DA layers such as Ethereum and Celestia, which would make it the only protocol in the world capable of supporting real-time distribution of ultra-large-scale models like DeepSeek V3.

dAIOS Modular Architecture: 0G pioneers an operating-system paradigm in which four layers (settlement, storage, data availability, and computation) work in concert. This removes the "storage deficit" and "computation lag" of traditional blockchains and closes the loop between AI data flow and execution flow.

AI-Native Trusted Environment (TEE + PoRA): Through deep integration of Trusted Execution Environment (TEE) and Proof of Random Access (PoRA), 0G not only addresses the "hot storage" needs of massive data but also constructs a trustless, privacy-protected AI inference and training environment, realizing a leap from "ledger" to "digital life foundation."

Chapter 1: Macro Background: The "Decoupling and Reconstruction" of AI and Web3

In the era of large AI models, data, algorithms, and computing power have become core factors of production. However, existing blockchain infrastructure (such as Ethereum and Solana) suffers from a severe "performance mismatch" when supporting AI applications.

1. Limitations of Traditional Blockchains: Bottlenecks in Throughput and Storage

Traditional Layer 1 blockchains were originally designed to handle financial ledger transactions, not to carry TB-level AI training datasets or high-frequency model inference tasks.

Storage Deficit: Data storage costs on chains like Ethereum are extremely high, and they lack native support for unstructured big data (like model weight files, video datasets).

Throughput Bottleneck: Ethereum's DA (Data Availability) bandwidth is only about 80 KB/s. Even after the EIP-4844 upgrade, it falls far short of the GB-level throughput required for real-time inference of large language models (LLMs).

Computation Lag: AI inference requires extremely low latency (millisecond level), while blockchain consensus mechanisms often operate on a second-level timescale, making "on-chain AI" nearly infeasible under current architectures.
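As a rough cross-check of the throughput figures above, the sketch below computes Ethereum's blob bandwidth from the original EIP-4844 parameters (131,072-byte blobs, a target of 3 and a maximum of 6 blobs per 12-second slot; later upgrades raised these counts) and the ratio between the claimed 50 Gbps and a baseline of "80". The function names are illustrative. The unit matters: read as 80 kilobytes per second, the gap is about 78,000x; read as 80 kilobits per second, it is about 625,000x, which is the reading consistent with the "over 600,000 times" figure in the summary.

```python
# Back-of-the-envelope DA bandwidth arithmetic (parameters as stated in the lead-in).

BLOB_BYTES = 4096 * 32   # EIP-4844 blob: 4096 field elements x 32 bytes = 131,072 bytes
SLOT_SECONDS = 12        # Ethereum slot time in seconds
ZERO_G_DA_BITS = 50e9    # the 50 Gbps figure claimed for 0G DA, in bits per second


def blob_bandwidth_bytes_per_s(blobs_per_block: int) -> float:
    """Blob data throughput in bytes/second for a given number of blobs per block."""
    return blobs_per_block * BLOB_BYTES / SLOT_SECONDS


def ratio(target_bits_per_s: float, baseline_bits_per_s: float) -> float:
    """How many times faster the target link is than the baseline link."""
    return target_bits_per_s / baseline_bits_per_s


if __name__ == "__main__":
    target = blob_bandwidth_bytes_per_s(3)   # original EIP-4844 target
    maximum = blob_bandwidth_bytes_per_s(6)  # original EIP-4844 maximum
    print(f"EIP-4844 target: {target / 1024:.0f} KiB/s, max: {maximum / 1024:.0f} KiB/s")
    # The "80" baseline from the text, read as kilobytes vs kilobits per second
    print(f"vs 80 KB/s:   {ratio(ZERO_G_DA_BITS, 80e3 * 8):,.0f}x")
    print(f"vs 80 kbit/s: {ratio(ZERO_G_DA_BITS, 80e3):,.0f}x")
```

Running it prints a target of 32 KiB/s and a maximum of 64 KiB/s for EIP-4844 blobs, followed by the two ratios.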

2. 0G's Core Mission: Breaking the "Data Wall"

The AI industry is currently monopolized by centralized giants, forming a de facto "Data Wall" that results in weak data privacy, unverifiable model outputs, and expensive rental costs. The emergence of 0G (Zero Gravity) marks a deep reconstruction of AI and Web3. Rather than treating blockchain merely as a ledger for storing hash values, it uses a modular architecture to decouple the "data flow, storage flow, and computation flow" that AI requires. 0G's core mission is to break open the centralized black box and, through decentralized technology, turn AI assets (data and models) into public goods that can be sovereignly owned.

Having examined this macro mismatch, we now look at how 0G systematically addresses these fragmented pain points through a rigorous four-layer architecture.

Chapter 2: Core Architecture: Four-Layer Synergy of the Modular 0G Stack

0G is not simply a single blockchain; it is defined as a dAIOS (decentralized AI Operating System). The core of this concept is that it gives AI developers a complete protocol stack similar to an operating system, achieving exponential performance leaps through deep synergy among its four layers.

1. Analysis of the dAIOS Four-Layer Architecture

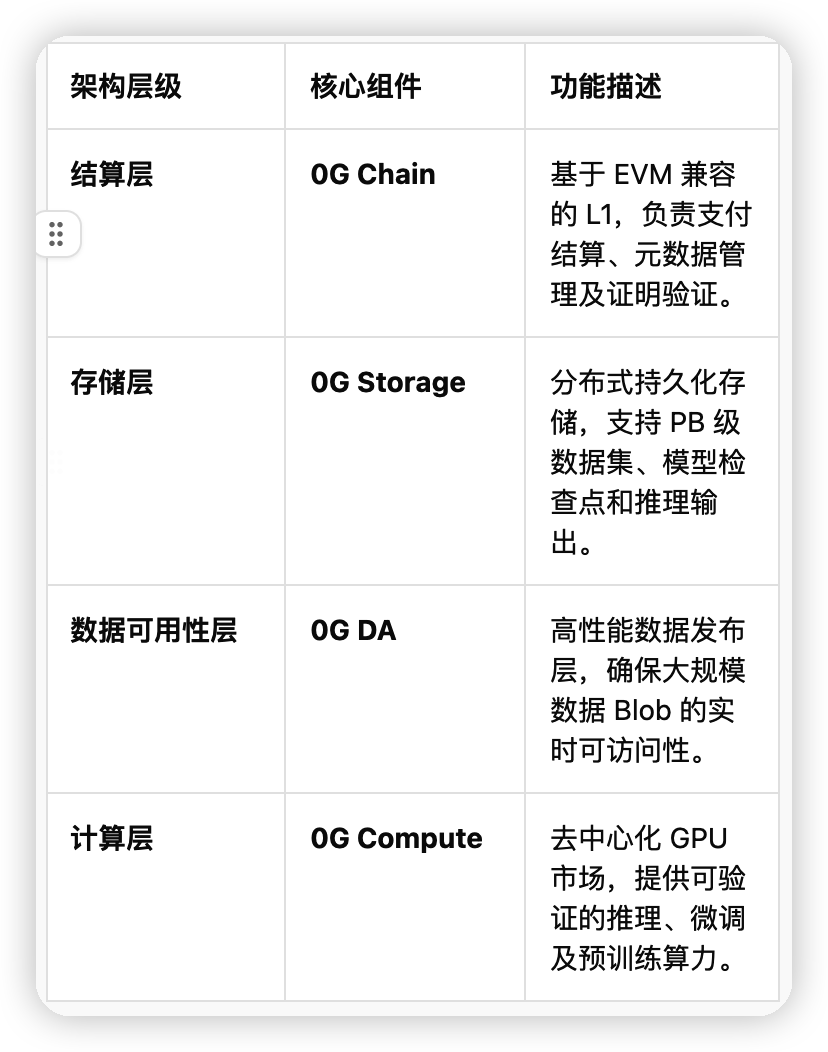

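The 0G Stack ensures each layer can scale independently by decoupling execution, consensus, storage, and computation across its four layers: Settlement (0G Chain), Storage (0G Storage), Data Availability (0G DA), and Computation.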

2. 0G Chain: The Performance Foundation Based on CometBFT

As the neural center of dAIOS, the 0G Chain employs a highly optimized CometBFT consensus mechanism. Its innovation lies in separating the execution layer from the consensus layer and significantly reducing block production wait times through pipelining and ABCI modular design.

Performance Metrics: According to the latest benchmarks, the 0G Chain can achieve a throughput of 11,000+ TPS under a single shard, with sub-second finality. This extreme performance ensures that on-chain settlement does not become a bottleneck during high-frequency interactions of large-scale AI Agents.
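Why pipelining helps can be illustrated with a steady-state toy model, assuming each block passes through two stages (consensus and execution) with fixed per-block times. The function names and timings below are hypothetical illustration values, not 0G Chain measurements.

```python
# Toy model: pipelining consensus and execution raises block throughput.
# All timings are hypothetical, not 0G Chain benchmarks.


def sequential_interval(consensus_ms: float, execution_ms: float) -> float:
    """Without pipelining, each block must be agreed on AND executed before the next starts."""
    return consensus_ms + execution_ms


def pipelined_interval(consensus_ms: float, execution_ms: float) -> float:
    """Block N+1 enters consensus while block N executes, so the slower stage sets the pace."""
    return max(consensus_ms, execution_ms)


def blocks_per_second(interval_ms: float) -> float:
    return 1000.0 / interval_ms


if __name__ == "__main__":
    consensus, execution = 400.0, 300.0  # hypothetical per-block stage times in ms
    seq = blocks_per_second(sequential_interval(consensus, execution))
    pipe = blocks_per_second(pipelined_interval(consensus, execution))
    print(f"sequential: {seq:.2f} blocks/s, pipelined: {pipe:.2f} blocks/s")
```

With these numbers the sequential model yields about 1.43 blocks per second and the pipelined one 2.50. Pipelining raises throughput, but it does not shorten the latency of any individual block.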

3. The Decoupled Synergy of 0G Storage and 0G DA

0G's technical moat lies in its "dual-channel" design, separating data publishing from persistent storage:

0G DA: Focuses on the rapid broadcast and sampling verification of Blob data. It supports a maximum of about 32.5 MB per Blob. Through erasure coding technology, data availability is ensured even if some nodes go offline.

0G Storage: Handles immutable data through the "Log Layer" and dynamic state through the "Key-Value (KV) Layer."
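0G DA relies on erasure coding, but the text does not specify which code. The sketch below uses the generic textbook Reed-Solomon construction over a prime field (an assumption, chosen for clarity): k data symbols are treated as values of a polynomial of degree below k, extended to n evaluation points, and any k surviving points reconstruct the original. The field modulus and function names are illustrative; real encoders work on byte-oriented fields and far larger chunks, such as blobs of the roughly 32.5 MB size mentioned above.

```python
# Minimal Reed-Solomon-style erasure code over a prime field (illustrative only).
# k data symbols become n coded symbols; ANY k of them recover the data.
# This is the generic construction, not 0G's actual encoder.

P = 2**61 - 1  # Mersenne prime used as the field modulus (assumption)


def _lagrange_eval(points: list[tuple[int, int]], x: int) -> int:
    """Evaluate at x the unique polynomial of degree < len(points) through `points`, mod P."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        # Division in the field: multiply by the modular inverse (Fermat's little theorem)
        total = (total + yi * num * pow(den, P - 2, P)) % P
    return total


def encode(data: list[int], n: int) -> list[tuple[int, int]]:
    """Systematic encoding: data[i] is the value at x=i; parity shares are x=k..n-1."""
    k = len(data)
    base = list(enumerate(data))
    return base + [(x, _lagrange_eval(base, x)) for x in range(k, n)]


def decode(shares: list[tuple[int, int]], k: int) -> list[int]:
    """Recover the k original symbols from any k surviving (x, y) shares."""
    if len(shares) < k:
        raise ValueError("not enough shares to reconstruct")
    pts = shares[:k]
    return [_lagrange_eval(pts, x) for x in range(k)]


if __name__ == "__main__":
    data = [11, 22, 33, 44]
    shares = encode(data, n=8)  # 4 data shares + 4 parity shares
    survivors = [shares[1], shares[4], shares[6], shares[7]]  # half the shares lost
    print(decode(survivors, k=4))
```

Here half of the eight shares can disappear and the four data symbols are still recovered, which is the property that keeps a blob available when some nodes go offline.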

This four-layer synergistic architecture provides fertile ground for the high-performance DA layer. Next, we examine the most impressive part of 0G's core engine: its high-performance DA technology.

Chapter 3: Technical Deep Dive into the High-Performance DA Layer (0G DA)

In the 2026 decentralized AI ecosystem, Data Availability (DA) is not just about "publishing proofs"; it must serve as the real-time pipeline for PB-level AI weight files and training datasets.

3.1 Logical Decoupling and Physical Synergy: The Generational Evolution of the "Dual-Channel" Architecture

The core superiority of 0G DA stems from its unique "dual-channel" architecture: it completely decouples Data Publishing from Data Storage logically but achieves efficient synergy at the physical node level.

Logical Decoupling: Unlike traditional DA layers that conflate data publishing with long-term storage, 0G DA is only responsible for verifying the accessibility of data blocks in the short term, delegating the persistence of massive data to 0G Storage.

Physical Synergy: Storage nodes use Proof of Random Access (PoRA) to ensure data truly exists, while DA nodes ensure transparency through a shard-based consensus network, achieving "publish-and-verify, storage-verification integration."
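The split between short-term publication and long-term persistence can be sketched as two cooperating components. Assumptions, all hypothetical: the DA side keeps a blob only for a fixed retention window but keeps its commitment permanently, the storage side persists the blob, and a SHA-256 hash stands in for the commitment scheme (real DA layers typically use richer commitments that also support sampling).

```python
# Sketch of "logical decoupling": DA keeps blobs briefly, storage keeps them for good,
# and the commitment recorded at publish time lets anyone check the stored copy later.
# Class names, the retention window and SHA-256 as commitment are assumptions.

import hashlib


class DALayer:
    def __init__(self, retention: int):
        self.retention = retention                     # ticks a blob stays on DA nodes
        self.blobs: dict[str, tuple[bytes, int]] = {}  # commitment -> (blob, publish time)
        self.commitments: set[str] = set()             # commitments are kept permanently

    def publish(self, blob: bytes, now: int) -> str:
        c = hashlib.sha256(blob).hexdigest()
        self.blobs[c] = (blob, now)
        self.commitments.add(c)
        return c

    def prune(self, now: int) -> None:
        """Drop blobs whose retention window has passed; their commitments remain."""
        self.blobs = {c: (b, t) for c, (b, t) in self.blobs.items() if now - t < self.retention}


class StorageLayer:
    def __init__(self):
        self.objects: dict[str, bytes] = {}

    def persist(self, commitment: str, blob: bytes) -> None:
        self.objects[commitment] = blob

    def verified_get(self, commitment: str, da: DALayer) -> bytes:
        """Return a persisted blob only if it matches a commitment published on DA."""
        blob = self.objects[commitment]
        if commitment not in da.commitments or hashlib.sha256(blob).hexdigest() != commitment:
            raise ValueError("persisted data does not match the published commitment")
        return blob


if __name__ == "__main__":
    da, storage = DALayer(retention=10), StorageLayer()
    c = da.publish(b"model-shard-0", now=0)
    storage.persist(c, da.blobs[c][0])
    da.prune(now=20)                      # blob has left the DA layer...
    print(storage.verified_get(c, da))    # ...but is still retrievable and verifiable
```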

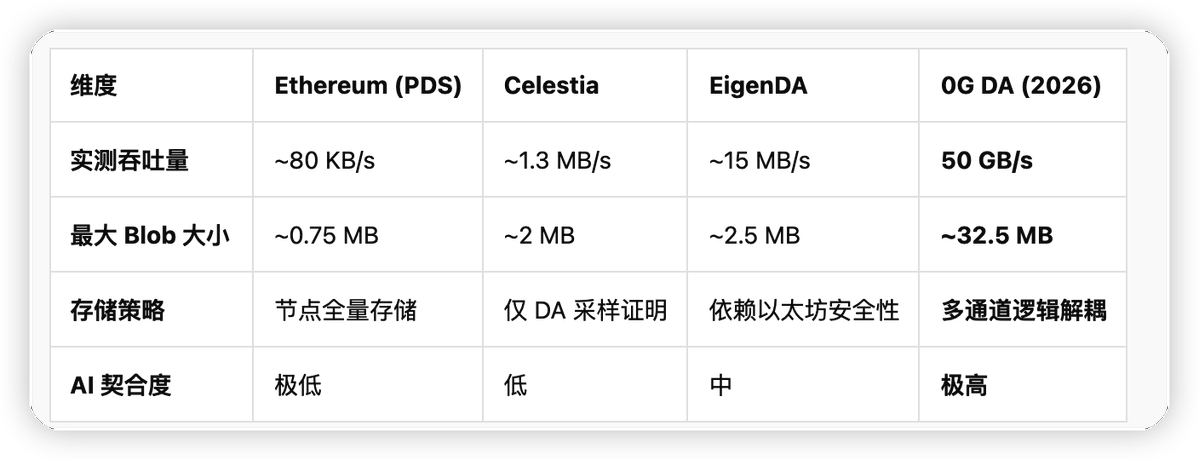

3.2 Performance Benchmark: Orders-of-Magnitude Leading Data Comparison

The breakthrough in throughput by 0G DA directly defines the performance boundaries of a decentralized AI operating system. The table below shows a technical parameter comparison between 0G and mainstream DA solutions:

3.3 Technical Foundation for Real-Time Availability: Erasure Coding and Multi-Consensus Sharding

To support massive AI data, 0G introduces Erasure Coding and Multi-Consensus Sharding:

Erasure Coding Optimization: By adding redundant encoded fragments, the network can recover the complete data from a minimal subset of fragments, and can verify availability by sampling only a few of them, even if a large number of nodes go offline.

Multi-Consensus Sharding: 0G abandons the linear logic of a single chain handling all DA. By horizontally scaling the consensus network, total throughput increases linearly with the number of nodes. In 2026 tests, it supported tens of thousands of Blob verification requests per second, ensuring the continuity of AI training flows.
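The phrase "sampling minimal data fragments" can be quantified with standard data availability sampling (DAS) arithmetic. This is a sketch under stated assumptions, not 0G's published parameters: a blob is extended with a rate-1/2 erasure code, so an attacker must hide more than half of the chunks to make it unrecoverable, and a verifier samples chunk indices independently and uniformly at random.

```python
# Data availability sampling arithmetic (illustrative assumptions, see lead-in):
# with a rate-1/2 code, blocking recovery requires hiding more than half the chunks,
# and each uniformly random sample then has at least a 50% chance of exposing it.

import math


def detection_probability(hidden_fraction: float, samples: int) -> float:
    """Chance that at least one of `samples` random queries hits a hidden chunk."""
    return 1.0 - (1.0 - hidden_fraction) ** samples


def samples_needed(hidden_fraction: float, confidence: float) -> int:
    """Smallest number of samples whose detection probability reaches `confidence`."""
    return math.ceil(math.log(1.0 - confidence) / math.log(1.0 - hidden_fraction))


if __name__ == "__main__":
    for s in (10, 20, 30):
        print(s, f"{detection_probability(0.5, s):.10f}")
    print("samples for 99.9999% confidence:", samples_needed(0.5, 0.999999))
```

Under these assumptions, 20 random samples already push the detection probability above 99.9999%, independent of blob size, which is why light verification stays cheap as blobs grow.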

Having a high-speed data channel alone is not enough; AI also needs a low-latency "brain storage" and a secure, private "execution space," which leads to the AI-specific optimization layer.

Chapter 4: AI-Specific Optimization and Secure Computing Enhancement

4.1 Solving the Latency Anxiety of AI Agents

For AI Agents executing strategies in real time, data read latency is a matter of life or death.

Hot/Cold Data Separation Architecture: 0G Storage is internally divided into an immutable Log Layer and a mutable state KV Layer. Hot data is stored in the high-performance KV layer, supporting sub-second random access.

High-Performance Indexing Protocol: Utilizing Distributed Hash Tables (DHT) and dedicated metadata indexing nodes, AI Agents can locate required model parameters in milliseconds.
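How a DHT lets an agent find data without a central directory can be sketched with the Kademlia XOR metric: node IDs and keys share one hash space, and a key is served by the nodes whose IDs are closest to it. Whether 0G's indexing uses exactly this metric is an assumption, and the node names and replica count are hypothetical. Real Kademlia reaches this answer through iterative lookups over routing tables in O(log n) hops, whereas this sketch computes it directly from a full node list.

```python
# Kademlia-style responsibility rule: the nodes with the smallest XOR distance
# to a key's hash store (and answer queries for) that key. Illustrative only.

import hashlib


def node_id(name: str) -> int:
    """Map a node name or data key into a shared 256-bit ID space."""
    return int.from_bytes(hashlib.sha256(name.encode()).digest(), "big")


def closest_nodes(key: str, nodes: list[str], replicas: int = 3) -> list[str]:
    """Return the `replicas` nodes responsible for `key` under the XOR metric."""
    k = node_id(key)
    return sorted(nodes, key=lambda n: node_id(n) ^ k)[:replicas]


if __name__ == "__main__":
    nodes = [f"node-{i}" for i in range(50)]
    print(closest_nodes("llama-layer-17/weights", nodes))
```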

4.2 TEE Enhancement: The Final Piece for Building Trustless AI

0G fully introduced TEE (Trusted Execution Environment) security upgrades in 2026.

Privacy-Preserving Computation: Model weights and user inputs are processed within the "enclave" inside the TEE. Even node operators cannot peek into the computation process.

Result Verifiability: The remote attestation generated by the TEE is submitted to the 0G Chain along with the computation results, ensuring the results are generated by a specific, unaltered model.
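The binding described above (result tied to "a specific, unaltered model") can be sketched as follows. This is a stand-in, not a real TEE API: an attestation report concatenates hashes of the model weights, input, and output, and is authenticated with a key. Real TEEs sign reports with vendor-rooted asymmetric keys; the HMAC with a hard-coded key and all function names here are hypothetical placeholders used only to show what the verifier checks.

```python
# Stand-in for TEE remote attestation: the enclave authenticates a report binding
# the model measurement (hash of weights) to the input and the output.
# HMAC with a shared key is a placeholder for a hardware-rooted signature.

import hashlib
import hmac

ATTESTATION_KEY = b"hypothetical-enclave-key"  # placeholder, not a real TEE key


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def enclave_run(weights: bytes, user_input: bytes) -> tuple[bytes, bytes]:
    """Pretend inference inside the enclave; returns (output, attestation)."""
    output = h(weights + user_input)[:8]              # dummy "inference" result
    report = h(weights) + h(user_input) + h(output)   # 3 x 32-byte hashes
    tag = hmac.new(ATTESTATION_KEY, report, hashlib.sha256).digest()
    return output, report + tag


def verify(expected_model_hash: bytes, user_input: bytes, output: bytes, attestation: bytes) -> bool:
    """Accept only if the report is authentic AND names the expected model, input and output."""
    report, tag = attestation[:96], attestation[96:]
    expected_tag = hmac.new(ATTESTATION_KEY, report, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected_tag):
        return False
    return report == expected_model_hash + h(user_input) + h(output)


if __name__ == "__main__":
    weights = b"weights-v1"
    out, att = enclave_run(weights, b"prompt")
    print(verify(h(weights), b"prompt", out, att))  # genuine result from the expected model
```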

4.3 Vision Realized: The Leap from Storage to Operating System

AI Agents are no longer isolated scripts but digital life entities with sovereign identity (iNFT standard), protected memory (0G Storage) and verifiable logic (TEE Compute). This closed loop eliminates the monopoly of centralized cloud providers over AI, marking the entry of decentralized AI into the era of large-scale commercial use.

However, to carry these "digital lives," the underlying distributed storage must undergo a performance revolution from "cold" to "hot."

Chapter 5: Innovation in the Distributed Storage Layer—A Paradigm Revolution from "Cold Archive" to "Hot Performance"

The core innovation of 0G Storage lies in breaking the performance constraints of traditional distributed storage.

1. Dual-Layer Architecture: Decoupling of Log Layer and KV Layer

Log Layer (Streaming Data Processing): Designed for unstructured data (like training logs, datasets). Through an append-only mode, it ensures millisecond-level synchronization of massive data across distributed nodes.

KV Layer (Indexing and State Management): For structured data, it provides high-performance indexing support. When retrieving model parameter weights, it reduces response latency to the millisecond level.
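The division of labor between the two layers resembles a classic log-structured design, sketched below with hypothetical names (not 0G's storage API): immutable records are appended to a log, and a key-value index maps each key to the offset of its latest record, so a read is one index lookup plus one positioned read instead of a scan of the log.

```python
# Log layer + KV layer sketch: an append-only log for immutable records and
# a mutable index from key to the offset of the latest record. Illustrative only.


class LogKVStore:
    def __init__(self):
        self.log: list[tuple[str, bytes]] = []  # append-only: entries are never modified
        self.index: dict[str, int] = {}         # mutable state: key -> log offset

    def put(self, key: str, value: bytes) -> int:
        offset = len(self.log)
        self.log.append((key, value))
        self.index[key] = offset                # a newer write shadows older ones
        return offset

    def get(self, key: str) -> bytes:
        return self.log[self.index[key]][1]

    def history(self, key: str) -> list[bytes]:
        """Older versions remain in the log and can still be audited."""
        return [v for k, v in self.log if k == key]


if __name__ == "__main__":
    store = LogKVStore()
    store.put("ckpt/latest-step", b"100")
    store.put("ckpt/latest-step", b"200")
    print(store.get("ckpt/latest-step"), store.history("ckpt/latest-step"))
```

The design choice mirrors the text: the log gives cheap, synchronizable appends for datasets and logs, while the index serves the low-latency lookups that model parameters need.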

2. PoRA (Proof of Random Access): Sybil Attack Resistance and Verification System

To ensure storage authenticity, 0G introduces PoRA (Proof of Random Access).

Sybil Attack Resistance: PoRA directly ties mining difficulty to the actual occupied physical storage space.

Verifiability: Allows the network to randomly "spot-check" nodes, ensuring data is not only stored but also in a "ready-to-use" hot-activated state.
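The "spot-check" idea can be sketched with a Merkle tree: the verifier keeps only a root hash, picks a random chunk index, and the node must return that chunk plus its authentication path. This is a generic Merkle-proof illustration under assumed names; 0G's actual PoRA mining puzzle and its difficulty rules are not reproduced here.

```python
# Random-access spot check in the spirit of PoRA: a node proves it holds a
# randomly chosen chunk by returning it with a Merkle authentication path.

import hashlib
import secrets
from typing import Callable


def _h(b: bytes) -> bytes:
    return hashlib.sha256(b).digest()


def build_levels(chunks: list[bytes]) -> list[list[bytes]]:
    """All tree levels, leaves first; odd levels pair their last node with itself."""
    level = [_h(c) for c in chunks]
    levels = [level]
    while len(level) > 1:
        if len(level) % 2:
            level = level + [level[-1]]
        level = [_h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        levels.append(level)
    return levels


def prove(levels: list[list[bytes]], index: int) -> list[bytes]:
    """Sibling hashes from leaf to root for the chunk at `index`."""
    path = []
    for level in levels[:-1]:
        sibling = index ^ 1
        path.append(level[sibling] if sibling < len(level) else level[index])
        index //= 2
    return path


def verify_chunk(root: bytes, chunk: bytes, index: int, path: list[bytes]) -> bool:
    node = _h(chunk)
    for sibling in path:
        node = _h(node + sibling) if index % 2 == 0 else _h(sibling + node)
        index //= 2
    return node == root


def spot_check(root: bytes, n_chunks: int, respond: Callable[[int], tuple[bytes, list[bytes]]]) -> bool:
    """Challenge a random index; `respond` is the storage node's answer function."""
    i = secrets.randbelow(n_chunks)
    chunk, path = respond(i)
    return verify_chunk(root, chunk, i, path)


if __name__ == "__main__":
    chunks = [f"chunk-{i}".encode() for i in range(7)]
    levels = build_levels(chunks)
    root = levels[-1][0]
    print(spot_check(root, len(chunks), lambda i: (chunks[i], prove(levels, i))))
```

Because the challenged index is unpredictable, a node that discarded part of the data fails a proportional share of challenges, which is what links rewards to storage actually held.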

3. Performance Leap: Engineering Implementation of Second-Level Retrieval

0G achieves a retrieval leap from "minute-level" to "second-level" through the combination of erasure coding and high-bandwidth DA channels. This "hot storage" capability rivals the performance of centralized cloud services.

This leap in storage performance provides a solid decentralized foundation for supporting models with tens of billions of parameters.

Chapter 6: AI-Native Support—The Decentralized Foundation for Models with Tens of Billions of Parameters

1. AI Alignment Nodes: Guardians of the AI Workflow

AI Alignment Nodes are responsible for monitoring collaboration between storage nodes and service nodes. By verifying the authenticity of training tasks, they ensure AI model operation does not deviate from preset logic.

2. Supporting Large-Scale Parallel I/O

Processing models with tens or hundreds of billions of parameters (like Llama 3 or DeepSeek-V3) requires extremely high parallel I/O. Through data sharding and multi-consensus sharding technology, 0G allows thousands of nodes to simultaneously handle large-scale dataset reads.
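Parallel reads over sharded data can be sketched as follows, with hypothetical names throughout: each dataset chunk is assigned to a shard by hashing its ID, and a client fans its reads out to all shards concurrently. `fetch_from_shard` is a placeholder for a network call, and the thread pool merely stands in for thousands of independent nodes.

```python
# Sketch of parallel I/O over sharded storage: hash-based chunk placement
# plus concurrent fan-out reads. Shard count and fetch function are hypothetical.

import hashlib
from concurrent.futures import ThreadPoolExecutor


def shard_of(chunk_id: str, num_shards: int) -> int:
    return int.from_bytes(hashlib.sha256(chunk_id.encode()).digest()[:8], "big") % num_shards


def plan_reads(chunk_ids: list[str], num_shards: int) -> dict[int, list[str]]:
    """Group requested chunks by the shard that holds them."""
    plan: dict[int, list[str]] = {}
    for cid in chunk_ids:
        plan.setdefault(shard_of(cid, num_shards), []).append(cid)
    return plan


def fetch_from_shard(shard: int, chunk_ids: list[str]) -> dict[str, bytes]:
    # Placeholder for a network read from one shard's storage nodes.
    return {cid: f"<data of {cid} from shard {shard}>".encode() for cid in chunk_ids}


def parallel_read(chunk_ids: list[str], num_shards: int) -> dict[str, bytes]:
    plan = plan_reads(chunk_ids, num_shards)
    result: dict[str, bytes] = {}
    with ThreadPoolExecutor(max_workers=len(plan) or 1) as pool:
        for part in pool.map(lambda item: fetch_from_shard(*item), plan.items()):
            result.update(part)
    return result


if __name__ == "__main__":
    ids = [f"dataset/part-{i:04d}" for i in range(100)]
    data = parallel_read(ids, num_shards=8)
    print(len(data), "chunks read")
```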

3. Synergy Between Checkpoints and High-Bandwidth DA

Fault Recovery: 0G can rapidly persist checkpoint files of hundreds of GB in size.

Seamless Recovery: Thanks to the 50 Gbps throughput ceiling, new nodes can rapidly synchronize the latest checkpoint snapshot from the DA layer, solving the pain point that decentralized large-model training is difficult to sustain over the long term.
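To connect "hundreds of GB" with "50 Gbps", the arithmetic below converts checkpoint size to transfer time. It assumes decimal gigabytes, a single syncing node that receives the full link rate, and an optional efficiency factor for protocol overhead; the function name is illustrative.

```python
# Checkpoint transfer time: size in gigabytes (10^9 bytes), link speed in gigabits/s.


def transfer_seconds(size_gb: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Seconds to move `size_gb` GB over a `link_gbps` Gbps link at the given efficiency."""
    return size_gb * 8 / (link_gbps * efficiency)


if __name__ == "__main__":
    for size in (100, 500):
        print(f"{size} GB at 50 Gbps: {transfer_seconds(size, 50):.0f} s")
    print(f"100 GB at 1 Gbps: {transfer_seconds(100, 1):.0f} s")
```

At full line rate, a 100 GB checkpoint takes 16 seconds and a 500 GB one takes 80 seconds; at 1 Gbps, 100 GB takes about 13 minutes. In practice, the syncing node's own bandwidth is therefore likely to be the limiting factor.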

Beyond technical details, we must zoom out to the entire industry to see how 0G measures up against the existing market.

Chapter 7: Competitive Landscape—0G's Dimensional Supremacy and Differentiated Advantages

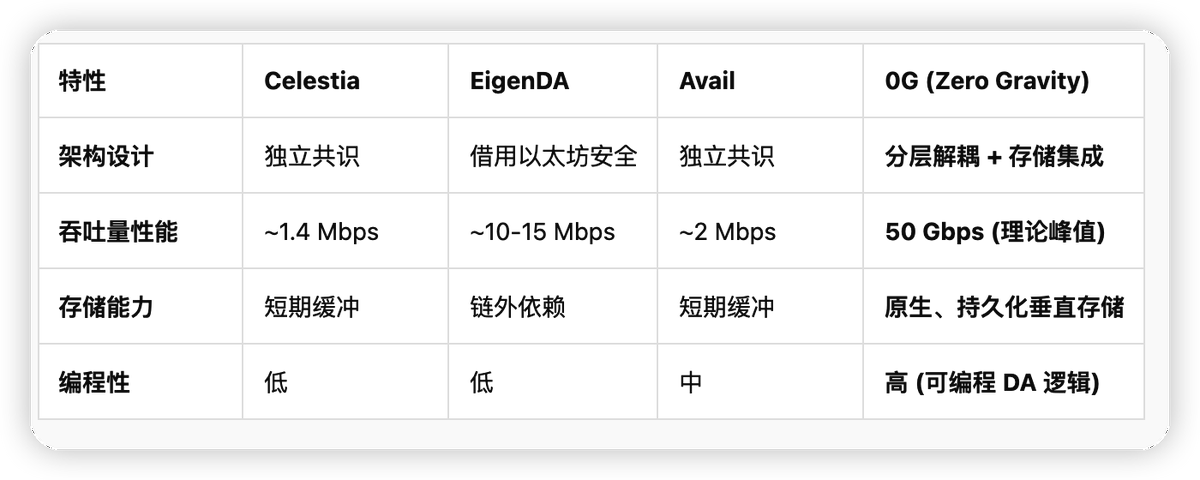

7.1 Horizontal Evaluation of Mainstream DA Solutions

7.2 Core Competitiveness: Programmable DA and Vertically Integrated Storage

Eliminating Transmission Bottlenecks: Native integration of the storage layer allows AI nodes to directly retrieve historical data from the DA layer.

50 Gbps Throughput Leap: Several orders of magnitude faster than competitors, supporting real-time inference.

Programmability (Programmable DA): Allows developers to define custom data allocation strategies and dynamically adjust data redundancy.
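What "dynamically adjust data redundancy" could look like is sketched below as a hypothetical policy mapping data classes to erasure-coding parameters (k chunks needed to reconstruct, n chunks stored). Nothing here is a real 0G API. The sketch only makes visible the trade-off such a policy controls: storage overhead n/k against the n - k chunk losses that can be tolerated.

```python
# Hypothetical "programmable DA" redundancy policy. Not a 0G API.

from dataclasses import dataclass


@dataclass(frozen=True)
class Redundancy:
    k: int  # chunks needed to reconstruct the data
    n: int  # chunks actually stored across nodes

    @property
    def overhead(self) -> float:
        return self.n / self.k          # storage blow-up factor

    @property
    def tolerated_losses(self) -> int:
        return self.n - self.k          # chunks that may vanish without data loss


def policy(data_class: str) -> Redundancy:
    """Example rule: critical checkpoints get more parity than scratch logs."""
    table = {
        "checkpoint": Redundancy(k=8, n=24),
        "dataset": Redundancy(k=16, n=32),
        "scratch-log": Redundancy(k=16, n=20),
    }
    return table.get(data_class, Redundancy(k=16, n=32))


if __name__ == "__main__":
    for cls in ("checkpoint", "dataset", "scratch-log"):
        r = policy(cls)
        print(cls, f"overhead={r.overhead:.2f}x", f"tolerates {r.tolerated_losses} lost chunks")
```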

This dimensional supremacy foreshadows the rise of a vast economic system, and tokenomics is the fuel driving this system.

Chapter 8: 2026 Ecosystem Outlook and Tokenomics

With the stable operation of the mainnet in 2025, 2026 will be a critical juncture for the explosive growth of the 0G ecosystem.

8.1 $0G Token: Multi-Dimensional Value Capture Paths

Resource Payment (Work Token): The sole medium for accessing high-performance DA and storage space.

Security Staking: Validators and storage providers must stake $0G, receiving a share of network revenue in return.

Priority Allocation: During busy periods, token holdings determine the priority of computational tasks.
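The text does not specify how holdings translate into priority. The sketch below assumes the simplest hypothetical rule: tasks are served in descending order of the submitter's $0G holdings, with ties broken first-come, first-served. Real designs might weight by stake, fees, or a mix; the class and method names are illustrative.

```python
# Hypothetical priority rule: larger holdings first, FIFO among equal holdings.

import heapq
import itertools


class PriorityScheduler:
    def __init__(self):
        self._heap: list[tuple[float, int, str]] = []
        self._seq = itertools.count()  # tie-breaker: earlier submissions first

    def submit(self, task: str, holdings: float) -> None:
        # heapq is a min-heap, so negate holdings to pop the largest first
        heapq.heappush(self._heap, (-holdings, next(self._seq), task))

    def next_task(self) -> str:
        return heapq.heappop(self._heap)[2]


if __name__ == "__main__":
    s = PriorityScheduler()
    s.submit("inference-a", holdings=10)
    s.submit("training-b", holdings=500)
    s.submit("inference-c", holdings=10)
    print([s.next_task() for _ in range(3)])
```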

8.2 2026 Ecosystem Incentives and Challenges

0G plans to launch the "Gravity Foundation 2026" special fund, focusing on DeAI inference frameworks and data crowdfunding platforms. Despite its technological lead, 0G still faces challenges such as high node hardware requirements, an ecosystem cold start, and compliance.