Vitalik's new article: When technology controls everything, openness and verifiability become necessities

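- Core thesis: the technology stack must be open and verifiable to safeguard autonomy.

- Key points:

  - Open-source technology can prevent data monopolies and the concentration of power.

  - Verifiable hardware can defend against backdoors and security vulnerabilities.

  - Examples show that openness alone is not enough; it must be combined with verifiability.

- Market impact: encourages the development of decentralized technology and strengthens user sovereignty.

- Time horizon: long-term impact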

Original author: Vitalik

Original translation: TechFlow

Introduction

In this new article, published on September 24, 2025, Vitalik Buterin explores a critical question for all of our futures: how do we maintain our autonomy as technology takes over our lives?

The article begins by pointing out the biggest trend of this century - "The Internet has become real life."

From instant messaging to digital finance, from health tracking to government services, and even to future brain-computer interfaces, digital technology is reshaping every aspect of human existence. Vitalik believes this trend is irreversible, because in the global competition, civilizations that reject these technologies will lose competitiveness and sovereignty.

However, the widespread adoption of technology has also profoundly shifted power structures. The biggest beneficiaries of a technological wave are not its consumers but its producers. As we place more and more trust in technology, a breach of that trust (e.g., through backdoors or security vulnerabilities) can be catastrophic. More importantly, even the mere possibility of such a breach can force society back onto fundamentally exclusionary models of trust, built around the question, "Was this made by someone I trust?"

Vitalik's solution is that the entire technology stack (software, hardware, and even biotechnology) needs two interrelated properties: genuine openness (open source, free licensing) and verifiability (ideally, directly verifiable by end users).

The article uses specific examples to demonstrate how these two principles support each other in practice, and why neither is sufficient on its own. The following is a translation of the full article.

Special thanks to Ahmed Ghappour, bunnie, Daniel Genkin, Graham Liu, Michael Gao, mlsudo, Tim Ansell, Quintus Kilbourn, Tina Zhen, Balvi volunteers, and GrapheneOS developers for their feedback and discussions.

Perhaps the biggest trend of this century so far can be summed up in this phrase: "The internet has become real life." It started with email and instant messaging. Private conversations, conducted for millennia through mouth, ear, pen, and paper, now run on digital infrastructure. Then we had digital finance—both crypto-finance and the digitization of traditional finance itself. Then came our health: thanks to smartphones, personal health-tracking watches, and data inferred from purchase history, all sorts of information about our bodies is being processed through computers and computer networks. Over the next twenty years, I expect this trend to take over a wide range of other areas, including various government processes (even voting, eventually), monitoring of physical and biological indicators and threats in the public environment, and eventually, through brain-computer interfaces, even our thoughts.

I do not believe these trends are avoidable: their benefits are too great, and in a highly competitive global environment, civilizations that reject these technologies will lose first competitiveness and then sovereignty to those that embrace them. However, in addition to offering powerful benefits, these technologies also profoundly affect power dynamics, both within and between nations.

The civilizations that benefit most from new waves of technology are not those that consume them, but those that produce them. Centrally planned programs for equal access to locked-down platforms and APIs can deliver only a fraction of this at best, and they fail in circumstances outside a predetermined "normal" range. Furthermore, this future involves placing a great deal of trust in technology. If that trust is broken (e.g., through backdoors or security failures), we will have very serious problems. Even the mere possibility of broken trust forces people back onto fundamentally exclusionary models of social trust ("Was this thing built by someone I trust?"). This creates incentives that propagate up the stack: the sovereign is he who decides on the state of exception.

Avoiding these problems requires that the entire technology stack—software, hardware, and biotechnology—have two intertwined characteristics: true openness (i.e., open source, including liberal licensing) and verifiability (ideally, verifiable directly by end users).

The internet is real life. We want it to be a utopia, not a dystopia.

The importance of openness and verifiability in health

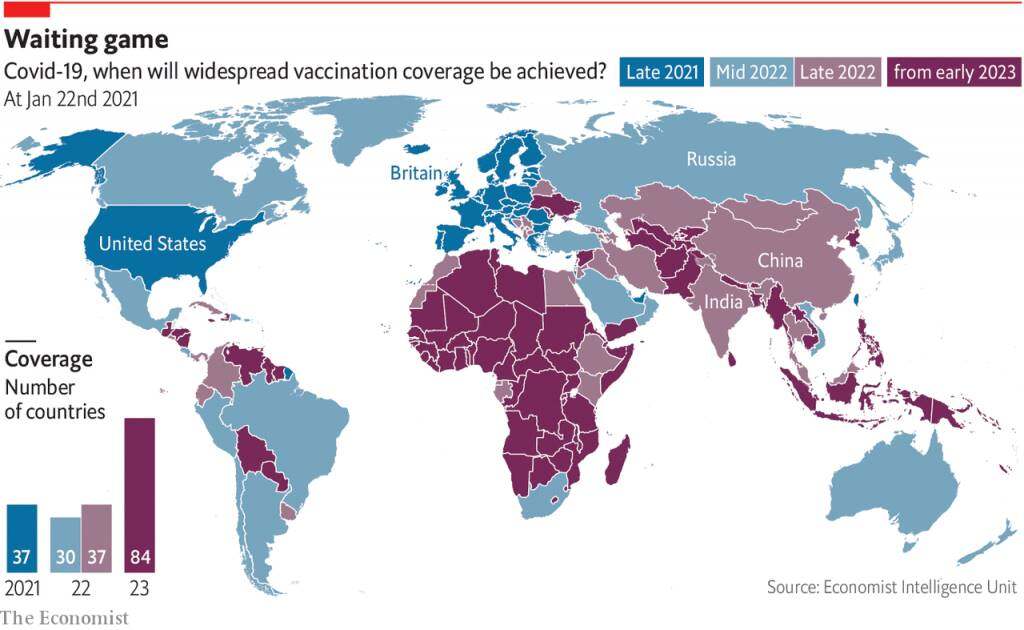

We saw the consequences of unequal access to the means of technological production during the COVID-19 pandemic. Vaccines were produced in only a few countries, leading to huge disparities in when they became available. Wealthier countries received high-quality vaccines in 2021, while others received lower-quality vaccines in 2022 or 2023. There were initiatives to ensure equal access, but because the vaccines were designed around capital-intensive, proprietary manufacturing processes that could only be carried out in a few places, these initiatives could only do so much.

Covid vaccine coverage 2021-23.

The second major problem with vaccines was the opaque science and communications strategy that tried to convince the public that they carried zero risks or downsides. This was untrue, and it ultimately fueled a distrust that has since grown into what feels like a rejection of half a century of science.

In reality, both of these problems are solvable. Vaccines like PopVax, funded by Balvi, are cheaper to develop and are made with a more open process, which reduces inequality of access while making their safety and efficacy easier to analyze and verify. We could go further still and design vaccines for verifiability from the start.

Similar issues apply to the digital side of biotechnology. When you talk to longevity researchers, one of the first things you will almost always hear is that the future of anti-aging medicine is personalized and data-driven. To know which medications and nutritional changes to recommend to a person today, you need to understand the current state of their body. This is much more effective if large amounts of data can be collected and processed digitally, in real time.

This watch collects 1000 times more data about you than Worldcoin. This has both advantages and disadvantages.

The same idea applies to defensive biotechnologies aimed at preventing downside risks, such as fighting pandemics. The earlier a pandemic is detected, the more likely it is to be stopped at the source; and even if that is not possible, each extra week provides more time to prepare and start working on countermeasures. While a pandemic is ongoing, knowing where people are getting sick is very valuable, because it lets countermeasures be deployed in real time. If the average infected person learns they are sick and self-isolates within an hour, that implies up to 72 times less spread than if they went around infecting others for three days (one hour versus 72 hours). If we know which 20% of locations are responsible for 80% of the spread, improving air quality in those places can bring further gains. All of this requires (i) many, many sensors and (ii) the ability for these sensors to communicate in real time to feed information into other systems.

If we go further in the "science fiction" direction, we'll find that brain-computer interfaces could enable higher productivity, help people better understand each other through telepathic communication, and open a safer path to highly intelligent artificial intelligence.

If the infrastructure for biometric and health tracking (both personal and spatial) is proprietary, the data will by default end up in the hands of large corporations. These companies will be able to build all kinds of applications on top of it, while others will not. They may offer it through API access, but that access will be restricted, used to extract monopoly rents, and revocable at any time. This means that only a small number of individuals and companies have access to the most important ingredients of a major area of 21st-century technology, which in turn limits who can benefit economically from it.

On the other hand, if this personal health data is not secure, anyone who hacks it can blackmail you over any health issue, optimize the pricing of insurance and healthcare products to extract value from you, and, if the data includes location tracking, know where to wait in order to kidnap you. In the other direction, your location data (which is very often hacked) can be used to infer information about your health. If your brain-computer interface is hacked, hostile actors are effectively reading (or worse, writing) your thoughts. This is no longer science fiction: see here for a plausible attack in which a BCI hack could cause someone to lose motor control.

All in all, the benefits are huge, but so are the risks, and a strong focus on openness and verifiability is well suited to mitigating those risks.

The importance of openness and verifiability in personal and commercial digital technologies

Earlier this month, I had to fill out and sign a form required for a legal function. I was out of the country at the time. A national electronic signature system existed, but I hadn't set it up. I had to print out the form, sign it, walk to a nearby DHL, spend a considerable amount of time filling out the paper form, and then pay to have it express-shipped halfway across the world. Time required: half an hour; cost: $119. That same day, I had to sign a (digital) transaction to execute an action on the Ethereum blockchain. Time required: 5 seconds; cost: $0.10 (and, to be fair, without the blockchain, a digital signature can be completely free).

Such stories are easy to find in areas like corporate and nonprofit governance, intellectual property management, and more. Over the past decade, you could find them in a significant share of blockchain startup pitch decks. And on top of all this, there is the mother of all use cases for "exercising personal power digitally": payments and finance.

Of course, all of this carries significant risk: what if the software or hardware is hacked? This is a risk the crypto community recognized early: blockchains are permissionless and decentralized, so if you lose access to your funds, there is no recourse, no uncle in the sky to call for help. Not your keys, not your coins. For this reason, the crypto community started thinking early about multisig and social recovery wallets, as well as hardware wallets. In reality, however, there are many situations where the lack of a trusted uncle in the sky is not an ideological choice but an inherent part of the scenario. In fact, even in traditional finance, this "uncle in the sky" fails to protect most people: for example, only 4% of scam victims recover their losses. In use cases involving the custody of personal data, reversing a leak is impossible even in principle. Therefore, we need true verifiability and security, in software and, ultimately, in hardware.
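Multisig and social recovery both rest on a threshold idea: no single party can act alone, but any M of N designated parties can. A minimal sketch of one related technique, Shamir secret sharing, follows. It is a swapped-in illustration rather than how the article says such wallets work: deployed social recovery wallets typically have guardians approve a key change in a smart contract instead of reconstructing a key. The prime, the 3-of-5 parameters, and the function names are assumptions chosen for illustration.

```python
import secrets

# Assumption: secrets are integers below this Mersenne prime (2**127 - 1).
PRIME = 2**127 - 1


def split_secret(secret: int, threshold: int, num_shares: int) -> list[tuple[int, int]]:
    """Split `secret` into points on a random polynomial; any `threshold` points recover it."""
    if not 0 <= secret < PRIME:
        raise ValueError("secret must be in [0, PRIME)")
    if not 1 <= threshold <= num_shares < PRIME:
        raise ValueError("need 1 <= threshold <= num_shares < PRIME")
    # Degree (threshold - 1) polynomial whose constant term is the secret.
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]
    shares = []
    for x in range(1, num_shares + 1):
        y = 0
        for c in reversed(coeffs):  # Horner's rule, reduced mod PRIME
            y = (y * x + c) % PRIME
        shares.append((x, y))
    return shares


def recover_secret(shares: list[tuple[int, int]]) -> int:
    """Lagrange-interpolate the shares at x = 0 to get the constant term back."""
    total = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = num * (-xj) % PRIME
                den = den * (xi - xj) % PRIME
        total = (total + yi * num * pow(den, -1, PRIME)) % PRIME
    return total


# Hypothetical 3-of-5 setup: e.g. two of your own devices, two friends, one institution.
wallet_key = secrets.randbelow(PRIME)
shares = split_secret(wallet_key, threshold=3, num_shares=5)
assert recover_secret(shares[:3]) == wallet_key
assert recover_secret([shares[0], shares[2], shares[4]]) == wallet_key
```

Any two shares on their own reveal nothing about the key, which is what lets the recovery parties be people who do not fully trust one another. A real deployment would also need authenticated channels and a way to check that shares are valid (verifiable secret sharing), which this sketch omits.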

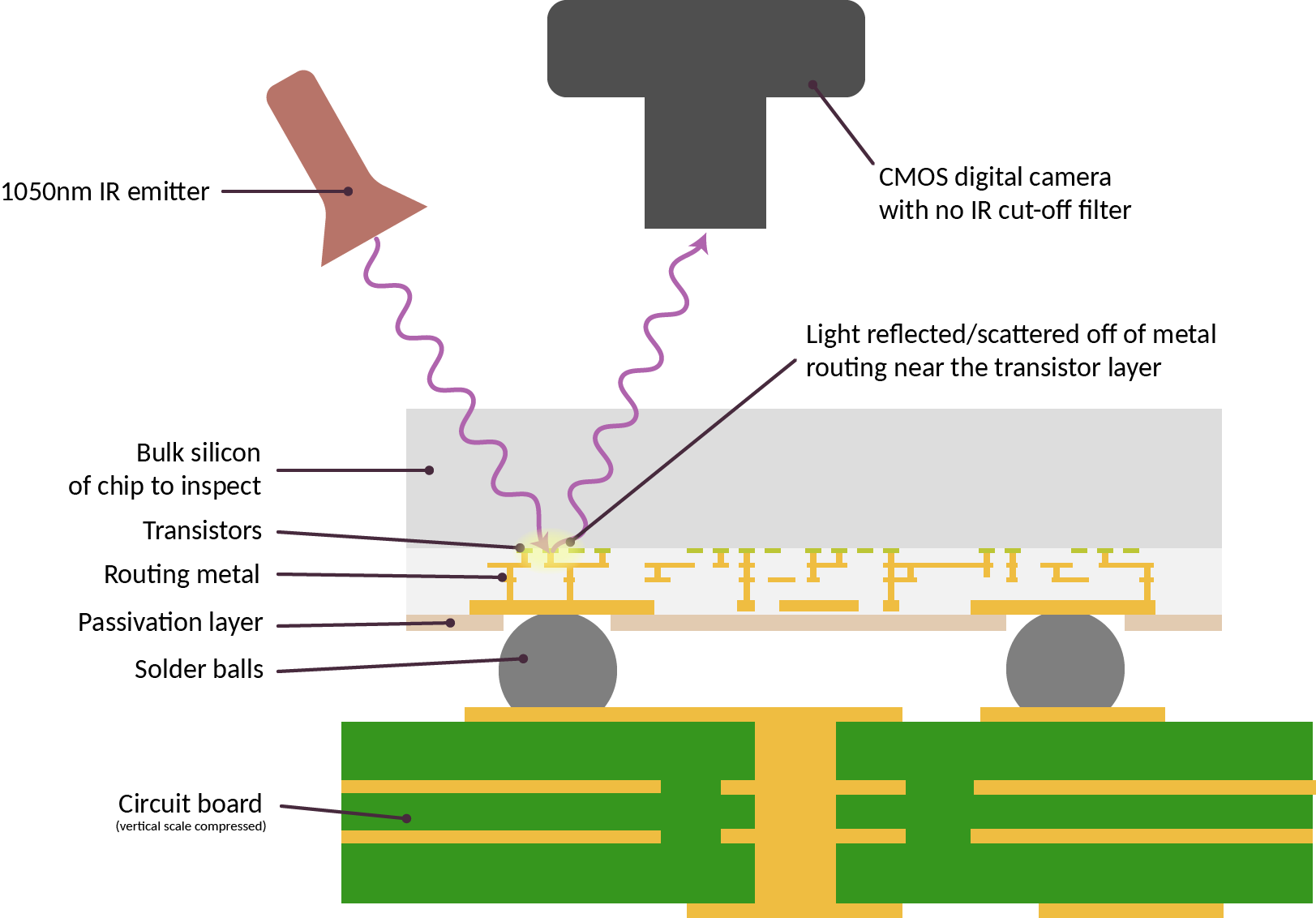

A proposed technique for checking that computer chips are manufactured correctly.

Importantly, when it comes to hardware, the risks we seek to protect against go far beyond the question of whether the manufacturer is evil. Rather, the problem is that there are numerous dependencies, most of them closed-source, and negligence in any one of them can lead to unacceptable security outcomes. This paper presents recent examples of how microarchitectural choices can undermine the side-channel resistance of designs that are provably secure in a model that looks at the software alone. Attacks like EUCLEAK rely on vulnerabilities that are much harder to find because so many components are proprietary. AI models can also have backdoors inserted at training time if they are trained on compromised hardware.

Another issue in all of these cases is the downside of closed and centralized systems, even if they are perfectly secure. Centralization creates ongoing leverage between individuals, companies, or nations: if your core infrastructure is built and maintained by a potentially untrustworthy company in a potentially untrustworthy country, you are vulnerable to pressure (e.g., see Henry Farrell on weaponized interdependence). This is the kind of problem that cryptocurrencies are designed to solve, but it exists in far more areas than just finance.

The importance of openness and verifiability in digital civic technology

I often talk to people from all walks of life who are trying to figure out better forms of government suited to their various contexts in the 21st century. Some, like Audrey Tang, are trying to take political systems that already function and bring them to the next level, empowering local open-source communities and using mechanisms like citizens' assemblies, sortition, and quadratic voting. Others are starting from the ground up: here's a recent proposal by some Russian-born political scientists for a constitution for Russia, featuring strong guarantees of individual liberty and local autonomy, a strong institutional bias toward peace and against aggression, and an unprecedentedly strong role for direct democracy. Others, like economists working on land value taxes or congestion pricing, are working to improve their countries' economies.

Different people may have varying degrees of enthusiasm for each idea. But one thing they all have in common is that they involve high-bandwidth participation, so any realistic implementation must be digital. Pen and paper are fine for very basic record-keeping of who owns what and for holding elections every four years, but not for anything that requires our input at higher bandwidth or frequency.

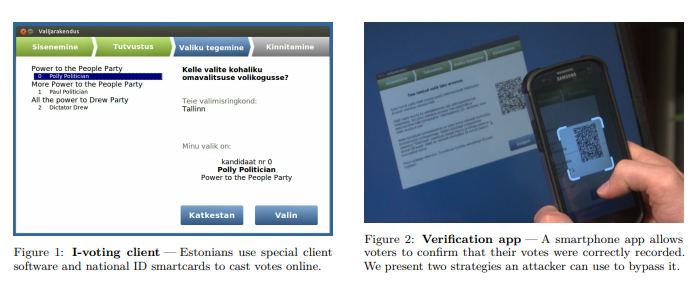

Historically, however, security researchers' reception of ideas like electronic voting has ranged from skeptical to hostile. Here's a good summary of the case against electronic voting. Quoting the paper:

First, the technology is "black box software," meaning the public is not allowed to access the software that controls voting machines. While companies protect their software to prevent fraud (and defeat competition), this also leaves the public unaware of how voting software works. This makes it easy for companies to manipulate the software to produce fraudulent results. Furthermore, the vendors selling the machines compete with each other and cannot guarantee that they are producing machines in the best interest of voters and the accuracy of votes.

There are many real-world examples that justify this skepticism.

A critical analysis of internet voting in Estonia, 2014.

These arguments apply verbatim to a variety of other situations. But I predict that as technology advances, the "let's just not do it" response will become increasingly unrealistic across a wide range of domains. The world is rapidly becoming more efficient (for better or worse) thanks to technology, and I predict that any system that doesn't follow this trend will become less and less relevant to both individual and collective affairs as people route around it. Therefore, we need an alternative: to actually do the hard thing and figure out how to make complex technical solutions secure and verifiable.

In theory, "secure and verifiable" and "open source" are two different things. It is absolutely possible for something to be proprietary and secure: airplanes are highly proprietary technology, yet commercial aviation is, by and large, a very safe way to travel. But what the proprietary model cannot achieve is common knowledge of security: the ability to be trusted by actors who do not trust each other.

Civic institutions like elections are one common situation where common knowledge of security matters. Another is evidence gathering in the courts. Recently, in Massachusetts, a large volume of breathalyzer evidence was ruled invalid because information about faults in the tests was found to have been suppressed. To quote the article:

Wait, so were all the results wrong? No. In fact, in most cases, there were no calibration issues with the breathalyzer tests. However, because investigators later discovered that the state crime lab had withheld evidence suggesting the problem was more widespread than they had stated, Judge Frank Gaziano wrote that all of these defendants' due process rights had been violated.

Due process in the courts is an area that demands not just fairness and accuracy, but common knowledge of fairness and accuracy, because without common knowledge that the courts are doing the right thing, society can easily slide into a situation where people take matters into their own hands.

Beyond verifiability, openness itself has inherent benefits. Openness allows local groups to design systems for governance, identity, and other needs in ways that fit local goals. If voting systems were proprietary, a country (or province or town) that wanted to experiment with a new system would have a much harder time: it would either have to convince the company to implement its preferred rules as a feature, or start from scratch and do all the work to make the system secure. This adds a high cost to innovation in political institutions.

In any of these areas, a more open-source, hacker-ethic approach would put more agency in the hands of local implementers, whether they are acting as individuals or as part of a government or corporation. For this to be possible, open tools for building need to be widely available, and infrastructure and codebases need to be liberally licensed so that others can build on them. To the extent that the goal is to minimize power differentials, copyleft is especially valuable.

The final area of civic technology that will matter greatly in the coming years is physical security. Surveillance cameras have been popping up everywhere over the past two decades, raising numerous civil liberties concerns. Unfortunately, I predict that recent drone warfare will make "no high-tech security" no longer a viable option. Even if a country's own laws do not infringe on a person's freedoms, that means little if the state cannot protect you from other countries (or rogue companies or individuals) imposing their laws on you instead. Drones make such attacks much easier. Therefore, we need countermeasures, which will likely involve large numbers of anti-drone systems, sensors, and cameras.

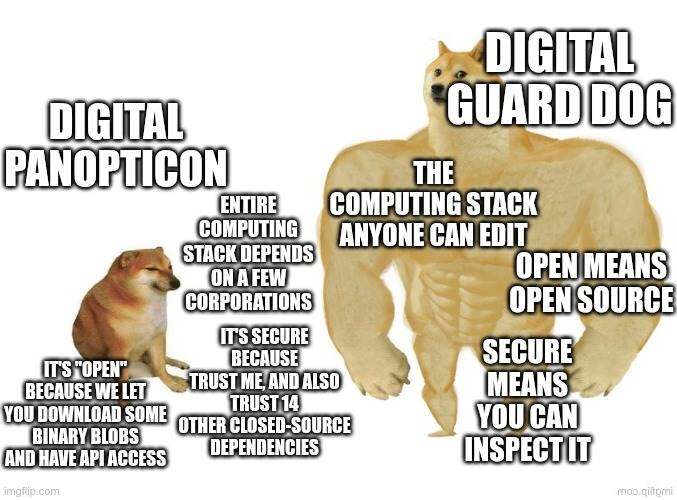

If these tools are proprietary, data collection will be opaque and centralized. If they are open and verifiable, we have the opportunity for a better approach: security equipment that provably outputs only a limited amount of data in a limited set of situations and deletes the rest. We could have a digital physical-security future that acts more like a digital guard dog than a digital panopticon. One can imagine a world where public surveillance equipment is required to be open and verifiable, and anyone has the legal right to pick a surveillance device in a public space at random, take it apart, and verify it. University computer science clubs could routinely do this as an educational exercise.
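To make "outputs only a limited amount of data" concrete, here is a minimal sketch of the kind of narrow output interface an open, auditable sensor could expose. Everything in it (the `GuardDogSensor` name, the threshold rule, the per-window alert cap) is a hypothetical assumption rather than a described design. The property an auditor would check in open code is that the only output path is a one-word alert, capped per time window, and that raw readings are never stored.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class GuardDogSensor:
    """Hypothetical sensor firmware: emits rate-limited alerts, never raw readings."""

    threshold: float
    max_alerts_per_window: int
    _alerts_sent: int = 0

    def process(self, reading: float) -> Optional[str]:
        # The raw reading is only compared against the threshold; the device
        # never stores, logs, or returns it.
        if reading >= self.threshold and self._alerts_sent < self.max_alerts_per_window:
            self._alerts_sent += 1
            return "ALERT"
        return None

    def start_new_window(self) -> None:
        # Reset the alert budget, e.g. once per hour (window length is an assumption).
        self._alerts_sent = 0


sensor = GuardDogSensor(threshold=0.9, max_alerts_per_window=2)
outputs = [sensor.process(r) for r in [0.1, 0.95, 0.97, 0.99, 0.2]]
print(outputs)  # [None, 'ALERT', 'ALERT', None, None]
```

Real equipment would need much more than this (tamper-evident hardware, and some way to confirm that this exact code is what runs), but openness is what makes even a simple property like this checkable by outsiders.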

Open source and verifiable approach

We cannot avoid digital computers becoming deeply embedded in every aspect of our lives, individual and collective. By default, we are likely to end up with digital computers built and operated by centralized corporations, optimized for the profit motives of a few, backdoored by their host governments, and with no way for most of the world to participate in their creation or to know whether they are secure. But we can try to steer toward a better alternative.

Imagine a world where:

- You have a secure personal electronic device: something with the functionality of a phone, the security of a crypto hardware wallet, and a level of inspectability that is not quite that of a mechanical watch, but pretty close.

- Your messaging apps are encrypted, message patterns are obfuscated by mixnets, and all code is formally verified. You can be confident that your private communications are, in fact, private.

- Your finances are standardized ERC-20 assets onchain (or on some server that publishes hashes and proofs to the chain to guarantee correctness), managed by a wallet controlled by your personal electronic device. If you lose your device, the assets can be recovered through a combination of your choosing of your other devices, family, friends, or institutions (not necessarily the government: if it is easy for anyone to offer this, churches, for example, may well provide it too).

- Open-source versions of infrastructure like Starlink exist, so we can get robust global connectivity without relying on a few individual players.

- You have an open-weight LLM running on your device that scans your activity, offers suggestions, autocompletes tasks, and warns you when you might be getting incorrect information or are about to make a mistake.

- The operating system is also open source and formally verified.

- You wear a 24/7 personal health tracking device that is also open source and auditable, allowing you to access your data and ensuring that no one else accesses it without your consent.

- We have more advanced forms of governance that use sortition, citizens' assemblies, quadratic voting, and, more generally, clever combinations of democratic votes to set goals, plus some method of selecting ideas from experts to determine how to achieve them. As a participant, you can actually be confident that the system is implementing the rules as you understand them.

- Public spaces are equipped with monitoring devices that track biological variables (e.g., CO2 and AQI levels, the presence of airborne diseases, wastewater). However, these devices (along with any surveillance cameras and defensive drones) are open source and verifiable, and there is a legal framework under which the public can randomly inspect them.
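The "server that publishes hashes and proofs to the chain" idea can be illustrated with a Merkle tree: the server posts one root hash, and each user checks a short inclusion proof that their own record is committed under it. This is a minimal sketch under stated assumptions: SHA-256, the `0x00`/`0x01` leaf/node prefixes, duplicating the last node on odd levels, and the `name:balance` record format are illustrative choices, not any particular chain's format. It only shows commitment and inclusion; proving that the server's state transitions are correct would need more, such as ZK-SNARKs.

```python
import hashlib


def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def leaf_hash(record: bytes) -> bytes:
    # 0x00 prefix separates leaf hashes from internal-node hashes.
    return _h(b"\x00" + record)


def node_hash(left: bytes, right: bytes) -> bytes:
    return _h(b"\x01" + left + right)


def build_levels(records: list[bytes]) -> list[list[bytes]]:
    """All tree levels, leaves first; odd levels are padded by repeating the last node."""
    if not records:
        raise ValueError("need at least one record")
    levels = [[leaf_hash(r) for r in records]]
    while len(levels[-1]) > 1:
        level = levels[-1]
        if len(level) % 2 == 1:
            level.append(level[-1])
        levels.append([node_hash(level[i], level[i + 1]) for i in range(0, len(level), 2)])
    return levels


def inclusion_proof(levels: list[list[bytes]], index: int) -> list[tuple[bytes, bool]]:
    """Sibling hashes from leaf to root, each tagged with whether it sits on the left."""
    proof = []
    for level in levels[:-1]:
        sibling = index ^ 1
        proof.append((level[sibling], sibling < index))
        index //= 2
    return proof


def verify(root: bytes, record: bytes, proof: list[tuple[bytes, bool]]) -> bool:
    acc = leaf_hash(record)
    for sibling, sibling_is_left in proof:
        acc = node_hash(sibling, acc) if sibling_is_left else node_hash(acc, sibling)
    return acc == root


# Hypothetical server state: the server publishes only `root` onchain.
records = [b"alice:10", b"bob:5", b"carol:7"]
levels = build_levels(records)
root = levels[-1][0]
proof = inclusion_proof(levels, 1)      # Bob's proof, handed to Bob
print(verify(root, b"bob:5", proof))    # True
print(verify(root, b"bob:500", proof))  # False
```

Duplicating the last node on odd levels is a simplification with a known pitfall (a list ending in `c` and the same list ending in `c, c` share a root), so real designs fix the leaf count or use a different padding rule.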

This is a world with more security and freedom, and more equal access to the global economy, than we have today. Making it happen will require much greater investment in a variety of technologies:

- More advanced forms of cryptography. What I call the "Egyptian god cards" of cryptography (ZK-SNARKs, fully homomorphic encryption, and obfuscation) are so powerful because they let you compute arbitrary programs on data in a multi-party context and guarantee the output while keeping both the data and the computation private. This enables much more powerful privacy-preserving applications. Tools adjacent to cryptography also apply here: for example, blockchains let applications strongly guarantee that data has not been tampered with and that users have not been excluded, and differential privacy adds noise to data to further protect privacy.

- Application and user-level security. An application is secure only if the security guarantees it makes can actually be understood and verified by the user. This will involve software frameworks that make it easy to build applications with strong security properties. Importantly, it will also involve browsers, operating systems, and other intermediaries (such as locally running observers) all doing their part to verify applications, determine their risk level, and present this information to the user.

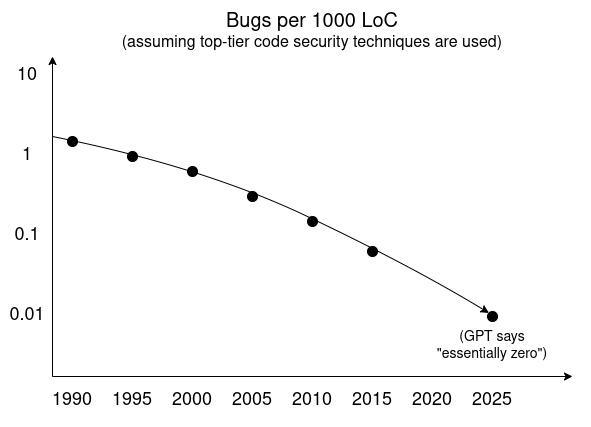

- Formal verification. We can use automated proving methods to algorithmically verify that programs satisfy properties we care about, such as not leaking data or not being vulnerable to unauthorized third-party modification. Lean has recently become a popular language for this. These techniques are already starting to be used to verify ZK-SNARK proving algorithms for the Ethereum Virtual Machine (EVM) and other high-value, high-risk use cases in crypto, and are also being used more broadly. Beyond this, we need further progress in other, more mundane security practices.
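The cryptography item mentions differential privacy, which adds noise to data to protect privacy. A minimal sketch of its textbook form, the Laplace mechanism, for a counting query: adding or removing one person changes a count by at most 1, so adding Laplace noise with scale 1/ε gives ε-differential privacy. The function names and the example query are hypothetical, and naive floating-point noise like this has known subtle weaknesses, so treat it as an illustration rather than a production implementation.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    # The difference of two independent exponentials with mean `scale`
    # is Laplace-distributed with location 0 and that scale.
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(true_count: int, epsilon: float, rng: random.Random,
                  sensitivity: float = 1.0) -> float:
    """Laplace mechanism: release a count with epsilon-differential privacy."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    return true_count + laplace_noise(sensitivity / epsilon, rng)


# Hypothetical query: how many households on a street reported flu symptoms today.
rng = random.Random(2025)
print(round(private_count(37, epsilon=0.5, rng=rng), 2))
```

Smaller ε means more noise and stronger privacy. Answering many queries uses up the privacy budget, since the ε values of repeated releases add up.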

The cybersecurity fatalism of the 2000s was wrong: bugs (and backdoors) can be defeated. We “just” have to learn to prioritize security over other competing goals.

- Open source and security-focused operating systems. More and more are starting to emerge: GrapheneOS as a security-focused version of Android, minimal security-focused kernels like Asterinas, and Huawei's HarmonyOS (which has an open-source version), which uses formal verification. (I expect many readers will think "if it's Huawei, it must have backdoors," but this misses the point: it doesn't matter who produces something, as long as it's open and anyone can verify it. This is a great example of how openness and verifiability can combat global balkanization.)

- Secure open-source hardware. No software can be secure if you can't be sure your hardware is actually running that software and isn't separately leaking data on the side. I'm most interested in two short-term goals in this area:

  - Personal secure electronic devices: what blockchain people call "hardware wallets" and open source enthusiasts call "secure phones". Once you understand the need for both security and general-purpose functionality, the two ultimately converge to the same thing.

  - The physical infrastructure of public spaces: smart locks, the biometric monitoring devices I described above, and general "internet of things" technology. We need to be able to trust it, and that requires openness and verifiability.

- A secure, open toolchain for building open-source hardware. Today, hardware design relies on a cascade of closed-source dependencies. This significantly increases the cost of making hardware and makes the process far more permissioned. It also makes hardware verification impractical: if the tools that generated the chip design are closed-source, you don't know what you're verifying against. Even techniques like scan chains that exist today are often unusable in practice because so many of the necessary tools are closed-source. This can all change.

- Hardware verification (e.g., IRIS and X-ray scanning). We need ways to scan chips to verify that they actually contain the logic they are supposed to contain, and that they have no extra components allowing unexpected forms of tampering and data extraction. This can be done destructively: auditors randomly order products containing computer chips (using identities that look like average end users), then take the chips apart and verify that the logic matches. With IRIS or X-ray scanning, it can be done non-destructively, potentially allowing every chip to be scanned.

  - To achieve common knowledge of trust, we ideally want hardware verification techniques that are accessible to large groups of people. Today's X-ray machines are not there yet. This can be improved in two ways. First, we can improve verification equipment (and the verification-friendliness of chips) so that it becomes more widely accessible. Second, we can supplement "full verification" with more limited forms of verification that can be done even on smartphones (such as ID tags and signatures from keys generated by physically unclonable functions), which verify narrower claims, such as "Is this machine part of a batch produced by a known manufacturer, a known random sample of which has been thoroughly verified by third-party groups?"

- Open-source, low-cost, local environmental and biological monitoring devices. Communities and individuals should be able to measure their environment and themselves and identify biological risks. This includes technologies in many form factors: personal-scale medical devices like OpenWater, air quality sensors, general-purpose airborne disease sensors (e.g., Valor), and larger-scale environmental monitoring.
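The claim about a batch "a known random sample of which has been thoroughly verified" has simple arithmetic behind it. A minimal sketch, assuming chips are sampled uniformly without replacement and that every inspected tampered chip is caught (a generous assumption for real teardown or X-ray work): the chance that an audit misses every tampered chip is the hypergeometric probability C(N - k, n) / C(N, n), for a batch of N chips with k tampered and n sampled. The batch size and tampering rate in the example are hypothetical.

```python
from math import comb


def miss_probability(batch_size: int, tampered: int, sampled: int) -> float:
    """Chance that a uniform random sample (without replacement) contains no tampered chip."""
    if not (0 <= tampered <= batch_size and 0 <= sampled <= batch_size):
        raise ValueError("counts must lie within the batch")
    # comb() returns 0 when sampled > batch_size - tampered, i.e. a miss is impossible.
    return comb(batch_size - tampered, sampled) / comb(batch_size, sampled)


def samples_needed(batch_size: int, tampered: int, max_miss: float) -> int:
    """Smallest sample size whose miss probability is at most `max_miss`."""
    for n in range(batch_size + 1):
        if miss_probability(batch_size, tampered, n) <= max_miss:
            return n
    raise ValueError("no sample size reaches the target (is anything tampered?)")


# Hypothetical batch: 10,000 chips, 1% of them tampered, target miss chance 5%.
n = samples_needed(10_000, 100, 0.05)
print(n, miss_probability(10_000, 100, n))
```

Under these assumptions, a few hundred randomly chosen chips make it unlikely that 1% tampering in a 10,000-chip batch goes unnoticed, which is why keeping the sample genuinely random and publicly known matters as much as the inspection itself.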

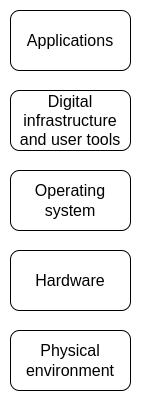

Openness and verifiability at every layer of the stack are important.

From here to there

A key difference between this vision and more "traditional" visions of technology is that it is far friendlier to local sovereignty, individual empowerment, and freedom. Security is achieved less by scouring the entire world to make sure there are no bad actors anywhere, and more by making the world more robust at every level. Openness means building and improving every layer of technology in the open, not just centrally curated open-access API programs. Verification is not the preserve of proprietary rubber-stamp auditors who may well be colluding with the companies and governments rolling out the technology; it is a right of the people and a socially encouraged hobby.

I believe this vision is more robust and better suited to our fragmented 21st-century world. But we don't have unlimited time to execute on it. Centralized approaches to security are advancing rapidly, including more centralized data collection, more backdoors, and reducing verification entirely to "it was made by a trusted developer or manufacturer." Centralized substitutes for true open access have been attempted for decades, perhaps starting with Facebook's internet.org, and they will continue, each attempt more sophisticated than the last. We need to act quickly, both to compete with these approaches and to make the public case to people and institutions that better solutions are possible.

If we can successfully realize this vision, one way to understand the world we get is as a kind of retro-futurism. On the one hand, we benefit from more powerful technologies that allow us to improve our health, organize ourselves more efficiently and resiliently, and protect ourselves from threats both old and new. On the other hand, we get a world that brings back properties that were second nature to everyone in 1900: infrastructure that people are free to take apart, verify, and modify to suit their own needs; the ability for anyone to participate not just as a consumer or an "app builder," but at any layer of the stack; and confidence for everyone that a device does what it says it does.

Designing for verifiability comes at a cost: many hardware and software optimizations that deliver much-needed speed gains do so by making designs harder to understand or more fragile. Open source also makes it harder to make money under many standard business models. I believe both issues are overstated, but the world will not be convinced of this overnight. This raises the question: what is a pragmatic short-term goal to aim for?

I'll propose one answer: work toward a fully open-source, verification-friendly stack targeted at high-security, non-performance-critical applications, both consumer and institutional, remote and in-person. This would span hardware, software, and biotechnology.

Most computations that truly require security don't necessarily require speed. Even where speed is essential, there are often ways to combine high-performance, untrusted components with trusted, low-performance components to achieve high levels of performance and trust for many applications. Achieving maximum security and openness is unrealistic for everything. But we can start by ensuring these properties are available in the areas where they truly matter.
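One well-known instance of combining a fast, untrusted component with a slow, trusted one (not an example the article itself gives) is Freivalds' algorithm for checking matrix products. The untrusted side does the expensive multiplication; the trusted side only multiplies by random 0/1 vectors, which costs O(n²) per round instead of O(n³), and a wrong answer survives each round with probability at most 1/2. In this sketch, `untrusted_matmul` is a hypothetical stand-in for an accelerator we do not trust.

```python
import random
from typing import Optional

Matrix = list[list[int]]


def mat_vec(m: Matrix, v: list[int]) -> list[int]:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def untrusted_matmul(a: Matrix, b: Matrix) -> Matrix:
    # Hypothetical stand-in for a fast but untrusted accelerator.
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def freivalds_check(a: Matrix, b: Matrix, c: Matrix, rounds: int = 40,
                    rng: Optional[random.Random] = None) -> bool:
    """Accept c as a*b unless a random 0/1 vector exposes a mismatch."""
    if rng is None:
        rng = random.Random()
    for _ in range(rounds):
        r = [rng.randint(0, 1) for _ in range(len(b[0]))]
        # Compare a(b r) with c r using only matrix-vector products, O(n^2) each.
        if mat_vec(a, mat_vec(b, r)) != mat_vec(c, r):
            return False  # definitely wrong
    return True  # wrong with probability at most 2**-rounds


rng = random.Random(1)
a = [[rng.randint(-9, 9) for _ in range(4)] for _ in range(4)]
b = [[rng.randint(-9, 9) for _ in range(4)] for _ in range(4)]
c = untrusted_matmul(a, b)
print(freivalds_check(a, b, c, rng=rng))  # True: a correct product always passes
c[1][2] += 1  # simulate a faulty or malicious result
print(freivalds_check(a, b, c, rng=rng))  # False except with probability at most 2**-40
```

The trusted checker never redoes the full multiplication, which is the point: the expensive work can move to hardware we do not trust, while the small amount of logic we must trust stays simple enough to audit.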