Wanxiang Blockchain Annual Review: Efforts to Break Through the Impossible Triangle of Public Chains (Technical Articles)

Written by: Cui Chen, Chief Economist Office of Wanxiang Blockchain

Reviewer: Zou Chuanwei, Chief Economist of Wanxiang Blockchain

2022 is coming to an end, and it is time to look back at the ups and downs of the industry this year. Technological breakthroughs, application innovations, and the rise and fall of ecosystems have all become historical footnotes in the development of the industry. As in previous years, Wanxiang Blockchain is publishing a series of annual review articles at the end of the year, covering "Public Chain Technology", "Application" and "Regulation", to record a snapshot of the industry's current development.

The impossible triangle has long been an obstacle to the development of public chain technology, which in turn limits the performance of on-chain applications. For a long time, public chain development has focused on how to break through the impossible triangle, or how to find the best balance within it. This innovation is reflected in Ethereum's updated roadmap, in EVM-compatible and modular public chains, and in high-performance public chains represented by Solana and Aptos. The sections below explain how these solutions differ, from the perspective of the impossible triangle and of the transaction process.

Understanding the Impossible Triangle

Impossible triangle concept

The most basic function of a public chain is to record information on the chain and keep it secure, that is, to prevent information from being tampered with (rolled back) in an open, trustless network. It relies on underlying components such as cryptography, the consensus mechanism and the distributed network. Cryptography includes public-private key cryptography and hash functions, which ensure that signatures are verified correctly and that the rules of the chain structure hold.

Vitalik Buterin, the founder of Ethereum, proposed in a 2017 blog post that a blockchain system can have at most two of three properties at the same time: scalability, security and decentralization. To discuss how the impossible triangle constrains public chains, and how public chains try to break through it, we first need to define these three properties and their effect on the system.

Scalability measures the transaction speed and volume that the public chain can support, and is reflected in the time from when a transaction is proposed to when it is confirmed. A public chain that processes transactions slowly struggles to support many application functions, such as instant payment, which limits the range of applications and hurts the user experience.

Security measures the system's ability to resist attacks and its reliability in the face of failures. It is mainly reflected in fault tolerance and in the difficulty of modifying the consensus. Low fault tolerance makes the system vulnerable to attack, and modifying the consensus changes transactions that were already confirmed, which is equivalent to tampering with past transaction records.

Decentralization measures how dispersed the public chain's nodes are. Since a public chain is not established through a trusted third party, it can only be maintained by a distributed peer-to-peer network. The decentralization of nodes therefore provides the system's foundation of trust, and combined with cryptography and the consensus mechanism it allows the public chain to function normally. Decentralization also represents the user's right to participate in transaction verification, and reflects the user's voice in the public chain system.

Decentralization is reflected at two levels. The first is the number of nodes: the lower the entry threshold for nodes, the more nodes there are and the higher the degree of decentralization. The second is the actual controller: if mining pools exist on the public chain, a single party in fact controls multiple nodes, which brings problems such as centralized transaction censorship.

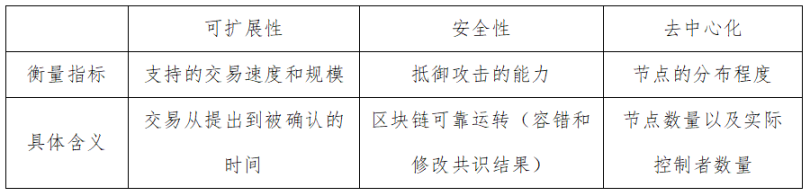

In general, the indicators and specific meanings measured by the Impossible Triangle of Public Chains are shown in the table below.

Table 1: Impossible Triangle Indicators and Their Specific Meanings

Understanding the Impossible Triangle and Optimization from the Perspective of Transaction Process

The transaction process on the blockchain can be simplified into the following four steps:

① The user signs a transaction and broadcasts it to a node, which adds it to the unconfirmed transaction pool;

② Consensus nodes verify and execute transactions, and package them into a block;

③ The block is broadcast to the other nodes in the network;

④ The other nodes verify the block, add it to the blockchain and store it.

These steps affect the three indicators of the public chain from different angles.
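The four steps can be sketched in a few lines of Python. This is a toy model under stated assumptions, not any real client: the `Node` class, its method names and the `broadcast` helper are hypothetical, signature checking is reduced to a non-empty field, and consensus is omitted entirely.

```python
import hashlib
import json
from dataclasses import dataclass, field


def block_hash(parent: str, txs: list) -> str:
    """Hash the block body so that any change to its content is detectable."""
    body = {"parent": parent, "txs": txs}
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


@dataclass
class Node:
    """A toy node holding an unconfirmed pool and a local copy of the chain."""
    mempool: list = field(default_factory=list)
    chain: list = field(default_factory=list)

    def tip(self) -> str:
        return self.chain[-1]["hash"] if self.chain else "genesis"

    def receive_transaction(self, tx: dict) -> None:
        # Step 1: a signed transaction enters the unconfirmed pool.
        if tx.get("signature"):  # placeholder for real signature verification
            self.mempool.append(tx)

    def build_block(self) -> dict:
        # Step 2: package the pending transactions into a block.
        txs = list(self.mempool)
        self.mempool.clear()
        return {"parent": self.tip(), "txs": txs, "hash": block_hash(self.tip(), txs)}

    def accept_block(self, block: dict) -> bool:
        # Step 4: re-check the parent link and the hash, then store the block.
        expected = block_hash(block["parent"], block["txs"])
        if block["parent"] != self.tip() or block["hash"] != expected:
            return False
        self.chain.append(block)
        return True


def broadcast(block: dict, peers: list) -> list:
    # Step 3: send the block to every peer; each peer validates it on its own.
    return [peer.accept_block(block) for peer in peers]
```

Every peer repeats the verification in `accept_block`, which is why adding nodes or enlarging blocks lengthens steps ③ and ④.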

1. Scalability

Scalability is affected by steps ②, ③ and ④. In step ②, the speed of transaction verification, execution and consensus affects scalability. Factors such as the blockchain's account model, virtual machine and consensus mechanism all affect how quickly step ② completes. Taking a change of consensus as an example, shortening the consensus time by reducing the number of consensus nodes lowers the degree of decentralization of the system.

In step ③, a large number of nodes slows down synchronization among them. When scalability is improved by expanding the block capacity, it becomes difficult to broadcast the block to all nodes within the originally planned time. If the network is not fully synchronized, nodes process transactions on top of different blocks, which leads to forks and affects the security of the network. If synchronization is instead accelerated by reducing the number of nodes, the decentralization of the system suffers.

Step ④ is the final confirmation of transactions by the network. If nodes can verify a block quickly after receiving it, scalability improves, but this comes with similar costs to security and decentralization.

2. Security

The security of the system depends on how difficult it is to attack steps ②, ③ and ④, that is, how difficult it is for malicious nodes to take control of them. This applies especially to step ②, where it shows up in the performance of the consensus mechanism. A consensus mechanism with low fault tolerance, or one that is easily manipulated by malicious parties, reduces system security or pushes nodes toward centralization.

3. Decentralization

Distributed nodes are the foundation of the public chain. The more nodes join, the more participants endorse the public chain and the lower the risk of a single point of failure. More nodes also raise the cost of an attack, because a malicious actor needs to control a larger number of nodes. Increasing the degree of decentralization requires a lower entry cost for nodes, but as mentioned above, increasing the number of nodes under the same security conditions reduces the scalability of the system.

When decentralization is understood from the perspective of who actually controls the nodes, the focus is on "censoring transactions". If the nodes responsible for packaging transactions select and order them according to their own preferences, some transactions will struggle to be executed and confirmed on the chain after they are proposed. In other words, transactions proposed in step ① are unlikely to be selected and verified in step ②.

In general, the public chain can be improved at several steps of the transaction process, but because of the impossible triangle, optimizing one aspect is always accompanied by a negative impact on at least one other. The public chain needs to find a balance within the impossible triangle in order to serve more application scenarios. The following sections cover the optimization attempts of different public chains at each step, including Ethereum's latest roadmap, Ethereum homogeneous public chains and high-performance public chains.

Ethereum: Optimizing the Impossible Triangle with New Technologies and Frameworks

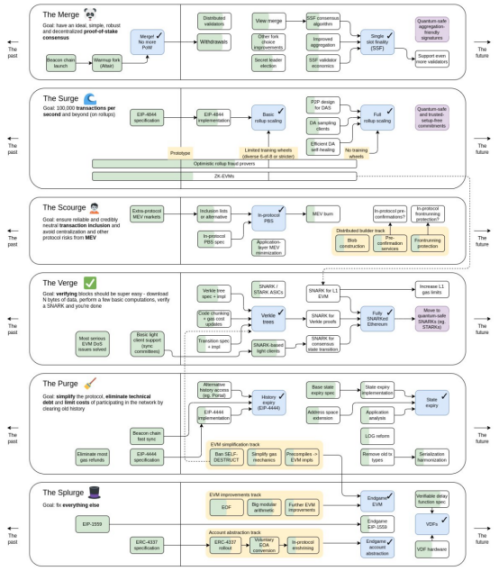

In the recently announced roadmap of Ethereum, we can see some improvements in the impossible triangle and user experience.

Figure 1: The latest roadmap of Ethereum

Merge: The consensus mechanism is transformed from PoW to PoS

The consensus mechanism mainly affects block production and the synchronization of verification. After Ethereum's conversion to PoS, the LMD GHOST + Casper FFG consensus mechanism is adopted to achieve two goals. First, a block is produced in each slot (12 seconds), together with the corresponding attestation votes. Second, blocks reach finality after two epochs (one epoch is 32 slots), and rolling back a finalized block requires destroying at least one-third of the ETH staked on the chain.

In the planning of the Merge phase, Ethereum also intends to shorten the time to finality to a single slot, so that transaction confirmation no longer requires several minutes of waiting. This would achieve higher efficiency and improve the user experience. However, the consensus algorithm needs to be changed to achieve single-slot finality, which may lower the cost of attacking the chain (changing the consensus) and reduce the number of nodes taking part in verification, affecting the security and decentralization of the public chain.
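The finality delay implied by these figures can be worked out directly. The sketch below only restates the numbers quoted above (12-second slots, 32 slots per epoch, two epochs); the function names are hypothetical and the total stake passed to `min_stake_to_revert` is an illustrative input, not a measured value.

```python
SLOT_SECONDS = 12        # one slot, as quoted in the text
SLOTS_PER_EPOCH = 32     # one epoch, as quoted in the text
EPOCHS_TO_FINALITY = 2   # finality after two epochs, as quoted in the text


def finality_delay_seconds(epochs: int = EPOCHS_TO_FINALITY) -> int:
    """Seconds from block proposal to finality under the quoted parameters."""
    return epochs * SLOTS_PER_EPOCH * SLOT_SECONDS


def min_stake_to_revert(total_staked_eth: float) -> float:
    """Stake that must be destroyed to roll back a finalized block (one third)."""
    return total_staked_eth / 3


print(finality_delay_seconds() / 60)  # 12.8 (minutes)
```

Under these parameters finality takes 768 seconds, or 12.8 minutes, compared with 12 seconds if finality were reached within a single slot.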

Surge: Rollup and Danksharding work together to increase transaction processing speed

Ethereum scales through Layer 2, specifically through Rollups. The Layer 2 network executes off-chain what would otherwise be executed on the main network, and then submits verifiable results back to the chain. At present, Rollups on Ethereum are still dominated by the Optimistic and ZK routes.

Optimistic Rollups, because of their general-purpose design, have a first-mover advantage in the number of users and in total value locked. Their sequencers are controversial: the current Arbitrum and Optimism sequencers are both operated in a centralized way, which is likely to cause transaction censorship problems. ZK Rollups focus on two issues. The first is the construction of a zkEVM, where the choice between EVM compatibility and a fully independent virtual machine is also a choice between practicality and performance. The second is accelerating zero-knowledge proof generation, for which hardware devices are one option. To further reduce the cost of on-chain data availability, both types of Rollup have produced variants that store data off-chain. These suit scenarios that require high-frequency interaction, but increase the trust placed in nodes.

Rollups seem to have solved the impossible triangle problem of the public chain, but they have two inherent problems. First, there is an upper limit to a Rollup's processing capacity. A Rollup relies on the underlying network, whose carrying capacity determines what the Rollup can do. Second, different Rollups on the same chain create interoperability problems.

To let Rollups play a greater role, Ethereum's EIP 4844 (proto-danksharding) proposes to expand block capacity with blob data blocks that carry the data Rollups return to the main chain. Although expanding block capacity improves scalability, reaching consensus on and synchronizing large amounts of data also causes problems. Therefore, the Surge phase also plans to launch DAS (Data Availability Sampling).

DAS divides the data into several pieces so that a node does not need to download and verify all of it. The node only needs to randomly download a part of the data to check whether any data is missing. Erasure codes improve the detection accuracy of DAS: they extend the data with additional pieces from which lost original data can be restored, acting as a data redundancy mechanism. The validity of the erasure-coded extension is guaranteed by the cryptographic mechanism KZG.
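To make the redundancy idea concrete, here is a minimal Reed-Solomon-style sketch: k chunks are treated as evaluations of a polynomial and extended to 2k evaluations, so any k survivors restore the originals. The prime modulus, the integer chunk encoding and the function names are assumptions for illustration; KZG commitments are not modelled.

```python
P = 2**31 - 1  # a prime modulus chosen for illustration only


def interpolate(points: list, x: int) -> int:
    """Evaluate at x the unique polynomial through `points`, modulo P (Lagrange form)."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        # pow(den, -1, P) is the modular inverse (Python 3.8+).
        total = (total + yi * num * pow(den, -1, P)) % P
    return total


def extend(chunks: list) -> list:
    """Double k chunks to 2k: the first k are the originals, the rest are redundancy."""
    k = len(chunks)
    points = list(enumerate(chunks))
    return chunks + [interpolate(points, x) for x in range(k, 2 * k)]


def recover(available: dict, k: int) -> list:
    """Rebuild the k original chunks from any k surviving {index: value} entries."""
    if len(available) < k:
        raise ValueError("more than half of the extended data is missing")
    points = list(available.items())[:k]
    return [interpolate(points, x) for x in range(k)]
```

For example, `recover({1: ext[1], 4: ext[4], 6: ext[6], 7: ext[7]}, 4)` returns the four original chunks when `ext = extend(data)`, even though three originals were lost.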

Suppose there are 4 data blocks to verify and 1 of them is missing: a node that samples a single block has a 25% probability of noticing. After erasure codes double the data to 8 blocks, the original data becomes unrecoverable only if more than 50% of the blocks are lost, so in that case each sample detects the loss with a probability above 50%. As the number of validating nodes increases, the probability of discovering data loss also increases. With n nodes sampling randomly and 50% of the data lost, the probability that every node happens to sample a block that is not lost is only (1/2)^n. With a large number of nodes, DAS is therefore sufficient to ensure data security.

In general, improving scalability by increasing block capacity reduces synchronization efficiency and affects the security of the system. To speed up synchronization, reduce the storage burden on nodes and keep decentralization sufficient, the only option is to improve the mechanism through cryptography, and even then the overall security of the network is still affected.
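The sampling argument can be checked numerically. This is a minimal sketch, assuming every node draws its samples uniformly and independently; `miss_probability` and its parameters are hypothetical names introduced for illustration.

```python
def miss_probability(n_nodes: int,
                     available_fraction: float = 0.5,
                     samples_per_node: int = 1) -> float:
    """Probability that every sample from every node lands on an available block."""
    return available_fraction ** (n_nodes * samples_per_node)


def detection_probability(n_nodes: int, available_fraction: float = 0.5) -> float:
    """Probability that at least one node samples a missing block."""
    return 1.0 - miss_probability(n_nodes, available_fraction)


for n in (1, 10, 20):
    print(n, miss_probability(n))
```

With half of the data missing, the chance that 20 independent samplers all miss the loss is already below one in a million.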

Separating the proposer and builder roles of nodes

Ethereum uses PBS (Proposer/Builder Separation) to split the work of nodes into two roles, Proposer and Builder. The builder is responsible for building the body of the block and submitting a bid. The proposer only needs to select the block with the highest bid, and does not know the transaction content of the block, which reduces transaction censorship.

Danksharding will place higher bandwidth requirements on builders. Builders will become centralized organizations because of these professional requirements, while proposers remain a broad decentralized group that balances the centralization risk. As long as one honest builder exists, Ethereum blocks can be produced normally. To prevent builders from censoring transactions, the proposer passes a crList, the list of transactions that the proposer requires to be packaged, and the builder must fill the block with the transactions in crList. This is a mechanism for weakening MEV. At the same time, dividing nodes into two roles keeps decentralization sufficient under large blocks.
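The interaction between bids and crList can be sketched as follows. This is a simplified illustration under assumptions, not the protocol's actual data structures: the `Bid` type and function names are hypothetical, and the inclusion check is done directly on the contents, whereas in the design described above the proposer does not see them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Bid:
    builder: str
    amount: int          # payment offered to the proposer
    tx_ids: frozenset    # transactions in the builder's block body


def satisfies_cr_list(bid: Bid, cr_list: set) -> bool:
    """A bid is eligible only if its block includes every transaction in crList."""
    return cr_list <= bid.tx_ids


def select_bid(bids: list, cr_list: set):
    """Pick the highest eligible bid, or None when no builder honours crList."""
    eligible = [b for b in bids if satisfies_cr_list(b, cr_list)]
    return max(eligible, key=lambda b: b.amount, default=None)


bids = [
    Bid("builder-1", 5, frozenset({"t1", "t2"})),
    Bid("builder-2", 9, frozenset({"t2"})),          # highest bid, but omits t1
    Bid("builder-3", 7, frozenset({"t1", "t3"})),
]
print(select_bid(bids, {"t1"}).builder)  # builder-3
```

The highest bidder loses here because it left out a transaction the proposer required, which is the censorship-resistance effect crList is meant to provide.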

Verkle Trees, Historical Expiration, and Multidimensional Fee Markets

Huge volumes of historical data affect the decentralization of Ethereum, and the ever-growing state data in particular leads to various inefficiencies. To avoid harming decentralization while realizing the scalability plans described above, additional mechanisms are needed so that the same security standards can be met and the system can operate more efficiently.

The Verkle tree is a more compact data structure for storage. Compared with the existing Merkle tree, it requires less proof space. This improvement comes from cryptography, and it works together with the historical data expiration mechanism to reduce the storage pressure on nodes and continue to lower the threshold for running a node.

The historical data expiration mechanism addresses data bloat: clients do not need to store data older than a certain period. Proto-danksharding can also implement independent logic that automatically deletes blob data after a period of time, so large blocks are no longer an obstacle to scaling. This does not mean that block data is permanently lost. Before the data is deleted, users who need it have enough time to back it up, and some parties in the network keep all historical data. These include special protocols, the Ethereum Portal Network, block explorers and data service providers, as well as individual enthusiasts and data analysis researchers.

To sum up, Ethereum urgently needs better performance, and proposes Rollups and Danksharding to achieve it. At the same time, so that more Rollup data can be stored cheaply and without bloat, it proposes a solution to data availability and mitigates the reduction in security that comes with it. Ethereum still has to repay its own technical debt, and continues to protect the decentralization of nodes through plans such as PBS and history and state expiration. By introducing new technologies and frameworks, Ethereum aims to maximize scalability while preserving decentralization and security.
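For reference, the Merkle baseline that Verkle trees are compared against can be sketched as below. This is a simplified binary tree with SHA-256 and a power-of-two leaf count, chosen for illustration; Ethereum's actual state structure is a Merkle Patricia trie, and the function names are hypothetical. It shows that a Merkle proof carries one sibling hash per tree level.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_proof(leaves: list, index: int) -> tuple:
    """Return (root, sibling hashes) for leaves[index]; len(leaves) must be a power of two."""
    level = [h(leaf) for leaf in leaves]
    proof = []
    while len(level) > 1:
        proof.append(level[index ^ 1])  # the sibling of the current node
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        index //= 2
    return level[0], proof


def verify(leaf: bytes, index: int, proof: list, root: bytes) -> bool:
    """Recompute the path from the leaf to the root using the sibling hashes."""
    node = h(leaf)
    for sibling in proof:
        node = h(node + sibling) if index % 2 == 0 else h(sibling + node)
        index //= 2
    return node == root
```

With 8 leaves the proof holds 3 hashes (96 bytes), and it grows by one hash each time the number of leaves doubles; this is the proof space that the text says Verkle trees reduce.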

Ethereum Homogeneous Public Chain: Solving Different Impossible Triangles at Different Layers

EVM compatible chain

In the past few years, Ethereum has sacrificed scalability in exchange for security and decentralization. This shows in the fact that Ethereum is the public chain with the largest number of nodes in the world and has not suffered a large-scale network outage during its years of operation. The network is not interrupted by the failure or exit of individual nodes, which proves that it has sufficient redundancy. The cost is that nodes need a long time to reach consensus and synchronize, so transaction processing is slow and transaction fees rise.

To make a simple distinction, the structure of the Ethereum main network includes an execution layer and a consensus layer. The execution layer is where nodes execute user instructions on Ethereum, including transfers and EVM operations. With a large number of nodes, consensus and synchronization are bound to slow down. Therefore, the easiest way to improve on Ethereum's performance is to modify its consensus layer and shorten the time needed for consensus and synchronization.

This can be seen in the competition among Ethereum homogeneous public chains (that is, the various EVM compatible chains). When the execution environment is the same, applications are easier to migrate, and the homogeneous public chains that adopt the Ethereum architecture have taken exactly this approach. They modified Ethereum's consensus, reducing the number of nodes and shortening the consensus time, but kept the functions of the execution layer. Although this may cause centralization problems, these chains quickly absorbed the demand spilling over from applications on Ethereum and took over from Ethereum as the place where application projects launch. BSC, Polygon and Avalanche are representative EVM compatible chains. What they have in common is a greatly reduced number of nodes participating in consensus.

Modular public chain

The "modular public chain" appeared among Ethereum's competitors. It splits the functions of Ethereum into layers and operates them as modules. This is a representative idea: although the impossible triangle exists, a point of compromise can be found within it.

Applications with different priorities choose public chains with different priorities, because their requirements for performance, security and decentralization differ. For example, a privacy public chain cannot tolerate transaction censorship, and is willing to pay extra costs to protect its decentralization. Public chains that carry financial applications pay more attention to security, while game public chains require extremely high performance and relax their requirements for decentralization.

The modular public chain therefore abstracts the requirements of each layer and divides the blockchain into a consensus layer, an execution layer, a settlement layer and a data layer. Each layer can have a variety of solutions, which are combined directly according to the needs of the chain to achieve the best result. Because the solutions at each layer are modular, a public chain can switch between them to balance application requirements, in effect sidestepping the limitation of the impossible triangle.

Ethereum Non-Homogeneous Public Chain: Rethinking the Emphasis in the Impossible Triangle

Because of Ethereum's performance bottleneck, almost all new non-homogeneous public chains have chosen a performance-first design combined with PoS consensus, and have introduced new technologies to strengthen their performance advantage or to make up for security weaknesses.

Solana first increased block capacity, so that the amount of data carried by a block increased tenfold. Second, to reduce the number of nodes involved in each synchronization, Solana announces the list of responsible nodes in advance. Each transaction only needs to be transmitted to the leader, and the other validators only need to verify the part they are responsible for rather than the entire block.

In addition, Solana makes a pre-judgment before executing transactions. If the conditions are met, transactions are processed in parallel to improve speed. If they must be processed serially, Solana falls back to a mode of operation that is less efficient than Ethereum's. Solana thus sacrifices security and decentralization in pursuit of scalability. A failure of the leader node, or an error when judging whether transactions can be processed in parallel, can cause a network outage.
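The pre-judgment can be illustrated with a small scheduler. This is a sketch under assumptions, not Solana's runtime: it assumes each transaction declares the accounts it touches, treats every access as a write, and uses the hypothetical function name `schedule_batches`. Transactions in the same batch touch disjoint accounts and could run in parallel; conflicting ones are pushed to later batches in their original order.

```python
def schedule_batches(txs: list) -> list:
    """Group (tx_id, accounts) pairs into batches of mutually non-conflicting transactions."""
    batches = []  # each entry: (tx ids in the batch, accounts touched by the batch)
    for tx_id, accounts in txs:
        # A transaction must run after the last batch that touches one of its accounts.
        target = 0
        for i, (_, locked) in enumerate(batches):
            if not locked.isdisjoint(accounts):
                target = i + 1
        if target == len(batches):
            batches.append(([], set()))
        ids, locked = batches[target]
        ids.append(tx_id)
        locked |= accounts  # in-place update of the batch's account set
    return [ids for ids, _ in batches]


txs = [("t1", {"A", "B"}), ("t2", {"C"}), ("t3", {"B", "C"}), ("t4", {"D"})]
print(schedule_batches(txs))  # [['t1', 't2', 't4'], ['t3']]
```

When every transaction touches the same account, the scheduler degenerates to one transaction per batch, which corresponds to the serial fallback described above.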

Aptos presents itself as a representative of a new generation of high-performance public chains, and it reimplements various functions of the Ethereum public chain in different ways. Aptos adopts AptosBFT, a BFT-based consensus mechanism that needs only two network round trips to verify and commit a block, without multiple rounds of voting, and can quickly reach final confirmation. Aptos blocks contain only a summary of the transaction records rather than the full transaction information, so each block can cover more transactions. Transactions are grouped into batches and merged into blocks after consensus is reached, and are then executed and stored in batches, which improves efficiency.

Aptos also processes transactions in parallel. Its Block-STM engine executes all transactions in parallel by default, and when a conflict occurs the unsuccessful transaction is re-executed. This requires a scheduler to prevent the same transaction from being executed more than once at the same time, and the re-executed transaction is validated again before it is confirmed. Aptos also pays attention to fast state synchronization.

State synchronization is the process of propagating the resulting state to other nodes after transactions have completed and the state has changed. Inefficient state synchronization leaves most nodes unable to catch up with the latest state, which hurts the user experience, and makes it difficult for new nodes to join the consensus process, which hurts the decentralization of the network. Aptos offers several state synchronization methods, including the use of RocksDB, or of Merkle proofs of state changes generated by validators, which let a node synchronize state while skipping transaction execution. This approach reduces the large amount of computing resources that node synchronization would otherwise require, but it consumes a large amount of network resources. Aptos recommends that consensus nodes run on cloud servers, and personal computers can hardly meet its requirements.

Aptos considers Ethereum's virtual machine to be another of its bottlenecks. Ethereum cannot update its language on a large scale, whereas Aptos carries no such technical burden. Both Aptos and Sui use the Move language, whose innovation lies in treating assets as resources. The creation, use and destruction of resources are subject to restrictions, so the reentrancy attacks common on Ethereum do not occur. This makes smart contracts more secure, lets the virtual machine process multiple transactions in parallel, and makes it possible to charge rent based on storage resources.

To sum up, the new public chains hold that scalability takes precedence over security and decentralization, which differs from Ethereum. They have therefore chosen a different point of focus within the impossible triangle. The consequences of this choice are very visible to users, and the downtime problems on Solana are an unavoidable result.
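The execute-validate-re-execute idea can be sketched in a simplified form. This is not Block-STM itself, which uses multi-version storage and a collaborative scheduler across threads; it is a sequential simulation of optimistic execution, and `run_block` and `transfer` are hypothetical names. Each transaction runs speculatively against a snapshot and reports what it read and wrote; a result is discarded and recomputed if an earlier transaction in the block wrote to something it read.

```python
def transfer(src: str, dst: str, amount: int):
    """Build a hypothetical transfer transaction for the sketch."""
    def tx(view: dict):
        reads = {src, dst}
        if view[src] < amount:
            return reads, {}  # insufficient balance: no state change
        return reads, {src: view[src] - amount, dst: view[dst] + amount}
    return tx


def run_block(state: dict, txs: list) -> tuple:
    """Return (final state, number of re-executions) for an ordered list of transactions."""
    snapshot = dict(state)
    # Phase 1: speculative execution; a real engine would run this on many threads.
    results = [tx(snapshot) for tx in txs]

    committed = dict(state)
    dirty = set()  # keys written by transactions that were already committed
    retries = 0
    for tx, (reads, writes) in zip(txs, results):
        # Phase 2: a speculative result is stale if it read a key changed earlier in the block.
        if not dirty.isdisjoint(reads):
            reads, writes = tx(committed)
            retries += 1
        committed.update(writes)
        dirty.update(writes)
    return committed, retries
```

In a block of three transfers where only the third depends on the first, only the third is re-executed, and the final state matches what serial execution in block order would produce.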

Thinking and Summary

The consensus mechanism and distributed node network ensure the reliable operation of the public chain from two aspects:

First, they ensure the fault tolerance of the system: the consensus mechanism tolerates a certain level of faults, that is, while the proportion of faulty nodes stays below a certain percentage, the system can still verify information. Distributed nodes that are free to join can replenish the network with new healthy nodes.

Second, they increase the cost of attacking the system: the consensus mechanism is the way nodes agree on the state of existing blocks, and whoever controls the consensus mechanism has the power to modify the consensus (alter the ledger records) and to censor transactions (decide how transactions are ordered and whether they are packaged onto the chain). The consensus mechanism and distributed nodes raise the difficulty and cost of attacks at the level of the rules.

On this basis, the impossible triangle problem of the blockchain can be understood as follows:

The non-homogeneous public chains, because they carry no technical burden, can start completely from scratch with new architectures and technical means. Unlike Ethereum, which pursues performance on the premise of sufficient decentralization and security (the Ethereum homogeneous public chains sit in between, but also lean toward performance), they all choose a performance-first path. The advantage is that users can perceive their progress very directly (TPS), but security and decentralization remain a hidden danger.