A16z: Opportunities and Challenges Facing Generative AI

Original Source: Alpha Rabbit Research Notes

Original translation: Alpha Rabbit

A16Z recently published another interesting article on what they see as the value-capture questions in generative AI. For example: what are the current problems in commercializing generative AI, and where is the most value captured? The translator has annotated parts of the content.

The article has two main parts. The first part covers A16Z's observations on the current generative AI landscape and the problems it faces. The second part, beyond those problems, explains which layer can capture the most value: without doubt, whoever owns the infrastructure owns the world. (Please note: most of the companies mentioned are in A16Z's portfolio, so please read with an objective and rational attitude. This article does not constitute investment advice or a recommendation of any project.)

What is generative AI?

Part I: Observations and Predictions

Generative AI applications are scaling rapidly, but retention is proving difficult, and not every company can reach commercial scale.

Generative AI technology is still in its early stages:

For example, hundreds of nascent AI startups are rushing to market to start developing foundational models and building AI-native applications, infrastructure, and tools.

Of course, there will be a lot of hot tech trends, and there will be cases of over-hyping. But the boom in generative artificial intelligence has seen many companies generate real revenue.

For example, models like Stable Diffusion and ChatGPT have set historical records for user growth, some apps have reached $100 million in annual revenue less than a year after launch, and AI models outperform humans on some tasks by orders of magnitude.

We find that a technological paradigm shift is taking place. But the key question that needs to be studied is: where in the entire market will value be generated?

Over the past year, we have spoken with dozens of founders of generative AI startups and AI experts at large companies. We observe that, so far, infrastructure providers are likely the biggest winners in this market, because they capture most of the money flowing through the generative AI stack.

Although application companies are growing revenue very quickly, they often struggle with user retention, product differentiation, and gross margins. And most model providers have not yet achieved large-scale commercialization.

To be more precise, the companies that create the most value, namely those that train generative AI models and apply the technology in new applications, have not yet captured most of the value in the industry. It is therefore not easy to predict where the industry is heading.

However, it is important to understand which parts of the stack can be truly differentiated and defensible, because this will have a significant impact on the overall market structure (i.e., horizontal versus vertical company development) and on long-term value drivers (such as profit margins and user retention).

But so far, it has been difficult to find structural defensibility on the stack (of generative AI) other than the traditional business moat of incumbent companies.

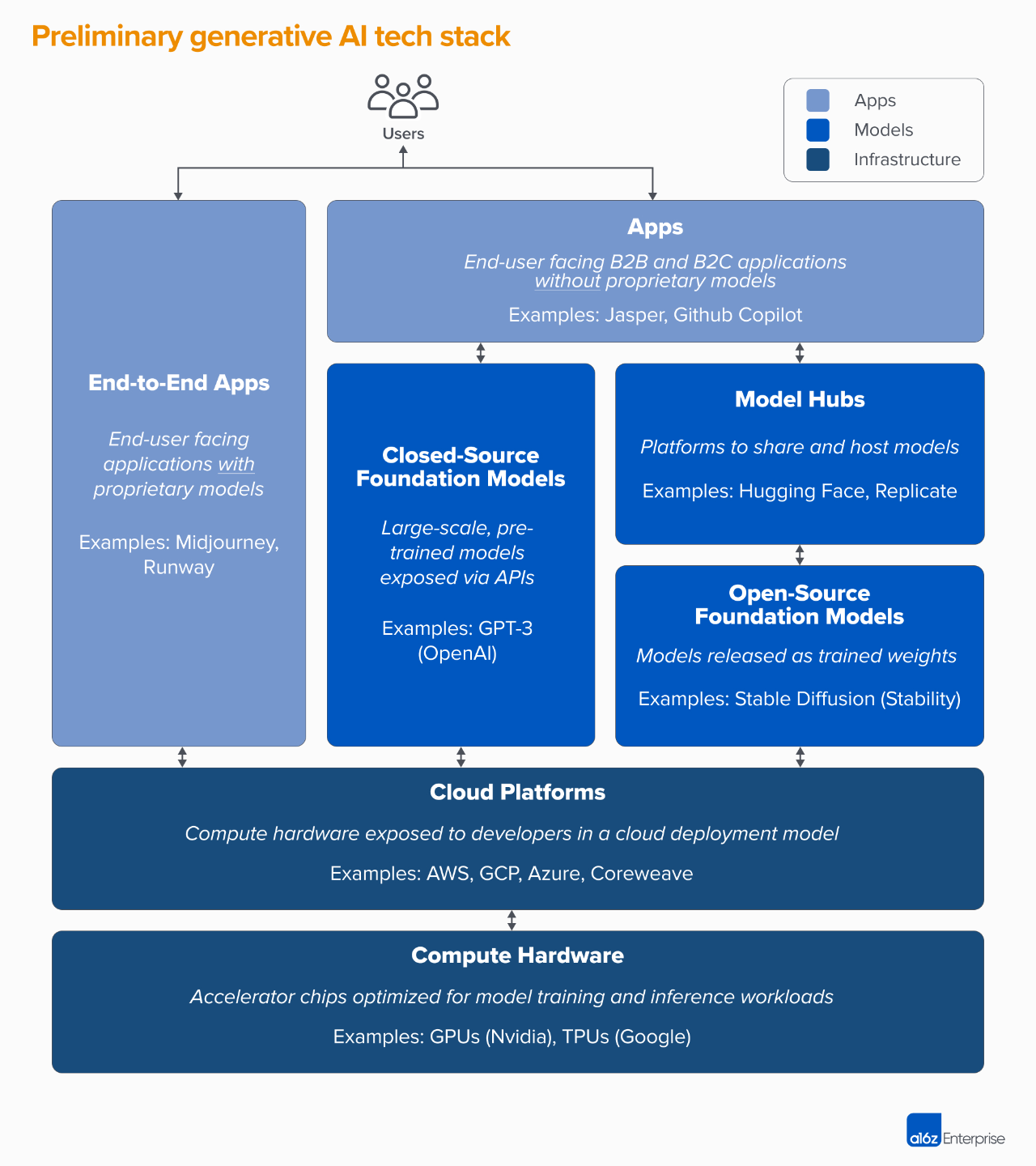

Technology stack: infrastructure, AI models and applications

To understand how the generative artificial intelligence track and market are formed, we first need to define the current stack of the entire industry:

The entire generative AI stack can be divided into three layers:

1. Applications that integrate generative AI models into user-facing products, either by running their own model pipelines ("end-to-end applications") or by relying on third-party APIs

(Alpha Rabbit's note: the model pipeline mentioned here means that the output of one model is used as the input of the next model)

2. Models to power AI products, available as proprietary APIs or open source checkpoints (which in turn require a hosted solution)

(Note: in other words, either the model's construction method and the pre-trained model (also called a checkpoint) are both open, or both are kept secret and only an API is exposed. In the former case, you have to run training, fine-tuning, and inference yourself, so you need to know what environment and hardware the model can run on; that is why someone needs to provide a hosting platform to handle the model's runtime environment.)

3. Infrastructure providers (i.e., cloud platforms and hardware manufacturers) that run training and inference workloads for generative AI models
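The "model pipeline" described in the note under layer 1 can be illustrated with a minimal Python sketch. The stage functions `draft_model` and `refine_model` are hypothetical stand-ins invented for this illustration, not real model APIs; the only point is that each stage consumes the previous stage's output.

```python
from functools import reduce
from typing import Callable, Sequence

# A stage is any callable that maps one model's output to the next model's input.
Stage = Callable[[str], str]


def run_pipeline(stages: Sequence[Stage], prompt: str) -> str:
    """Pass the prompt through each stage in order; every stage
    receives the output of the stage before it."""
    return reduce(lambda data, stage: stage(data), stages, prompt)


# Hypothetical stand-ins for real models (assumptions for illustration only).
def draft_model(text: str) -> str:
    return f"draft({text})"


def refine_model(text: str) -> str:
    return f"refined({text})"


if __name__ == "__main__":
    print(run_pipeline([draft_model, refine_model], "a cat on a skateboard"))
```

An "end-to-end application" in the article's sense would own every stage of such a chain, whereas an API-based application would replace one or more stages with calls to a third-party provider.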

It should be noted that this is not an ecosystem map of the entire market, but a framework for analyzing it. This article lists some well-known vendors in each category as examples, but it does not attempt to be comprehensive or to cover every powerful generative AI application that has been released. It also does not discuss MLOps or LLMOps tooling in depth, because this area is not yet highly standardized; we will continue the discussion when we have the opportunity.

The first wave of generative AI applications is beginning to scale, but struggles with retention and differentiation

In previous technology cycles, the traditional view would have been that in order to build a large, independent company, you must have end customers, where end customers include individual consumers and B2B buyers.

Because of this traditional point of view, it is easy for everyone to think that the biggest opportunity in generative artificial intelligence lies in companies that can make end-user-oriented applications.

But so far, that hasn't necessarily been the case.

The growth of generative AI applications has been phenomenal, driven mainly by sheer novelty and a wide range of use cases such as image generation, copywriting, and coding. Annual revenue in each of these three product categories has already exceeded 100 million US dollars.

However, growth alone is not enough to build a lasting software company. The key is that this growth must be profitable: users and customers must generate profit once they sign up (high gross margin), and that profit must be sustainable over the long run (high retention).

In the absence of strong technical differentiation between companies, B2B and B2C applications can only succeed through network effects, data advantages, or building increasingly complex workflows.

However, in the field of generative AI, these assumptions may not hold. Among the startups we researched that build generative AI apps, gross margins vary widely. A few companies reach 90%, but for most companies gross margins are as low as 50-60%, mainly because of the cost of the model.

Although we can see strong top-of-funnel growth today, it is unclear whether current customer acquisition strategies can be sustained, because we have already seen paid-acquisition efficiency and retention start to drop for many applications.

Many applications currently on the market lack differentiation, because they rely on similar underlying AI models and have not yet found obvious network effects, or data and workflows, that are hard for competitors to replicate.

Looking to the future, what problems do generative AI applications face?

Vertical integration ("model + application")

Consuming AI models as a service lets application developers iterate quickly with a small team and swap model providers as the technology advances. But other developers disagree. They believe that the product is the model, and that training from scratch, meaning continuous re-training on proprietary product data, is the only way to create defensibility. But this requires much more capital and comes at the cost of a less nimble product team.

Building features versus apps

Generative AI products come in many forms: desktop apps, mobile apps, Figma/Photoshop plugins, Chrome extensions, even Discord bots. It is easy to integrate AI products into the places where users already work and have established habits, because the user interface is simple. But which of these products will become independent companies, and which will be absorbed by AI giants such as Microsoft or Google?

Will generative AI follow the hype cycle published by Gartner?

Part II: Large-scale commercial implementation of generative artificial intelligence

In the first part, we talked about the current stack of generative AI and some of the problems it faces. The second part continues:

About the large-scale commercial implementation of generative artificial intelligence

And where can a winner-take-all player capture the most value?

Beyond the questions above, what other problems does the industry currently face?

Although the invention of the models has made generative AI widely known, the model providers have not yet reached large-scale commercialization

We would not be witnessing such successful generative AI technology today without the research and engineering efforts of companies like Google, OpenAI, and Stability. Thanks to new model architectures and efforts to scale training pipelines, we all benefit from the powerful capabilities of today's large language models (LLMs) and image models.

However, the revenue of these companies is not very high compared with their usage volume and market popularity. In image generation, the Stable Diffusion community has exploded, but Stability gives away its main checkpoints for free, and this openness is a core tenet of Stability's business.

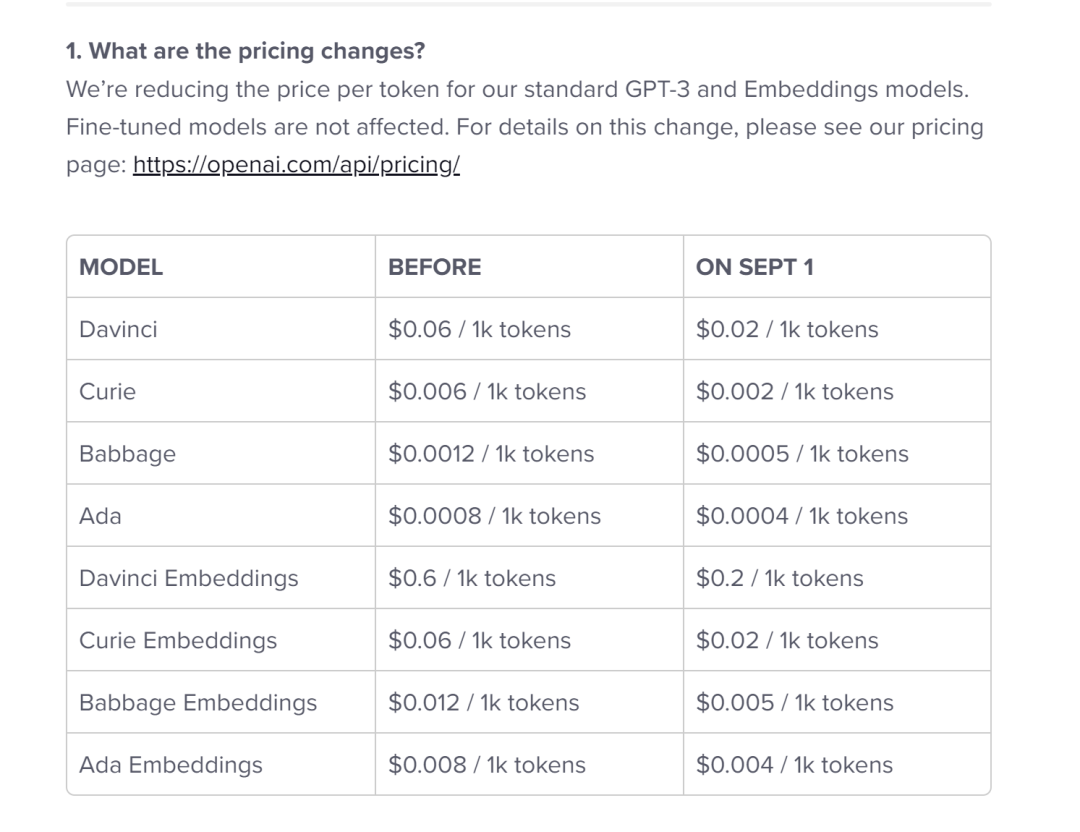

In natural language models, OpenAI is known for GPT-3/3.5 and ChatGPT. But so far, relatively few killer apps have been built on top of OpenAI, and the price has already been cut once. (See below)

(Consider: why was the price reduced?)

Of course, these may only be temporary phenomena. Stability is a new company that has not yet focused on commercialization. OpenAI has the potential to become a massive business, and as more killer apps are built, it could earn a significant share of all revenue in the natural language category. Especially if OpenAI's integration into Microsoft's product portfolio goes well, the high usage of these models could lead to massive revenue.

But there are also hidden dangers:

For example, if a model is open-sourced, it can be hosted by anyone, including companies that do not shoulder the cost of training large-scale models (tens or hundreds of millions of dollars).

And it is not clear that closed-source models can maintain their dominance indefinitely. For example, we are starting to see LLMs built by companies like Anthropic, Cohere, and Character.ai approach OpenAI's performance levels, trained on similar datasets (i.e., the internet) and using similar model architectures.

The Stable Diffusion example shows that if an open source model achieves a certain level of performance and community support, other alternatives on the same track may find it very difficult to compete.

Perhaps the clearest takeaway for model providers so far is that commercialization is likely tied to hosting. (Note: as explained earlier, a model provider either opens up both the model's construction method and the pre-trained model (the checkpoint), or keeps both secret and exposes only an API. In the former case, you have to run training, fine-tuning, and inference yourself, so you need to know what environment and hardware the model can run on; that is why someone needs to provide a hosting platform to handle the model's runtime environment.)

Demand for proprietary APIs (such as OpenAI's) is growing rapidly, and open-source model hosting services (such as Hugging Face and Replicate) are emerging as hubs for easily sharing and integrating models, even creating indirect network effects between model producers and consumers. There is also a strong hypothesis that model providers can become profitable through fine-tuning and hosting agreements with enterprise customers.

However, the model provider still faces problems:

Commoditization. The prevailing view is that the performance of AI models will converge over time. From our conversations with app developers, this convergence has not happened so far: there are clear leaders in both text and image models. The advantage of these companies lies not in a unique model architecture, but in high capital requirements, proprietary product interaction data, and scarce AI talent.

But can these be long-term sustainable advantages for a company?

Risk of customers leaving the model vendor. Relying on model providers is how many app companies get started and even grow their business, but once they reach scale, app developers have an incentive to build and/or host their own models. Many model vendors have an uneven customer distribution, with a small number of applications accounting for most of the revenue. What happens if these customers stop using the vendor's models and turn to in-house AI model development?

Will capital matter? The vision of generative AI is so grand that many model vendors have begun to incorporate the public good into their mission. This has not hindered their fundraising at all. But what needs to be discussed is whether the model vendors really want to capture value, and whether they should.

Get the infrastructure and get the world.

Nearly everything in generative AI passes through a cloud-hosted GPU (or TPU) at some point. Whether it is a model vendor or research lab running training workloads, or a hosting company running inference and fine-tuning, FLOPS are the lifeblood of generative AI.

(Alpha Rabbit's note: FLOPS stands for floating-point operations per second. It describes computing speed and is a measure of hardware performance. When we evaluate a model, we usually look at its accuracy first. If the accuracy is not good enough, there is little point in telling others how fast the model predicts or how little memory it needs when deployed. Once the model reaches a certain accuracy, however, further metrics are needed to evaluate it.

These include:

1) The amount of computation required for forward propagation, which reflects the performance demanded of hardware such as GPUs;

2) The number of parameters, which reflects the memory footprint. Why add these two metrics? Because they determine whether the model can be deployed in practice. For example, deploying deep learning models on mobile phones or in cars places strict limits on model size and compute. Most people know what model parameters are and how to count them, but the computation required for forward propagation may be less familiar. It is expressed in FLOPs (floating-point operations, with a lowercase "s"), which is a count of operations rather than a speed.

Reference material: Zhihu, A Chai Ben Chai: https://zhuanlan.zhihu.com/p/137719986 )
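To make the two metrics in the note above concrete, here is a minimal Python sketch that counts the parameters and forward-pass FLOPs of a single fully connected layer. The function names and the layer sizes are assumptions chosen for illustration, and the counting convention (one multiplication and one addition counted as two operations) is one common choice, not the only one.

```python
def dense_layer_params(n_in: int, n_out: int, bias: bool = True) -> int:
    """Number of trainable parameters: one weight per input/output pair,
    plus one bias per output if bias is enabled."""
    return n_in * n_out + (n_out if bias else 0)


def dense_layer_flops(n_in: int, n_out: int, bias: bool = True) -> int:
    """Floating-point operations for one forward pass on one input vector.
    Each output needs n_in multiplications and n_in - 1 additions,
    plus one more addition if a bias term is added."""
    per_output = n_in + (n_in - 1) + (1 if bias else 0)
    return per_output * n_out


if __name__ == "__main__":
    # Hypothetical layer sizes, chosen only for illustration.
    n_in, n_out = 1024, 4096
    params = dense_layer_params(n_in, n_out)
    flops = dense_layer_flops(n_in, n_out)
    # Assuming 32-bit floats, each parameter occupies 4 bytes.
    print(f"parameters: {params}, memory: {params * 4} bytes")
    print(f"forward-pass FLOPs per input: {flops}")
```

The parameter count drives the memory footprint, while the FLOPs count, divided by the hardware's sustained FLOPS, gives a rough lower bound on inference time.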

So a lot of the money in generative AI ends up going to infrastructure companies. As a rough estimate, application companies spend on average around 20-40% of their revenue on inference and per-customer fine-tuning. That money is typically paid either directly to cloud providers for compute instances or to third-party model providers, who in turn spend about half of their revenue on cloud infrastructure. So we can extrapolate: 10-20% of total generative AI revenue today goes to cloud providers.
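The extrapolation above can be checked with a few lines of arithmetic. This Python sketch uses the article's figures (20-40% of revenue spent on inference and fine-tuning, and model providers passing on about half of their revenue to the cloud). The `via_model_provider` parameter is an assumption introduced here to show both payment routes; it is not a number from the article.

```python
def cloud_share(inference_share: float,
                via_model_provider: float,
                provider_cloud_share: float = 0.5) -> float:
    """Fraction of an app company's revenue that reaches cloud providers.

    inference_share: share of revenue spent on inference and fine-tuning.
    via_model_provider: portion of that spend routed through third-party
        model providers (hypothetical knob; the rest is paid to clouds directly).
    provider_cloud_share: share of model-provider revenue spent on cloud
        infrastructure (about half, per the article).
    """
    direct = inference_share * (1.0 - via_model_provider)
    indirect = inference_share * via_model_provider * provider_cloud_share
    return direct + indirect


if __name__ == "__main__":
    for share in (0.20, 0.40):
        routed = cloud_share(share, via_model_provider=1.0)
        print(f"inference spend {share:.0%} -> cloud share {routed:.0%}")
```

Under these assumptions, the 10-20% range corresponds to spend that passes through model providers first; any portion paid directly to cloud providers would push the cloud share higher, so the estimate reads as a conservative one.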

In addition, startups training their own models have raised billions of dollars in venture capital, and most of it (up to 80-90% in early rounds) is typically spent with cloud providers. Many tech companies spend hundreds of millions of dollars a year on model training, either with external cloud providers or directly with hardware manufacturers.

For a nascent market like AIGC, that is a lot of money, and most of it is spent with the three major clouds: Amazon Web Services (AWS), Google Cloud (GCP), and Microsoft Azure. These cloud providers collectively spend more than $100 billion per year in capex to ensure they have the most comprehensive, reliable, and cost-competitive platforms.

Especially in the field of generative AI, these cloud vendors also benefit from preferential access to scarce hardware (such as Nvidia A100 and H100 GPUs).

As a result, competition has emerged: challengers such as Oracle, and startups such as Coreweave and Lambda Labs, have grown rapidly with solutions aimed at large-model developers, competing on cost, availability, and personalized support. These companies also expose finer-grained resource abstractions (i.e., containers), whereas the large clouds offer only virtual machine instances because of the limits of GPU virtualization.

[Alpha Rabbit's note: when we shop, send messages, or use online banking on the Internet, we are interacting with cloud-based servers. That is, when we use a client (mobile phone, computer, iPad) to perform an operation, the client sends a request to a server, and the server processes each request and returns a response.

Handling the requests and responses of thousands of simultaneous users requires strong computing power (think of shopping on Double Eleven, when countless users place orders at the same time and the shopping cart suddenly freezes). This is when computing power becomes very important. Virtual machines are one form of computing capacity. When we use a cloud service provider's computing solutions, we can choose virtual machines according to the current capabilities and needs of the enterprise.

What is a virtual machine?

It is an emulator of a computer system that provides the functions of a real computer in a completely isolated environment. A system virtual machine provides a complete platform that can run a full operating system, such as Windows or macOS. A process (program) virtual machine is designed to run a single computer program independently inside the emulated environment. In other words, buying a virtual machine from a cloud service provider is like buying a piece of land from it: you can install various software and run various tasks on the virtual machine, just as you would build a house on land you had bought.

What is a container? In everyday life, we think of containers as bowls, utensils, and other vessels that hold things. What is the container technology so often mentioned in IT? The term is a translation of Linux Container. In English, the word "container" can mean both a shipping container and a vessel for holding things (the technical metaphor mainly draws on the shipping container). But since the "vessel" sense reads more naturally in Chinese, that word is used as the common term. To picture Linux Container technology vividly, imagine the shipping containers at a seaside freight terminal.

A shipping container at a freight terminal is used to carry goods. It is a steel box built to a standard specification. Shipping containers are all rectangular, uniform in format, and can be stacked on top of each other.

In this way, goods can be loaded into containers on giant freight ships, and manufacturers who need to transport goods can deliver them more quickly and conveniently. The shipping container gave manufacturers a more efficient transport service, and the computing world borrowed the image of the container to describe a similarly convenient way of packaging and moving software.]

In our opinion, the biggest winner in generative AI so far is Nvidia, whose GPUs run the vast majority of AI workloads. Nvidia reported data center GPU revenue of $3.8 billion in the third quarter of fiscal 2023, a meaningful portion of which came from generative AI use cases.

(GPU: a graphics processing unit, also known as a display core, visual processor, or display chip, is a microprocessor specially designed to perform image- and graphics-related computation in personal computers, workstations, game consoles, and some mobile devices such as tablets and smartphones.)

Nvidia has built a strong moat around this business through decades of investment in the GPU ecosystem and long-standing, deep adoption in academia. A recent analysis found that Nvidia's GPUs were cited in research papers 90 times more often than the top AI chip startups combined.

Of course, other hardware options do exist, including Google TPUs; AMD Instinct GPUs; AWS Inferentia and Trainium chips; and startups like Cerebras, Sambanova, and Graphcore.

Intel has also entered the market with its own high-end Habana chips and Ponte Vecchio GPUs. But so far, few of these new chips have captured significant market share. The two notable exceptions are Google, whose TPUs have been gaining traction in the Stable Diffusion community and in some large GCP deals, and TSMC, which is thought to manufacture all the chips listed here, including Nvidia GPUs (Intel uses both its own fabs and TSMC to make chips).

What we found: Infrastructure is a profitable, persistent, seemingly defensible layer of the stack

However, questions that infrastructure companies need to answer include:

What about stateless workloads?

What this means is that an Nvidia GPU is the same no matter where you rent it. Most AI workloads are stateless, i.e., model inference requires no attached database or storage other than the model weights themselves. This means that AI workloads may be easier to move across clouds than traditional application workloads. In this case, how do cloud providers create stickiness that prevents customers from running to cheaper options?

What if chips are not scarce anymore?

Because GPUs are in scarce supply, cloud providers and Nvidia have been able to keep prices high. Vendors have told us that the list price of the A100 has continued to rise since its launch, which is very unusual for computing hardware. So what happens to cloud providers when this supply constraint is eventually removed through increased production and/or the adoption of new hardware platforms?

Can the challenger clouds break through?

We think vertical clouds will take market share from the Big Three with more specialized products. So far in AI, the new cloud players have gained momentum through modest technical differentiation and the backing of Nvidia, for whom the existing cloud providers are both its largest customers and its emerging competitors. So the long-term question for these emerging cloud companies is: can they overcome the scale advantages of the three giants?

So, where will the most value accrue? Where should one place a bet to capture the greatest value?

There is no clear answer yet, but based on the early data on generative AI currently available, combined with the experience of early AI and machine learning startups, the following judgments are made:

In today's generative AI, there is almost no systemic moat of any kind. Current applications show little product differentiation, and the signs of this are obvious, because these apps use similar AI models. The models themselves face unclear long-term differentiation, because they are trained on similar datasets with similar architectures. Cloud providers are also similar, with essentially the same technology, because they run the same GPUs. Even the hardware companies make their chips in the same factories.

Certainly, the standard moats still exist: scale moats (between comparable startups, I have raised more money than you and can raise more); supply-chain moats (I have GPUs, you don't); ecosystem moats (my software has more users than yours, I started earlier, and I have barriers of time and user scale); algorithmic moats (my algorithm is stronger than yours); sales moats (I am better at selling than you and I lead in the channel); and data moats (I have collected more data than you).

However, none of these moats offers a sustainable long-term advantage. Also, it is too early to tell whether strong, direct network effects will take hold in any layer of the stack.

Based on the available data, it is too early to tell whether there will be a long-term, winner-take-all opportunity in generative AI.

It sounds weird, but for us, this is good news.

The potential size of the entire market is difficult to grasp, because it touches all software and much of what people do. For this reason, we expect many players to participate in this market, with healthy competition at every level of the generative AI stack. We also expect both horizontal and vertical companies to succeed.

However, this is determined by the end market and user. For example, if the main differentiator of the end product lies in the AI technology itself, then verticalization (that is, the tight integration of user-facing applications with native models) is likely to win out.

And if AI is part of a larger, long-tail feature set, then perhaps horizontalization is the real trend. Of course, as time goes by, we should also see the establishment of more traditional moats, and even some brand new moats.

Regardless, one thing is for sure: generative AI is changing industries. Everyone is still learning, a lot of value will be unlocked, and the technology ecosystem will change as a result. Everyone is on the way.