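IOSG Ventures: Dissecting the Data Availability Layer, the Overlooked Lego Brick of the Modular Future

Original title: "IOSG Weekly Brief | Dissecting the Data Availability Layer: The Overlooked Lego Brick of the Modular Future #136"

Original author: Jiawei, IOSG Ventures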

tl;dr

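For light-client data availability, there is almost no disagreement that erasure codes are the way to solve the problem. The difference lies in how to guarantee that the erasure code was encoded correctly: Polygon Avail and Danksharding use KZG commitments, while Celestia uses fraud proofs.

For Rollup data availability, if we think of a DAC as a consortium chain, then what Polygon Avail and Celestia do is make the data availability layer more decentralized. In effect they provide a "DA-specific" public chain, which raises the level of trust.

Over the next 3 to 5 years, blockchain architecture will inevitably evolve from monolithic to modular, with loose coupling between layers. Providers of many kinds of modular components, such as Rollup-as-a-Service (RaaS) and Data Availability-as-a-Service (DAaaS), may emerge, making blockchain architecture as composable as Lego. Modular blockchains are one of the key narratives underpinning the next cycle.

Within the modular stack, the execution layer has already been carved up among four players, with few latecomers. The consensus layer is still being contested, with Aptos, Sui and others beginning to stand out; although the public-chain race is not yet settled, its narrative is old wine in a new bottle, and reasonable investment opportunities are hard to find. The value of the data availability layer, by contrast, is still waiting to be discovered.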

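Modular Blockchain

Before talking about data availability, let us take a moment to briefly review modular blockchains.

Image source: IOSG Ventures, adapted from Peter Watts

There is no strict definition of how a modular blockchain should be layered. Some layerings start from Ethereum, others take a more general view; it mostly depends on the context of the discussion.

Execution layer: two things happen here. For a single transaction, the transaction is executed and the state changes; for a batch of transactions, the state root of the batch is computed. Part of the work of Ethereum's execution layer has now been handed off to Rollups, namely the familiar StarkNet, zkSync, Arbitrum and Optimism.

Settlement layer: this can be understood as the process in which the Rollup contract on the main chain verifies the validity of the state root (zkRollup) or a fraud proof (Optimistic Rollup).

Consensus layer: whether it uses PoW, PoS or another consensus algorithm, the consensus layer exists to reach agreement on something in a distributed system, namely on the validity of state transitions. In a modular context the settlement layer and the consensus layer are close in meaning, so some researchers merge the two.

Historical state layer: proposed by Polynya (for Ethereum only). Once Proto-Danksharding is introduced, Ethereum will maintain immediate data availability only within a certain time window, after which the data is pruned and the job of keeping it is left to others. Portal Network, or other third parties that store this data, can be placed in this layer.

Data availability layer: what problems does data availability face, and what are the corresponding solutions? That is the question this article focuses on, so we will not summarize it here.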

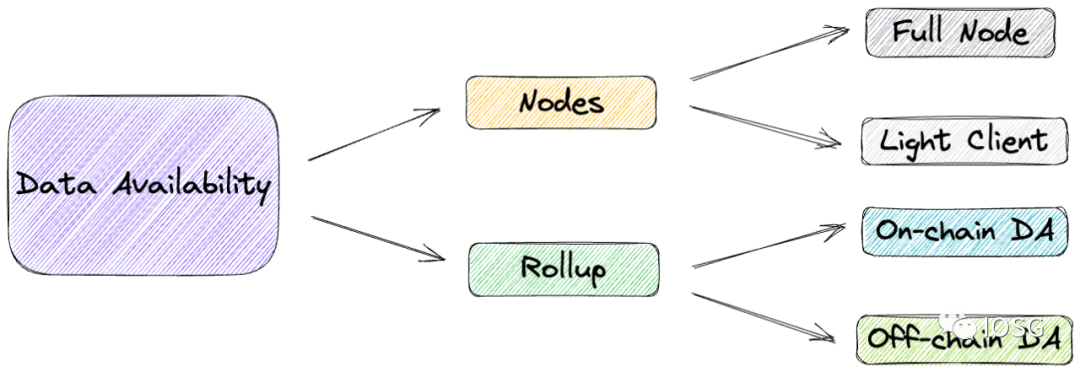

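Image source: IOSG Ventures

Back in 2018 and 2019, data availability was discussed mostly in the context of light-client nodes; later, from the Rollup perspective, it took on a second meaning. This article explains data availability separately in these two contexts: "nodes" and "Rollups".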

DA in Nodes

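Image source: https://medium.com/metamask/metamask-labs-presents-mustekala-the-light-client-that-seeds-data-full-nodes-vs-light-clients-3bc785307ef5

Let us first look at the concepts of full nodes and light clients.

Because a full node downloads and verifies every transaction in every block itself, it needs no honesty assumption to be sure the state was executed correctly, and so has strong security guarantees. But running a full node demands storage, computing power and bandwidth; apart from miners, ordinary users and applications have little incentive to run one. Moreover, if a node only needs to verify certain pieces of information on chain, running a full node is clearly unnecessary.

This is the job of light clients. We briefly introduced them in the IOSG article "The Multi-Chain Ecosystem: Our Current Stage and the Future Landscape". A light client is defined in contrast to a full node: it usually does not interact with the chain directly, but relies on a nearby full node as an intermediary and requests the information it needs from that node, for example downloading block headers or verifying an account balance.

As a node, a light client can sync the whole chain quickly because it only downloads and verifies block headers. In the cross-chain bridge model, a light client also takes the form of a smart contract: the light client on the destination chain only needs to verify that the tokens on the source chain have been locked, without verifying every transaction on the source chain.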

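Where is the problem?

This hides a problem: since a light client only downloads block headers from full nodes rather than downloading and verifying each transaction itself, a malicious full node (block producer) can construct a block containing invalid transactions and send it to light clients to deceive them.

Fraud proofs come to mind as a fix: it takes only one honest full node to monitor block validity, construct a fraud proof when it finds an invalid block, and send it to light clients as a warning. Alternatively, after receiving a block, a light client can actively ask the network whether a fraud proof exists; if none arrives within some period, it can treat the block as valid by default. This way a light client gets nearly the same security as a full node (though it still depends on an honesty assumption).

However, the argument above silently assumes that block producers always publish all of the block data, which is also the basic precondition for generating a fraud proof. A malicious block producer may hide part of the data when publishing a block. Full nodes will then download the block and reject it as invalid, but light clients by design cannot do this. And because data is missing, full nodes cannot generate a fraud proof to warn the light clients either.

Another case: for network reasons, part of the data may only be uploaded later, and we cannot even tell whether the data is missing at that moment because of objective conditions or because the block producer withheld it deliberately. The reward-and-penalty mechanism of fraud proofs then cannot take effect.

This is the data availability problem for nodes that we want to discuss.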

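Image source: https://github.com/ethereum/research/wiki/A-note-on-data-availability-and-erasure-coding

The figure above shows two cases. In the first, a malicious block producer publishes a block with missing data, an honest full node raises an alarm, and the producer then publishes the remaining data. In the second, an honest block producer publishes a complete block, but a malicious full node raises a false alarm. In both cases everyone else on the network sees complete block data after T3, yet someone misbehaved in each.

So relying on fraud proofs to guarantee data availability for light clients has a loophole.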

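Solutions

In September 2018, Mustafa Al-Bassam (now CEO of Celestia) and Vitalik proposed, in a co-authored paper, using multi-dimensional erasure codes to check data availability: a light client only needs to randomly download and verify a portion of the data to be assured, with high probability, that all data chunks are available, and the full data can be reconstructed when necessary.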
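The sampling argument can be made concrete with a little arithmetic. The sketch below is illustrative only and rests on simplifying assumptions that are not from the paper: a one-dimensional code that doubles the data (so an attacker has to withhold at least half of the chunks to block reconstruction), and samples drawn independently and uniformly. The function names are hypothetical.

```python
import math

def miss_probability(samples: int, withheld_fraction: float = 0.5) -> float:
    """Chance that every one of `samples` random queries hits an available chunk,
    even though `withheld_fraction` of the chunks is being withheld."""
    return (1.0 - withheld_fraction) ** samples

def samples_needed(target_confidence: float, withheld_fraction: float = 0.5) -> int:
    """Smallest number of samples so that withholding is detected
    with probability at least `target_confidence`."""
    return math.ceil(math.log(1.0 - target_confidence) / math.log(1.0 - withheld_fraction))

if __name__ == "__main__":
    for k in (5, 10, 20):
        print(k, "samples -> miss probability", miss_probability(k))
    print("samples for 99.9% confidence:", samples_needed(0.999))
```

Under these assumptions the miss probability halves with every extra sample, which is why a light client can reach high confidence while downloading only a small part of the block.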

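There is almost no disagreement about using erasure codes to solve the light-client data availability problem: Polygon Avail, Celestia (and Ethereum's Danksharding) all use Reed-Solomon erasure codes.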
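To show what a Reed-Solomon style erasure code does, here is a toy sketch over a small prime field. It is not the encoding used by any of the projects named above; the field size `P`, the function names and the choice of evaluation points are all assumptions made for illustration. The data symbols are treated as evaluations of a polynomial, extra evaluations are appended as parity, and any `k` surviving symbols are enough to rebuild the original `k`.

```python
P = 257  # small prime field (assumption); each symbol is an integer in [0, P)

def lagrange_eval(points, x):
    """Evaluate at x the unique polynomial of degree < len(points)
    that passes through `points` = [(xi, yi), ...], working modulo P."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        # pow(den, -1, P) is the modular inverse (Python 3.8+)
        total = (total + yi * num * pow(den, -1, P)) % P
    return total

def encode(data):
    """Extend k symbols to 2k: positions 0..k-1 hold the data,
    positions k..2k-1 hold parity evaluations of the same polynomial."""
    k = len(data)
    points = list(enumerate(data))
    return list(data) + [lagrange_eval(points, x) for x in range(k, 2 * k)]

def recover(shares, k):
    """Rebuild the k data symbols from any k surviving (position, value) shares."""
    if len(shares) < k:
        raise ValueError("not enough shares to reconstruct")
    points = shares[:k]
    return [lagrange_eval(points, x) for x in range(k)]

if __name__ == "__main__":
    data = [72, 105, 33, 7]
    coded = encode(data)
    survivors = [(i, coded[i]) for i in (1, 4, 6, 7)]  # half of the symbols lost
    print(recover(survivors, len(data)) == data)
```

The property that matters for data availability is visible here: losing any half of the extended symbols still leaves the data recoverable, so an attacker has to withhold a large share of the block, which random sampling is likely to notice.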

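The difference lies in how to guarantee that the erasure code was encoded correctly: Polygon Avail and Danksharding use KZG commitments, while Celestia uses fraud proofs. Each has strengths and weaknesses: KZG commitments are not quantum-resistant, while fraud proofs rely on certain honesty and synchrony assumptions.

Besides KZG commitments, schemes based on STARKs and FRI can also be used to prove that the erasure code is correct.

(Note: erasure codes and KZG commitments are covered in the IOSG article "The Merge Is Coming: A Detailed Look at Ethereum's Latest Technical Roadmap"; for reasons of space they are not explained further here.)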

DA in Rollup

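Data availability in Rollups means the following: in a zkRollup, anyone must be able to reconstruct the Layer2 state on their own, to ensure censorship resistance; in an Optimistic Rollup, all Layer2 data must be published, which is the precondition for constructing fraud proofs. So where is the problem?

Image source: https://forum.celestia.org/t/ethereum-rollup-call-data-pricing-analysis/141

Look at the Layer2 fee structure. Apart from fixed costs, the variables that scale with the number of transactions per batch are mainly the Layer2 gas cost and the spending on on-chain data availability. The former is negligible; the latter requires paying a constant 16 gas per byte, and accounts for as much as 80%-95% of a Rollup's total cost.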
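A back-of-the-envelope calculation shows how the per-byte charge adds up. The sketch below uses the flat 16 gas per byte quoted above; on Ethereum, zero bytes of calldata are priced lower (4 gas under EIP-2028), so this is an upper bound. The batch size, bytes per transaction and gas price are hypothetical inputs, not figures from the article.

```python
GAS_PER_CALLDATA_BYTE = 16  # flat per-byte figure quoted in the text

def calldata_gas(batch_bytes: int) -> int:
    """Gas spent purely on publishing `batch_bytes` of data on chain."""
    return batch_bytes * GAS_PER_CALLDATA_BYTE

def gas_to_eth(gas: int, gas_price_gwei: float) -> float:
    """Convert a gas amount to ETH at the given gas price (1 ETH = 1e9 gwei)."""
    return gas * gas_price_gwei / 1e9

if __name__ == "__main__":
    txs_in_batch = 1000   # hypothetical
    bytes_per_tx = 12     # hypothetical compressed transaction size
    gas_price_gwei = 30   # hypothetical gas price

    gas = calldata_gas(txs_in_batch * bytes_per_tx)
    print("data availability gas for the batch:", gas)
    print("cost in ETH:", gas_to_eth(gas, gas_price_gwei))
    print("gas per transaction:", gas / txs_in_batch)
```

Because this cost grows linearly with the bytes posted, it is the term that off-chain data availability solutions try to remove.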

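(On-chain) data availability is expensive. What can be done?

The first option is to reduce the cost of storing data on chain, which is work for the protocol layer. In the IOSG article "The Merge Is Coming: A Detailed Look at Ethereum's Latest Technical Roadmap", we mentioned that Ethereum is considering introducing Proto-Danksharding and Danksharding to give Rollups "big blocks", that is, more data availability space, and using erasure codes and KZG commitments to address the resulting burden on nodes. From a Rollup's point of view, however, passively waiting for Ethereum to adapt to it is not realistic.

The second option is to move the data off chain. The figure below lists the current off-chain data availability solutions: general-purpose solutions include Celestia and Polygon Avail; solutions offered as a user option within Rollups include StarkEx, zkPorter and Arbitrum Nova.

Image source: IOSG Ventures

(Note: Validium originally refers specifically to a scaling solution that combines a zkRollup with off-chain data availability. For convenience, this article uses Validium to refer to off-chain data availability solutions in general and compares them together.)

Let us look at these solutions in detail.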

DA Provided by Rollup

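In the simplest Validium design, a centralized data operator is responsible for ensuring data availability, and users must trust the operator not to misbehave. The benefit is low cost, but in practice there is almost no security guarantee.

StarkEx therefore went a step further in 2020 and proposed a Validium maintained by a Data Availability Committee (DAC). DAC members are well-known individuals or organizations within legal jurisdictions, and the trust assumption is that they will not collude or misbehave.

Arbitrum proposed AnyTrust this year; it likewise uses a data committee to ensure data availability, and Arbitrum Nova is built on AnyTrust.

zkPorter instead proposes that Guardians (zkSync token holders) maintain data availability. They must stake zkSync tokens, and if a data availability failure occurs, the staked funds are slashed.

All three offer an option called Volition: users freely choose on-chain or off-chain data availability as needed, making their own trade-off between security and cost according to the use case.

Image source: https://blog.polygon.technology/from-rollup-to-validium-with-polygon-avail/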

General DA Scenarios

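The solutions above rest on this idea: since an ordinary operator's credibility is not high enough, bring in a more authoritative committee to raise it.

Is a small committee secure enough? The Ethereum community raised the ransom attack problem for Validium as early as two years ago: if an attacker steals the private keys of enough committee members and makes the off-chain data unavailable, the attacker can threaten users that withdrawals from Layer2 are possible only after a sufficient ransom is paid. Given the precedent of the Ronin Bridge and Harmony Horizon Bridge thefts, we cannot ignore this possibility.

Since an off-chain data availability committee is not secure enough, what if a blockchain is introduced as the trusted party that guarantees off-chain data availability?

If we think of the DAC above as a consortium chain, then what Polygon Avail and Celestia do is make the data availability layer more decentralized. In effect they provide a "DA-specific" public chain with its own set of validator nodes, block producers and consensus mechanism, which raises the level of trust.

Beyond the security improvement, if the data availability layer is itself a chain, it need not be limited to providing data availability for one particular Rollup or chain; it can serve as a general-purpose solution.

Image source: https://blog.celestia.org/celestiums/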

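We take Quantum Gravity Bridge, Celestia's application for Ethereum Rollups, as an example. The L2 Contract on the Ethereum main chain verifies validity proofs or fraud proofs as usual; the difference is that data availability is provided by Celestia. The Celestia chain has no smart contracts and performs no computation on the data; it only ensures that the data is available.

The L2 Operator publishes transaction data to the Celestia main chain. Celestia's validators sign the Merkle Root of the DA Attestation and send it to the DA Bridge Contract on the Ethereum main chain, which verifies and stores it.

In effect, the Merkle Root of the DA Attestation stands in as proof of availability for all of the data. The DA Bridge Contract on the Ethereum main chain only needs to verify and store this Merkle Root, so the overhead is greatly reduced.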
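Why a single Merkle Root can stand in for a whole batch of data is easier to see in code. The following is a generic binary Merkle tree sketch using SHA-256; it is not Celestia's actual tree construction or the Quantum Gravity Bridge contract logic. The leaf and node prefixes, the duplication of the last node on odd levels and all function names are assumptions made for illustration, and the leaf list is assumed to be non-empty.

```python
import hashlib

def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()

def _next_level(level):
    """Hash adjacent pairs; an odd level is padded by repeating its last node."""
    if len(level) % 2:
        level = level + [level[-1]]
    return [_h(b"\x01" + level[i] + level[i + 1]) for i in range(0, len(level), 2)]

def merkle_root(leaves):
    """32-byte commitment to an ordered, non-empty list of byte strings."""
    level = [_h(b"\x00" + leaf) for leaf in leaves]
    while len(level) > 1:
        level = _next_level(level)
    return level[0]

def merkle_proof(leaves, index):
    """Sibling hashes needed to recompute the root from leaf `index`."""
    level = [_h(b"\x00" + leaf) for leaf in leaves]
    proof = []
    while len(level) > 1:
        if len(level) % 2:
            level = level + [level[-1]]
        proof.append(level[index ^ 1])  # sibling of the current node
        level = _next_level(level)
        index //= 2
    return proof

def verify(leaf, index, proof, root):
    """Check that `leaf` sits at position `index` under `root`."""
    node = _h(b"\x00" + leaf)
    for sibling in proof:
        if index % 2 == 0:
            node = _h(b"\x01" + node + sibling)
        else:
            node = _h(b"\x01" + sibling + node)
        index //= 2
    return node == root

if __name__ == "__main__":
    batch = [b"tx0", b"tx1", b"tx2"]
    root = merkle_root(batch)
    print(len(root), verify(b"tx1", 1, merkle_proof(batch, 1), root))
```

However large the batch, the value kept on chain is a fixed 32 bytes, and any individual piece of data can later be checked against it with a proof whose size grows only logarithmically with the number of leaves.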

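(Note: other data availability solutions include Adamantium and EigenLayr. In Adamantium, users can choose to host their own off-chain data and sign after every state transition to confirm that their off-chain data is available; otherwise their funds are automatically sent back to the main chain to keep them safe. Alternatively, users can freely choose a data provider. EigenLayr is a more academic proposal, which puts forward the Coded Merkle Tree and the data availability oracle ACeD. We do not discuss these further here.)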

Summary

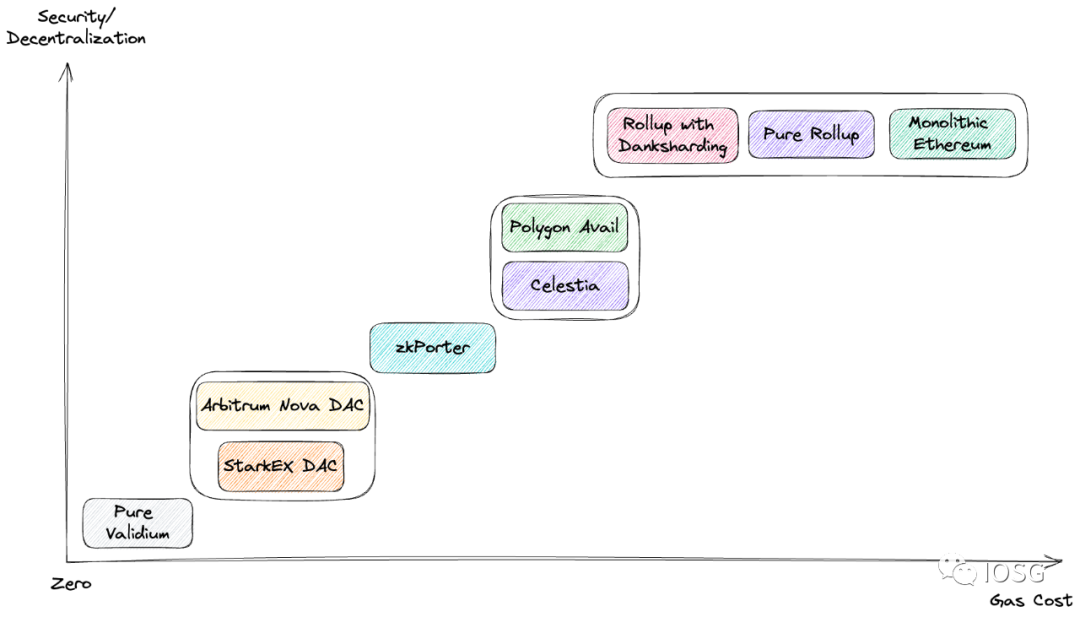

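Image source: IOSG Ventures, adapted from Celestia Blog

Having gone through the solutions one by one, we compare them side by side in terms of security / degree of decentralization and gas cost. Note that this chart reflects only the author's personal understanding; it is a rough, fuzzy grouping rather than a quantitative comparison.

Pure Validium, in the lower left corner, has the lowest security / decentralization and the lowest gas cost.

In the middle are the DAC solutions of StarkEx and Arbitrum Nova, zkPorter's Guardians validator-set solution, and the general-purpose Celestia and Polygon Avail. The author believes that zkPorter, by using Guardians as a validator set, offers slightly higher security / decentralization than a DAC, and that a DA-specific blockchain is slightly higher again than a single validator set. Gas cost rises correspondingly. Of course, this is only a very rough comparison.

The box in the upper right contains the on-chain data availability solutions, which have the highest security / decentralization and the highest gas cost. Within the box, because data availability for all three solutions is provided by the Ethereum main chain, they have the same security / decentralization. A pure Rollup clearly costs less gas than monolithic Ethereum, and once Proto-Danksharding and Danksharding are introduced, the cost of data availability will fall further.

Note: the "data availability" discussed in this article is mostly in the Ethereum context. Keep in mind that Celestia and Polygon Avail are general-purpose solutions and are not limited to Ethereum.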

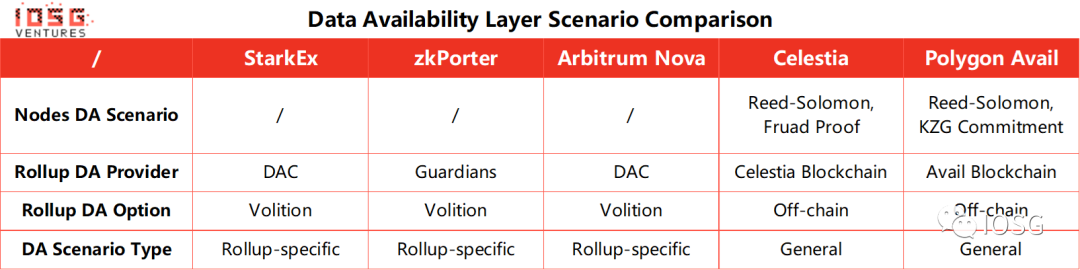

Finally, we summarize the solutions above in a table.

Image source: IOSG Ventures

Closing Thoughts

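After discussing these data availability problems, we find that all of the solutions are essentially making trade-offs under the mutual constraints of the trilemma, and that they differ in the granularity of those trade-offs.

From the user's perspective, it is reasonable for a protocol to offer both on-chain and off-chain data availability options, because sensitivity to security and cost varies across application scenarios and user groups.

The discussion above focused mostly on how the data availability layer supports Ethereum and Rollups. In cross-chain communication, Polkadot's relay chain gives its parachains a native security guarantee for data availability, while Cosmos IBC relies on the light-client model, so it is essential that light clients can verify data availability on both the source chain and the destination chain.

The benefit of modularity is that components are pluggable and flexible and can be adapted to a protocol as needed: for example, lifting the data availability burden off Ethereum while preserving security and trust levels, or raising the security level of the light-client communication model in a multi-chain ecosystem and reducing trust assumptions. Data availability is not limited to Ethereum; it can also play a role in the multi-chain ecosystem and in further application scenarios in the future.

We believe that over the next 3 to 5 years, blockchain architecture will inevitably evolve from monolithic to modular, with loose coupling between layers. Providers of many kinds of modular components, such as Rollup-as-a-Service (RaaS) and Data Availability-as-a-Service (DAaaS), may emerge, making blockchain architecture as composable as Lego. Modular blockchains are one of the key narratives underpinning the next cycle.

Among these layers, the valuation giants of the execution layer (the Rollups) have already carved up the market among four players, with few latecomers. The consensus layer (the various Layer1s) is still being contested; with Aptos, Sui and other public chains beginning to stand out, the public-chain race is not yet settled, but its narrative is old wine in a new bottle, and reasonable investment opportunities are hard to find.

The value of the data availability layer, meanwhile, is still waiting to be discovered.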