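What's Next in Computing?

The computing industry progresses in two mostly independent cycles: financial and product cycles. There has been a lot of debate lately about where we are in the financial cycle. Financial markets get a lot of attention. They tend to fluctuate unpredictably and sometimes wildly. The product cycle, by comparison, gets relatively little attention, even though it is what actually drives the computing industry forward. We can try to understand and predict the product cycle by studying the past and extrapolating into the future.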

(New computing eras have occurred every 10–15 years)

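Tech product cycles are mutually reinforcing interactions between platforms and applications. New platforms enable new applications, which in turn make the new platforms more valuable, creating a positive feedback loop. Smaller, offshoot tech cycles happen all the time, but every once in a while (historically, about every 10 to 15 years) major new cycles begin that completely reshape the computing landscape.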

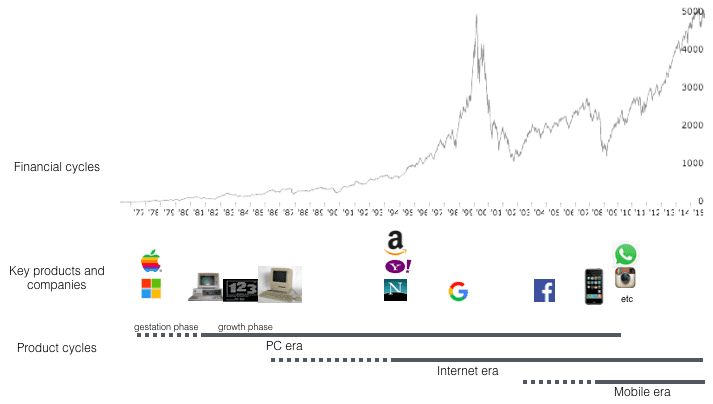

(Financial and product cycles evolve mostly independently)

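The PC enabled entrepreneurs to create word processors, spreadsheets, and many other desktop applications. The internet enabled search engines, e-commerce, e-mail and messaging, social networking, SaaS business applications, and many other services. Smartphones enabled mobile messaging, mobile social networking, and on-demand services like ride sharing. Today, we are in the middle of the mobile era. It is likely that many more mobile innovations are still to come.

Each product era can be divided into two phases: 1) the gestation phase, when the new platform is first introduced but is expensive, incomplete, and/or difficult to use, and 2) the growth phase, when a new product comes along that solves those problems, kicking off a period of exponential growth.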

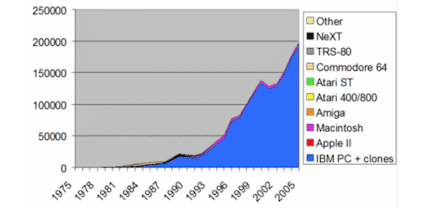

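The Apple II was released in 1977 (and the Altair in 1975), but it was the release of the IBM PC in 1981 that kicked off the PC growth phase.

(PC sales per year (thousands), source: http://jeremyreimer.com/m-item.lsp?i=137)

The internet's gestation phase took place in the 80s and early 90s, when it was mostly a text-based tool used by academia and government. The release of the Mosaic web browser in 1993 started the growth phase, which has continued ever since.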

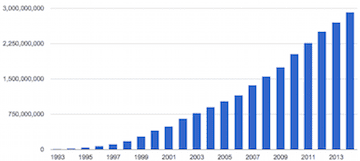

(Worldwide internet users, source: http://churchm.ag/numbers-internet-use/)

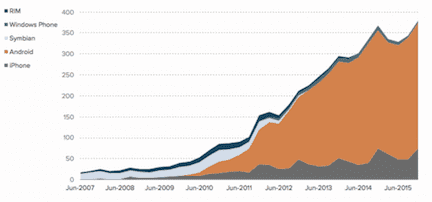

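There were feature phones in the 90s and early smartphones like the Sidekick and Blackberry in the early 2000s, but the smartphone growth phase really started in 2007-8 with the release of the iPhone and then Android. Smartphone adoption has since exploded: about 2B people have smartphones today. By 2020, 80% of the global population will have one.

(Worldwide smartphone sales per year (millions))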

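If the 10-15 year pattern repeats itself, the next computing era should enter its growth phase in the next few years. In that scenario, we should already be in the gestation phase. There are a number of important trends in both hardware and software that give us a glimpse into what the next era of computing might be. Here I talk about those trends and then make some suggestions about what the future might look like.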
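As a rough back-of-the-envelope check on the 10-15 year pattern, the sketch below takes the growth-phase start years cited above (1981 for the PC, 1993 for the internet, 2007 for smartphones) and projects a window for the next one. Treating the pattern as a fixed 10-15 year interval measured from the most recent start is an assumption made purely for illustration, not a forecast, and the function name is hypothetical.

```python
# Growth-phase start years as cited in this essay.
GROWTH_PHASE_STARTS = {"PC": 1981, "Internet": 1993, "Smartphone": 2007}


def next_era_window(starts, min_gap=10, max_gap=15):
    """Return (earliest, latest) year for the next growth phase.

    Assumption for illustration only: the next era begins between
    min_gap and max_gap years after the most recent growth phase began.
    """
    last = max(starts.values())
    return last + min_gap, last + max_gap


# Gaps between consecutive growth-phase starts in the historical record.
years = sorted(GROWTH_PHASE_STARTS.values())
gaps = [later - earlier for earlier, later in zip(years, years[1:])]

print("Gaps between past eras (years):", gaps)
print("Projected window for the next era:", next_era_window(GROWTH_PHASE_STARTS))
```

The two historical gaps fall inside the 10-15 year range, which is all the pattern really rests on; with only three data points, the projected window should be read as a loose illustration.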

Hardware: small, cheap, and ubiquitous

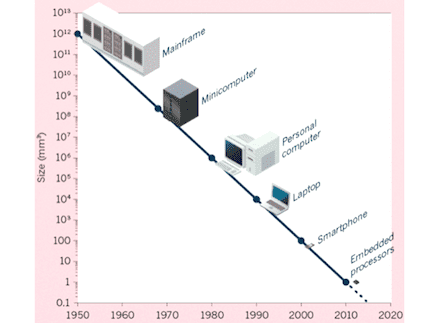

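In the mainframe era, only large organizations could afford a computer. Minicomputers were affordable by smaller organizations, PCs by homes and offices, and smartphones by individuals.

(Computers are getting steadily smaller, source: http://www.nature.com/news/the-chips-are-down-for-moore-s-law-1.19338)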

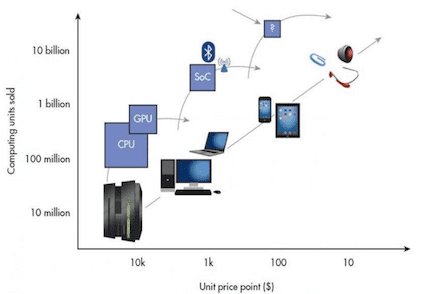

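We are now entering an era in which processors and sensors are getting so small and cheap that there will be many more computers than there are people.

There are two reasons for this. One is the steady progress of the semiconductor industry over the past 50 years (Moore's law). The second is what Chris Anderson calls "the peace dividend of the smartphone war": the runaway success of smartphones led to massive investments in processors and sensors. If you disassemble a modern drone, VR headset, or IoT device, you'll find mostly smartphone components.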
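To get a feel for what 50 years of steady progress compounds to, here is a small sketch that applies a fixed doubling period. The two-year doubling period is the commonly quoted form of Moore's law and is an assumption here, not a figure from this essay; the function name is hypothetical.

```python
def growth_factor(years, doubling_period_years=2.0):
    """Multiplicative improvement after `years` of steady doubling.

    Assumption: capability doubles every `doubling_period_years`
    (two years is the commonly quoted form of Moore's law).
    """
    return 2 ** (years / doubling_period_years)


factor = growth_factor(50)
print(f"Improvement over 50 years: about {factor:,.0f}x")
```

Under that assumption, 50 years is 25 doublings, a factor in the tens of millions, which is why parts that once filled a room can now be treated as cheap, commodity components.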

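In the modern semiconductor era, the focus has shifted from standalone CPUs to bundles of specialized chips known as systems-on-a-chip.

(Computer prices have been steadily dropping, source: https://medium.com/@magicsilicon/computing-transitions-22c07b9c457a#.j4cm9m6qu)

A typical system-on-a-chip bundles an energy-efficient ARM CPU with specialized chips for graphics processing, communications, power management, video processing, and more.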

(Raspberry Pi Zero: 1 GHz Linux computer for $5)

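This new architecture has dropped the price of basic computing systems from about $100 to about $10. The Raspberry Pi Zero is a 1 GHz Linux computer that you can buy for $5. For a similar price, you can buy a wifi-enabled microcontroller that runs a version of Python. Soon these chips will cost less than a dollar. It will be cost-effective to embed a computer in almost anything.

Meanwhile, there are still impressive performance improvements happening in high-end processors. Of particular importance are GPUs (graphics processors), the best of which are made by Nvidia. GPUs are useful not only for traditional graphics processing, but also for machine learning algorithms and virtual/augmented reality devices. Nvidia's roadmap promises significant performance improvements in the coming years.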

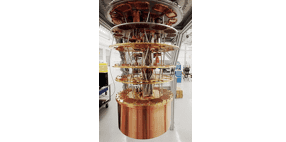

(Google’s quantum computer, source: https://www.technologyreview.com/s/544421/googles-quantum-dream-machine/)

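One wildcard technology is quantum computing, which today exists mostly in laboratories but, if made commercially viable, could lead to orders-of-magnitude performance improvements for certain classes of algorithms in fields like biology and artificial intelligence.

Software: the golden age of AI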

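There are many exciting things happening in software today. Distributed systems is one good example. As the number of devices has grown exponentially, it has become increasingly important to 1) parallelize tasks across multiple machines and 2) communicate and coordinate among devices. Interesting distributed systems technologies include systems like Hadoop and Spark for parallelizing big data problems, and Bitcoin/blockchain for securing data and assets.

But perhaps the most exciting software breakthroughs are happening in artificial intelligence (AI). AI has a long history of hype and disappointment. Alan Turing himself predicted that machines would be able to successfully imitate humans by the year 2000. However, there are good reasons to think that AI might now finally be entering a golden age.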

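"Machine learning is a core, transformative way by which we're rethinking everything we're doing." - Google CEO Sundar Pichai

A lot of the excitement in AI has focused on deep learning, a machine learning technique that was popularized by a now famous 2012 Google project that used a giant cluster of computers to learn to identify cats in YouTube videos. Deep learning is a descendant of neural networks, a technology that dates back to the 1940s. It was brought back to life by a combination of factors, including new algorithms, cheap parallel computation, and the widespread availability of large data sets.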

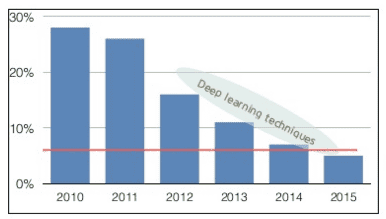

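(ImageNet challenge error rates, source: http://www.slideshare.net/nervanasys/sd-meetup-12215 (red line = human performance))

It's tempting to dismiss deep learning as another Silicon Valley buzzword. The excitement, however, is supported by impressive theoretical and real-world results. For example, the error rates for the winners of the ImageNet challenge, a popular machine vision contest, were in the 20-30% range prior to the use of deep learning. Using deep learning, the accuracy of the winning algorithms has steadily improved, and in 2015 surpassed human performance.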

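Many of the papers, data sets, and software tools related to deep learning have been open sourced. This has had a democratizing effect, allowing individuals and small organizations to build powerful applications. WhatsApp was able to build a global messaging system that served 900M users with just 50 engineers, compared to the thousands of engineers that were needed for prior generations of messaging systems. This "WhatsApp effect" is now happening in AI. Software tools like Theano and TensorFlow, combined with cloud data centers for training and inexpensive GPUs for deployment, allow small teams of engineers to build state-of-the-art AI systems.

For example, here a solo programmer working on a side project used TensorFlow to colorize black-and-white photos: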

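(Left: black and white. Middle: automatically colorized. Right: true color. Source: http://tinyclouds.org/colorize/)

And here a small startup created a real-time object classifier:

Which, of course, is reminiscent of a famous scene from a sci-fi movie:

One of the first applications of deep learning released by a big tech company is the search function in Google Photos, which is shockingly smart.

We'll soon see significant upgrades to the intelligence of all sorts of products, including voice assistants, search engines, chat bots, 3D scanners, language translators, automobiles, drones, medical imaging systems, and much more.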

The business plans of the next 10,000 startups are easy to forecast: Take X and add AI. This is a big deal, and now it's here. - Kevin Kelly

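Startups building AI products will need to stay laser focused on specific applications to compete against the big tech companies that have made AI a top priority. AI systems get better as more data is collected, which means it's possible to create a virtuous flywheel of data network effects (more users → more data → better products → more users). The mapping startup Waze used data network effects to produce better maps than its vastly better capitalized competitors. Successful AI startups will follow a similar strategy.

Software + hardware: the new computers

There are a variety of new computing platforms currently in the gestation phase that will get much better over time, and possibly enter the growth phase, as they incorporate recent advances in hardware and software. Although they are designed and packaged very differently, they share a common theme: they give us new and augmented abilities by embedding a smart virtualization layer on top of the world. Here is a brief overview of some of the new platforms: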

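Cars. Big tech companies like Google, Apple, Uber, and Tesla are investing significant resources in autonomous cars. Semi-autonomous cars like the Tesla Model S are already publicly available and will improve quickly. Full autonomy will take longer but is probably not more than 5 years away. There already exist fully autonomous cars that are almost as good as human drivers. However, for cultural and regulatory reasons, fully autonomous cars will likely need to be significantly better than human drivers before they are widely permitted.

Expect to see a lot more investment in autonomous cars. In addition to the big tech companies, the big auto makers are starting to take autonomy very seriously. You'll even see some interesting products made by startups. Deep learning software tools have gotten so good that a solo programmer was able to make a semi-autonomous car: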

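Drones. Today's consumer drones contain modern hardware (mostly smartphone components plus mechanical parts), but relatively simple software. In the near future, we'll see drones that incorporate advanced computer vision and other AI to make them safer, easier to pilot, and more useful. Recreational videography will continue to be popular, but there will also be important commercial use cases. There are tens of millions of dangerous jobs that involve climbing buildings, towers, and other structures that can be performed much more safely and effectively using drones.

Internet of Things. The obvious use cases for IoT devices are energy savings, security, and convenience. Nest and Dropcam are popular examples of the first two categories. One of the most interesting products in the convenience category is Amazon's Echo.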

(Three main use cases for IoT)

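Most people think Echo is a gimmick until they try it, and then they are surprised at how useful it is. It's a great demo of how effective always-on voice can be as a user interface. It will be a while before we have bots with generalized intelligence that can carry on full conversations. But, as Echo shows, voice can succeed today in constrained contexts. Language understanding should improve quickly as recent breakthroughs in deep learning make their way into production devices.

IoT will also be adopted in business contexts. For example, devices with sensors and network connections are extremely useful for monitoring industrial equipment.

Wearables. Today's wearable computers are constrained along multiple dimensions, including battery, communications, and processing. The ones that have succeeded have focused on narrow applications like fitness monitoring. As hardware components continue to improve, wearables will support rich applications the way smartphones do, unlocking a wide range of new uses. As with IoT, voice will probably be the main user interface.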

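Virtual Reality. 2016 is an exciting year for VR: the launches of the Oculus Rift and HTC/Valve Vive (and, possibly, the Sony Playstation VR) mean that comfortable and immersive VR systems will finally be publicly available. VR systems need to be really good to avoid the "uncanny valley" trap. Proper VR requires special screens (high resolution, high refresh rate, low persistence), powerful graphics cards, and the ability to track the precise position of the user (previously released VR systems could only track the rotation of the user's head). This year, the public will for the first time get to experience what is known as "presence": when your senses are sufficiently tricked that you feel fully transported into the virtual world.

VR headsets will continue to improve and get more affordable. Major areas of research will include: 1) new tools for creating rendered and/or filmed VR content, 2) machine vision for tracking and scanning directly from phones and headsets, and 3) distributed back-end systems for hosting large virtual environments.

Augmented Reality. AR will likely arrive after VR because AR requires most of what VR requires plus additional new technologies. For example, AR requires advanced, low-latency machine vision in order to convincingly combine real and virtual objects in the same interactive scene.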

(Real and virtual combined (from *The Kingsmen*))

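That said, AR is probably coming sooner than you think. This demo video was shot directly through Magic Leap's AR device:

What's next?

It is possible that the pattern of 10-15 year computing cycles has ended and mobile is the final era. It is also possible that the next era won't arrive for a while, or that only a subset of the new computing categories discussed above will end up being important.

I tend to think we are on the cusp of not one but multiple new eras. The "peace dividend of the smartphone war" created a Cambrian explosion of new devices, and developments in software, especially AI, will make those devices smart and useful. Many of the futuristic technologies discussed above exist today, and will be broadly accessible in the near future.

Observers have noted that many of these new devices are in their "awkward adolescence." That is because they are in their gestation phase. Like PCs in the 70s, the internet in the 80s, and smartphones in the early 2000s, we are seeing pieces of a future that isn't quite here. But the future is coming: markets go up and down, and excitement ebbs and flows, but computing technology marches steadily forward.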